Video Transcript:

-If you want to build your own private ChatGPT service with enterprise-grade architecture, today I’ll show you your options and considerations for using OpenAI GPT models hosted in Azure.

-Including what you can do now to get up and running quickly using open source samples with a familiar experience that can be branded for your organization and used with just the model’s open world knowledge to generate responses. Followed by how you can go to the next level with Azure AI Studio to build copilot-style apps that let you bring in your own data for grounding the models to generate more relevant responses.

-And as you deploy them, leverage the Azure OpenAI Landing Zone’s reference architecture to run your AI apps securely at scale. In fact, there may be a few reasons why building your own private chat experience can benefit your organization.

-For example, starting with data protection, if you are not using a trusted service with security and privacy guarantees like Copilot for Microsoft 365 or others, or if you are using ones with fewer guarantees, information typed into prompts for additional context or summarization could be retained by the vendor for future model training. And that could mean that in the future, your competitors could gain access to your protected information.

-Of course, to avoid non-sanctioned services you might be tempted to block people in your organization from using these public services outright. That said, it doesn’t have to be that way. You can still give people what they want and retain control to meet your requirements by architecting and building your own private solutions the right way.

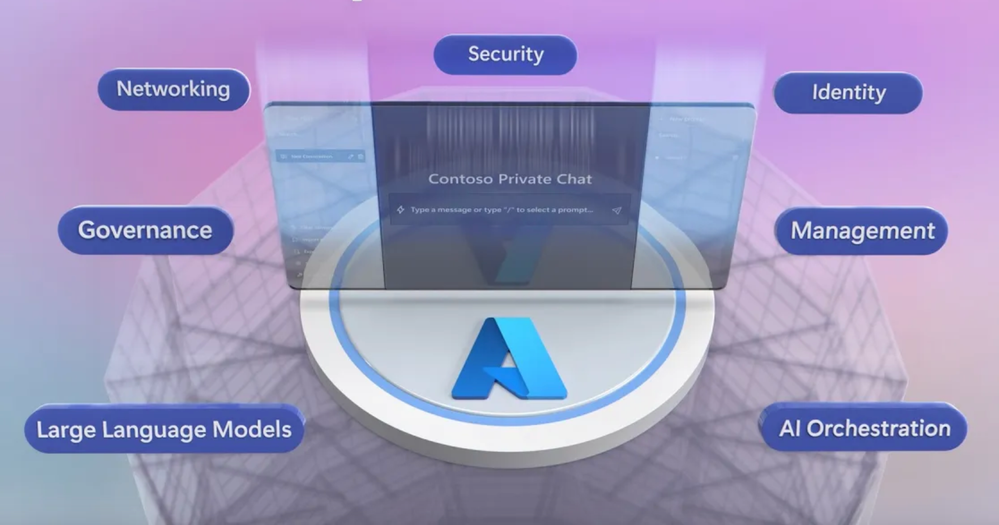

-And that’s where Azure can help. It brings together all of the pieces you need to architect a complete private ChatGPT-style app, with multiple options for your web front-end from Azure Container Apps to Azure Web Apps and more, as well as for your back-end data tier, whether structured or unstructured.

-And you can access private instances of GPT large language models hosted in the Azure OpenAI service, where your data will never be used to train them. There are optional AI services including Azure AI Search for retrieval-augmented generation. And of course, the new Azure AI Studio gives you the tools to customize and manage your AI apps at scale. So where do you start?

-Well, one of the fastest ways to get up and running is to use an open-source ChatGPT-style app sample. I’ll choose one of my favorites, with around 100 contributors, to build a proof of concept and show immediate progress, and this is just one of many samples submitted by the community that I can choose from.

-This app uses the services I mentioned previously as part of the architecture. And its user interface runs in Azure Container Apps, as a quick way to get up and running. It uses ChatBot UI open-source code in GitHub that you can find at aka.ms/GitHubChatBotUI. And its container design makes it well suited for proof-of-concept, low-administrative-effort experiences.

-Moving back to the interface, you’ll notice it looks quite familiar to anyone who’s worked with ChatGPT. This is a private ChatGPT-style app, running completely in my corporate domain within my Azure subscription that only Contoso employees can access. So let’s try it out. I’ll prompt it to “summarize this text using sections with bullet lists” from a product we’re working on at Contoso. And there it is. This looks pretty good.

-And again, because these services are running on your own private resources, you don’t need to worry about what your users paste into their prompts. Next with a bit of customization, you can connect these ChatGPT-style experiences safely up to your internal data or use plugins to connect it to APIs. In my case, I’ve connected our app to a few standard docs like this Health and Wellness Plan, so that our app can find information like this using basic orchestration with prompt flows.

-So, now I’ll move back to our app, and this time, I’ll submit a prompt for something that wouldn’t work with a public model using the doc I just showed. And let’s see if you were paying attention. So what is Contoso’s monthly stipend for purchasing healthy food? And immediately, you’ll see that it’s returning a personalized response using the information I gave it. Looks like it’s $100 a month. Which, if I switch back to my source doc, appears to be correct.

-The process essentially appends relevant information it can find using search to your prompts in order to generate an informed response. So, you don’t need to train or modify the large language model to get something like this to work. Then to build or customize these experiences, this is again where Azure AI Studio is a great place to extend what your app can do. So using the Azure AI Studio and its playground, you can further tune chat experiences.
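The grounding step described here, where information found by search is appended to the prompt, can be sketched in a few lines. This is a toy illustration only: it ranks passages by simple word overlap instead of calling Azure AI Search, and it stops at building the prompt rather than calling a model. The names `Passage`, `retrieve`, and `build_grounded_prompt`, the sample passage wording, and the `travel-policy` document are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # hypothetical document name
    text: str    # invented sample wording, not the real document


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list[Passage], top_k: int = 1) -> list[Passage]:
    """Rank passages by the number of words shared with the question.

    A stand-in for a real search service; passages with no overlap are dropped.
    """
    q_tokens = tokenize(question)
    scored = [(len(q_tokens & tokenize(p.text)), p) for p in passages]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [p for _, p in scored[:top_k]]


def build_grounded_prompt(question: str, passages: list[Passage], top_k: int = 1) -> str:
    """Append the retrieved passages to the user's question as context."""
    hits = retrieve(question, passages, top_k)
    context = "\n".join(f"[{p.source}] {p.text}" for p in hits)
    return (
        "Answer using only the sources below.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}"
    )


DOCS = [
    Passage("health-and-wellness-plan",
            "Contoso provides a monthly stipend of $100 for purchasing healthy food."),
    Passage("travel-policy",
            "Employees book travel through the internal portal."),
]

prompt = build_grounded_prompt(
    "What is Contoso's monthly stipend for purchasing healthy food?", DOCS
)
print(prompt)
```

The model itself is unchanged in this pattern; only the prompt it receives grows to include the retrieved text.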

-You can tie in enterprise data needed for retrieval-augmented generation, and then build out custom orchestration using prompt flow automation. You can even put the right content filters in place to ensure inputs and outputs are aligned with your communication standards.

-And much more, which you can see in detail on our recent show at aka.ms/AIStudioMechanics. Once you have your proof of concept and customizations in place, here’s where we can architect for security, reliability and scale. To fast track your efforts and remove the guesswork, this is where Azure Landing Zones gives you a tried and tested target architecture of the services you’ll want to use for an app like this.

-In fact, this is the new Azure OpenAI Landing Zone reference architecture, which you can find at aka.ms/OpenAILZ. This helps you preconfigure what you need for your AI app using Terraform configurations as part of your larger enterprise architecture. And it provides recommended services as part of an AI subscription. Let me break it down for you. It starts with Azure Front Door as a foundational service for ingress and egress.

-And you can add Azure API Management for additional traffic throttling options. Then, you can use these together with an Application Gateway, which includes Web App Firewall settings, to protect APIs from common web-based attacks. Then an Azure Web Apps instance, which you can use to host your app front end, or Container Apps like in our sample.
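To make the idea of traffic throttling concrete, here is a minimal sketch of a token-bucket rate limiter, one common way to cap request rates in front of an API. It is not how Azure API Management is implemented or configured; the `TokenBucket` class and its parameters are hypothetical, and the clock is passed in explicitly so the behavior is easy to follow.

```python
class TokenBucket:
    """Allow short bursts up to `capacity`, refilled at `refill_per_sec`."""

    def __init__(self, capacity: int, refill_per_sec: float) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.tokens = float(capacity)  # start with a full bucket
        self.last = 0.0                # time of the previous request, in seconds

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` may proceed."""
        elapsed = max(0.0, now - self.last)
        # Refill for the time that has passed, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0  # spend one token on this request
            return True
        return False            # caller would typically respond with HTTP 429


# Burst of two allowed, a third request is throttled, then a refill admits the next.
bucket = TokenBucket(capacity=2, refill_per_sec=1.0)
decisions = [bucket.allow(t) for t in (0.0, 0.1, 0.2, 1.5)]
print(decisions)
```

In a deployment like the one described, this kind of policy would be set in the gateway layer rather than written into the app, so the chat front end and the model endpoint stay unaware of it.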

-Next, the heart of the app will run on Azure AI services, which as mentioned includes Azure AI Search for retrieval-augmented generation. You can use an Azure Storage Account to store files for your app and more. The Azure OpenAI Service gives you secure access to GPT large language models. And Cosmos DB, which with its support for NoSQL and unlimited scale, is a recommended option if you want to store and maintain session state.

-It’s in fact the database used by ChatGPT itself. Secrets can be protected by Azure Key Vault, and connections are kept secure using Private Endpoint. Microsoft Entra services provide identity and access management for users, and Managed Identities let you securely connect to backend resources and manage authentication, so that you aren’t storing credentials within your app.

-Then for DNS, you can use Azure Private DNS Zones and its Resolver service for name resolution on your Virtual Network or VNet for secure internal and private cloud resource access. Importantly, because you’re using Azure Landing Zones, it has data and service isolation built-in, and you can easily scale application and platform resources independently as more people start to use your app. We explain all of this and more on our full show on the topic at aka.ms/LandingZoneMechanics.

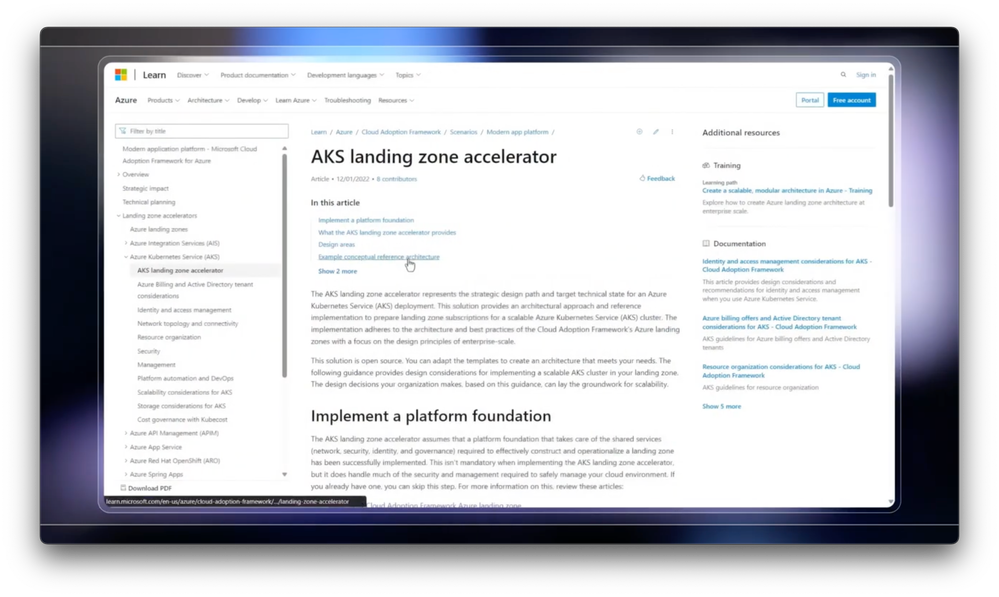

-To simplify the deployment of Azure Landing Zones, beyond Terraform options, we’ve automated the complete deployment using Bicep templates at the platform level that you can find at aka.ms/LZ. And once you have that set up, you can use Landing Zone Accelerators to build out the reference architectures for apps, like the one I showed for Azure OpenAI.

-This hydrates a dedicated Azure subscription per app with the recommended application-specific services. For something like our ChatGPT-style app, that means the configuration and security policies you assign are scoped and isolated to everything for that individual app within its own subscription.

-And you’ll use one of these as the landing zone type for your ChatGPT-style app. You can find more Landing Zone Accelerators at aka.ms/LZAccelerators. And so that’s what you can do now to build your own private ChatGPT-style apps and how to make them enterprise-ready using Azure Landing Zones.

-Again, to save time architecting the services for your app, you can get to the OpenAI Landing Zone at aka.ms/OpenAILZ. Of course, keep watching Microsoft Mechanics for the latest updates, and thanks for watching.