Getting started with Microsoft Phi-3-mini - Try running the Phi-3-mini on iPhone with ONNX Runtime

Microsoft, Google, and Apple have each released small language models (SLMs) adapted to edge devices: Microsoft Phi-3-mini, Google Gemma, and Apple OpenELM. Developers deploy SLMs offline on Nvidia Jetson Orin, Raspberry Pi, and AI PCs, which gives generative AI more application scenarios. The previous article covered several ways to deploy applications, so how do we deploy SLM applications to mobile devices?

This article is a preliminary exploration on iPhone. Microsoft has published Phi-3-mini on Hugging Face in three formats, of which GGUF and ONNX are quantized. We can pick a quantized model to match the hardware we have. So let's get started and explore the quantized Phi-3-mini model in ONNX format. If you prefer the GGUF format, the LLM Farm app is recommended.

Generative AI with ONNX Runtime

In the era of AI, model portability is very important. ONNX Runtime makes it easy to deploy trained models to different devices: developers use one unified API for inference and do not need to worry about the underlying inference framework. For generative AI, ONNX Runtime also provides optimized code (https://onnxruntime.ai/docs/genai/), so quantized generative AI models can run inference on different devices. Generative AI with ONNX Runtime exposes its inference API in Python, C#, and C/C++. On iPhone, we can use the C++ API of Generative AI with ONNX Runtime.

Steps

A. Preparation

- macOS 14+
- Xcode 15+
- iOS SDK 17.x
- Install Python 3.10+ (Conda is recommended)
- Install the Python library python-flatbuffers
- Install CMake

B. Compiling ONNX Runtime for iOS

git clone https://github.com/microsoft/onnxruntime.git

cd onnxruntime

./build.sh --build_shared_lib --ios --skip_tests --parallel --build_dir ./build_ios --apple_sysroot iphoneos --osx_arch arm64 --apple_deploy_target 17.4 --cmake_generator Xcode --config Release

Notice

- Before compiling, make sure Xcode is configured correctly and selected as the active developer directory in the terminal:

sudo xcode-select --switch /Applications/Xcode.app/Contents/Developer

- ONNX Runtime must be compiled separately for each target platform. For iOS, you can build for arm64 or x86_64.

- It is recommended to compile directly with the latest iOS SDK. You can also lower the version to stay compatible with earlier SDKs.

C. Compiling Generative AI with ONNX Runtime for iOS

Note: Generative AI with ONNX Runtime is in preview, so the steps below may change. Check the repository for updates.

git clone https://github.com/microsoft/onnxruntime-genai

cd onnxruntime-genai

git checkout yguo/ios-build-genai

mkdir ort

cd ort

mkdir include

mkdir lib

cd ../

cp ../onnxruntime/include/onnxruntime/core/session/onnxruntime_c_api.h ort/include

cp ../onnxruntime/build_ios/Release/Release-iphoneos/libonnxruntime*.dylib* ort/lib

python3 build.py --parallel --build_dir ./build_ios_simulator --ios --ios_sysroot iphoneos --osx_arch arm64 --apple_deployment_target 17.4 --cmake_generator Xcode

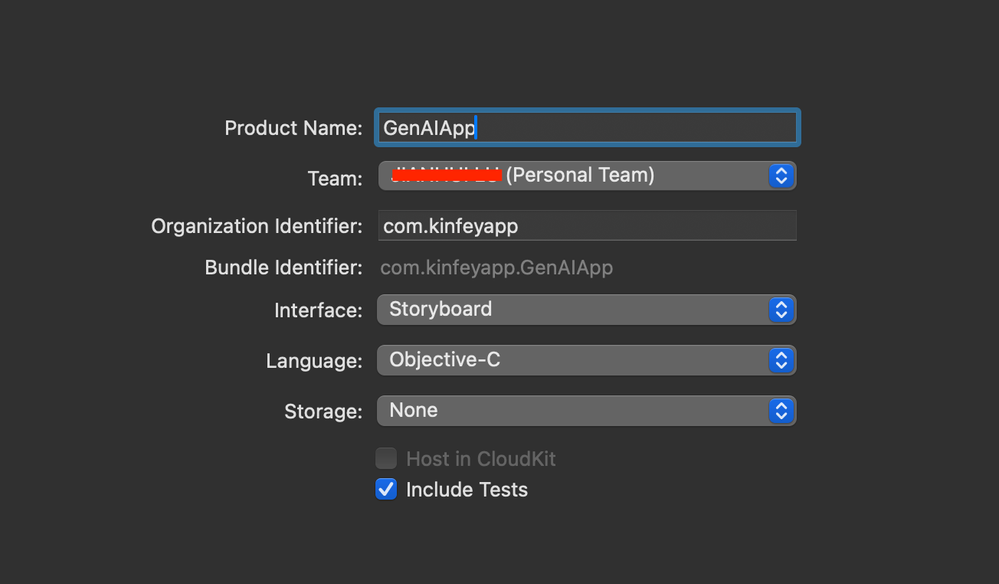

D. Create an App in Xcode

I chose Objective-C to develop the app because it is more compatible with the Generative AI with ONNX Runtime C++ API. You can also make the same calls from Swift through bridging.

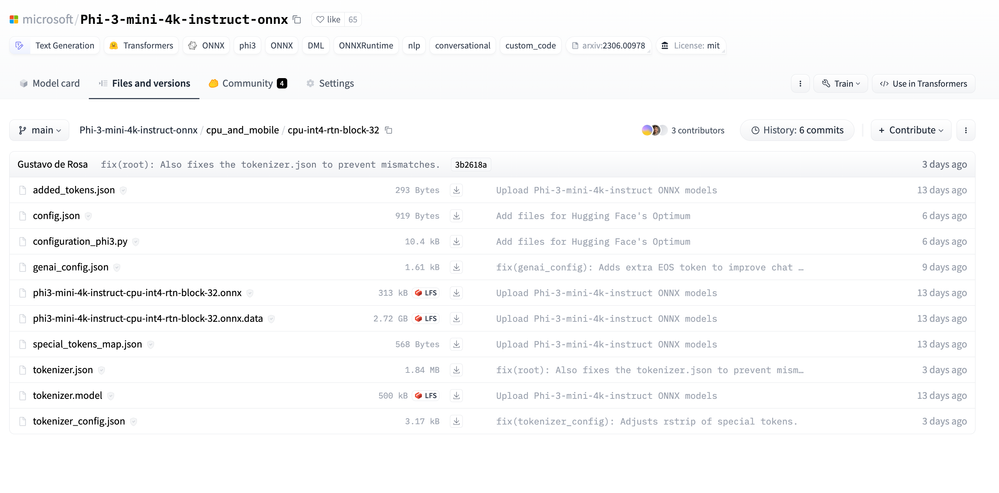

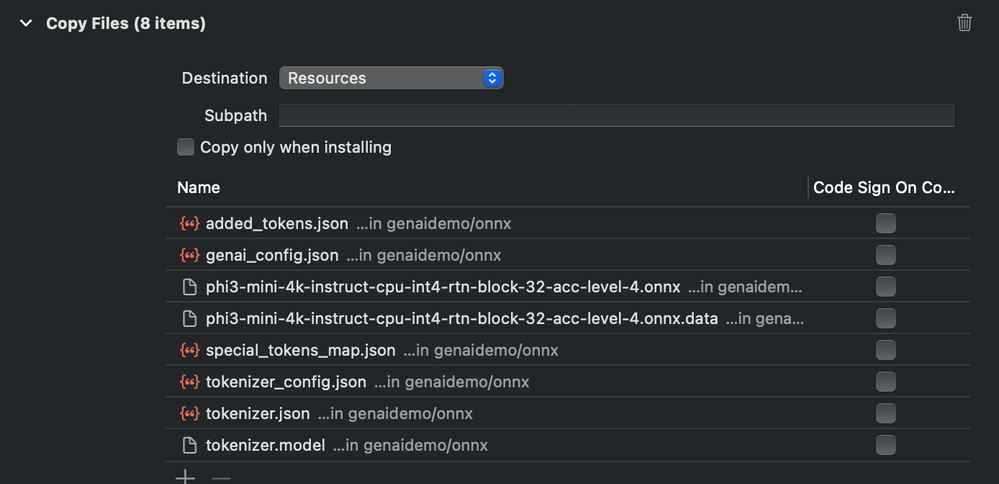

E. Copy the INT4 quantized ONNX model to the App project

We need the INT4 quantized model in ONNX format, so download it first.

After downloading, add it to the Resources directory of the project in Xcode.

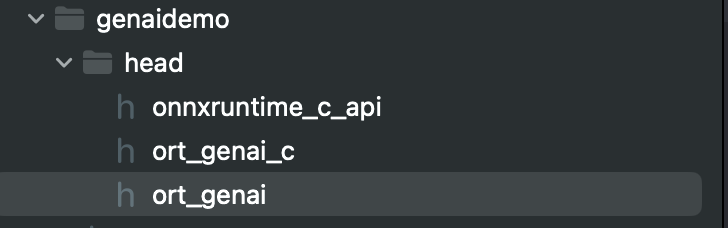

F. Add the C++ API in ViewControllers

Notice:

- Add the corresponding C++ header file to the project

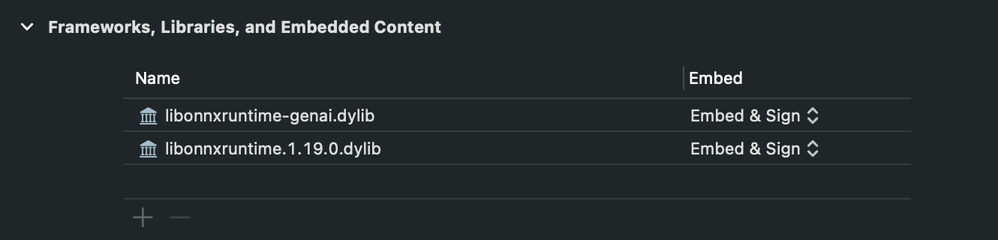

- add onnxruntime-genai.dylib in Xcode

-

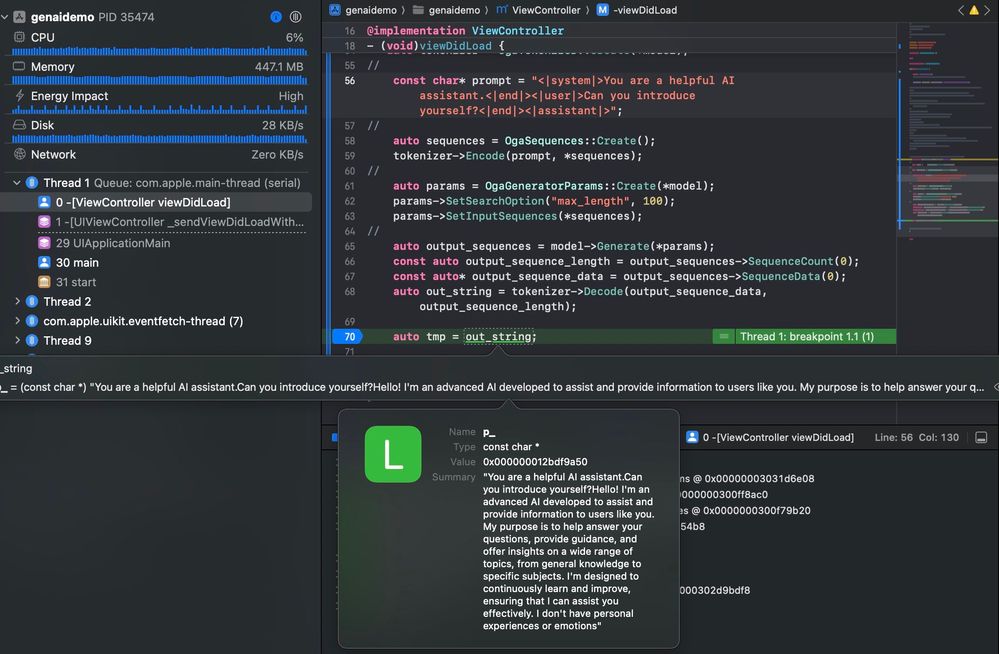

Directly use the code on C Samples for testing in this samples. You can also directly add moreto run(such as ChatUI)

-

Because you need to call C++, please change ViewController.m to ViewController.mm

NSString *llmPath = [[NSBundle mainBundle] resourcePath];

const char *modelPath = llmPath.UTF8String;

auto model = OgaModel::Create(modelPath);

auto tokenizer = OgaTokenizer::Create(*model);

const char* prompt = "<|system|>You are a helpful AI assistant.<|end|><|user|>Can you introduce yourself?<|end|><|assistant|>";

auto sequences = OgaSequences::Create();

tokenizer->Encode(prompt, *sequences);

auto params = OgaGeneratorParams::Create(*model);

params->SetSearchOption("max_length", 100);

params->SetInputSequences(*sequences);

auto output_sequences = model->Generate(*params);

const auto output_sequence_length = output_sequences->SequenceCount(0);

const auto* output_sequence_data = output_sequences->SequenceData(0);

auto out_string = tokenizer->Decode(output_sequence_data, output_sequence_length);

// Print the decoded text to the Xcode console.
NSLog(@"%s", static_cast<const char *>(out_string));
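The prompt in the sample is a hard-coded string with the `<|system|>`, `<|user|>`, `<|end|>`, and `<|assistant|>` tags. A small helper can assemble the same layout from separate messages. This is only a sketch: `BuildPhi3Prompt` is a hypothetical name, and it reproduces the tag layout exactly as it appears in the sample string above rather than any official template.

```cpp
#include <string>

// Hypothetical helper: builds a single-turn prompt in the tag layout used
// by the sample string above:
//   <|system|>...<|end|><|user|>...<|end|><|assistant|>
// The system part is skipped when `system_message` is empty.
std::string BuildPhi3Prompt(const std::string& system_message,
                            const std::string& user_message) {
  std::string prompt;
  if (!system_message.empty()) {
    prompt += "<|system|>" + system_message + "<|end|>";
  }
  prompt += "<|user|>" + user_message + "<|end|>";
  prompt += "<|assistant|>";  // leave the assistant turn open for generation
  return prompt;
}
```

The result can be passed to the tokenizer in place of the literal, for example `tokenizer->Encode(prompt.c_str(), *sequences);` where `prompt` is the returned `std::string`.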

G. Check the running results

Sample code: https://github.com/Azure-Samples/Phi-3MiniSamples/tree/main/ios

Summary

These are very preliminary results. I am using an iPhone 12, so inference is relatively slow, and CPU usage reaches 130% during inference. It would be better if Apple's MLX framework could assist with inference on iOS, so what I look forward to most in this project is hardware acceleration for iOS in Generative AI with ONNX Runtime. You can also test on a newer iPhone.

This is just a preliminary exploration, but it is a good start. I look forward to the improvement of Generative AI with ONNX Runtime.

Resources

- LLMFarm's GitHub Repo https://github.com/guinmoon/LLMFarm
- Phi3-mini Microsoft Blog https://aka.ms/phi3blog-april
- Phi-3 technical report https://aka.ms/phi3-tech-report
- Getting started with Phi3 https://aka.ms/phi3gettingstarted
- Learn about ONNX Runtime https://github.com/microsoft/onnxruntime
- Learn about Generative AI with ONNX Runtime https://github.com/microsoft/onnxruntime-genai
