Building your own copilot – yes, but how? (Part 2 of 2)

By copilot we mean a virtual assistant hosted in the cloud that uses an LLM as its chat engine, is fed with business data and custom prompts, and is optionally integrated with third-party services and plugins.

When it comes to copilots, Microsoft is not just a service provider. Of course, we can use built-in copilots like Copilot for M365, Copilot in Bing or GitHub Copilot. But we can also build our own copilot on the same infrastructure that Microsoft Copilots are based on: Azure AI.

In the first post of this series, we covered how to build a copilot on custom data using low-code tools and Azure out-of-the-box features. In this post we’ll focus on developer tools and the code-first experience.

LLMOps and the development life-cycle of generative AI applications

With the advent of Large Language Models (LLMs), the world of Natural Language Processing (NLP) has witnessed a paradigm shift in the way we develop AI apps. In classical Machine Learning (ML), we trained models on custom data with specific statistical algorithms to predict pre-defined outcomes. In modern AI apps, by contrast, we pick an LLM pre-trained on a massive and varied volume of public data, and we augment it with custom data and prompts to get non-deterministic outcomes. This affects not only how we build modern AI apps, but also how we evaluate, deploy and monitor them, that is, the whole development life cycle. The result is LLMOps, which is MLOps applied to LLMs.

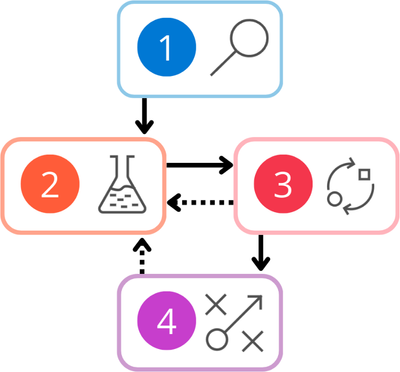

As we dive into building a copilot application, it’s important to understand its whole life cycle, which consists of four stages.

- Initialization: defining the business use case and designing the solution.

- Experimentation: building a solution and testing it with a small dataset.

- Evaluation and refinement: assessing the solution with a larger dataset, evaluating it against metrics like groundedness, coherence and relevance.

- Production: deploying and monitoring the final application.

This is an iterative process: during both the evaluation and the production stage, we might find that our solution needs to be improved. We can then go back to experimentation, apply changes to the LLM, the dataset or the flow, and evaluate the solution again.

Prompt Flow

Prompt Flow is a developer tool within the Azure AI platform, designed to help us orchestrate the whole AI app development life cycle described above. With Prompt Flow, we can create intelligent apps by developing executable flow diagrams that include connections to data, models and custom functions, and we can then evaluate and deploy those apps. Prompt Flow is accessible in Azure Machine Learning studio and Azure AI Studio, and it is also available as a Visual Studio Code extension that provides a code-first experience alongside the existing design surface.

A flow typically consists of three parts:

- Inputs: Data passed as input into the flow.

- Nodes: Tools that perform data processing, task execution, or algorithmic operations. A node can use one of the whole flow's inputs, or another node's output.

- Outputs: Data produced by the flow.
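To make the inputs/nodes/outputs structure concrete, here is a minimal plain-Python sketch of a flow runner. It is not the Prompt Flow API: `run_flow`, the `"inputs.<name>"` and `"<node>.output"` reference strings, and the two toy nodes are all hypothetical names chosen for illustration, and the sketch assumes nodes are listed in dependency order rather than resolving the graph itself.

```python
from typing import Any, Callable

# A node is a name, a function, and a mapping from the function's parameters
# to references. A reference is either "inputs.<name>" (a flow input) or
# "<node>.output" (the value returned by an earlier node).
Node = tuple[str, Callable[..., Any], dict[str, str]]


def run_flow(inputs: dict[str, Any], nodes: list[Node], outputs: dict[str, str]) -> dict[str, Any]:
    """Run nodes in the listed order, then resolve the declared flow outputs."""
    results: dict[str, Any] = {}

    def resolve(ref: str) -> Any:
        source, _, field = ref.partition(".")
        if source == "inputs":
            return inputs[field]
        return results[source]  # "<node>.output": whatever that node returned

    for name, func, bindings in nodes:
        kwargs = {param: resolve(ref) for param, ref in bindings.items()}
        results[name] = func(**kwargs)

    return {name: resolve(ref) for name, ref in outputs.items()}


def normalize(text: str) -> str:
    """Toy node: collapse whitespace and lowercase the text."""
    return " ".join(text.split()).lower()


def count_words(text: str) -> int:
    """Toy node: count whitespace-separated words."""
    return len(text.split())


flow_nodes: list[Node] = [
    ("normalize", normalize, {"text": "inputs.question"}),       # uses a flow input
    ("count_words", count_words, {"text": "normalize.output"}),  # uses another node's output
]

result = run_flow(
    inputs={"question": "  What is   Prompt Flow? "},
    nodes=flow_nodes,
    outputs={"clean_question": "normalize.output", "word_count": "count_words.output"},
)
print(result)  # {'clean_question': 'what is prompt flow?', 'word_count': 4}
```

The second node shows the point made in the list above: a node's parameter can be bound either to a flow input or to the output of another node.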

There are different kinds of flows, but for building a copilot app the appropriate type is the chat flow, which supports chat-related functionality.

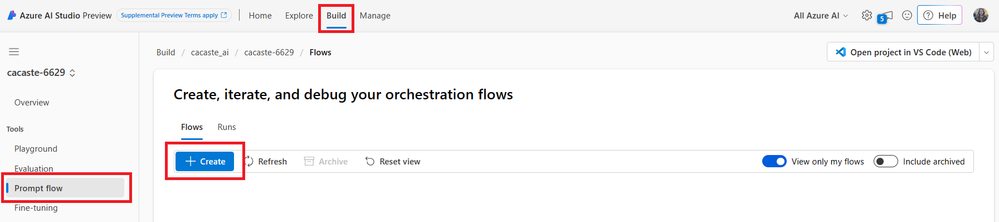

To get started, we create a new Azure AI project in Azure AI Studio, navigate to Build -> Tools -> Prompt Flow and then create a new chat flow by clicking the +Create button.

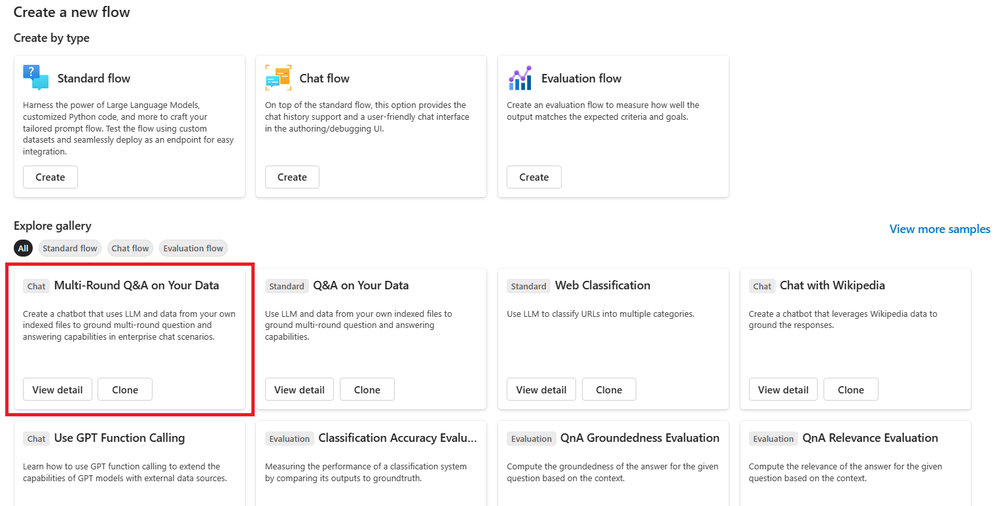

We can also use one of the existing templates as a starting point for our application. For the copilot scenario based on the RAG pattern, we can clone the Multi-round Q&A on your data sample.

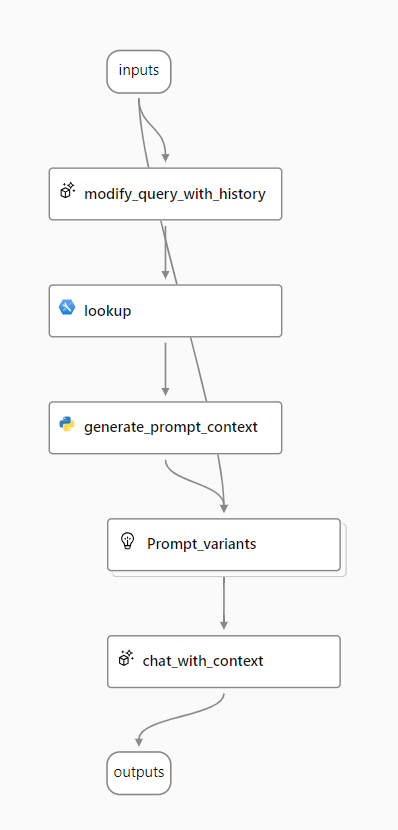

The sample includes 5 nodes:

- Modify_query_history: uses the prompt tool to combine the chat history with the query input into a standalone, contextualized question.

- Lookup: uses the index lookup tool to extract relevant information from the provided search index.

- Generate_prompt_context: uses the Python tool to format the output of the lookup node as a list of strings, each combining the content and the source of a retrieved item.

- Prompt_variants: defines 3 variants of the prompt to the LLM, combining context and chat history with 3 different versions of the system message. Using variants is helpful to test and compare the performance of different prompt content in the same flow.

- Chat_with_context: uses the LLM tool to send the prompt built in the previous node to a language model to generate a response using the relevant context retrieved from your data source.
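The data flow between these five nodes can be sketched in plain Python. This is an illustration only, not the sample's actual source: the in-memory `INDEX`, the variant texts, and every function body are assumptions, and both the history rewrite and the model call are replaced by deterministic stand-ins so that the sketch runs without any Azure resources.

```python
import re
from dataclasses import dataclass


@dataclass
class Doc:
    content: str
    source: str


# Hypothetical in-memory stand-in for the search index behind the lookup node.
INDEX = [
    Doc("Prompt Flow orchestrates LLM app development.", "promptflow.md"),
    Doc("Azure AI Search indexes documents for retrieval.", "search.md"),
]

# Three system-message variants, mirroring the idea of the Prompt_variants node.
SYSTEM_VARIANTS = {
    "variant_0": "Answer using only the context below.",
    "variant_1": "Answer briefly and cite the source file for each claim.",
    "variant_2": "If the context is insufficient, say that you do not know.",
}


def tokens(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def modify_query_with_history(question: str, history: list[tuple[str, str]]) -> str:
    """Stand-in for the rewrite step: prepend earlier user turns to the question."""
    previous = " ".join(user for user, _ in history)
    return f"{previous} {question}".strip()


def lookup(query: str, top_k: int = 1) -> list[Doc]:
    """Naive keyword-overlap ranking; a real flow would query a search index."""
    ranked = sorted(INDEX, key=lambda d: len(tokens(query) & tokens(d.content)), reverse=True)
    return ranked[:top_k]


def generate_prompt_context(docs: list[Doc]) -> list[str]:
    """Format each retrieved item as one string combining content and source."""
    return [f"Content: {d.content}\nSource: {d.source}" for d in docs]


def build_prompt(variant: str, context: list[str], history: list[tuple[str, str]], question: str) -> str:
    """Combine one system-message variant with the context and the chat history."""
    turns = "\n".join(f"user: {u}\nassistant: {a}" for u, a in history)
    joined_context = "\n\n".join(context)
    return f"system: {SYSTEM_VARIANTS[variant]}\n\n{joined_context}\n\n{turns}\nuser: {question}"


def chat_with_context(prompt: str) -> str:
    """Placeholder for the LLM call; no model is contacted in this sketch."""
    return f"[stubbed model reply to a {len(prompt)}-character prompt]"


history = [("Tell me about Prompt Flow.", "It is a developer tool in Azure AI.")]
question = "What does it orchestrate?"

query = modify_query_with_history(question, history)
context = generate_prompt_context(lookup(query))
for name in SYSTEM_VARIANTS:
    prompt = build_prompt(name, context, history, question)
    print(name, "->", chat_with_context(prompt))
```

Running the same question through each variant, as the loop does, is the comparison that variants are meant to support: only the system message changes, while the retrieved context and the history stay fixed.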

The visual flow is described in a flow.dag.yaml file, which can be inspected and modified at any time. This file references other source files, such as Jinja templates that craft the prompts and Python source files that define custom functions.

After configuring the sample chat flow to use our indexed data and the language model of our choice, we can use built-in functionalities to evaluate and deploy the flow. The resulting endpoint can then be integrated with an application to offer users the copilot experience.

Also, through the Python tool, Prompt Flow provides the flexibility to extend our solution using AI orchestrator frameworks like Semantic Kernel and LangChain.

Custom solutions

Curated approaches make it simple to get started, but for more control over the architecture, we might need to build a custom solution for specific scenarios.

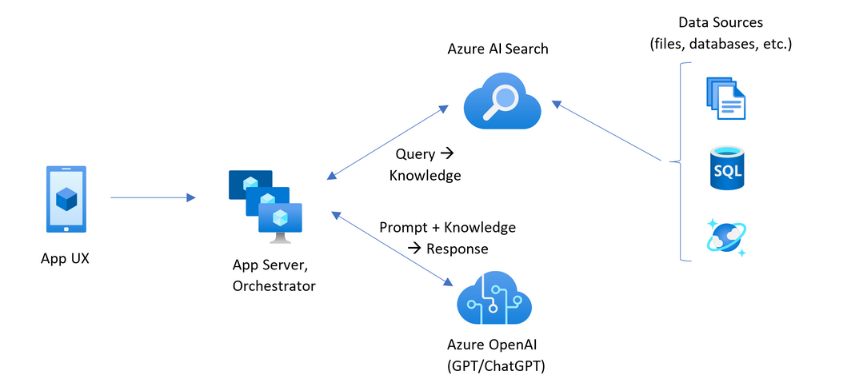

A custom RAG pattern within the Azure ecosystem might look like this.

The solution, hosted in the cloud as a Web App, consists of two components:

- A graphical interface, the app UX, that lets the user chat with the copilot assistant in natural language.

- The app backend, acting as an orchestrator that coordinates all the other services in the architecture:

  - Azure AI Search, which indexes the documents (pulled from Cosmos DB, a SQL database or other sources) to optimize search across them.

  - Azure OpenAI Service, which provides keywords to enhance the search over the data, natural-language answers for the end user, and embeddings from the ada model if vectorized search is enabled.
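The orchestrator role of the backend can be sketched as a small class that depends only on two narrow interfaces. Everything here is hypothetical: `SearchClient`, `ChatModel`, `Orchestrator` and the fake implementations are illustrative names, not Azure SDK types, and the optional embedding step for vectorized search is left out. In a real backend, the two interfaces would be implemented by thin wrappers around the Azure AI Search and Azure OpenAI clients.

```python
from typing import Protocol


class SearchClient(Protocol):
    def search(self, keywords: str, top: int) -> list[dict[str, str]]: ...


class ChatModel(Protocol):
    def complete(self, system: str, user: str) -> str: ...


class Orchestrator:
    """Backend flow: question -> search keywords -> retrieved passages -> grounded answer."""

    def __init__(self, search: SearchClient, model: ChatModel, top: int = 3) -> None:
        self.search = search
        self.model = model
        self.top = top

    def answer(self, question: str) -> dict[str, object]:
        # 1. Ask the model for keywords that improve the search over the data.
        keywords = self.model.complete("Extract search keywords from the question.", question)
        # 2. Retrieve the most relevant passages from the index.
        hits = self.search.search(keywords, self.top)
        # 3. Ask the model for a natural-language answer grounded in those passages.
        sources = "\n".join(f"[{h['id']}] {h['content']}" for h in hits)
        reply = self.model.complete(f"Answer using only these sources:\n{sources}", question)
        return {"answer": reply, "citations": [h["id"] for h in hits]}


class FakeSearch:
    """In-memory substitute for a search index (substring matching only)."""

    def __init__(self, docs: list[dict[str, str]]) -> None:
        self.docs = docs

    def search(self, keywords: str, top: int) -> list[dict[str, str]]:
        terms = keywords.lower().split()
        hits = [d for d in self.docs if any(t in d["content"].lower() for t in terms)]
        return hits[:top]


class FakeModel:
    """Deterministic substitute for a chat model, so the sketch runs offline."""

    def complete(self, system: str, user: str) -> str:
        if system.startswith("Extract"):
            # Crude keyword extraction: keep words longer than four characters.
            return " ".join(w.strip("?.,!") for w in user.split() if len(w) > 4)
        return f"(stub answer based on {system.count('[')} source(s))"


docs = [
    {"id": "doc1", "content": "Azure AI Search indexes documents for retrieval."},
    {"id": "doc2", "content": "Azure OpenAI Service generates answers and embeddings."},
]
backend = Orchestrator(FakeSearch(docs), FakeModel())
result = backend.answer("What indexes documents?")
print(result)
```

Keeping the orchestrator behind two small interfaces is one possible design choice: it lets the retrieval and generation services be swapped or mocked in tests without touching the coordination logic.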

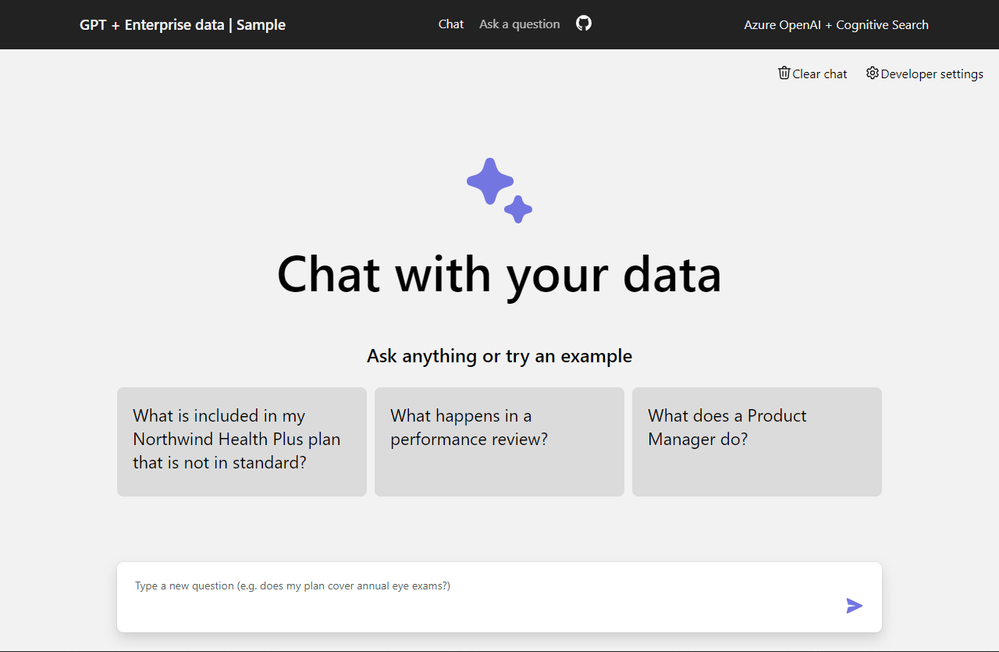

Building a custom solution gives us maximum flexibility in the language and framework we use and in the services we integrate. On the other hand, starting a custom solution from scratch might be intimidating. The Microsoft enterprise chat app open-source samples, available in different programming languages, mitigate this challenge by offering a good starting point for an operational chat app with a basic UI.

Summary

In this blog series (read part 1) we have presented a few options to implement a copilot solution based on the RAG pattern with Microsoft technologies. Let’s now see them all together and make a comparison.

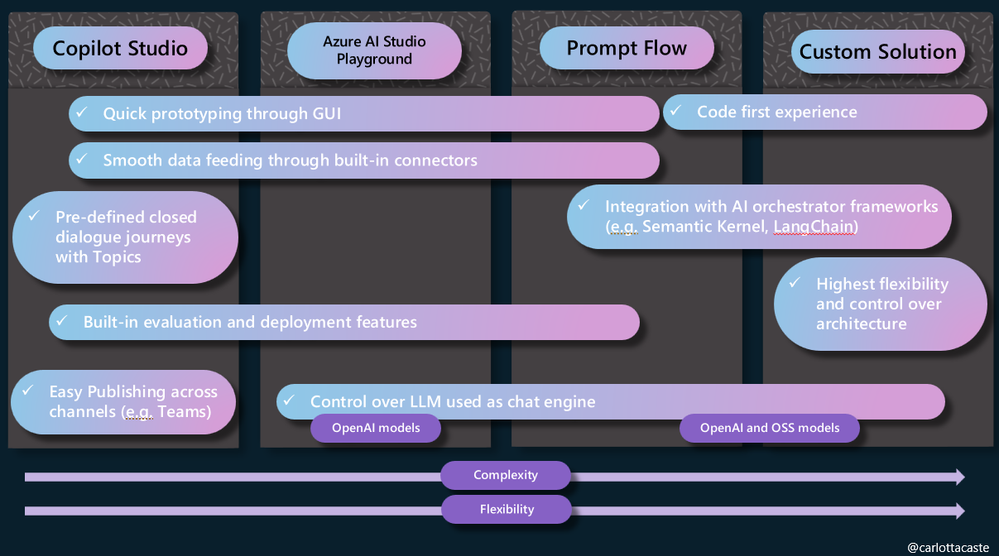

To summarize:

- Microsoft Copilot Studio is a great option for low-code developers who wish to pre-define some closed dialogue journeys for frequently asked questions and then use generative answers as a fallback. It should be the first choice for customers familiar with the Power Platform suite, and it lets them publish a quick prototype on pre-defined channels (Teams, Facebook or Slack) in minutes and with no code.

- Azure AI Studio Playground with the Azure OpenAI on your data feature should be the first option to consider for developers who need an end-to-end solution for Azure OpenAI Service with an Azure AI Search retriever, leveraging built-in connectors. Like Copilot Studio, it enables quick prototyping through a GUI, but it also lets us choose the LLM used as the chat engine (within the OpenAI family).

- When developers need more control over the processes involved in the development cycle of LLM-based AI applications, they should use Prompt Flow to create executable flows and evaluate performance through large-scale testing. Prompt Flow also allows the integration of custom Python code and several LLMs (both OpenAI and open-source models) in the same solution. It is available both as a low-code experience (through Azure AI Studio and Azure ML Studio) and as a code-first experience (as a VS Code extension).

- To get the highest level of flexibility and control over the solution architecture, developers might need to build custom solutions. This option also carries the highest level of complexity.

Useful resources

Building a copilot with Prompt Flow:

- Complete this Microsoft Learn Path -> Create custom copilots with Azure AI Studio (preview) - Training | Microsoft Learn

- Join this Learn Live session -> Developing a production level RAG workflow

Implementing RAG pattern in a custom solution:

- Explore Azure samples -> Microsoft enterprise chat app open-source samples

- Join this Learn Live session -> Create an Azure AI Search solution
