Effective novelty detection in cloud security domain

Introduction

In the cloud security domain, we often need to monitor entities (such as users, IP addresses, applications, or access tokens) and their patterns of behavior. We might want to detect ‘novelties’, that is, unexpected and previously unseen values of these entities, which can indicate security issues. Some examples of such scenarios are:

- An IP address belonging to a previously unseen ASN range accesses cloud storage.

- A previously unseen application logs in to a SQL database.

- A new user logs in to an administration portal.

Novelty might indicate a security incident, in which case an alert should be sent to the resource owner or security officer for investigation. However, it might also be benign, such as a new employee joining or a new device being enrolled. Thus, besides detecting novelty, we also need an anomaly model that estimates how unusual the novelty is.

When the monitored entity has low cardinality (meaning the number of possible values is relatively small), detecting novelty is quite straightforward. For example, let’s say we monitor users accessing a cloud resource and want to trigger a signal when a new user appears. This can be achieved by saving the list of active users per meaningful batch (e.g., an hour or a day). When a new batch arrives, we check for each of the appearing usernames in how many previous batches it appeared.

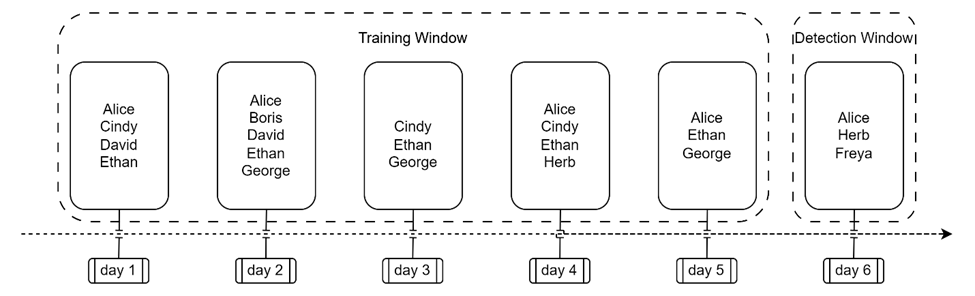

Figure 1: Novelty detection for low-cardinality entities

In Figure 1, we can see the lists of users accessing a cloud resource during 5 training days. On day 6 a new batch of users appears. We can see that Alice is a commonly seen user, Herb is known but rare (and thus is somewhat anomalous), while Freya is seen for the first time – and might require further investigation.
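The check described above can be sketched in a few lines of Python. This is only an illustration: the batch contents are hypothetical (they loosely mirror the names in Figure 1, not its exact data), and `count_previous_batches` is a helper name invented for this sketch.

```python
from typing import Dict, Iterable, List, Set


def count_previous_batches(
    training_batches: List[Set[str]], new_batch: Iterable[str]
) -> Dict[str, int]:
    """For each name in the new batch, count the training batches containing it."""
    return {
        name: sum(name in batch for batch in training_batches)
        for name in new_batch
    }


# Hypothetical training data: one set of usernames per day.
training_batches = [
    {"Alice", "Boris", "Ethan"},
    {"Alice", "Ethan"},
    {"Alice", "Herb"},
    {"Alice", "Ethan", "George"},
    {"Alice", "George"},
]

counts = count_previous_batches(training_batches, ["Alice", "Herb", "Freya"])
for name, seen in counts.items():
    label = "novelty" if seen == 0 else "known"
    print(f"{name}: seen in {seen} of {len(training_batches)} batches ({label})")
```

A count of zero marks a novelty, while a low non-zero count marks a known but rare entity.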

In real-life situations we deal with a larger number of batches, in which case we can improve training efficiency by aggregating the training batches iteratively.

Figure 2: Iterative mode

As can be seen in Figure 2, a table aggregated by name is easier to maintain and process: we can see that Freya doesn’t appear in the training data, so her appearance is a novelty. It is also easy to see that Boris and Herb each appear on only a single day, so they might be somewhat anomalous.

We can extend this notion and define the degree of ‘anomaly’ of an entity as a function of the number of batches it appears in – perhaps with some normalization.
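As a rough sketch of this idea, the aggregated table can be kept as a counter that is updated one batch at a time. The class name `BatchCounter`, the sample batches, and the particular normalization (one minus the fraction of batches in which the entity appeared) are assumptions made for illustration; other normalizations are equally possible.

```python
from collections import Counter
from typing import Iterable


class BatchCounter:
    """Keeps, per entity, the number of batches in which it appeared."""

    def __init__(self) -> None:
        self.appearances: Counter = Counter()
        self.num_batches = 0

    def update(self, batch: Iterable[str]) -> None:
        # Deduplicate so that an entity counts at most once per batch.
        self.appearances.update(set(batch))
        self.num_batches += 1

    def anomaly_score(self, entity: str) -> float:
        """1.0 for a never-seen entity, 0.0 for one seen in every batch."""
        if self.num_batches == 0:
            return 1.0
        return 1.0 - self.appearances[entity] / self.num_batches


# Hypothetical daily batches of usernames.
days = [
    ["Alice", "Boris"],
    ["Alice", "Ethan"],
    ["Alice", "Herb", "Ethan"],
    ["Alice", "Alice"],
]

counter = BatchCounter()
for batch in days:
    counter.update(batch)

for name in ("Alice", "Herb", "Freya"):
    print(name, counter.anomaly_score(name))
```

Only the aggregated counts are retained, so older batches can be discarded once they have been folded into the counter.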

An anomaly model for novelty can also be added to list data by modeling the rate at which new entities appear per batch. If the rate is low, then the appearance of a novelty is anomalous, and thus might be suspicious.

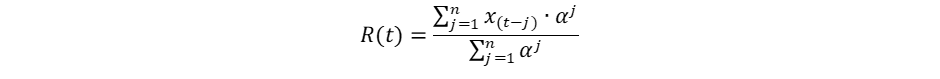

For example, if x(i) is the number of new entities appearing in batch i and a is the decay rate parameter, the appearance rate estimate R(t) for batch t based on a window of size n can be calculated as:

Setting a=1 results in a simple average; lower values of a result in faster decay, so that batches further away have a smaller effect. A high value of R(t) means that new values tend to appear more frequently, so a novelty is less anomalous.
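One estimator with the properties described here is an exponentially weighted average of the previous n batches:

$$R(t) = \frac{\sum_{i=1}^{n} a^{\,i-1}\, x(t-i)}{\sum_{i=1}^{n} a^{\,i-1}}$$

This particular form is an assumption chosen for illustration and is not necessarily the exact definition intended above; it reduces to a simple average for a=1 and gives distant batches less weight for smaller a. A minimal Python sketch, with the hypothetical function name `appearance_rate` and made-up counts:

```python
from typing import Sequence


def appearance_rate(x: Sequence[float], t: int, n: int, a: float) -> float:
    """Decay-weighted average of new-entity counts over the n batches before t.

    x[i] is the number of new entities in batch i; the batch closest to t
    gets weight 1, the one before it weight a, then a**2, and so on.
    """
    if not 0 < a <= 1:
        raise ValueError("a must be in (0, 1]")
    window = range(max(0, t - n), t)  # indexes of up to n previous batches
    weights = [a ** (t - 1 - i) for i in window]
    if not weights:
        return 0.0
    return sum(w * x[i] for w, i in zip(weights, window)) / sum(weights)


# Hypothetical numbers of new entities in batches 0..4.
new_counts = [4, 0, 2, 0, 6]
print(appearance_rate(new_counts, t=5, n=5, a=1.0))  # simple average
print(appearance_rate(new_counts, t=5, n=5, a=0.5))  # recent batches dominate
```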

Problem

Entities commonly appearing in the cloud can have very high cardinalities. For example, there are more than 4 billion IP addresses that can potentially reach a resource and the number of usernames or access tokens is theoretically uncapped. Monitoring entities with high cardinalities is technically problematic: retaining long lists requires large amounts of storage, and checking these lists for existing values is inefficient.

In some cases, this can be remediated by aggregating entities into meaningful higher-level families. For example, instead of monitoring exact IP addresses, we can use IP ranges or ASNs. The problem then reduces to the low-cardinality scenario discussed above.

However, this is not always desirable or possible. We might want to monitor exact IP addresses (to cover cases of internal malicious agents); aggregating usernames can be problematic as well, since no meaningful ‘families’ of users are defined.

Bloom filters: basic properties

In such cases, probabilistic data structures, and Bloom filters in particular, can be helpful. A probabilistic approach allows us to build an efficient platform that provides flexible operations covering multiple security scenarios, at the cost of a small and controlled loss of accuracy. In the next paragraphs we explain how to implement Bloom filters and benefit from their advantages in the cloud security domain.

Let’s start with a brief introduction of Bloom filters. Since general explanations are readily available, we’ll present a mock sample that is not a true implementation – but we hope will be useful in illustrating Bloom filter logic and qualities.

We will continue the previous scenario of checking whether usernames appearing in a new batch appeared in previous batches.

Let’s represent bits by letters of the alphabet. In this case, an empty Bloom filter will look like this:

Figure 3: Empty instance of Bloom filter

Letters represent the bits’ indexes, while the values (currently all 0) are binary flags, representing the fact that all bits are currently empty.

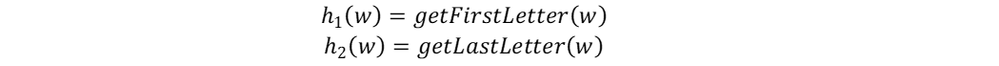

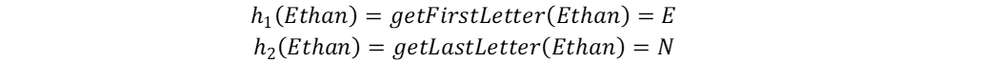

The next step in building a Bloom filter is defining a set of k independent, uniform and preferably efficient hash functions. In real life, a number of suitable fast implementations are recommended, such as murmur, Jenkins or FNV. In our mock sample, we’ll define the following two functions (k=2): h1(w) returns the first letter of w, and h2(w) returns the last letter of w.

If w represents a username, for example Alice, we get: h1(Alice)=A and h2(Alice)=E.

In a similar way: h1(Ethan)=E and h2(Ethan)=N.

Note that the output of each function is the index of a single bit in our Bloom filter – in our mock case, a letter. Generally speaking, a suitable hash function maps an entity to a single bit in the Bloom filter object.

Let’s define a way to assign a new entity w to the existing Bloom filter. This is done by computing the outputs of the k predefined hash functions and setting all resulting bits to 1.

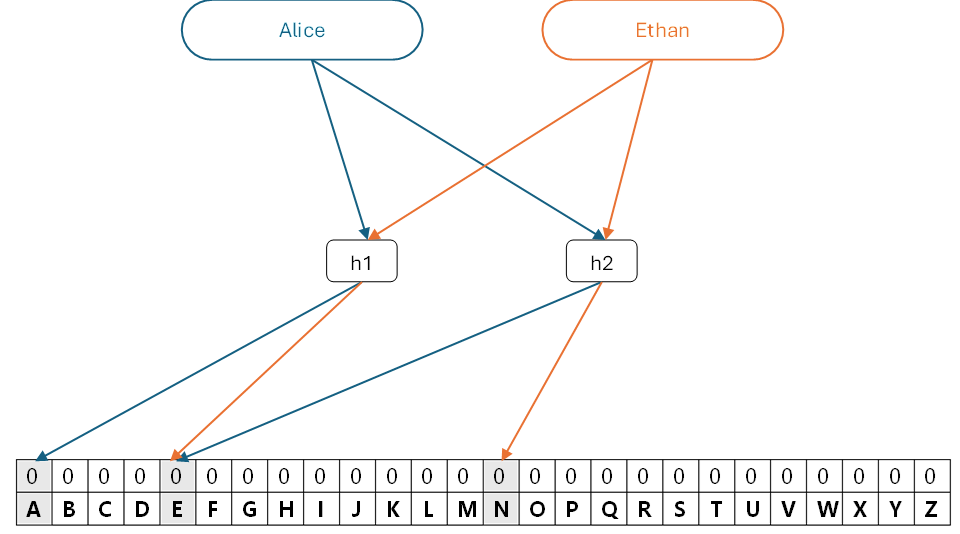

As shown in Figure 4, we start with an empty filter and want to assign the usernames Alice and Ethan to it. Since the output of h1 for Alice is A, we set bit A in the filter to 1; likewise, h2(Alice)=E, so we set bit E to 1 as well. Similarly, h1(Ethan)=E and h2(Ethan)=N. When we add this username to the same filter, bit E already equals 1 (from h2(Alice)), so we leave it as is, and set bit N to 1.

Figure 4: Assigning entities to Bloom filter

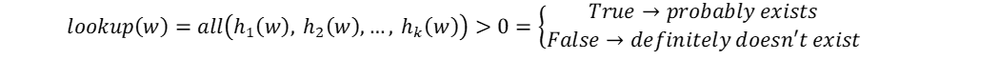

Let’s define a way to check whether an entity w already appears in the Bloom filter. This is done by running it through the same k hash functions and checking the status of the output bits in the filter. If all of the output bits are equal to 1, then entity w probably appears in the filter (we’ll address the probabilistic part a bit later). If any of the output bits is equal to 0, then entity w definitely doesn’t appear in the filter.

Figure 5: Looking up entities in Bloom filter

To summarize:

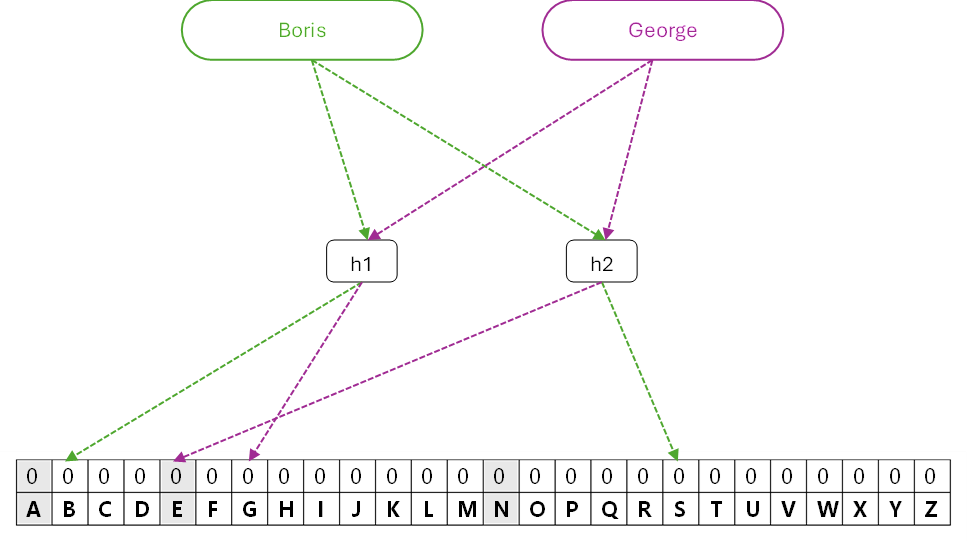

Let’s delve deeper into this logic and see how it works. Let’s check whether the username Boris appears in our filter. We know that if we had added Boris in the past, the value of bit B would be 1. Since the value is 0 (only A, E and N are 1), we know that Boris is definitely not assigned. When checking whether George appears in the filter, we can see that the value of E is 1 (courtesy of Ethan or Alice), but G is 0. So neither George nor Boris is assigned, with no possibility of error.

However, let’s look for a user named Nerya. The output values of h1 and h2 are, respectively, N and A. Both bits in the Bloom filter are 1, so we might conclude that Nerya is assigned. However, this is an error, since these bits were set by other entities (Ethan and Alice), and it leads to a potential False Positive. It is easy to see that lookup precision steadily drops as more entities are added to an existing Bloom filter, since there is a greater chance that the relevant bits were turned on by other entities. This can be remediated by increasing the filter size and, to some extent, by adding more hash functions.
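The mock filter can be reproduced with a short Python sketch. The hash functions here take the first and the last letter of the name, which matches the outputs quoted in the text (for example h1(Alice)=A, h2(Alice)=E, h1(Nerya)=N, h2(Nerya)=A); the function names `add` and `might_contain` are chosen for this illustration only.

```python
import string
from typing import Dict


def h1(w: str) -> str:
    """Mock hash: first letter of the name."""
    return w[0].upper()


def h2(w: str) -> str:
    """Mock hash: last letter of the name."""
    return w[-1].upper()


def empty_filter() -> Dict[str, int]:
    """One 'bit' per letter A..Z, all initially 0."""
    return {letter: 0 for letter in string.ascii_uppercase}


def add(bloom: Dict[str, int], w: str) -> None:
    """Set the bits selected by both hash functions to 1."""
    for h in (h1, h2):
        bloom[h(w)] = 1


def might_contain(bloom: Dict[str, int], w: str) -> bool:
    """False means 'definitely not added'; True means 'probably added'."""
    return all(bloom[h(w)] == 1 for h in (h1, h2))


bloom = empty_filter()
for name in ("Alice", "Ethan"):
    add(bloom, name)

on_bits = sorted(letter for letter, value in bloom.items() if value)
print("bits that are on:", on_bits)
for name in ("Alice", "Boris", "George", "Nerya"):
    print(name, might_contain(bloom, name))
```

Boris and George are rejected with certainty, while Nerya is reported as present even though she was never added, which is exactly the False Positive case discussed above.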

Calculating the probability of a False Positive is straightforward, because the hash functions are independent and uniform. Thus, assuming n potentially existing entities, k hash functions and a Bloom filter with m bits, we can calculate the probability that the k specific bits representing some entity were all turned on by other entities. We can also find the optimal number of hash functions that minimizes this probability for given n and m.

For example, if we expect up to 2 million entities and want to keep the False Positive rate below 0.1%, we need a Bloom filter with about 28.8 million bits, which can be stored as an object of roughly 3.4 MiB.
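For reference, the standard approximations for a Bloom filter with m bits, k hash functions and n inserted entities are $p \approx (1 - e^{-kn/m})^k$ for the False Positive probability, $k = (m/n)\ln 2$ for the optimal number of hash functions, and $m = -n \ln p / (\ln 2)^2$ for the required size at that optimum. The sketch below evaluates them; the function names are illustrative, and real libraries may round slightly differently.

```python
import math


def bloom_size_bits(n: int, p: float) -> int:
    """Bits needed for n entities at target False Positive rate p."""
    return math.ceil(-n * math.log(p) / math.log(2) ** 2)


def optimal_num_hashes(m: int, n: int) -> int:
    """Number of hash functions that minimizes the False Positive rate."""
    return max(1, round(m / n * math.log(2)))


def false_positive_rate(n: int, m: int, k: int) -> float:
    """Approximate False Positive probability after inserting n entities."""
    return (1 - math.exp(-k * n / m)) ** k


for n, p in [(2_000_000, 1e-3), (2_001_000, 1e-4)]:
    m = bloom_size_bits(n, p)
    k = optimal_num_hashes(m, n)
    print(
        f"n={n}, target p={p}: m={m} bits "
        f"({m / 8 / 2**20:.2f} MiB), k={k}, "
        f"estimated p={false_positive_rate(n, m, k):.6f}"
    )
```

Because k has to be an integer, the estimated rate can differ marginally from the target.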

The concept of assigning and looking up entities is similar to the trivial list example in Figure 2, but the implementation is probabilistic rather than exact. This allows us to balance the required storage size against the desired accuracy. What’s especially valuable in the security domain is that the accuracy cost is reflected only in False Positives, while the False Negative rate of Bloom filters is always zero.

This means that if a username appears to exist in the filter, this is probably true, with a controlled probability of a False Positive. If it isn’t there, this is definitely true, with no possibility of a False Negative. In the security domain, this translates to the useful fact that a detected novelty is always a true novelty.

Note that in current implementation there is no need to count hits on bits, revert recurring bits back to 0, etc. – although it is possible in other versions of Probabilistic Data Structures, leading to interesting options.

Bloom Filters: advanced properties

Beyond the assignment and lookup functions discussed above, Bloom filters have a number of interesting qualities that can be useful in the security domain.

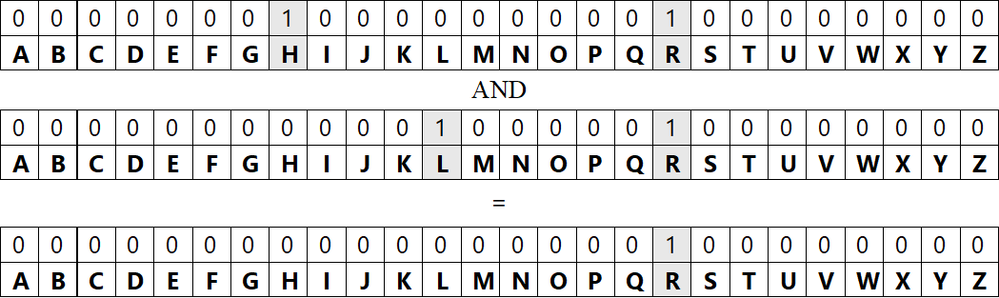

Since Bloom filters are essentially bit arrays, several filters can be merged efficiently using bitwise operations, provided they share the same size and hash functions. An intersection of filters is created with a bitwise AND operation.

Figure 6: Intersection of Bloom filters

A lookup in the resulting intersection filter lets us quickly check whether an entity appears in all of the original filters. This is helpful when looking for very common or stable entities. In our sample, it can help in finding entities that appear in all of the daily batches.

Alternatively, we can define each filter to represent some type of behavior; a lookup in the intersection then checks whether an entity displays all of the behaviors. For example, we can define filters for role (administrator or contributor), operation (read or write) and source (internal or external). By combining lookups in these filters, we can define interesting scenarios. For example, we can check whether a contributor performed write operations from an internal source but not from an external source, which might be suspicious.

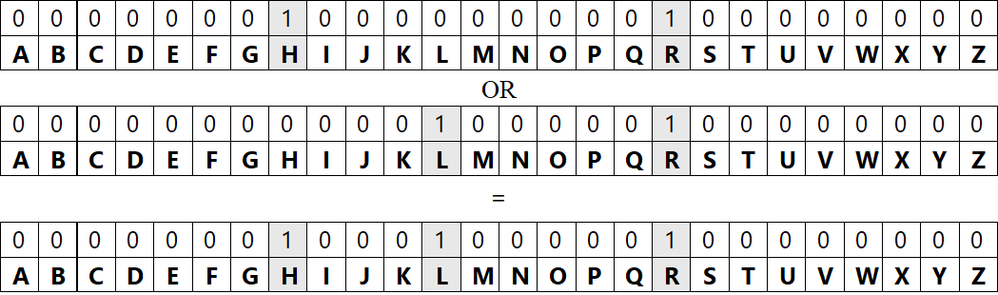

Creating a union is similarly achieved by bitwise OR.

Figure 7: Union of Bloom filters

A lookup in the resulting union filter lets us quickly check whether an entity appears in any of the original filters. This is helpful as a quick initial lookup before checking the individual filters.
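Both merge operations can be illustrated with plain Python integers used as bit arrays. The sketch reuses the mock hashes from the earlier example (first and last letter of the name, one bit per letter); the helper names and the daily user lists are hypothetical.

```python
from typing import Iterable


def letter_bit(letter: str) -> int:
    """Bit mask with a single bit set for the given letter (A=bit 0 ... Z=bit 25)."""
    return 1 << (ord(letter.upper()) - ord("A"))


def make_filter(names: Iterable[str]) -> int:
    """Mock Bloom filter: set the bits of the first and last letter of each name."""
    bits = 0
    for w in names:
        bits |= letter_bit(w[0]) | letter_bit(w[-1])
    return bits


def might_contain(bits: int, w: str) -> bool:
    needed = letter_bit(w[0]) | letter_bit(w[-1])
    return (bits & needed) == needed


day1 = make_filter(["Alice", "Ethan"])
day2 = make_filter(["Alice", "Boris"])

seen_every_day = day1 & day2  # intersection: bitwise AND
seen_any_day = day1 | day2    # union: bitwise OR

for name in ("Alice", "Ethan", "Boris", "George"):
    print(
        name,
        "| every day:", might_contain(seen_every_day, name),
        "| any day:", might_contain(seen_any_day, name),
    )
```

Lookups in a merged filter remain probabilistic, so a positive answer still has to be read as "probably".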

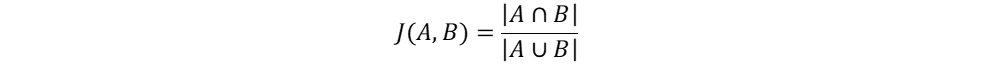

We can also define a metric of similarity between Bloom filters. For example, we can use the Jaccard similarity coefficient between sets A and B, defined as:

$$J(A, B) = \frac{|A \cap B|}{|A \cup B|}$$

The Jaccard coefficient is a number between 0 and 1 that represents the similarity between sets. We can use the sizes (sums of bits) of the intersection and union of Bloom filters (as defined above) in this formula. Values closer to 1 indicate that the same bits are on in both filters, meaning that the filters more probably (although not certainly) represent the same entities. One possible use is to gauge stability between batches in our samples, that is, whether the same usernames appear on a daily basis. Alternatively, we can compare usernames between different resources to look for resources accessed by similar groups of users.
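A minimal sketch of this calculation, again treating each filter as a Python integer whose binary digits are the filter bits. The two bit patterns are arbitrary illustrative values, and treating two empty filters as identical is a convention chosen for this sketch.

```python
def popcount(bits: int) -> int:
    """Number of bits that are on."""
    return bin(bits).count("1")


def jaccard_bits(a: int, b: int) -> float:
    """Jaccard coefficient computed on the bits of two equally sized filters."""
    union = popcount(a | b)
    if union == 0:
        return 1.0  # two empty filters are treated as identical
    return popcount(a & b) / union


filter_a = 0b101101
filter_b = 0b100111
similarity = jaccard_bits(filter_a, filter_b)
print(f"Jaccard similarity: {similarity:.2f}")
```

Here three bits are on in both filters and five bits are on in at least one of them, so the coefficient is 3/5.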

Feature selection using Bloom filters

Besides the high cardinality that is common in the cloud security domain, another problem is created by the presence of multiple variables, known as the ‘curse of dimensionality’. For example, it is common practice to log IP addresses along with usernames, agents and device IDs. Even if none of these variables has exceptionally high cardinality, the number of potential combinations grows very quickly.

The merging properties of Bloom filters, as discussed above, can be very useful in alleviating this problem. Instead of logging each combination, we can retain one dedicated Bloom filter for each variable and rely on the merging properties (and accuracy estimation) to check multiple scenarios.

Now let’s see how Bloom filters can be used effectively in cloud security domain.

Implementation

In the current implementation, we explore the application of Bloom filters to detecting and preventing security threats in a cloud environment by monitoring the massive number of IP addresses accessing a server each day.

As previously shown, we chose Bloom filters for this scenario due to their unique characteristics, making them a highly efficient and practical solution for detecting and preventing security threats in a cloud environment.

As described above, Bloom filters provide a fast probabilistic approach to query whether an IP address is already present in the set. This ability is crucial for real-time threat detection and prevention, unlike storing and querying for IPs appearing in raw data. In addition, False Positives can be controlled by adjusting the parameters of the Bloom filter, allowing for an optimal balance between the rate of False Positives and the size of Bloom filter object. Finally, Bloom filters can be easily implemented, updated, and combined, making them a flexible and scalable solution.

We simulate web server access logs from the 17th of April to the 22nd of April and use this period to train Bloom filter objects. On the 23rd of April, we predict whether each IP has been seen before, using the fitted Bloom filter objects. We used a standard Python Bloom filter implementation, with an approximate size of 5MB, containing around 38.3 million bits. The total number of unique IPs simulated in this experiment is 2,001,000, of which we reserve 1,000 IPs that are first seen on April 23rd. Throughout the training period, 1.8 million to 1.9 million of the remaining 2 million unique IP addresses appear each day.

The following code snippet creates a list of Bloom filters, one trained on each day's data. We want to keep the error level below the predefined threshold of 0.0001 for the expected 2.001 million elements. For this, we need around 38 million bits in the filter (roughly 4.6 MiB) and 13 hash functions. For more details about the calculation, you can use an online Bloom filter calculator, such as the one at hur.st.

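    # Assumption: BloomFilter comes from the bloom-filter2 package (or the
    # API-compatible bloom-filter package); `spark` is an active SparkSession.
    from bloom_filter2 import BloomFilter

    def create_bloom(iterator):
        """Build one Bloom filter from all rows of a single partition."""
        bf = BloomFilter(max_elements=2_001_000, error_rate=1e-4)
        for row in iterator:
            bf += row[0]  # row[0] is the "ip" column
        yield bf

    # The second argument of treeReduce is the depth of the reduction tree.
    bfs = [
        spark.read.parquet(f'/log_events{i}.parquet')
        .select("ip")
        .rdd
        .mapPartitions(create_bloom)
        .treeReduce(lambda bf1, bf2: bf1.__ior__(bf2), partitions_num)
        for i in range(1, 6)
    ]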

We used Spark RDDs (Resilient Distributed Datasets) instead of DataFrames in the code snippet because we need customized, low-level transformations such as mapPartitions and treeReduce. Custom partition-level operations and more efficient reduce operations are available on RDDs, while the DataFrame API does not offer them directly. Note that the second argument of treeReduce (the `partitions_num` variable in the snippet) sets the depth of the reduction tree; the number of partitions itself can be controlled by repartitioning the data before mapPartitions.

During the mapPartitions phase, Bloom filters are fitted in a distributed manner, with each dataset partition having its own separate Bloom filter object. This means that we obtain one fitted Bloom filter for every data partition. Since we only need one Bloom filter to represent the network traffic of a given day, during the treeReduce phase we take the union of the fitted Bloom filters across the partitions using a simple OR operation, as shown in the code snippet.

Now we have a list of five Bloom filters, bfs, each fitted on a given day, and we want to predict which IPs are first seen on April 23rd. Hence, we union all five Bloom filters into one, bf. After that, we use this filter to check whether each IP appearing on the 23rd has been seen before. The following code presents an example of how to union the list of Bloom filters:

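    import copy

    # Copy the first filter so that bfs[0] itself is left unchanged.
    bf = copy.deepcopy(bfs[0])
    for bf_other in bfs[1:]:
        bf.union(bf_other)  # in-place bitwise OR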

The union operation implements the OR operation, as shown in Figure 7. Each Bloom filter object reports the following parameters:

    bfs[0]
    >>BloomFilter(ideal_num_elements_n=2001000, error_rate_p=0.000100, num_bits_m=38359404)

We can see that the parameters correspond with the Bloom filter calculation: for storing 2.001 million entities (n) while retaining an error rate (p) of 0.0001, we need around 38 million bits (m). Without getting into the implementation of the Bloom filter object, we can understand how the OR operation is performed by looking at the array that holds each filter’s bits. Each number in the array is an integer word that packs a block of the filter’s bits, and the bits that are “on” were set in the way shown in Figure 4 for inserting a new entity.

Hence, for the first day, the first ten words of the bit array are:

    bfs[0].backend.array_[:10]
    >>array('L', [2132816780, 929291496, 979582583, 2750484562, 801488345, 1351563566, 259677395, 1443724065, 3424155643, 3505712587])

And for the second day:

    bfs[1].backend.array_[:10]
    >>array('L', [2132816780, 388226280, 979581495, 2750484562, 801488345, 1351563310, 259677395, 1443723553, 1209563130, 3237268939])

Although we can see which bits are on, we have no indication of which IPs were added to each Bloom filter; for this, we need to apply the hash functions, as shown in Figure 5. The union operation results in a Bloom filter in which every bit that is on in any of the inputs is on.
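To make the word-level OR concrete, the sketch below applies it to the first three values printed for each day. It assumes that each array element is an integer word packing a block of filter bits; the way bit positions inside a word map to filter positions is internal to the library, so the positions listed here are relative to each word only. The helper name `set_bit_positions` is illustrative.

```python
from typing import List

# First three words printed above for day 1 and day 2.
day1_words = [2132816780, 929291496, 979582583]
day2_words = [2132816780, 388226280, 979581495]


def set_bit_positions(word: int) -> List[int]:
    """Positions (0 = least significant) of the bits that are on in a word."""
    return [i for i in range(word.bit_length()) if (word >> i) & 1]


# The union of two filters is the element-wise bitwise OR of their words.
union_words = [a | b for a, b in zip(day1_words, day2_words)]

for a, b, u in zip(day1_words, day2_words, union_words):
    print(
        f"day 1: {len(set_bit_positions(a))} bits on, "
        f"day 2: {len(set_bit_positions(b))} bits on, "
        f"union: {len(set_bit_positions(u))} bits on"
    )
```

Every bit that is on in either day's word stays on in the union, and identical words are left unchanged.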

Now that we have one Bloom filter that “knows” whether an IP was seen during the previous week, we can use this information for the next day, April 23rd. As previously mentioned, we added one thousand IPs that didn’t appear in the previous week. The following code snippet shows how to predict, for each IP seen on April 23rd, whether it is first seen or not. We would like to use the Bloom filter in a distributed manner:

    def first_seen_iter(list_of_records):
        """Flag, for each record of a partition, whether its IP is in bf."""
        for rec in list_of_records:
            yield [rec['ip'], rec['date'], rec['ip'] in bf]

    # par_n is the desired number of partitions.
    df_test_first_predict = (
        df_test.repartition(par_n)
        .rdd
        .mapPartitions(first_seen_iter)
        .toDF(['ip', 'date', 'seen_before'])
        .cache()
    )

During the mapPartitions phase, we propagate the trained Bloom filter to the different partitions, and in each partition, we use the Bloom filter to check whether an IP appears in the Bloom filter or not, creating a new column seen_before.

We can now filter the DataFrame with rows where the seen_before column is false, indicating IPs that are seen for the first time.

    from pyspark.sql import functions as f

    df_test_first_predict.filter(~f.col('seen_before')).count()
    >> 1000

As expected, the union Bloom filter detects all one thousand previously unseen IPs.

However, what if we require more than a simple "yes" or "no" answer about whether a specific IP has previously accessed our server? As in the iterative mode shown in Figure 2, if the number of previous appearances of an IP is low, it might be considered anomalous. In this scenario, instead of merging the fitted Bloom filters into a single filter, we keep all of them and count the number of filters in which each IP appears. The following code illustrates this:

    def get_bf_count(bf_list, ip):
        """Number of daily Bloom filters that (probably) contain the IP."""
        return sum(ip in bf for bf in bf_list)

    def count_seen_iter(list_of_records):
        """Attach to each record the number of days on which its IP was seen."""
        for rec in list_of_records:
            yield [rec['ip'], rec['date'], get_bf_count(bfs, rec['ip'])]

    df_test_count_predict = (
        df_test.rdd
        .mapPartitions(count_seen_iter)
        .toDF(['ip', 'date', 'count_seen'])
        .cache()
    )

The get_bf_count function returns the number of daily Bloom filters in which an IP appears. During the mapPartitions phase, we propagate the list of trained Bloom filters to the different partitions, and in each partition we use the list to count the filters that contain each IP, creating a new column count_seen.

Figure 8: Number of days on IP

As we can see in Figure 8, most of the IPs appeared on at least 4 days of the previous week. The number of first-seen IPs is 1000, as in the previous example. In addition, 76 IPs appeared on only one day, and we may want to investigate those IPs as well.

Although the simulated period was relatively short, the code is implemented in PySpark and the Bloom filters are trained in a distributed manner. Thus, it can easily be scaled up to a longer timeframe with more data points.

Multivariate scenario

A more advanced way to use the Bloom filter properties involves training a separate filter on every relevant feature. This strategy offers a better way of finding anomalous combinations of features.

For instance, we might want to identify anomalous access to blobs in cloud storage using access tokens such as the Shared Access Signature (SAS). The relevant attributes for this task would be the SAS token, a source IP address, and the full path of the blob.

Maintaining a table that stores all potential combinations of SAS tokens, IP addresses, and blobs is not feasible. A more efficient way would involve keeping unique SAS tokens, IP addresses, and blobs in separate tables. We may trigger an alert if a new entry has an unseen combination of SAS token, IP address, and blob.

Even this table-based solution presents significant challenges related to high storage costs, scalability, and performance. Maintaining aggregated tables of all previous access records would require vast amounts of storage space, which could be expensive to maintain, especially as the data grows over time. In terms of scalability, managing and processing this ever-expanding dataset may become increasingly difficult. Lastly, fetching and searching those tables could lead to performance degradation or slow response times.

Using a Bloom filter for each attribute can be a more efficient solution. This approach enables quick checks of whether a new entity has already been seen, while consuming less space and allowing high-rate retrieval. An alert can be triggered if we get a negative answer from all filters for a new entity, meaning the new record combines a previously unseen SAS token, IP address, and blob.
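The per-attribute approach can be sketched as follows. `TinyBloom` is a deliberately small, illustrative Bloom filter written for this example (it is not the library used in the experiment above), and the attribute names, tokens, addresses and paths are all hypothetical.

```python
import hashlib
from typing import Dict, Iterable, List

ATTRIBUTES = ("sas_token", "ip", "blob_path")


class TinyBloom:
    """Minimal Bloom filter backed by a Python int (illustration only)."""

    def __init__(self, num_bits: int = 1 << 16, num_hashes: int = 7) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = 0

    def _positions(self, item: str) -> List[int]:
        # Derive k bit positions from one SHA-256 digest (double hashing).
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1
        return [(h1 + i * h2) % self.num_bits for i in range(self.num_hashes)]

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits |= 1 << pos

    def __contains__(self, item: str) -> bool:
        return all((self.bits >> pos) & 1 for pos in self._positions(item))


def train(records: Iterable[Dict[str, str]]) -> Dict[str, TinyBloom]:
    """Fit one Bloom filter per attribute."""
    filters = {attr: TinyBloom() for attr in ATTRIBUTES}
    for record in records:
        for attr in ATTRIBUTES:
            filters[attr].add(record[attr])
    return filters


def unseen_attributes(filters: Dict[str, TinyBloom], record: Dict[str, str]) -> List[str]:
    """Attributes of the record whose value is definitely new."""
    return [attr for attr in ATTRIBUTES if record[attr] not in filters[attr]]


history = [
    {"sas_token": "token-1", "ip": "203.0.113.10", "blob_path": "/container/a.csv"},
    {"sas_token": "token-2", "ip": "203.0.113.11", "blob_path": "/container/b.csv"},
]
filters = train(history)

new_record = {"sas_token": "token-9", "ip": "198.51.100.7", "blob_path": "/container/z.csv"}
missing = unseen_attributes(filters, new_record)
if len(missing) == len(ATTRIBUTES):
    print("alert: every attribute of this record is new:", missing)
```

Returning the list of unseen attributes, rather than a single flag, also makes it possible to alert on partial novelty (for example a known token used from a new IP address) if a scenario calls for it.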

Summary

The key advantage of Bloom filters lies in their ability to provide a controlled, flexible trade-off between accuracy (affected only by False Positives) and efficiency (storage and search). This makes Bloom filters a valuable practical approach for security features dealing with entity monitoring in high-cardinality cloud environments.

Their more advanced properties – such as merge operations – allow them to support more complex scenarios while remaining scalable and predictable.

In this post, we introduced Bloom filters to the security domain, discussed their properties, and shared sample code for implementing different scenarios.

To learn more about protecting multi-cloud environments, read about Microsoft Defender for Cloud.
