During my session at the recent Microsoft Exchange Conference (MEC), I revealed Microsoft’s preferred architecture (PA) for Exchange Server 2013. The PA is the Exchange Engineering Team’s prescriptive approach to what we believe is the optimum deployment architecture for Exchange 2013, and one that is very similar to what we deploy in Office 365.

The PA is designed with several business requirements in mind. For example, requirements that the architecture be able to:

- Include both high availability within the datacenter, and site resilience between datacenters

- Support multiple copies of each database, thereby allowing for quick activation

- Reduce the cost of the messaging infrastructure

- Increase availability by optimizing around failure domains and reducing complexity

The specific prescriptive nature of the PA means of course that not every customer will be able to deploy it (for example, customers without multiple datacenters). And some of our customers have different business requirements or other needs, which necessitate an architecture different from that shown here. If you fall into those categories, and you want to deploy Exchange on-premises, there are still advantages to adhering as closely as possible to the PA where possible, and deviate only where your requirements widely differ. Alternatively, you can consider Office 365 where you can take advantage of the PA without having to deploy or manage servers.

Before I delve into the PA, I think it is important that you understand a concept that is the cornerstone for this architecture – simplicity.

Simplicity

Failure happens. There is no technology that can change this. Disks, servers, racks, network appliances, cables, power substations, generators, operating systems, applications (like Exchange), drivers, and other services – there is simply no part of an IT services offering that is not subject to failure.

One way to mitigate failure is to build in redundancy. Where one entity is likely to fail, two or more entities are used. This pattern can be observed in Web server arrays, disk arrays, and the like. But redundancy by itself can be prohibitively expensive (simple multiplication of cost). For example, the cost and complexity of the SAN based storage system that was at the heart of Exchange until the 2007 release, drove the Exchange Team to step up its investment in the storage stack and to evolve the Exchange application to integrate the important elements of storage directly into its architecture. We recognized that every SAN system would ultimately fail, and that implementing a highly redundant system using SAN technology would be cost-prohibitive. In response, Exchange has evolved from requiring expensive, scaled-up, high-performance SAN storage and related peripherals, to now being able to run on cheap, scaled-out servers with commodity, low-performance SAS/SATA drives in a JBOD configuration with commodity disk controllers. This architecture enables Exchange to be resilient to any storage related failure, while enabling you to deploy large mailboxes at a reasonable cost.

By building the replication architecture into Exchange and optimizing Exchange for commodity storage, the failure mode is predictable from a storage perspective. This approach does not stop at the storage layer; redundant NICs, power supplies, etc., can also be removed from the server hardware. Whether it is a disk, controller, or motherboard that fails, the end result should be the same, another database copy is activated and takes over.

The more complex the hardware or software architecture, the more unpredictable failure events can be. Managing failure at any scale is all about making recovery predictable, which drives the necessity to having predictable failure modes. Examples of complex redundancy are active/passive network appliance pairs, aggregation points on the network with complex routing configurations, network teaming, RAID, multiple fiber pathways, etc. Removing complex redundancy seems unintuitive on its face – how can removing redundancy increase availability? Moving away from complex redundancy models to a software-based redundancy model, creates a predictable failure mode.

The PA removes complexity and redundancy where necessary to drive the architecture to a predictable recovery model: when a failure occurs, another copy of the affected database is activated.

The PA is divided into four areas of focus:

- Namespace design

- Datacenter design

- Server design

- DAG design

Namespace Design

In the Namespace Planning and Load Balancing Principles articles, I outlined the various configuration choices that are available with Exchange 2013. From a namespace perspective, the choices are to either deploy a bound namespace (having a preference for the users to operate out of a specific datacenter) or an unbound namespace (having the users connect to any datacenter without preference).

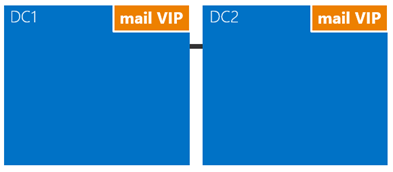

The recommended approach is to utilize the unbound model, deploying a single namespace per client protocol for the site resilient datacenter pair (where each datacenter is assumed to represent its own Active Directory site - see more details on that below). For example:

- autodiscover.contoso.com

- For HTTP clients: mail.contoso.com

- For IMAP clients: imap.contoso.com

- For SMTP clients: smtp.contoso.com

Each namespace is load balanced across both datacenters in a configuration that does not leverage session affinity, resulting in fifty percent of traffic being proxied between datacenters. Traffic is equally distributed across the datacenters in the site resilient pair, via DNS round-robin, geo-DNS, or other similar solution you may have at your disposal. Though from our perspective, the simpler solution is the least complex and easier to manage, so our recommendation is to leverage DNS round-robin.

In the event that you have multiple site resilient datacenter pairs in your environment, you will need to decide if you want to have a single worldwide namespace, or if you want to control the traffic to each specific datacenter pair by using regional namespaces. Ultimately your decision depends on your network topology and the associated cost with using an unbound model; for example, if you have datacenters located in North America and Europe, the network link between these regions might not only be costly, but it might also have high latency, which can introduce user pain and operational issues. In that case, it makes sense to deploy a bound model with a separate namespace for each region.

Site Resilient Datacenter Pair Design

To achieve a highly available and site resilient architecture, you must have two or more datacenters that are well-connected (ideally, you want a low round-trip network latency, otherwise replication and the client experience are adversely affected). In addition, the datacenters should be connected via redundant network paths supplied by different operating carriers.

While we support stretching an Active Directory site across multiple datacenters, for the PA we recommend having each datacenter be its own Active Directory site. There are two reasons:

- Transport site resilience via Shadow Redundancy and Safety Net can only be achieved when the DAG has members located in more than one Active Directory site.

- Active Directory has published guidance that states that subnets should be placed in different Active Directory sites when the round trip latency is greater than 10ms between the subnets.

Server Design

In the PA, all servers are physical, multi-role servers. Physical hardware is deployed rather than virtualized hardware for two reasons:

- The servers are scaled to utilize eighty percent of resources during the worst-failure mode.

- Virtualization adds an additional layer of management and complexity, which introduces additional recovery modes that do not add value, as Exchange provides equivalent functionality out of the box.

By deploying multi-role servers, the architecture is simplified as all servers have the same hardware, installation process, and configuration options. Consistency across servers also simplifies administration. Multi-role servers provide more efficient use of server resources by distributing the Client Access and Mailbox resources across a larger pool of servers. Client Access and Database Availability Group (DAG) resiliency is also increased, as there are more servers available for the load-balanced pool and for the DAG.

Commodity server platforms (e.g., 2U, dual socket servers with no more than 24 processor cores and 96GB of memory, that hold 12 large form-factor drive bays within the server chassis) are use in the PA. Additional drive bays can be deployed per-server depending on the number of mailboxes, mailbox size, and the server’s scalability.

Each server houses a single RAID1 disk pair for the operating system, Exchange binaries, protocol/client logs, and transport database. The rest of the storage is configured as JBOD, using large capacity 7.2K RPM serially attached SCSI (SAS) disks (while SATA disks are also available, the SAS equivalent provides better IO and a lower annualized failure rate). Bitlocker is used to encrypt each disk, thereby providing data encryption at rest and mitigating concerns around data theft via disk replacement.

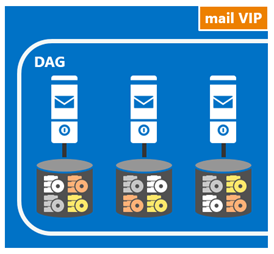

To ensure that the capacity and IO of each disk is used as efficiently as possible, four database copies are deployed per-disk. The normal run-time copy layout (calculated in the Exchange 2013 Server Role Requirements Calculator) ensures that there is no more than a single copy activated per-disk.

At least one disk in the disk pool is reserved as a hot spare. AutoReseed is enabled and quickly restores database redundancy after a disk failure by activating the hot spare and initiating database copy reseeds.

Database Availability Group Design

Within each site resilient datacenter pair you will have one or more DAGs.

DAG Configuration

As with the namespace model, each DAG within the site resilient datacenter pair operates in an unbound model with active copies distributed equally across all servers in the DAG. This model provides two benefits:

- Ensures that each DAG member’s full stack of services is being validated (client connectivity, replication pipeline, transport, etc.).

- Distributes the load across as many servers as possible during a failure scenario, thereby only incrementally increasing resource utilization across the remaining members within the DAG.

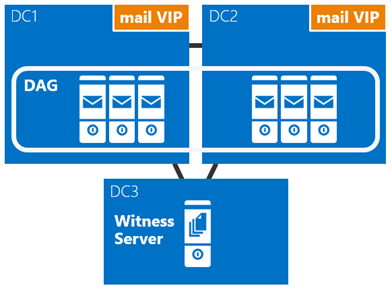

Each datacenter is symmetrical, with an equal number of member servers within a DAG residing in each datacenter. This means that each DAG contains an even number of servers and uses a witness server for quorum arbitration.

The DAG is the fundamental building block in Exchange 2013. With respect to DAG size, a larger DAG provides more redundancy and resources. Within the PA, the goal is to deploy larger DAGs (typically starting out with an eight member DAG and increasing the number of servers as required to meet your requirements) and only create new DAGs when scalability introduces concerns over the existing database copy layout.

DAG Network Design

Since the introduction of continuous replication in Exchange 2007, Exchange has recommended multiple replication networks for separating client traffic from replication traffic. Deploying two networks allows you to isolate certain traffic along different network pathways and ensure that during certain events (e.g., reseed events) the network interface is not saturated (which is an issue with 100Mb, and to a certain extent, 1Gb interfaces). However, for most customers, having two networks operating in this manner was only a logical separation, as the same copper fabric was used by both networks in the underlying network architecture.

With 10Gb networks becoming the standard, the PA moves away from the previous guidance of separating client traffic from replication traffic. A single network interface is all that is needed because ultimately our goal is to achieve a standard recovery model despite the failure - whether a server failure occurs or a network failure occurs, the result is the same, a database copy is activated on another server within the DAG. This architectural change simplifies the network stack, and obviates the need to eliminate heartbeat cross-talk.

Witness Server Placement

Ultimately, the placement of the witness server determines whether the architecture can provide automatic datacenter failover capabilities or whether it will require a manual activation to enable service in the event of a site failure.

If your organization has a third location with a network infrastructure that is isolated from network failures that affect the site resilient datacenter pair in which the DAG is deployed, then the recommendation is to deploy the DAG’s witness server in that third location. This configuration gives the DAG the ability to automatically failover databases to the other datacenter in response to a datacenter-level failure event, regardless of which datacenter has the outage.

Figure 3: DAG (Three Datacenter) Design

If your organization does not have a third location, then place the witness server in one of the datacenters within the site resilient datacenter pair. If you have multiple DAGs within the site resilient datacenter pair, then place the witness server for all DAGs in the same datacenter (typically the datacenter where the majority of the users are physically located). Also, make sure the Primary Active Manager (PAM) for each DAG is also located in the same datacenter.

Data Resiliency

Data resiliency is achieved by deploying multiple database copies. In the PA, database copies are distributed across the site resilient datacenter pair, thereby ensuring that mailbox data is protected from software, hardware and even datacenter failures.

Each database has four copies, with two copies in each datacenter, which means at a minimum, the PA requires four servers. Out of these four copies, three of them are configured as highly available. The fourth copy (the copy with the highest Activation Preference) is configured as a lagged database copy. Due to the server design, each copy of a database is isolated from its other copies, thereby reducing failure domains and increasing the overall availability of the solution as discussed in DAG: Beyond the “A”.

The purpose of the lagged database copy is to provide a recovery mechanism for the rare event of system-wide, catastrophic logical corruption. It is not intended for individual mailbox recovery or mailbox item recovery.

The lagged database copy is configured with a seven day ReplayLagTime. In addition, the Replay Lag Manager is also enabled to provide dynamic log file play down for lagged copies. This feature ensures that the lagged database copy can be automatically played down and made highly available in the following scenarios:

- When a low disk space threshold is reached

- When the lagged copy has physical corruption and needs to be page patched

- When there are fewer than three available healthy copies (active or passive) for more than 24 hours

By using the lagged database copy in this manner, it is important to understand that the lagged database copy is not a guaranteed point-in-time backup. The lagged database copy will have an availability threshold, typically around 90%, due to periods where the disk containing a lagged copy is lost due to disk failure, the lagged copy becoming an HA copy (due to automatic play down), as well as, the periods where the lagged database copy is re-building the replay queue.

To protect against accidental (or malicious) item deletion, Single Item Recovery or In-Place Hold technologies are used, and the Deleted Item Retention window is set to a value that meets or exceeds any defined item-level recovery SLA.

With all of these technologies in play, traditional backups are unnecessary; as a result, the PA leverages Exchange Native Data Protection.

Summary

The PA takes advantage of the changes made in Exchange 2013 to simplify your Exchange deployment, without decreasing the availability or the resiliency of the deployment. And in some scenarios, when compared to previous generations, the PA increases availability and resiliency of your deployment.

Ross Smith IV

Principal Program Manager

Office 365 Customer Experience