You can now sign up for Azure OpenAI Service!

The current release of this demo uses Azure OpenAI. Developers can call the OpenAI models through the API, or use Azure OpenAI Studio. The goal of this demo is to build a fun, easy-to-use fine-tuned model that can answer students' course-related questions at any time.

The goal of this AI is to save educators' time. At IVE in Hong Kong, we speak Cantonese, and students generally ask questions in Traditional Chinese.

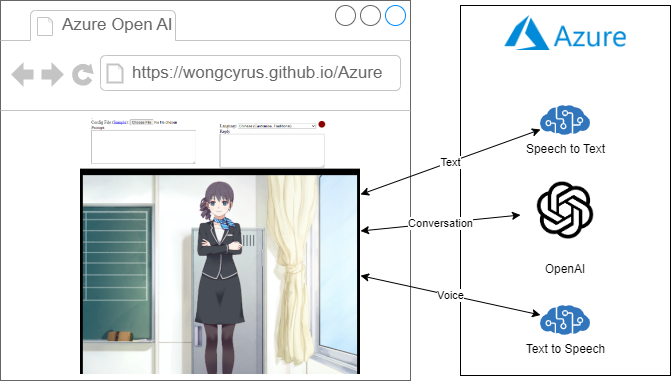

Therefore, we decided to build a virtual assistant that uses Azure OpenAI and Azure Speech services to solve the problem.

Students can talk to her in any language supported by Microsoft Cognitive Services!

Demo In English

Demo In Cantonese

Please note that the current version asks you to upload a JSON config file instead of filling in four text fields.

Try it out

Prerequisites

- You need an Azure subscription with access to Azure OpenAI and the Microsoft Cognitive Services Text to Speech service.

- Create an OpenAI model deployment and note down the endpoint URL and key; you can follow “Quickstart: Get started generating text using Azure OpenAI”. For the chatbot use case, you can pick “text-davinci-003”.

- Create a “Text to speech” resource; you can follow “How to get started with neural text to speech in Azure | Azure Tips and Tricks”. Note down the region and key.

Try the Live2D Azure OpenAI chatbot

- Go to https://wongcyrus.github.io/AzureOpenAILive2DChatbotDemo/index.html

- Click the “Sample” link and download the JSON config file.

- Edit the config file with your own endpoint and API key.

- Upload your config file.

- For text input, type in the “Prompt” text box, click the avatar, and it will respond to your prompt!

- For voice input, select your language, click the red dot, and speak into your mic. Click the red dot again to indicate that you have finished speaking, and it will respond to your speech.
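The config file replaces the four text fields, so a malformed file is the most likely setup mistake. Based only on the four keys the AzureAi constructor reads (openaiurl, openaipikey, ttsregion, ttsapikey), a minimal sketch of validating the uploaded file before use might look like this. ChatbotConfig and parseChatbotConfig are hypothetical names, and the real sample file may contain additional keys.

```typescript
// Hypothetical type for the uploaded config file. The four keys are the ones
// the AzureAi constructor reads; the sample file may contain more.
interface ChatbotConfig {
  openaiurl: string;   // full Azure OpenAI completions URL of your deployment
  openaipikey: string; // Azure OpenAI key (spelled as the app expects it)
  ttsregion: string;   // Speech resource region, e.g. "eastasia"
  ttsapikey: string;   // Speech resource key
}

const REQUIRED_KEYS: (keyof ChatbotConfig)[] = ["openaiurl", "openaipikey", "ttsregion", "ttsapikey"];

// Parses the uploaded JSON text and reports every missing or empty field at once.
function parseChatbotConfig(text: string): ChatbotConfig {
  const raw: unknown = JSON.parse(text);
  if (typeof raw !== "object" || raw === null) {
    throw new Error("Config must be a JSON object");
  }
  const obj = raw as Record<string, unknown>;
  const missing = REQUIRED_KEYS.filter(
    (k) => typeof obj[k] !== "string" || (obj[k] as string).trim() === ""
  );
  if (missing.length > 0) {
    throw new Error(`Missing config fields: ${missing.join(", ")}`);
  }
  return {
    openaiurl: obj.openaiurl as string,
    openaipikey: obj.openaipikey as string,
    ttsregion: obj.ttsregion as string,
    ttsapikey: obj.ttsapikey as string,
  };
}
```

Reporting all missing fields in one message saves a round of trial and error compared with failing on the first one.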

How does it work?

This application is just a simple client-side static website built with HTML5, CSS3, and JavaScript. We forked and modified the Cubism Web Samples from Live2D, a software technology that allows you to create dynamic expressions that breathe life into an original 2D illustration.

High-level overview

Behind the scenes, it is a TypeScript application, and we hacked the sample to add Ajax calls when events happen.

For voice input:

- When the user clicks the red dot, the app starts capturing mic input with MediaRecorder.

- When the user clicks the red dot again, it stops capturing mic input and calls the startVoiceConversation method with the language and a Blob object in WebM format.

- The startVoiceConversation call is passed down through the Live2D objects, from main.ts to LAppDelegate to LAppLive2DManager. LAppLive2DManager then makes a series of Ajax calls to Azure services through the AzureAi class: getTextFromSpeech, getOpenAiAnswer, and getSpeechUrl.

- Since the Azure Speech-to-Text REST API for short audio does not support the WebM format, getTextFromSpeech converts WebM to WAV with webm-to-wav-converter.

- With the WAV data from Azure Text-to-Speech, it calls the loadWavFile method of wavFileHandler, which samples the voice and gets the voice level.

- It calls the startRandomMotion method of the model object, which adds lip-sync actions according to the voice level. The audio is played right before the parent model's update call.
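The voice level that drives lip sync is essentially the loudness of the current slice of audio. A minimal sketch, assuming 16-bit mono PCM samples like the riff-8khz-16bit-mono-pcm output requested from Text-to-Speech, computes the root mean square (RMS) of one window per animation frame. rmsLevel and levelAtTime are hypothetical helpers; the Cubism sample's own wav handler may differ in details such as windowing and channel handling.

```typescript
// Root-mean-square level of a window of 16-bit PCM samples, normalized to [0, 1].
// A louder window gives a larger value, which can be used as the mouth-open amount.
function rmsLevel(samples: Int16Array, start: number, end: number): number {
  const from = Math.max(0, start);
  const to = Math.min(samples.length, end);
  if (to <= from) return 0;
  let sumOfSquares = 0;
  for (let i = from; i < to; i++) {
    const s = samples[i] / 32768; // scale to [-1, 1)
    sumOfSquares += s * s;
  }
  return Math.sqrt(sumOfSquares / (to - from));
}

// Level of the window that starts at `seconds` and lasts one animation frame.
// At 8 kHz and 60 frames per second, a window covers 133 samples.
function levelAtTime(
  samples: Int16Array,
  sampleRate: number,
  seconds: number,
  frameSeconds = 1 / 60
): number {
  const start = Math.floor(seconds * sampleRate);
  const end = start + Math.max(1, Math.floor(frameSeconds * sampleRate));
  return rmsLevel(samples, start, end);
}
```

RMS is used rather than the peak sample because it tracks perceived loudness more smoothly, so the mouth does not flicker on single spikes.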

Text input works in much the same way, except that the trigger event is a tap on the model, and the first two steps (capturing mic input) are skipped.
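Both flows share the same tail: get an answer, turn it into audio, then animate. A minimal sketch of that chain with async/await, written against a hypothetical ConversationServices interface that stands in for the AzureAi methods and the model's loadWavFile and startRandomMotion calls, shows how text input simply skips speech recognition. The interface, voiceConversation, and textConversation are illustrative names, not the project's code; TAudio would be the WebM Blob in the browser.

```typescript
// Hypothetical abstraction over the AzureAi calls and the Live2D model.
// TAudio is the recorded audio type (a WebM Blob in the browser).
interface ConversationServices<TAudio> {
  speechToText(language: string, audio: TAudio): Promise<string>;
  answer(prompt: string): Promise<string>;
  textToSpeechUrl(language: string, text: string): Promise<string | undefined>;
  speak(url: string): void; // e.g. loadWavFile(url), then startRandomMotion(...)
}

// Shared tail of both flows: prompt -> answer -> audio URL -> lip-synced motion.
async function respond<TAudio>(
  services: ConversationServices<TAudio>,
  language: string,
  prompt: string
): Promise<string> {
  if (prompt.trim() === "") return ""; // nothing recognized or typed
  const answer = await services.answer(prompt);
  const url = await services.textToSpeechUrl(language, answer);
  if (url !== undefined) services.speak(url);
  return answer;
}

// Voice input: recognize the recorded audio first, then continue like text input.
async function voiceConversation<TAudio>(
  services: ConversationServices<TAudio>,
  language: string,
  audio: TAudio
): Promise<string> {
  const prompt = await services.speechToText(language, audio);
  return respond(services, language, prompt);
}

// Text input (tap on the model): recording and speech recognition are skipped.
function textConversation<TAudio>(
  services: ConversationServices<TAudio>,
  language: string,
  prompt: string
): Promise<string> {
  return respond(services, language, prompt);
}
```

Injecting the services keeps the ordering logic testable without a browser, microphone, or Azure keys.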

There was no Azure OpenAI JavaScript SDK at the time of writing. For the Speech service, we tried microsoft-cognitiveservices-speech-sdk but hit a Webpack problem, so we decided to use the REST APIs for all Azure API calls instead.
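Calling REST APIs directly means every call needs the same plumbing: send the request, check the HTTP status, and parse the JSON. A minimal sketch of such a helper follows, with the fetch function injected so it can be unit-tested. postJson, FetchLike, and FetchResponseLike are hypothetical names; the header names you would pass ('api-key' for Azure OpenAI, 'Ocp-Apim-Subscription-Key' for Speech) are the ones the AzureAi class uses.

```typescript
// Minimal subset of the Fetch API used here, so a stub can stand in for window.fetch.
interface FetchResponseLike {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<FetchResponseLike>;

// POSTs a JSON body and returns the parsed JSON response. It fails loudly on HTTP errors
// (e.g. 401 for a wrong key, 429 when throttled) instead of reading a missing field later.
async function postJson<T>(
  fetchImpl: FetchLike,
  url: string,
  headers: Record<string, string>,
  body: unknown
): Promise<T> {
  const response = await fetchImpl(url, {
    method: "POST",
    headers: { "Content-Type": "application/json", ...headers },
    body: JSON.stringify(body),
  });
  if (!response.ok) {
    throw new Error(`POST ${url} failed with HTTP ${response.status}`);
  }
  return (await response.json()) as T;
}
```

In the browser, the global fetch already satisfies FetchLike, so a call could look like postJson(fetch, openaiurl, { "api-key": key }, { prompt, max_tokens: 300 }).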

AzureAi Class

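```typescript
import { LAppPal } from "./lapppal";
import { getWaveBlob } from "webm-to-wav-converter";
import { LANGUAGE_TO_VOICE_MAPPING_LIST } from "./languagetovoicemapping";

// Escape XML special characters so that model output such as "a < b & c"
// cannot break the SSML document sent to Text-to-Speech.
function escapeXml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

export class AzureAi {
  private _openaiurl: string;
  private _openaipikey: string; // matches the "openaipikey" key of the config file
  private _ttsapikey: string;
  private _ttsregion: string;
  private _inProgress: boolean;

  constructor() {
    // The uploaded JSON config is stored in the "config" element.
    const config = (document.getElementById("config") as any).value;
    if (config !== "") {
      const json = JSON.parse(config);
      this._openaiurl = json.openaiurl;
      this._openaipikey = json.openaipikey;
      this._ttsregion = json.ttsregion;
      this._ttsapikey = json.ttsapikey;
    }
    this._inProgress = false;
  }

  // Sends the whole conversation so far plus the new prompt to the Azure OpenAI completions endpoint.
  async getOpenAiAnswer(prompt: string): Promise<string> {
    if (this._openaiurl === undefined || this._inProgress || prompt === "") return "";
    this._inProgress = true; // released by getSpeechUrl, or below on error
    try {
      const conversations = (document.getElementById("conversations") as any).value;
      LAppPal.printMessage(prompt);
      const conversation = conversations + "\n\n## " + prompt;
      const m = {
        prompt: `##${conversation}\n\n`,
        max_tokens: 300,
        temperature: 0,
        frequency_penalty: 0,
        presence_penalty: 0,
        top_p: 1,
        stop: ["#", ";"], // stop before the model starts writing the next "##" user turn
      };
      const response = await fetch(this._openaiurl, {
        method: "POST",
        headers: {
          "Content-Type": "application/json",
          "api-key": this._openaipikey,
        },
        body: JSON.stringify(m),
      });
      if (!response.ok) throw new Error(`Azure OpenAI request failed: ${response.status}`);
      const json = await response.json();
      const answer: string = json.choices[0].text;
      LAppPal.printMessage(answer);
      (document.getElementById("reply") as any).value = answer;
      // Keep the user's turn as well as the answer, so the next call sends the whole conversation.
      (document.getElementById("conversations") as any).value = conversation + "\n\n" + answer;
      return answer;
    } catch (e) {
      this._inProgress = false; // do not block later requests after a failure
      throw e;
    }
  }

  // Converts the answer to speech, loads it into the "voice" audio element, and returns its object URL.
  async getSpeechUrl(language: string, text: string): Promise<string | undefined> {
    try {
      if (this._ttsregion === undefined || text === "") return undefined;
      const requestHeaders = new Headers();
      requestHeaders.set("Content-Type", "application/ssml+xml");
      requestHeaders.set("X-Microsoft-OutputFormat", "riff-8khz-16bit-mono-pcm");
      requestHeaders.set("Ocp-Apim-Subscription-Key", this._ttsapikey);
      // First female voice whose name starts with the language code.
      const voice = LANGUAGE_TO_VOICE_MAPPING_LIST.find(
        (c) => c.voice.startsWith(language) && c.IsMale === false
      )?.voice;
      if (voice === undefined) throw new Error(`No female voice mapped for ${language}`);
      const ssml = `<speak version='1.0' xml:lang='${language}'>
  <voice xml:lang='${language}' xml:gender='Female' name='${voice}'>${escapeXml(text)}</voice>
</speak>`;
      const response = await fetch(`https://${this._ttsregion}.tts.speech.microsoft.com/cognitiveservices/v1`, {
        method: "POST",
        headers: requestHeaders,
        body: ssml,
      });
      if (!response.ok) throw new Error(`Text-to-speech request failed: ${response.status}`);
      const blob = await response.blob();
      const url = window.URL.createObjectURL(blob);
      const audio = document.getElementById("voice") as HTMLAudioElement;
      audio.src = url;
      LAppPal.printMessage(`Load Text to Speech url`);
      return url;
    } finally {
      this._inProgress = false; // the request cycle is over, whatever happened
    }
  }

  // Sends the recorded audio to the Speech-to-Text REST API for short audio.
  async getTextFromSpeech(language: string, data: Blob): Promise<string> {
    if (this._ttsregion === undefined) return "";
    LAppPal.printMessage(language);
    const requestHeaders = new Headers();
    requestHeaders.set("Accept", "application/json;text/xml");
    requestHeaders.set("Content-Type", "audio/wav; codecs=audio/pcm; samplerate=16000");
    requestHeaders.set("Ocp-Apim-Subscription-Key", this._ttsapikey);
    // The short-audio REST API does not accept WebM, so convert the MediaRecorder output to WAV.
    const wav = await getWaveBlob(data, false);
    const response = await fetch(
      `https://${this._ttsregion}.stt.speech.microsoft.com/speech/recognition/conversation/cognitiveservices/v1?language=${encodeURIComponent(language)}`,
      { method: "POST", headers: requestHeaders, body: wav }
    );
    if (!response.ok) throw new Error(`Speech-to-text request failed: ${response.status}`);
    const json = await response.json();
    // DisplayText is absent when nothing was recognized; fall back to an empty prompt.
    return json.DisplayText ?? "";
  }
}
```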
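getSpeechUrl picks the first female voice whose name starts with the language code. Based only on the two fields the code reads (voice and IsMale), a minimal sketch of that lookup with a fallback to any voice for the language might look like this. VoiceMapping, pickVoice, and SAMPLE_VOICES are hypothetical and are not the project's actual mapping file; the entries are Azure neural voices (zh-HK-HiuMaanNeural and en-US-JennyNeural are female, zh-HK-WanLungNeural and en-US-GuyNeural are male).

```typescript
// Shape of one entry, based on the fields getSpeechUrl reads (hypothetical type name).
interface VoiceMapping {
  voice: string; // e.g. "zh-HK-HiuMaanNeural"; starts with the locale
  IsMale: boolean;
}

// Illustrative entries only; the project's languagetovoicemapping file is larger.
const SAMPLE_VOICES: VoiceMapping[] = [
  { voice: "en-US-GuyNeural", IsMale: true },
  { voice: "en-US-JennyNeural", IsMale: false },
  { voice: "zh-HK-WanLungNeural", IsMale: true },
  { voice: "zh-HK-HiuMaanNeural", IsMale: false },
];

// Prefer a voice of the requested gender for the locale (female by default, as in
// getSpeechUrl), else any voice for the locale. Returns undefined if the locale is unmapped.
function pickVoice(list: VoiceMapping[], language: string, preferMale = false): string | undefined {
  // Matching "<locale>-" rather than just the locale avoids partial-prefix matches.
  const forLanguage = list.filter((c) => c.voice.startsWith(`${language}-`));
  const preferred = forLanguage.find((c) => c.IsMale === preferMale);
  return (preferred ?? forLanguage[0])?.voice;
}
```

If male voices are allowed, the SSML's hard-coded xml:gender='Female' attribute would also need to follow the chosen entry.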

LAppLive2DManager startVoiceConversation method

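```typescript
// Method of LAppLive2DManager; AzureAi, LAppDefine and LAppPal are imported in that file.
public startVoiceConversation(language: string, data: Blob): void {
  for (let i = 0; i < this._models.getSize(); i++) {
    if (LAppDefine.DebugLogEnable) {
      LAppPal.printMessage(`startConversation`);
    }
    // Run the pipeline whether or not debug logging is enabled:
    // speech -> text -> Azure OpenAI answer -> speech audio -> lip-synced motion.
    const azureAi = new AzureAi();
    azureAi
      .getTextFromSpeech(language, data)
      .then((text) => {
        (document.getElementById("prompt") as any).value = text;
        return azureAi.getOpenAiAnswer(text);
      })
      .then((ans) => azureAi.getSpeechUrl(language, ans))
      .then((url) => {
        if (url === undefined) return; // nothing to say (empty prompt or missing TTS config)
        this._models.at(i)._wavFileHandler.loadWavFile(url);
        this._models
          .at(i)
          .startRandomMotion(
            LAppDefine.MotionGroupTapBody,
            LAppDefine.PriorityNormal,
            this._finishedMotion
          );
      })
      .catch((e) => LAppPal.printMessage(`Conversation failed: ${e}`));
  }
}
```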
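Because startVoiceConversation creates a new AzureAi for every call, the _inProgress flag only protects that one instance, so two quick recordings can still start overlapping conversations. One way to guard across calls, sketched here as an assumption rather than the project's code, is a single shared guard that drops new requests while one is running and always releases itself in finally. SingleFlight and runExclusive are hypothetical names.

```typescript
// Hypothetical helper: runs at most one task at a time and drops calls made while busy.
class SingleFlight {
  private busy = false;

  // Returns the task's result, or undefined if another task is still running.
  async runExclusive<T>(task: () => Promise<T>): Promise<T | undefined> {
    if (this.busy) return undefined;
    this.busy = true;
    try {
      return await task();
    } finally {
      this.busy = false; // released even when the task throws
    }
  }

  get isBusy(): boolean {
    return this.busy;
  }
}
```

A single SingleFlight instance shared by LAppLive2DManager could wrap the whole speech, answer, and audio chain, which makes the per-instance _inProgress flag unnecessary for this purpose.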

Simulate ChatGPT in Azure OpenAI

It is very simple! You just keep the conversation history and send the whole conversation to the Azure OpenAI Completions API each time.

There is a Node package called chatgpt, which is a hack to call ChatGPT from Node.js. It introduces conversationId and parentMessageId to track conversations.

In our case, we simply keep sending the whole conversation to the Azure OpenAI model.
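Keeping the conversation is mostly string bookkeeping. A minimal sketch, assuming the same layout the app uses (user turns prefixed with "## ", turns separated by blank lines, and "#" as a stop sequence so the model stops before writing the next user turn), keeps the turns in an array and drops the oldest ones once a budget is exceeded, because text-davinci-003 has a context window of about 4,000 tokens shared by prompt and completion. ConversationHistory and maxChars are hypothetical, and a character budget is only a rough stand-in for counting tokens.

```typescript
type Turn = { role: "user" | "assistant"; text: string };

// Hypothetical helper that keeps the whole conversation and renders it as a completion prompt.
class ConversationHistory {
  private turns: Turn[] = [];
  private readonly maxChars: number; // rough prompt budget (an assumption, not a token count)

  constructor(maxChars = 6000) {
    this.maxChars = maxChars;
  }

  addUser(text: string): void {
    this.turns.push({ role: "user", text });
  }

  addAssistant(text: string): void {
    this.turns.push({ role: "assistant", text: text.trim() });
  }

  // User turns start with "## "; the trailing blank line invites the model to answer.
  toPrompt(): string {
    const render = (ts: Turn[]) =>
      ts.map((t) => (t.role === "user" ? `## ${t.text}` : t.text)).join("\n\n") + "\n\n";
    let start = 0;
    // Drop the oldest turns until the prompt fits, but always keep the latest turn.
    while (start < this.turns.length - 1 && render(this.turns.slice(start)).length > this.maxChars) {
      start++;
    }
    return render(this.turns.slice(start));
  }
}
```

Trimming from the oldest end keeps the most recent context, which is usually what a follow-up question refers to.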

GitHub Repo

Project Source

https://github.com/wongcyrus/AzureOpenAILive2DChatbot

Remember to follow the Live2D instructions! You need to download and copy the Core and SDK files.

I suggest using GitHub Codespaces for development.

Demo Site

https://github.com/wongcyrus/AzureOpenAILive2DChatbotDemo

I just copied some files from the production build.

Conclusion

This project is a single-page application and 100% serverless. It is possible to wrap the Azure OpenAI API with Azure API Management, deploy to Azure Static Web Apps, and use Azure Functions to get fine-grained control over usage.

For education, we are considering using a fine-tuned or customized model to build a virtual tutor that accurately answers our course-related questions.

For fun, it is just like a virtual girlfriend, and you can chat with her about anything!

Project collaborators include Lo Hau Yin Samuel and Shing Seto, plus three Microsoft Student Ambassadors, Andy Lum, Peter Liu, and Jerry Lee, from the IT114115 Higher Diploma in Cloud and Data Centre Administration.

About the Author

Cyrus Wong is a senior lecturer in the Department of Information Technology (IT) at the Hong Kong Institute of Vocational Education (Lee Wa... and he focuses on teaching public cloud technologies. He is a Microsoft Learn for Educators Ambassador and a Microsoft Azure MVP from Hong Kong.

Updated Feb 17, 2023


Educator Developer Blog
