
ConfigMgr: Avoiding Remote Management Point Pitfalls


Hello!

I’m Pavel Yurenev, a Support Escalation Engineer specializing in Microsoft Configuration Manager at Microsoft Customer Service & Support (CSS). As Reactive Support, we assist customers with issues arising from Microsoft software products. Unfortunately, some product configurations, although supported, are hard to support in practice.

Today, I want to discuss certain design features of Configuration Manager Management Points (MPs) and provide guidance for architects and administrators.

TL;DR:

Avoid installing Management Points in locations remote from the Site Server unless:

- the network latency between the MP and the Site Server is low, and/or

- the MP's clients must not reach the Site Server's network location (DMZ scenario).

How the Management Point works:

The MP role is vital for all ConfigMgr operations because it connects clients to the site's SQL database. Having no working MPs is usually a good reason to log a Severity A (aka CritSit) ticket with Microsoft CSS.

At a high level, there are two types of client-MP interactions:

- Client fetches data from the database: think policies or service locations (like Content Location requests).

- Client uploads data to the database: think inventory or state/status messages.

The first flow is “read-only”. The MP binaries simply convert the client's HTTP or HTTPS request into a SQL query and forward it to the backend SQL database. The answer is returned to the client, and the database itself is left unchanged.

The second flow is meant to update the database with data provided by the clients. Normally, MPs do not have the SQL access rights to do this and leave the data inserts to the Site Server role, and this is where remote MPs run into trouble.

Clients upload data to Management Points over HTTP(S), with BITS (Background Intelligent Transfer Service) providing bandwidth control and reliability.

The MP converts the uploaded data to files, normally one file per uploaded data item. If the MP is remote from the Site Server, it places these files in the MP Outbox folder that corresponds to the data type. File sizes range from a kilobyte (status message) to tens of megabytes (full inventory).

Next, a dedicated MP component, SMS_FILE_DISPATCH_MANAGER (MPFDM), moves the files from the MP Outboxes to the Site Server Inboxes for processing. Updating the database is the Site Server's responsibility.

Caveat:

The important part here is that MPFDM uses the SMB protocol to move the files, and both the source and target folders carry specially crafted ACL sets. As a result, moving even a small file can take up to fifteen network roundtrips, depending on multiple factors.

This makes the file move process particularly sensitive to network latency. Since the files are mostly small, network bandwidth matters little.

By contrast, the HTTP(S) protocol that clients use to talk to the MP has been optimized for high-latency connections since the early days of the web. It therefore usually makes no sense to install a Management Point at a location remote from the Site Server.

Lab tests and math:

An upper estimate of the file transfer rate at a 100 ms roundtrip latency, assuming 10 roundtrips per file:

- 100 ms latency × 10 roundtrips = 1 second to move a file.

- 1 file per second = 60 files moved per minute.
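The estimate above can be written as a small Python function. This is a minimal sketch that models each file move as a fixed number of sequential SMB roundtrips and nothing else; the function name and the default of 10 roundtrips per file are illustrative assumptions, not ConfigMgr settings.

```python
def max_files_per_minute(rtt_ms: float, roundtrips_per_file: int = 10) -> float:
    """Upper bound on MPFDM throughput, assuming each file move costs
    `roundtrips_per_file` sequential SMB roundtrips and nothing else."""
    seconds_per_file = rtt_ms * roundtrips_per_file / 1000.0
    return 60.0 / seconds_per_file


# Print the bound for a few roundtrip latencies
for rtt in (25, 45, 86, 100):
    print(f"{rtt:>3} ms -> {max_files_per_minute(rtt):6.1f} files/min")
```

`max_files_per_minute(100)` gives 60.0, matching the estimate above. At 25 ms it gives 240.0, roughly twice the 120 files per minute measured in the lab at that latency, which is consistent with real throughput staying below this bound.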

The measured numbers are even lower:

| Latency test | Avg. latency | Avg. files moved / min |
|---|---|---|
| Zero | <1 ms | 450 |
| Low | 25 ms | 120 |
| Mild | 45 ms | 53 |
| High | 86 ms | 28 |

Suppose 1 000 clients talk to the remote MP, and the administrator deploys a simple non-OSD Task Sequence (TS) of 10 steps to them. This generates one Status Message per step plus one each for TS start and completion, i.e. 12 messages per client.

Running this TS therefore generates 12 000 messages, which the MP converts to files. At the upper-estimate rate of 60 files per minute, MPFDM needs at least 12 000 / 60 = 200 minutes to process this backlog, delaying reporting by more than 3 hours.

Meanwhile, the payload of these messages is no more than 12 000 × 1 KB ≈ 12 MB. Even over a connection as poor as 1 Mbps, uploading it via HTTP(S) to an MP close to the Site Server would take less than 2 minutes.
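The Task Sequence arithmetic can be reproduced in a few lines of Python. This sketch assumes, as the text does, two extra status messages per client (TS start and completion), about 1 KB per message, decimal units (1 MB = 1 000 KB), and the 60 files per minute upper estimate; all function names are hypothetical.

```python
def status_messages(clients: int, ts_steps: int) -> int:
    # one status message per TS step, plus one for TS start and one for completion
    return clients * (ts_steps + 2)


def mpfdm_drain_minutes(files: int, files_per_minute: float) -> float:
    # minimum time for MPFDM to move the backlog over SMB
    return files / files_per_minute


def http_upload_minutes(messages: int, kb_per_message: float, link_mbps: float) -> float:
    # payload in megabits (decimal units: 1 MB = 1000 KB, 1 byte = 8 bits)
    megabits = messages * kb_per_message / 1000 * 8
    return megabits / link_mbps / 60


msgs = status_messages(1000, 10)
print(msgs, mpfdm_drain_minutes(msgs, 60), http_upload_minutes(msgs, 1, 1))
# prints: 12000 200.0 1.6
```

The contrast is the point: the same 12 MB that takes 200 minutes to trickle through MPFDM fits into about 1.6 minutes of HTTP(S) upload on a 1 Mbps link.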

When it is too late:

Sometimes customers open a Support Case only after noticing that the backlog in the MP Outboxes is approaching millions of files and is months old. At high latency, MPFDM never catches up with such a backlog, so there is nothing that can be done on the ConfigMgr side except pointing the clients to another MP closer to the Site Server.
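Whether a backlog can ever drain comes down to comparing the rate at which clients produce files with the rate at which MPFDM moves them. The sketch below is a simple constant-rate model, an assumption made for illustration; the arrival rate in the example is hypothetical, and the drain rate is taken from the high-latency row of the lab table.

```python
import math


def minutes_to_drain(backlog: float, arrivals_per_min: float, drain_per_min: float) -> float:
    """Minutes until the outbox is empty, assuming constant rates."""
    if backlog <= 0:
        return 0.0
    net = drain_per_min - arrivals_per_min
    if net <= 0:
        return math.inf  # new files arrive at least as fast as MPFDM moves them
    return backlog / net


def backlog_after(backlog: float, arrivals_per_min: float, drain_per_min: float, minutes: float) -> float:
    """Backlog size after `minutes`, never below zero."""
    return max(0.0, backlog + (arrivals_per_min - drain_per_min) * minutes)
```

With a hypothetical 40 new files per minute against 28 moved per minute, `minutes_to_drain(1_000_000, 40, 28)` returns `inf`, and `backlog_after(1_000_000, 40, 28, 24 * 60)` shows the backlog growing to 1 017 280 files after one day.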

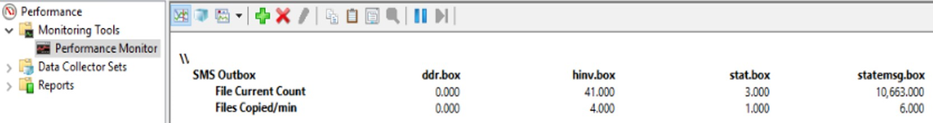

The screenshot above shows real-world performance counters (current backlogs and file copy rates) in a high-latency scenario. State messages are the most likely to accumulate, in MP\Outboxes\statemsg.box. Note that the copy rate counter drops to 0 when there are no files to copy.

As a palliative measure, the administrator can move the files with multi-threaded robocopy, for example:

```cmd
robocopy <MP Installation Folder>\MP\OUTBOXES\statemsg.box\ \\<Site Server SMS_XXX share>\inboxes\auth\statesys.box\incoming\ *.smx /mov /mt:16
```

Alternatively, stop the MPFDM component, compress the backlogged files, and move them to the Site Server as a single archive. Then unpack them into the proper inbox folder and restart MPFDM.
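The compress-and-move workaround could be scripted roughly as follows. This is a minimal Python sketch using the standard `zipfile` module; the function names, the `.SMX` filter, and the optional deletion of source files are assumptions for illustration. It does not stop or start MPFDM, and the folders you pass in (the MP outbox and the Site Server inbox) should match what MPFDM.log reports in your environment.

```python
import zipfile
from pathlib import Path


def _backlog_files(outbox: Path) -> list[Path]:
    # MPFDM.log shows upper-case ".SMX" names; compare suffixes case-insensitively
    return sorted(p for p in outbox.iterdir() if p.is_file() and p.suffix.lower() == ".smx")


def pack_backlog(outbox: Path, archive: Path, remove_sources: bool = False) -> int:
    """Compress all .SMX files from `outbox` into one zip; returns the file count."""
    files = _backlog_files(outbox)
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for f in files:
            zf.write(f, arcname=f.name)  # flat layout: file names only
    if remove_sources:
        # runs only if the archive was written and closed without an exception
        for f in files:
            f.unlink()
    return len(files)


def unpack_backlog(archive: Path, inbox: Path) -> int:
    """Extract the archive into `inbox`; returns the number of files extracted."""
    with zipfile.ZipFile(archive) as zf:
        names = zf.namelist()
        zf.extractall(inbox)
    return len(names)
```

One would run `pack_backlog` on the MP while MPFDM is stopped, copy the single archive to the Site Server, and run `unpack_backlog` there into the inbox folder MPFDM normally writes to. Removing the source files only after the archive is closed avoids losing data if compression fails, and presumably also keeps MPFDM from moving the same files again once it restarts.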

Note that MPFDM.log records both the source and destination folders:

```text
~Moved file E:\SMS\MP\OUTBOXES\statemsg.box\RA6KXTDY.SMX to \\CBH-PS1SITE.cbhierarchy.com\SMS_PS1\inboxes\auth\statesys.box\incoming\RA6KXTDY.SMX.
```
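Because each successful move is logged with both paths, MPFDM.log can be summarized to see which outbox is actually being drained. This Python sketch assumes the message text looks exactly like the excerpt above; if your log lines carry extra trailing fields, the regular expression would need adjusting. The function names are hypothetical.

```python
import re
from collections import Counter
from pathlib import PureWindowsPath
from typing import Iterable, Optional, Tuple

# Matches "~Moved file <source> to <destination>." and drops the trailing period
MOVED_RE = re.compile(r"~?Moved file (?P<src>.+?) to (?P<dst>.+?)\.?$")


def parse_moved(line: str) -> Optional[Tuple[str, str]]:
    """Return (source, destination) for a move line, or None for other lines."""
    m = MOVED_RE.search(line.strip())
    return (m["src"], m["dst"]) if m else None


def moves_per_outbox(lines: Iterable[str]) -> Counter:
    """Count logged moves per source outbox folder, e.g. "statemsg.box"."""
    counts: Counter = Counter()
    for line in lines:
        parsed = parse_moved(line)
        if parsed:
            counts[PureWindowsPath(parsed[0]).parent.name] += 1
    return counts
```

Fed the excerpt above, `moves_per_outbox` returns `Counter({'statemsg.box': 1})`; run over a whole log, it shows which outboxes MPFDM is still moving files from.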

Credits:

I would like to thank Justin Edgerton (Cloud Solutions Architect) for the invaluable network tests and the idea for this article.

Also, I’d like to thank Viacheslav Denisov (ex-MSFT Support Engineer) for the real-world case and the performance counters screenshot.
