- Home

- Azure Data

- Azure Synapse Analytics Blog

- Build a Structured Data Lake with Azure Synapse and Database Templates

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Overview

Azure Synapse database templates were announced at Ignite earlier this month, allowing users to kickstart the creation of lake databases without writing any code. Lake databases are a powerful tool to add structure to your data lake, using it more like a relational system than a blob store. This article will walk through the basics of creating a lake database, loading it with data, and then using the metadata provided by the templates to light up experiences in Power BI.

Video

You can view a video version of this tutorial here:

https://www.microsoft.com/en-us/videoplayer/embed/RWQZPz

Prerequisites

- An Azure subscription

- Azure Synapse Analytics workspace

- Azure Data Lake Storage Gen2 (ADLS)

Using database templates to create a lake database

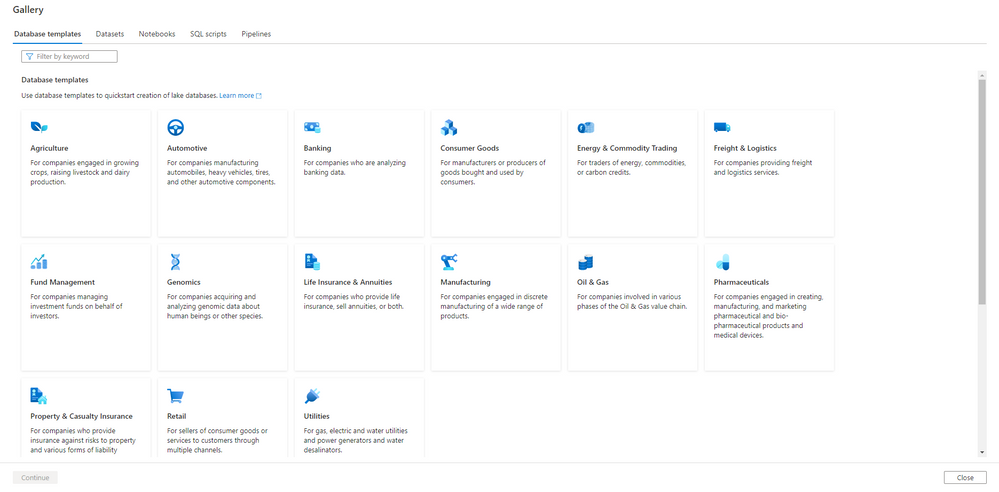

To get started creating your lake database, navigate to the gallery in Azure Synapse and open the database templates tab. Here you will see a list of templates for a variety of industries that we currently support. Choose the template that best matches your industry. For this article we will pick Retail.

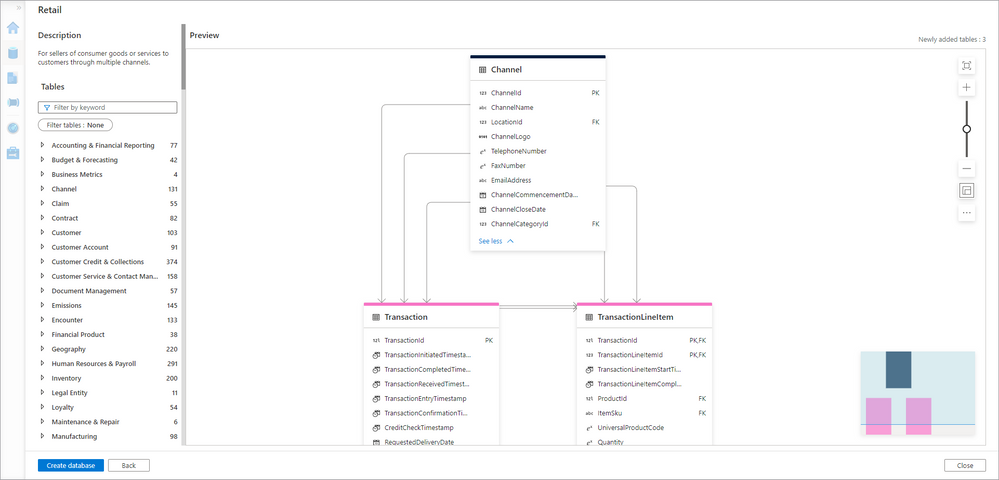

Choosing a template and selecting Continue opens the canvas where you can explore the template to find the tables you want to use. This page is focused solely on identifying the items from the template to start with, so don’t worry if a table isn’t an exact match. For example, we selected Transaction, TransactionLineItem, and Channel. Once that’s done select Create database.

You will land on the lake database tab, where you can customize the tables in your database and configure the storage settings. First, set the database storage settings to the ADLS path where you want the data to be stored. Next, you’ll need to customize the table schema to match your data requirements. By selecting the table on the canvas, you can view the full schema and customize any component of it you want. For example, we removed most of the columns from Transaction, keeping only TransactionId, CustomerId, and SalesChannelId. We then renamed SalesChannelId to ChannelId since our channel ids represent more generic types of channels.

For full details of customizing your database, review the doc here.

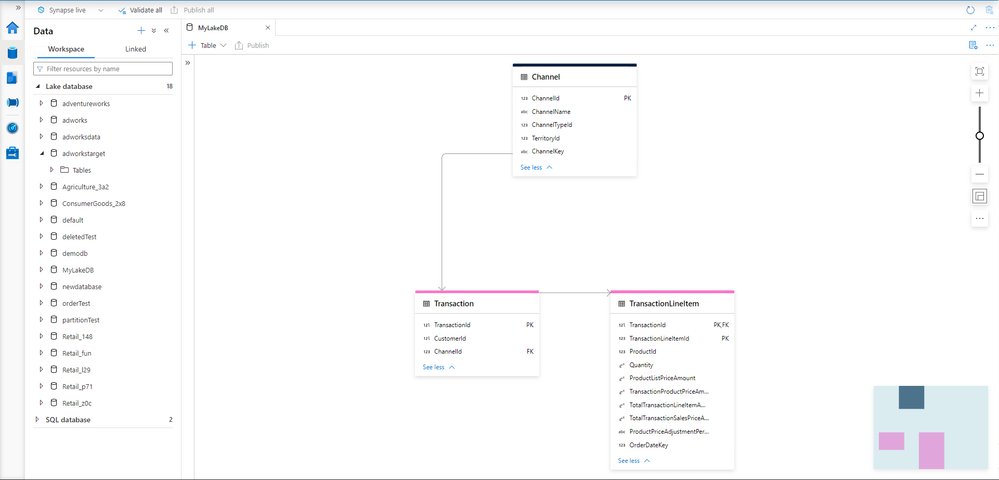

We’ve customized our tables to fit the requirements, as shown the screenshot below. In addition, the storage settings were updated and we renamed the database to MyLakeDB.

The database now has the schema we need for the Channel, Transaction, and TransactionLineItem tables. We can publish the database to save our changes and create the metadata definitions within Synapse. This makes the metadata consumable in other applications like Power BI.

Building pipelines to load data into the lake db

At this point, we have an empty database created. The folders in our data lake are either blank or haven’t been created yet. Further, querying the database will return no data. There are two main ways to load data into a lake database: Synapse data flows or the map data tool. Data flows are an existing generally available capability that allows for transformation-first approach to loading data. The map data tool is a new preview feature that auto-generates pipelines from mappings created using a data-first approach. You can learn more about the map data tool here, but we will be using data flows to load the data in this article.

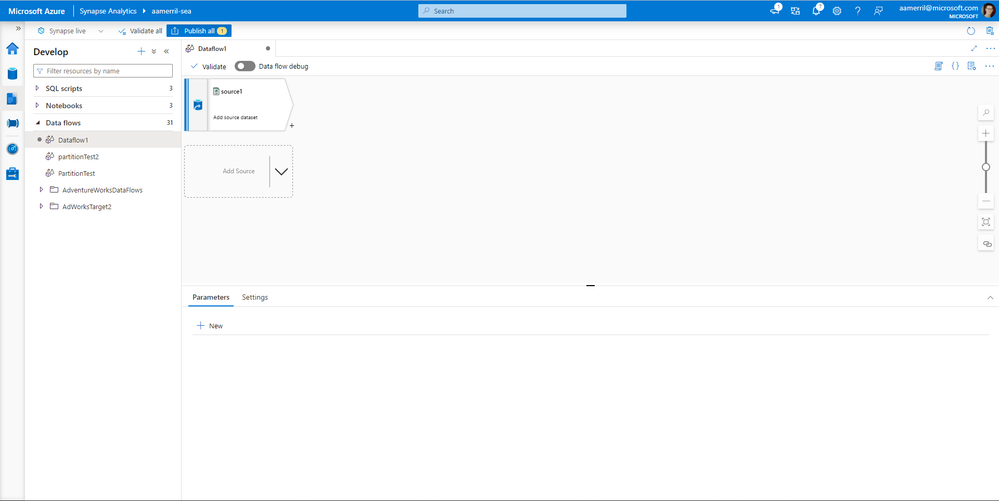

I will need to create 3 data flows, one for each table in my database. I start by creating a blank data flow and configuring the source to point to the dataset I want to load into my database. My source dataset is a CSV file with sales data that I will map into my target database.

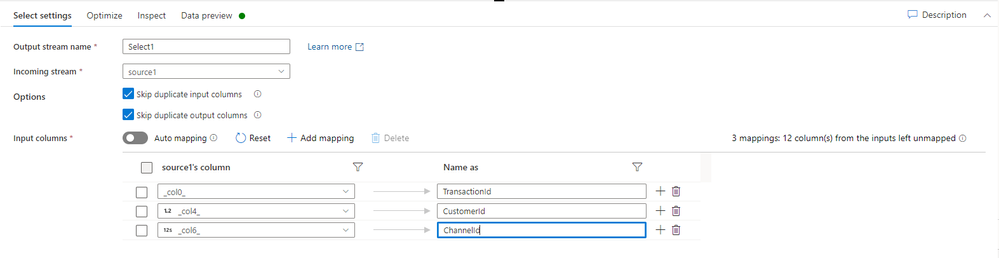

Synapse data flows let me apply transformations to the source dataset until it fits the schema for my target destination. The specific transformations required will depend on your data, but in this use case we are using only a select to remove some unneeded columns. For more information on using Synapse data flows, see this article here.

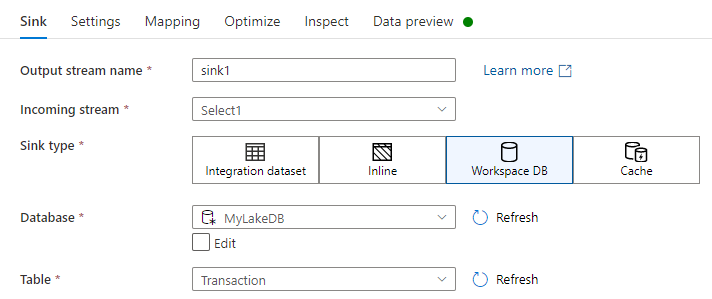

In addition, we use the Workspace DB sink type to write data into the lake database. The Workspace DB option provides us a list of databases in our Synapse workspace to use as targets. It currently supports lake databases and spark databases. Using the dropdowns in the sink settings, select the database and table you want to write data to. The connector will then pull the storage settings from the published database and use them when writing the data.

I will repeat this process for each of my tables, resulting in 3 separate data flows.

Lastly, we will need to create a pipeline to serve as a wrapper for our data flow. Create a new pipeline using the “+” button on the Integrate tab. Add a data flow activity to it and set the data flow to match the one created above. You can add all 3 data flows to the pipeline as sequential activities. Assuming they are no join order dependencies, sequencing of the data flows shouldn’t matter. Publish your data flows and pipeline to complete the process.

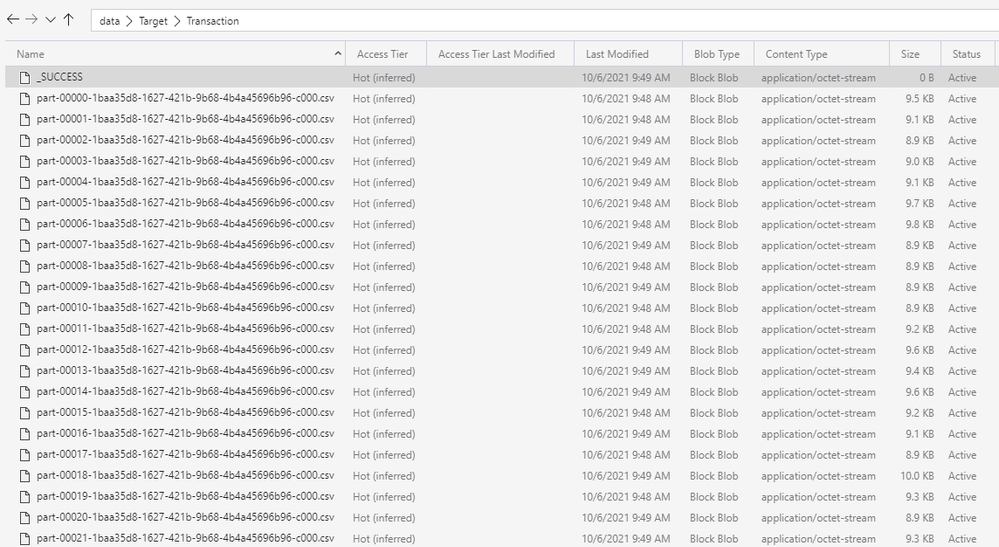

With the pipeline published, you can now execute it to start the loading process. You can monitor the pipeline run using the Monitor tab in Synapse. It should take just a few minutes depending on your data volume. Once the run is complete, head over to Azure Storage Explorer to see the data landed in your lake.

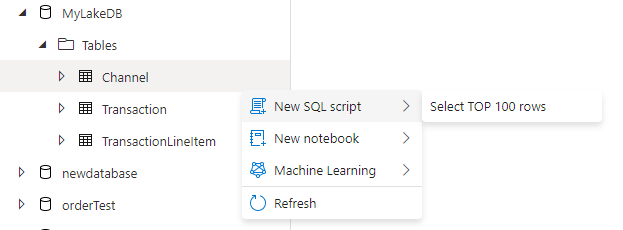

We can verify the lake database is working as expected by opening up Synapse, selecting the table from the left-hand side, and choosing “SELECT TOP 100 ROWS”. This will let us use the SQL Serverless capabilities to view the data in our lake without needing a dedicated SQL cluster.

Using Power BI to build a report from the lake db

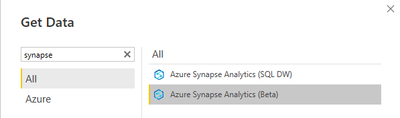

Now that we have our lake database created in Synapse, we can leverage the new Synapse connector in Power BI to simplify the process of creating a dashboard. The new connector, called Azure Synapse Analytics (beta) provides a direct connection to your Synapse workspaces and pulls in meta data from the lake database you created, such as the descriptions and relationships. Choose Get data from Power BI desktop and type “Synapse” into the search box to find the connector.

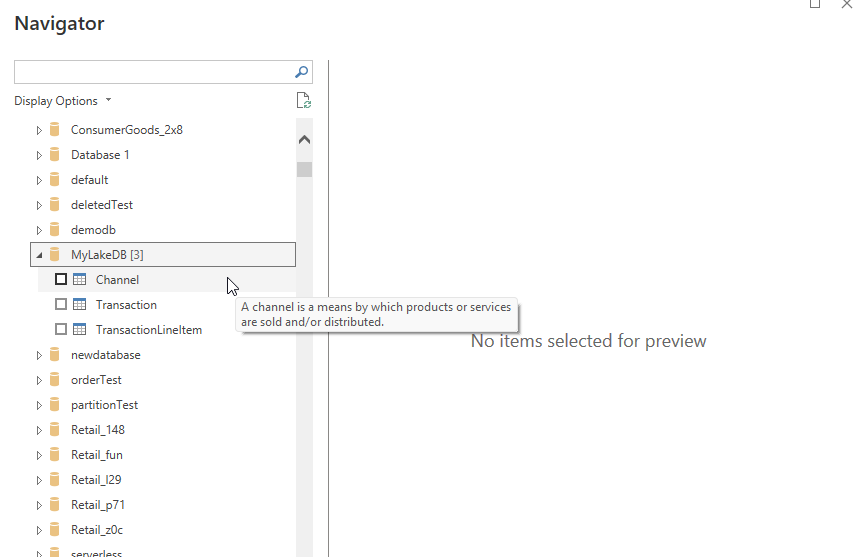

The connector will load all of the Synapse workspace you have access to, and you can filter down to just the one you want. Find the database you created and expand it to see the table. Power BI pulls the description info from each table in Synapse, allowing you to see the description of each table when you hover over it.

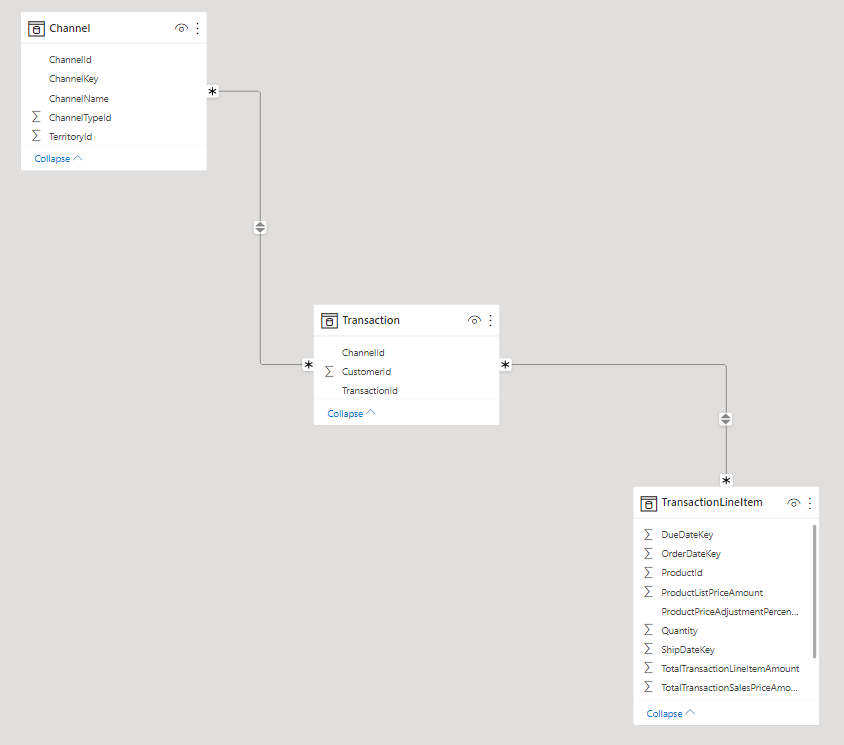

In addition, the relationship information from the lake database is used by Power BI to populate the relationships in the Power BI data model. This allows for business users who might be less familiar with the original lake database to still easily create meaningful reports using the data in the lake.

With the lake database schema loaded into Power BI, users can get to work creating dashboards that answer important questions for their business. In this case, we can now analyze our total revenue by channel in a performant manner.

Summary

As we saw in this article, lake databases and database templates in Azure Synapse Analytics provide an easy and powerful way to add structure and semantics to your data lake. Users can then leverage those semantic values in other apps like Power BI to simplify the creation of reports, and ensure they are using the most accurate and up-to-date data.

To learn more about database templates and lake database in Azure Synapse, check out the links below.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.