Lustre on Azure

January 6, 2020: This content was recently updated to improve readability and to address some technical issues that were pointed out by readers. Thanks for your feedback. -AzureCAT

Microsoft Azure has Lv2 virtual machine (VM) instances that feature NVM Express (NVMe) disks for use in a Lustre filesystem. This is a cost-effective way to provision a high-performance filesystem on Azure. The disks are internal to the physical host and don't have the same service-level agreement (SLA) as premium disk storage, but when coupled with hierarchical storage management (HSM) they provide a fast, on-demand, high-performance filesystem.

This guide outlines setting up a Lustre filesystem and PBS cluster with both AzureHPC (scripts to automate deployment using the Azure CLI) and Azure CycleCloud, and then running the IOR filesystem benchmark. It also covers using the HSM capabilities for archival and backup to Azure Blob Storage and viewing metrics in Log Analytics.

Provisioning with AzureHPC

First, download AzureHPC by cloning the repository with Git:

git clone https://github.com/Azure/azurehpc.git

Next, set up the environment for your shell by running:

source azurehpc/install.sh

Note: This install.sh file should be "sourced" in each bash session where you want to run the azhpc-* commands (alternatively, source it from your ~/.bashrc).

The AzureHPC project contains a Lustre filesystem and a PBS cluster example. To clone this example, run:

azhpc-init \
    -c $azhpc_dir/examples/lustre_combined \
    -d <new-directory-name>

The example has the following variables that must be set in the config file:

| Variable | Description |
| --- | --- |
| resource_group | The resource group for the project |
| storage_account | The storage account for HSM |
| storage_key | The storage key for HSM |
| storage_container | The container to use for HSM |
| log_analytics_lfs_name | The name to use in Log Analytics |
| log_analytics_workspace | The Log Analytics workspace ID |
| log_analytics_key | The Log Analytics key |

Note: Macros exist to get the storage_key using sakey.<storage-account-name>, log_analytics_workspace using laworkspace.<resource-group>.<workspace-name>, and log_analytics_key using lakey.<resource-group>.<workspace-name>.
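If you would rather look these values up by hand than rely on the macros, the Azure CLI can return the same information. The sketch below wraps the three lookups in shell functions. The function names are hypothetical, and whether the AzureHPC macros issue exactly these calls internally is an assumption.

```shell
#!/bin/bash
# Hypothetical helpers that fetch the secrets the config file needs.
# They require a logged-in Azure CLI (az login).

# First access key of a storage account (a value for storage_key).
get_storage_key() {
    az storage account keys list \
        --account-name "$1" \
        --query "[0].value" --output tsv
}

# Workspace (customer) ID of a Log Analytics workspace.
get_la_workspace_id() {
    az monitor log-analytics workspace show \
        --resource-group "$1" --workspace-name "$2" \
        --query customerId --output tsv
}

# Primary shared key of a Log Analytics workspace.
get_la_key() {
    az monitor log-analytics workspace get-shared-keys \
        --resource-group "$1" --workspace-name "$2" \
        --query primarySharedKey --output tsv
}
```

For example, get_storage_key <storage-account-name> prints the key that the sakey macro would otherwise supply.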

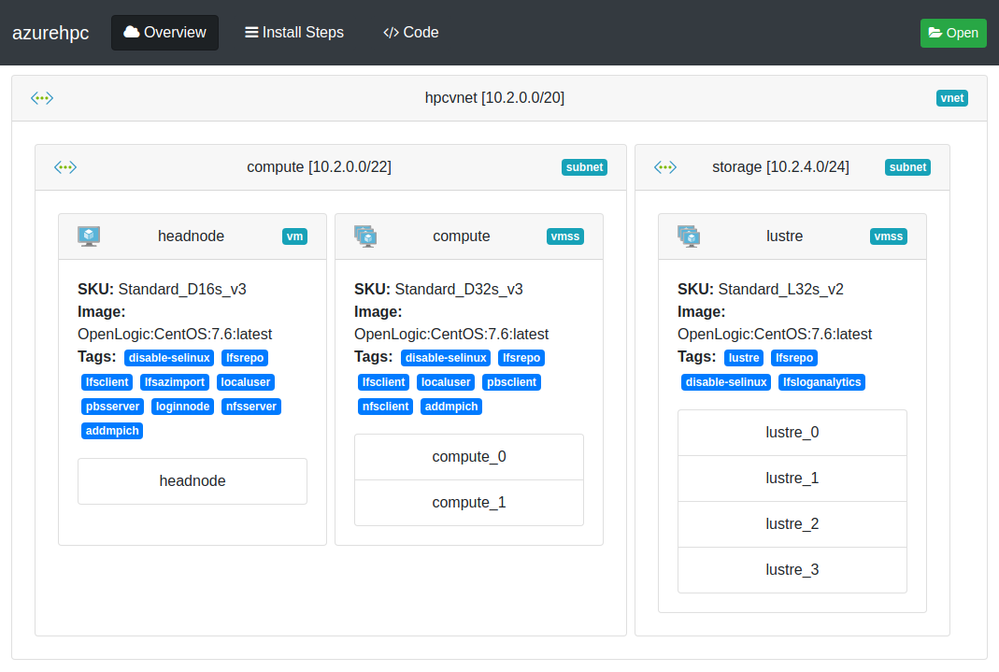

You can also change other values, such as the VM SKU or the number of instances to use. This example has a headnode (D16_v3), two compute nodes (D32_v3), and four Lustre nodes (L32_v2). There is also an AzureHPC web tool that you can use to view a config file, either by clicking Open and loading a local file or by passing a URL, for example, the lustre_combined example.

Figure 1. AzureHPC web tool showing lustre_combined example

Once the config file is set up, run:

azhpc-build

The progress is displayed as it runs. For example:

paul@nuc:~/Microsoft/azurehpc_projects/lustre_test$ azhpc-build

You have 2 updates available. Consider updating your CLI installation.

Thu 5 Dec 10:45:13 GMT 2019 : Azure account: AzureCAT-TD HPC (f5a67d06-2d09-4090-91cc-e3298907a021)

Thu 5 Dec 10:45:13 GMT 2019 : creating temp dir - azhpc_install_config

Thu 5 Dec 10:45:13 GMT 2019 : creating ssh keys for hpcadmin

Generating public/private rsa key pair.

Your identification has been saved in hpcadmin_id_rsa.

Your public key has been saved in hpcadmin_id_rsa.pub.

The key fingerprint is:

SHA256:sM+Wb0bByl4EoxrLV6TdkLEADSP/Mj0w94xIopH034M paul@nuc

The key's randomart image is:

+---[RSA 2048]----+

| .. ++. .o |

|...o ...*. |

|o ..= o=.* |

| o ooB=*o = |

|. .+E*=So . |

| +o.++.o |

| . .=o |

| ...o |

| o. |

+----[SHA256]-----+

Thu 5 Dec 10:45:13 GMT 2019 : creating resource group

Location Name

---------- -------------------------

westeurope paul-azurehpc-lustre-test

Thu 5 Dec 10:45:16 GMT 2019 : creating network

Thu 5 Dec 10:45:23 GMT 2019 : creating subnet compute

AddressPrefix Name PrivateEndpointNetworkPolicies PrivateLinkServiceNetworkPolicies ProvisioningState ResourceGroup

--------------- ------- -------------------------------- ----------------------------------- ------------------- -------------------------

10.2.0.0/22 compute Enabled Enabled Succeeded paul-azurehpc-lustre-test

Thu 5 Dec 10:45:29 GMT 2019 : creating subnet storage

AddressPrefix Name PrivateEndpointNetworkPolicies PrivateLinkServiceNetworkPolicies ProvisioningState ResourceGroup

--------------- ------- -------------------------------- ----------------------------------- ------------------- -------------------------

10.2.4.0/24 storage Enabled Enabled Succeeded paul-azurehpc-lustre-test

Thu 5 Dec 10:45:35 GMT 2019 : creating vmss: compute

Thu 5 Dec 10:45:40 GMT 2019 : creating vm: headnode

Thu 5 Dec 10:45:46 GMT 2019 : creating vmss: lustre

Thu 5 Dec 10:45:52 GMT 2019 : waiting for compute to be created

Thu 5 Dec 10:47:24 GMT 2019 : waiting for headnode to be created

Thu 5 Dec 10:47:26 GMT 2019 : waiting for lustre to be created

Thu 5 Dec 10:48:28 GMT 2019 : getting public ip for headnode

Thu 5 Dec 10:48:29 GMT 2019 : building hostlists

Thu 5 Dec 10:48:33 GMT 2019 : building install scripts

rsync azhpc_install_config to headnode0d5c95.westeurope.cloudapp.azure.com

Thu 5 Dec 10:48:42 GMT 2019 : running the install scripts

Step 0 : install_node_setup.sh (jumpbox_script)

duration: 20 seconds

Step 1 : disable-selinux.sh (jumpbox_script)

duration: 1 seconds

Step 2 : nfsserver.sh (jumpbox_script)

duration: 32 seconds

Step 3 : nfsclient.sh (jumpbox_script)

duration: 28 seconds

Step 4 : localuser.sh (jumpbox_script)

duration: 2 seconds

Step 5 : create_raid0.sh (jumpbox_script)

duration: 21 seconds

Step 6 : lfsrepo.sh (jumpbox_script)

duration: 1 seconds

Step 7 : lfspkgs.sh (jumpbox_script)

duration: 221 seconds

Step 8 : lfsmaster.sh (jumpbox_script)

duration: 25 seconds

Step 9 : lfsoss.sh (jumpbox_script)

duration: 5 seconds

Step 10 : lfshsm.sh (jumpbox_script)

duration: 134 seconds

Step 11 : lfsclient.sh (jumpbox_script)

duration: 117 seconds

Step 12 : lfsimport.sh (jumpbox_script)

duration: 12 seconds

Step 13 : lfsloganalytics.sh (jumpbox_script)

duration: 2 seconds

Step 14 : pbsdownload.sh (jumpbox_script)

duration: 1 seconds

Step 15 : pbsserver.sh (jumpbox_script)

duration: 61 seconds

Step 16 : pbsclient.sh (jumpbox_script)

duration: 13 seconds

Step 17 : addmpich.sh (jumpbox_script)

duration: 4 seconds

Thu 5 Dec 11:00:23 GMT 2019 : cluster ready

Once complete, you can connect to the headnode with the following command:

azhpc-connect -u hpcuser headnode

Provisioning with Azure CycleCloud

This section walks you through setting up a Lustre filesystem and an autoscaling PBSPro cluster where the Lustre client is set up. This process uses an Azure CycleCloud project, which is available here.

Installing the Lustre project and templates

These instructions assume an Azure CycleCloud application server running and a terminal with both Git and the Azure CycleCloud CLI installed.

First, check out the cyclecloud-lfs repository:

git clone https://github.com/edwardsp/cyclecloud-lfs

This repository contains the Azure CycleCloud project and templates. There is an lfs template for the Lustre filesystem and a pbspro-lfs template, which is a modified version of the official pbspro template (from here). The pbspro-lfs template is included in the GitHub project to test the Lustre filesystem. Instructions for adding the Lustre client to another template are found here.

The following commands upload the project and import the templates to Azure CycleCloud:

cd cyclecloud-lfs

cyclecloud project upload <container>

cyclecloud import_template -f templates/lfs.txt

cyclecloud import_template -f templates/pbspro-lfs.txt

Note: Replace <container> with the Azure CycleCloud "locker" you want to use. You can list your lockers by running cyclecloud locker list.

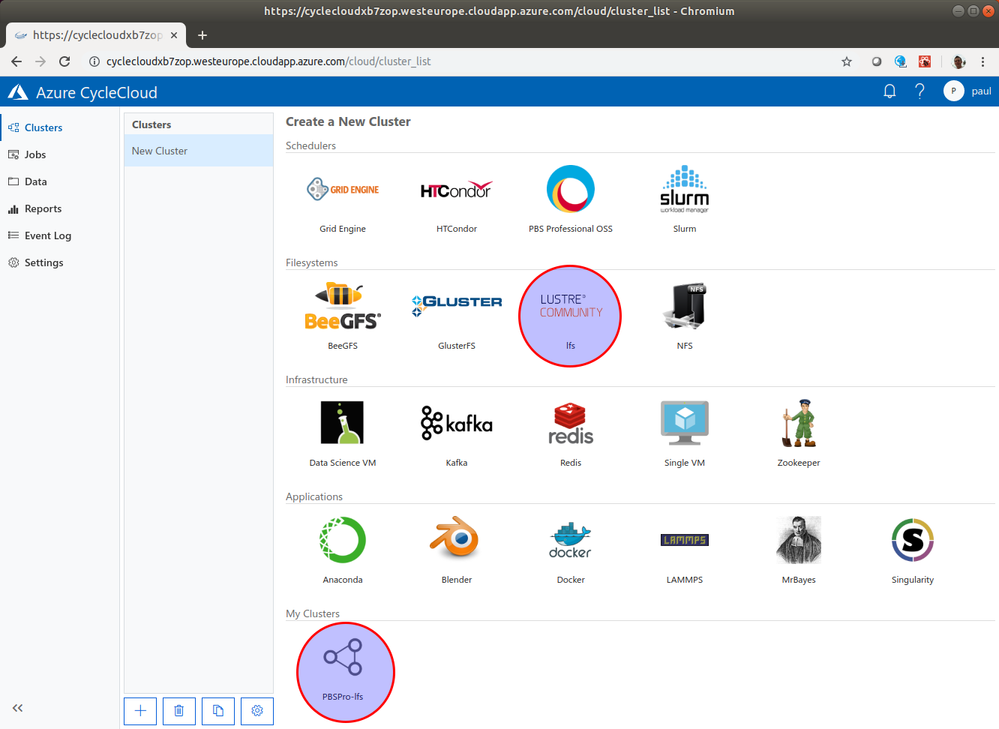

Once these commands are run, you will see the new templates in your Azure CycleCloud web interface, as shown in Figure 2.

Figure 2. Azure CycleCloud web interface

Creating the Lustre Cluster

1. Create the lfs cluster and choose a name, as shown in Figure 3:

Figure 3. Lustre Cluster About

Note: This name is used later in the PBS cluster to reference this filesystem.

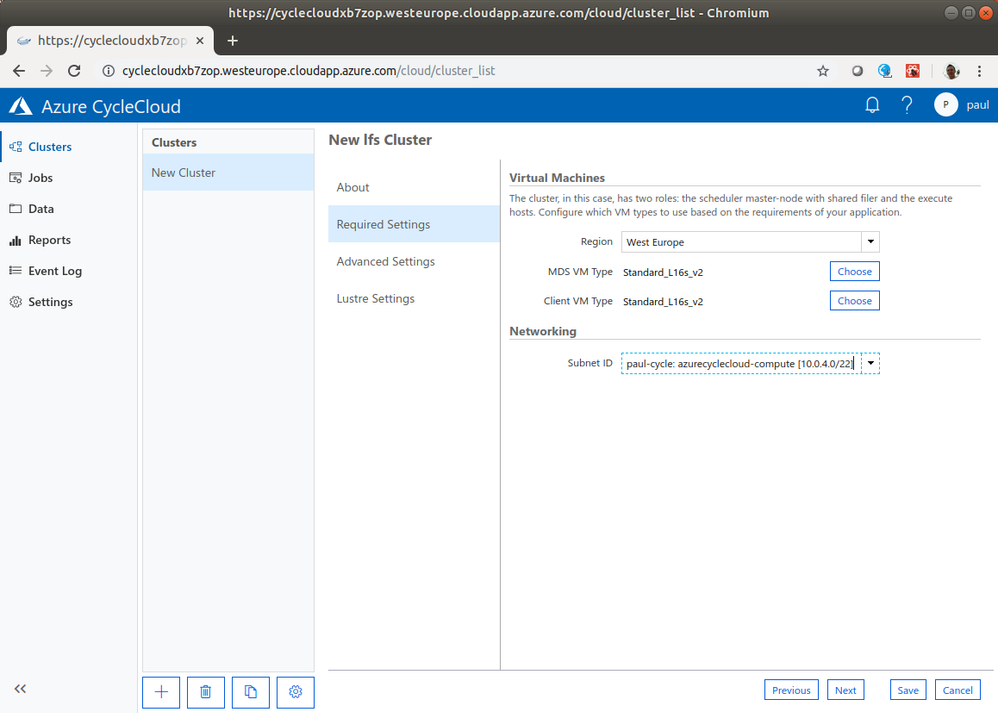

2. Click Next to move to the Required Settings. Here you can choose the region and VM types. Choose only an L_v2 instance type. Going beyond L32_v2 is not recommended because the network throughput does not scale linearly beyond this size. All NVMe disks in the virtual machine are combined in a RAID 0 for the OST.

Figure 4. Lustre Cluster Required Settings

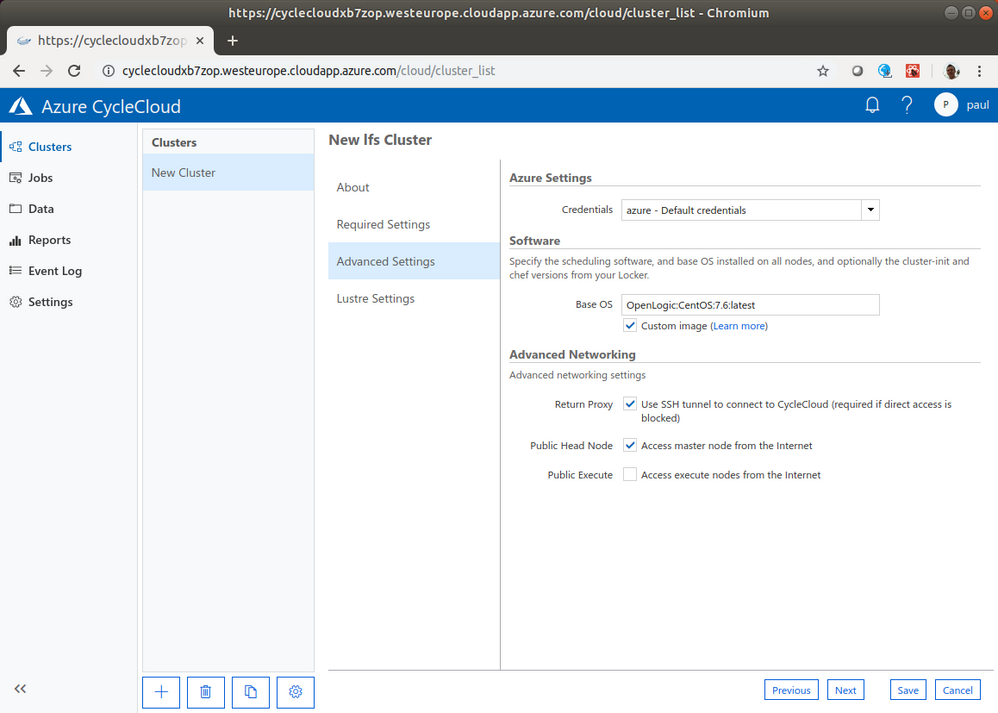

3. Choose the Base OS in Advanced Settings. This determines which version of Lustre to use. The scripts use the Whamcloud repository for Lustre, where RPMs for Lustre 2.10 are available only up to CentOS 7.6 and RPMs for Lustre 2.12 are available for CentOS 7.7.

Note: Both the server and client Lustre versions need to match.

Figure 5. Lustre Cluster Advanced Settings

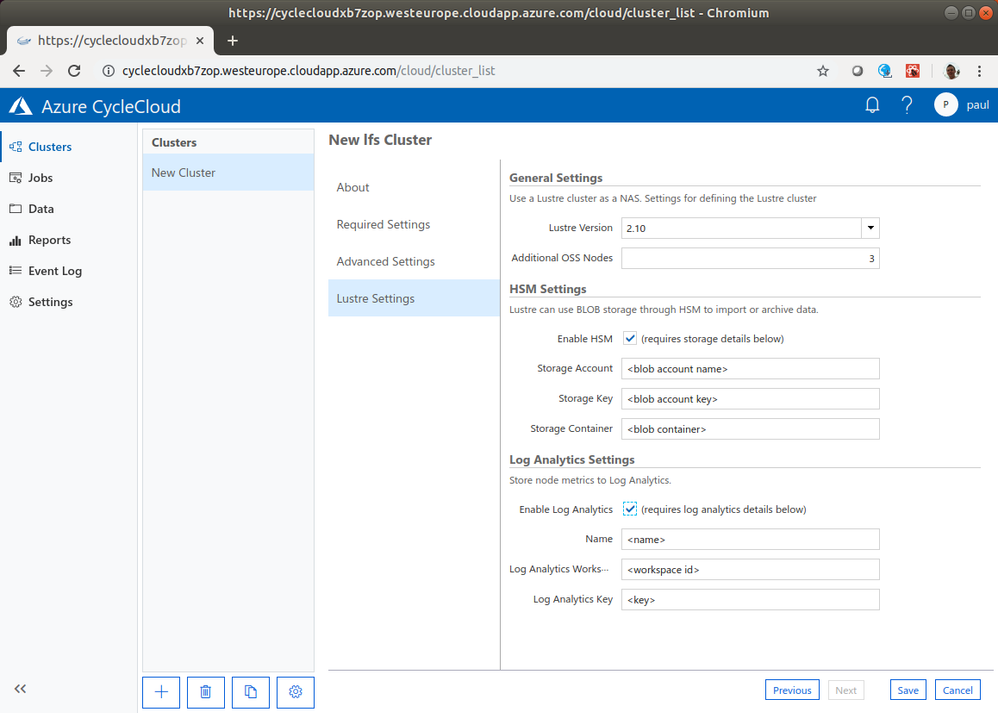

4. In Lustre Settings, you can choose the Lustre version and the number of Additional OSS nodes. The number of OSS nodes is fixed once chosen here and can't be modified without recreating the filesystem.

5. To use HSM, enable the checkbox and provide details for a Storage Account, Storage Key, and Storage Container. All files in the selected container are imported into Lustre when the filesystem is started.

Note: This only populates the metadata, and files are downloaded on demand as they are accessed. Alternatively, they can be restored using the lfs hsm_restore command.

6. To use Log Analytics, enable the checkbox and provide details for the Name, Log Analytics Workspace, and Log Analytics Key. The Name is the log name to use for the metrics.

Figure 6. Lustre Cluster Advanced Settings

Click Save and start the cluster.

Creating the PBS Cluster

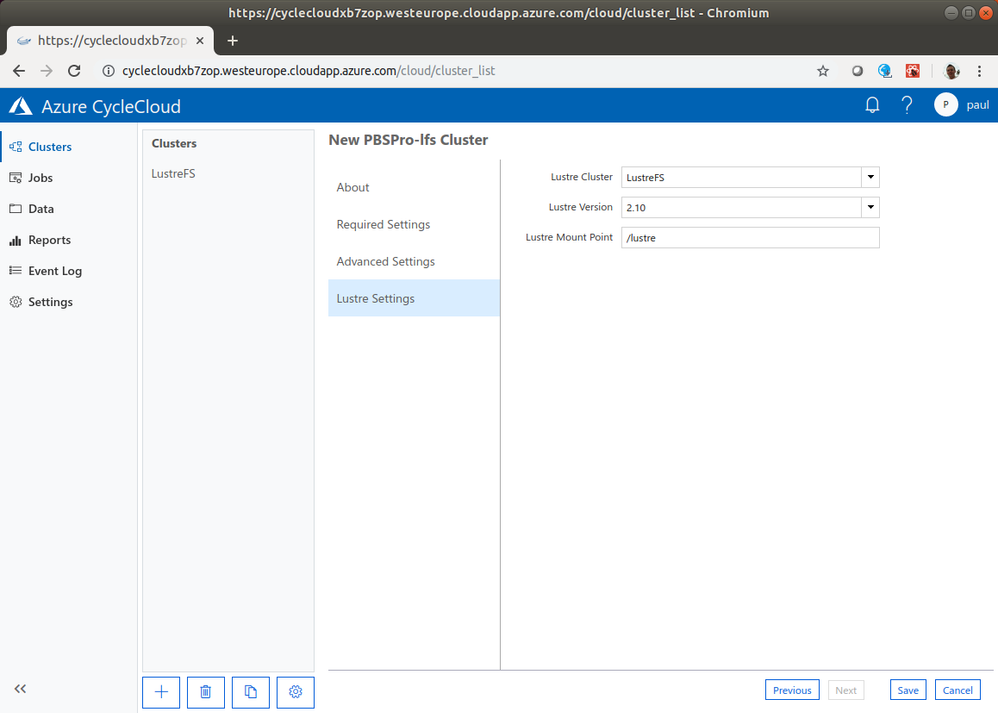

To test the Lustre filesystem, create a pbspro-lfs cluster as follows:

- Name the cluster, select the region, SKUs, and autoscale settings, and choose a subnet with access to the Lustre cluster.

- In the Advanced Settings, make sure you know which version of CentOS you are using. At the time of writing, Cycle CentOS 7 is version 7.6, but you may want to explicitly set the version with a custom image, as the Azure CycleCloud version may be updated.

- In Lustre Settings, choose from the available Lustre clusters in the dropdown menu.

- Make sure the Lustre Version is correct for the OS that is chosen and check that it matches the Lustre cluster.

- Choose the path for Lustre to be mounted on all the clients and click Save.

Figure 7. PBSPro-lfs Cluster Advanced Settings

Once the Lustre Cluster is running, you can start this cluster.
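The version constraints described above (Lustre 2.10 RPMs up to CentOS 7.6, Lustre 2.12 RPMs for CentOS 7.7, and matching client and server versions) can be written down as a small pre-flight check. This is a minimal sketch: the function names and the example OS versions are hypothetical, and it only encodes the two CentOS 7 cases mentioned in this guide.

```shell
#!/bin/bash
# Map a CentOS 7 release to the Lustre series described in this guide:
# 2.10 RPMs up to CentOS 7.6, 2.12 RPMs for CentOS 7.7.
lustre_series_for_centos() {
    local minor
    minor=$(echo "$1" | cut -d. -f2)
    if [ "$minor" -le 6 ]; then
        echo "2.10"
    elif [ "$minor" -eq 7 ]; then
        echo "2.12"
    else
        echo "unknown"
    fi
}

# Succeed only when client and server use the same Lustre series.
versions_match() {
    [ "$1" = "$2" ]
}

server_os="7.6"   # illustrative values, not taken from a real cluster
client_os="7.7"
server=$(lustre_series_for_centos "$server_os")
client=$(lustre_series_for_centos "$client_os")
if versions_match "$server" "$client"; then
    echo "OK: both clusters use Lustre $server"
else
    echo "Mismatch: server $server, client $client"
fi
```

With the illustrative values above, the check reports a mismatch, which is the situation to avoid when the Lustre cluster and the PBS cluster are built from different base images.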

Lustre performance

We're using ior to test the performance. Either the AzureHPC or CycleCloud version can be used, but the commands change slightly depending on the image and OS version used. The following commands relate to the lustre_combined AzureHPC example.

First, connect to the headnode:

azhpc-connect -u hpcuser headnode

Compiling ior requires the MPI compiler, so install it on the headnode:

sudo yum -y install mpich-devel

Now, download and compile ior:

module load mpi/mpich-3.0-x86_64

wget https://github.com/hpc/ior/releases/download/3.2.1/ior-3.2.1.tar.gz

tar zxvf ior-3.2.1.tar.gz

cd ior-3.2.1

./configure --prefix=$HOME/ior

make

make install

Move to the Lustre filesystem:

cd /lustre

Create a PBS job file. For example, run_ior.pbs:

#!/bin/bash

source /etc/profile

module load mpi/mpich-3.0-x86_64

cd $PBS_O_WORKDIR

NP=$(wc -l <$PBS_NODEFILE)

NODES=$(sort -u $PBS_NODEFILE | wc -l)

PPN=$(($NP / $NODES))

TIMESTAMP=$(date +"%Y-%m-%d_%H-%M-%S")

mpirun -np $NP -machinefile $PBS_NODEFILE \
    $HOME/ior/bin/ior -a POSIX -v -z -i 1 -m -d 1 -B -e -F -r -w -t 32m -b 4G \
    -o $PWD/test.$TIMESTAMP \
    | tee ior-${NODES}x${PPN}.$TIMESTAMP.log
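The job script derives the process and node counts from the PBS node file, which lists one hostname per MPI rank. The following sketch reproduces that calculation outside of PBS with a stand-in node file; the hostnames and the rank count are made up for illustration.

```shell
#!/bin/bash
# Stand-in for the file PBS provides: one line per MPI rank.
# Two hypothetical nodes with three ranks each.
PBS_NODEFILE=$(mktemp)
printf '%s\n' node1 node1 node1 node2 node2 node2 > "$PBS_NODEFILE"

NP=$(( $(wc -l < "$PBS_NODEFILE") ))             # total ranks
NODES=$(( $(sort -u "$PBS_NODEFILE" | wc -l) ))  # distinct hosts
PPN=$(( NP / NODES ))                            # ranks per node

echo "NP=$NP NODES=$NODES PPN=$PPN"   # NP=6 NODES=2 PPN=3
rm -f "$PBS_NODEFILE"
```

In the real job, NODES and PPN only feed the log file name, so a run on 2 nodes with 32 processes each writes its output to a file beginning with ior-2x32.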

Submit an ior benchmark as follows:

client_nodes=2

procs_per_node=32

qsub -lselect=${client_nodes}:ncpus=${procs_per_node}:mpiprocs=${procs_per_node},place=scatter:excl run_ior.pbs
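Before submitting, it can help to estimate how much data the run puts on the filesystem. The sketch below works this out for the example job, assuming ior's default of one segment per process, so that each process writes one 4 GiB block (-b 4G) in 32 MiB transfers (-t 32m) to its own file (-F).

```shell
#!/bin/bash
client_nodes=2
procs_per_node=32
block_gib=4        # -b 4G: data written per process
transfer_mib=32    # -t 32m: size of each I/O call

procs=$(( client_nodes * procs_per_node ))
total_gib=$(( procs * block_gib ))
transfers_per_proc=$(( block_gib * 1024 / transfer_mib ))

echo "$procs processes, $total_gib GiB in total, $transfers_per_proc transfers per process"
```

For the values above this gives 64 processes and 256 GiB of test data, which is written and then read back because both -w and -r are set.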

Figure 8 shows the results of testing the bandwidth of the Lustre filesystem, scaling from 1 to 16 OSS VMs.

Figure 8. IOR benchmark results

In each run, the number of client VMs matched the number of OSS VMs, and 32 processes were run on each client VM. Each client VM is a D32_v3, which has an expected bandwidth of 16,000 Mbps (see here), and each OSS VM is an L32_v2, which has an expected bandwidth of 12,800 Mbps (see here). This means that a single client should be able to saturate the bandwidth of one OSS. The max network value is the expected bandwidth of one OSS multiplied by the number of OSS VMs.
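The max network ceiling is simple arithmetic on the figures quoted above. The sketch below computes it for a few cluster sizes; the specific OSS counts in the loop are an assumption (the text only says the test scaled from 1 to 16), and the conversion uses 8 bits per byte with decimal megabytes.

```shell
#!/bin/bash
# Expected per-VM network bandwidth in Mbps, taken from the text above.
OSS_MBPS=12800      # L32_v2
CLIENT_MBPS=16000   # D32_v3

# Print the "max network" ceiling in Mbps for a given number of OSS VMs.
max_network_mbps() {
    echo $(( $1 * OSS_MBPS ))
}

for n in 1 2 4 8 16; do
    mbps=$(max_network_mbps "$n")
    echo "$n OSS VMs: $mbps Mbps ($(( mbps / 8 )) MB/s)"
done

# A single client has more network bandwidth than a single OSS.
if [ "$CLIENT_MBPS" -ge "$OSS_MBPS" ]; then
    echo "One client can saturate one OSS"
fi
```

A single OSS therefore tops out at 1,600 MB/s of network bandwidth, and 16 OSS VMs at 25,600 MB/s.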

Using HSM

The AzureHPC examples and the Azure CycleCloud templates set up HSM on Lustre and import the storage container when the filesystem is created. Only the metadata is read, so files are downloaded on demand as they are accessed. Other than these on-demand downloads, none of the archival operations are automatic; they must be run explicitly.

The copytool for Azure is available here. This copytool supports users, groups, and UNIX file permissions, which are added as metadata to the files stored in Azure Blob storage.

HSM commands

The HSM actions are available with the lfs command. All the commands that follow work with multiple files as arguments.

Archive

The lfs hsm_archive command copies the file to Azure Blob storage. Example usage:

$ sudo lfs hsm_archive myfile

Release

The lfs hsm_release command releases an archived file from the Lustre filesystem. It no longer takes up space in Lustre, but it still appears in the filesystem. When opened, it's downloaded again. Example usage:

$ sudo lfs hsm_release myfile

Remove

The lfs hsm_remove command deletes an archived file from the archive.

$ sudo lfs hsm_remove myfile

State

The lfs hsm_state command shows the state of the file in the filesystem. This is output for a file that isn't archived:

$ sudo lfs hsm_state myfile

myfile: (0x00000000)

This is output for a file that is archived:

$ sudo lfs hsm_state myfile

myfile: (0x00000009) exists archived, archive_id:1

This is output for a file that is archived and released (that is, in storage but not taking up space in the filesystem):

$ sudo lfs hsm_state myfile

myfile: (0x0000000d) released exists archived, archive_id:1

Action

The lfs hsm_action command displays the current HSM request for a given file. This is most useful when checking the progress on files being archived or restored. When there is no ongoing or pending HSM request, it displays NOOP for the file.
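The hexadecimal value that lfs hsm_state prints in the State examples is a bitmask, and the words after it are the names of the bits that are set. The sketch below decodes the four flags that appear in this guide, using the values from the Lustre hsm_states enum (exists 0x1, dirty 0x2, released 0x4, archived 0x8). The function name is hypothetical, and the remaining flags are left out for brevity.

```shell
#!/bin/bash
# Decode the bitmask printed by `lfs hsm_state` (subset of flags).
decode_hsm_state() {
    local flags names
    flags=$(( $1 ))
    names=""
    [ $(( flags & 0x4 )) -ne 0 ] && names="$names released"
    [ $(( flags & 0x1 )) -ne 0 ] && names="$names exists"
    [ $(( flags & 0x2 )) -ne 0 ] && names="$names dirty"
    [ $(( flags & 0x8 )) -ne 0 ] && names="$names archived"
    names=${names# }          # drop the leading space
    echo "${names:-none}"
}

decode_hsm_state 0x00000000   # none
decode_hsm_state 0x00000009   # exists archived
decode_hsm_state 0x0000000d   # released exists archived
```

The only difference between an archived file and an archived and released file is the released bit (0x4), which is why the two values differ by 4.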

Rehydrating the whole filesystem from blob storage

In certain cases, you may want to restore all the released (or imported) files into the filesystem. This is best used in cases where all the files are required and you don't want the application to wait for each file to be retrieved separately. This can be started with the following command:

cd <lustre_root>

find . -type f -print0 | xargs -r0 -L 50 sudo lfs hsm_restore

The progress of the files can be checked with sudo lfs hsm_action. To find out how many files are left to be restored, use the following command:

cd <lustre_root>

find . -type f -print0 \
    | xargs -r0 -L 50 sudo lfs hsm_action \
    | grep -v NOOP \
    | wc -l
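To wait for a full rehydration to finish, the count can be polled in a loop. This is a minimal sketch: the function names and the 30-second default interval are assumptions, and it relies only on the behavior described above, namely that lfs hsm_action reports NOOP for files with no ongoing or pending request.

```shell
#!/bin/bash
# Count files that still have an HSM request in flight.
# Reads `lfs hsm_action` output on stdin; idle files are reported as NOOP.
count_pending() {
    grep -v NOOP | wc -l
}

# Poll until no requests are pending under the given Lustre directory.
# Arguments: <lustre_root> [interval in seconds, default 30]
wait_for_restore() {
    local root=$1 interval=${2:-30} pending
    while true; do
        pending=$(find "$root" -type f -print0 \
            | xargs -r0 -L 50 sudo lfs hsm_action \
            | count_pending)
        [ "$pending" -eq 0 ] && break
        echo "$(date): $pending files still pending"
        sleep "$interval"
    done
    echo "All HSM requests under $root have completed"
}
```

Calling wait_for_restore <lustre_root> after starting the restore blocks until every file reports NOOP.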

Viewing Lustre metrics in Log Analytics

Each Lustre VM logs the following metrics every sixty seconds if Log Analytics is enabled:

- Load average

- Kilobytes free

- Network bytes sent

- Network bytes received

You can view this in the portal by selecting Monitor and then Logs. Here is an example query:

<log-name>_CL
| summarize max(loadavg_d),max(bytessend_d),max(bytesrecv_d) by bin(TimeGenerated,1m), hostname_s
| render timechart

Note: Substitute <log-name> for the name you chose.
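The same data can also be pulled from a terminal. The sketch below builds the example query for a given log name and passes it to the Azure CLI; the function names are hypothetical, the render step is dropped because the CLI returns rows rather than a chart, and depending on your CLI version the az monitor log-analytics query command may need the log-analytics extension.

```shell
#!/bin/bash
# Build the example query for a given custom log name.
build_lustre_query() {
    printf '%s_CL | summarize max(loadavg_d),max(bytessend_d),max(bytesrecv_d) by bin(TimeGenerated,1m), hostname_s' "$1"
}

# Run the query against a workspace; needs a logged-in Azure CLI.
# Arguments: <workspace-id> <log-name>
run_lustre_query() {
    az monitor log-analytics query \
        --workspace "$1" \
        --analytics-query "$(build_lustre_query "$2")" \
        --output table
}
```

Here the workspace ID is the same value that is supplied as log_analytics_workspace when the filesystem is deployed.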

Figure 9. Log Analytics data

Summary

This post outlined setting up a Lustre filesystem and PBS cluster using AzureHPC and Azure CycleCloud. It also covered using the HSM capabilities for archival and backup to Azure Blob Storage and viewing metrics in Log Analytics. This approach is a cost-effective way to provision a high-performance filesystem on Azure.

Learn more

- AzureCAT eBook: Parallel Virtual File Systems on Microsoft Azure

- Parallel Virtual File Systems on Microsoft Azure - Part 2: Lustre on Azure
