Optimize Azure OpenAI Applications with Semantic Caching

Introduction

One way to optimize the cost and performance of applications built on Large Language Models (LLMs) is to cache LLM responses and reuse them for semantically similar queries; this is sometimes referred to as “semantic caching”. In this blog, we discuss the approaches, benefits, common scenarios and key considerations for using semantic caching.

What is semantic caching?

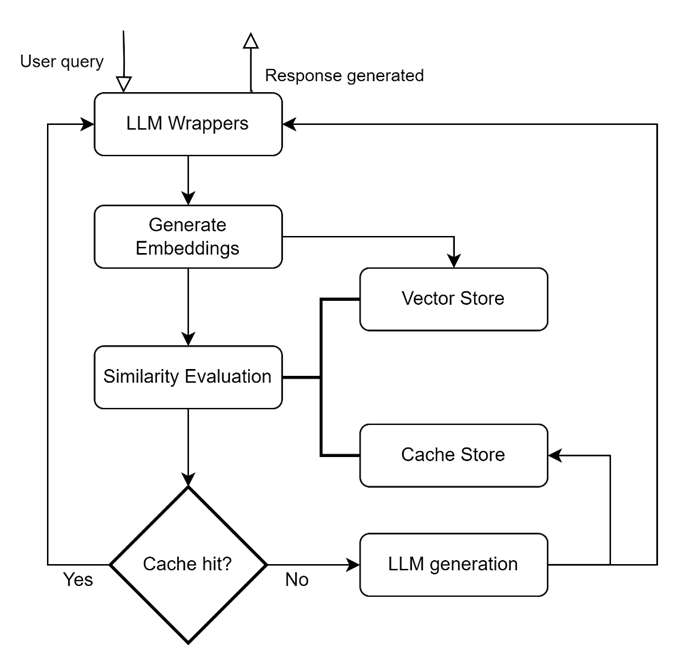

Caching systems typically store commonly retrieved data so that subsequent requests can be served efficiently. In the context of LLMs, a semantic cache stores previously asked questions and their responses, uses a similarity measure to retrieve semantically similar queries, and returns the cached response if a match falls within the similarity threshold. If the cache cannot return a response, the answer is obtained from a fresh LLM call.
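The lookup flow described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production design: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and `SemanticCache`, `answer` and `call_llm` are hypothetical names introduced only for this example. The threshold is assumed to be a minimum cosine similarity.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: lowercase bag-of-words counts (stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold=0.8):
        self.threshold = threshold  # assumed: minimum similarity for a hit
        self.entries = []           # list of (embedding, response)

    def lookup(self, query):
        """Return the most similar cached response, or None on a miss."""
        q = embed(query)
        best_score, best_response = 0.0, None
        for vec, response in self.entries:
            score = cosine(q, vec)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def store(self, query, response):
        self.entries.append((embed(query), response))


def answer(query, cache, call_llm):
    """Serve from the cache when possible; otherwise call the LLM and cache the result."""
    cached = cache.lookup(query)
    if cached is not None:
        return cached, True
    response = call_llm(query)
    cache.store(query, response)
    return response, False
```

With a real embedding model, paraphrases that share few words can still land close together; the toy embedding here only matches on shared tokens.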

Key building blocks of a semantic caching layer:

- LLM Wrappers add integration with, and support for, different LLMs (Llama, OpenAI, etc.).

- Generate Embeddings produces an embedding representation of each user query. The generated embeddings are typically persisted in the vector store.

- A Vector Store persists query embeddings and supports fast retrieval at query time. It can be in-memory or a specialized vector database optimized for storage, indexing and retrieval (e.g., FAISS, Hnswlib, PGVector, Chroma, CosmosDB).

- A Cache Store persists responses from the LLMs and serves them on a cache hit (e.g., SQLite, Elasticsearch, Redis, MongoDB).

- The Similarity Evaluation module uses similarity metrics / distances to compare the embedding of the input query with the query embeddings in the vector store.

KPIs / Logging: Caching-specific KPIs include cache hit ratio (requests handled by the cache / total requests) and latency (time taken to process a query and retrieve the corresponding response from the cache).
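As a rough sketch of how these two KPIs could be tracked, the snippet below accumulates hits, misses and hit latencies in memory. `CacheMetrics` is a hypothetical helper written for this illustration; a real deployment would more likely emit these values to a logging or monitoring service.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class CacheMetrics:
    hits: int = 0
    misses: int = 0
    hit_latencies: list = field(default_factory=list)

    def record(self, hit, latency_s):
        """Record one request and, for cache hits, its latency in seconds."""
        if hit:
            self.hits += 1
            self.hit_latencies.append(latency_s)
        else:
            self.misses += 1

    @property
    def hit_ratio(self):
        """Requests handled by the cache / total requests."""
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

    @property
    def mean_hit_latency(self):
        """Average time to serve a response from the cache."""
        return mean(self.hit_latencies) if self.hit_latencies else 0.0
```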

Benefits of semantic caching:

- Cost optimization: Since cached responses are served without invoking LLMs, caching can bring significant cost benefits. We have come across use cases where customers reported that 20-30% of total user queries could be served by the caching layer.

- Improvement in latency: LLMs are known to exhibit relatively high latencies when generating responses. Response caching reduces this to the extent that queries are answered from the caching layer rather than by invoking LLMs every time.

- Scaling: Since questions answered by a cache hit do not invoke LLMs, provisioned resources / endpoints are free to answer unseen / newer questions from users. This can be helpful when applications are scaled to handle more users.

- Consistency in responses: Since the caching layer answers from cached responses, there is no actual generation involved and the same response is provided to queries deemed semantically similar.
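To make the cost benefit concrete, here is a back-of-the-envelope estimate. It assumes every query is embedded for the cache lookup and only cache misses reach the LLM; the function name and the per-call prices used in the usage note are purely hypothetical.

```python
def estimated_llm_cost(total_queries, hit_ratio, cost_per_llm_call, cost_per_embedding=0.0):
    """Estimate spend when a fraction of queries is served from the cache.

    Every query pays for an embedding (the cache lookup); only misses pay for an LLM call.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    llm_calls = total_queries * (1.0 - hit_ratio)
    return llm_calls * cost_per_llm_call + total_queries * cost_per_embedding
```

For example, with 10,000 queries, a hypothetical 0.01 per LLM call and 0.0001 per embedding, a 25% hit ratio brings the estimate from about 100 down to about 76.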

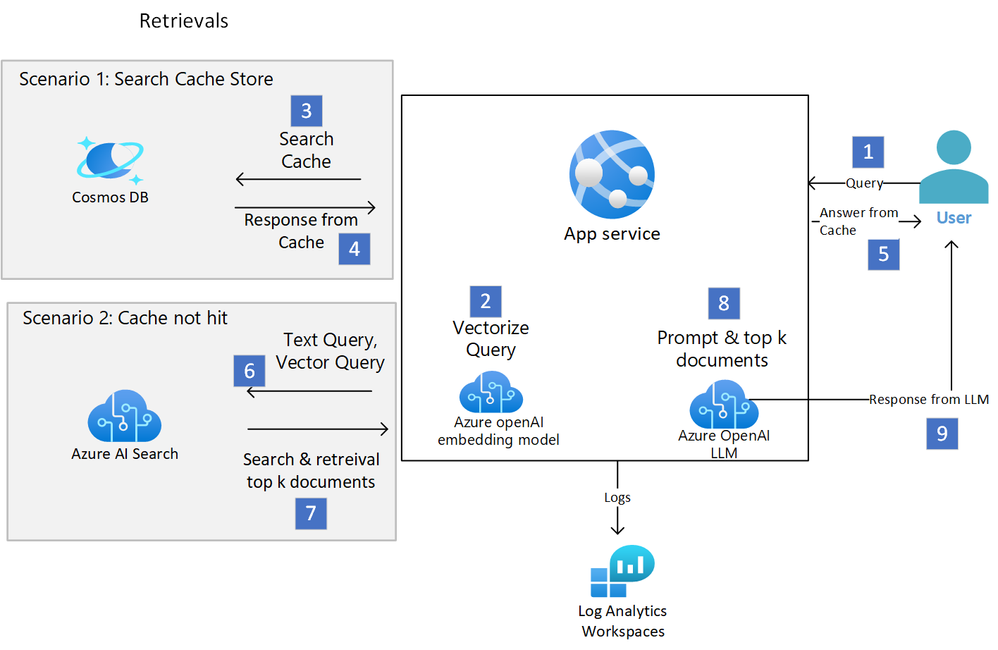

Reference architecture for implementation on Azure:

- User sends query through user application.

- The query is converted to a vector with an Azure OpenAI (AOAI) embedding model.

Retrievals and Response (Scenario 1)

- Azure Cosmos DB acts as the vector store, ingesting newer queries and retrieving existing queries based on similarity measures. Note: If the application is image retrieval, images are stored in blob storage, with their locations stored in the vector store.

- If the cache is hit based on the similarity threshold, the response is retrieved from the caching layer (e.g., text responses can be stored in and served from Cosmos DB, while images can be served from blob storage based on the location stored in Cosmos DB).

- Response is served to the user.

Retrievals and Response (Scenario 2)

- If the cache is not hit, the user query (text and / or vector) is searched in Azure AI Search (the service used to implement the RAG system).

- The top K matching documents are retrieved and passed to the LLM.

- Response is generated by the LLM based on the relevant data retrieved from Azure AI Search.

- Response from the LLM is passed back to the user.

Logs passed to Log Analytics Workspace: KPIs such as the number of times a response is served from the cache and the number of tokens served from the cache can be obtained from the logs persisted to the Log Analytics workspace, for later analysis of the impact of caching.

The orchestration of querying the vector store, directing the query to the LLM, logging, etc. is handled by a custom program or by customizing existing frameworks such as GPTCache, LangChain or AutoGen.
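A custom orchestration program along the lines of the two scenarios above could look like the sketch below. It is deliberately service-agnostic: `embed`, `cache_lookup`, `cache_store`, `search`, `generate` and `log` are hypothetical callables that you would back with the embedding model, the vector / cache store, the search service, the LLM and the logging workspace respectively. Writing the fresh response back to the cache after a miss is an assumption of this sketch.

```python
def handle_query(query, *, embed, cache_lookup, cache_store, search, generate, log):
    """Route one query: cache first (Scenario 1), otherwise search + LLM (Scenario 2)."""
    vector = embed(query)
    cached = cache_lookup(vector)           # returns a cached response, or None on a miss
    if cached is not None:
        log({"query": query, "served_from": "cache"})
        return cached

    documents = search(query, vector)       # top-K documents from the search index
    response = generate(query, documents)   # fresh LLM call grounded on those documents
    cache_store(vector, query, response)    # assumed: make the answer reusable next time
    log({"query": query, "served_from": "llm"})
    return response
```

Keeping the dependencies as injected callables makes the routing logic easy to test with stubs before wiring in real services.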

Approaches for Implementation:

While it is possible to build and implement the logic from scratch, there are certain existing libraries which can help accelerate development. In this section, we will briefly look at some popular open-source frameworks that have semantic caching implemented.

1. GPTCache:

GPTCache is an open-source framework (MIT License) that uses embedding algorithms to convert queries into embeddings and performs similarity search on those embeddings. GPTCache uses an LLM adapter, embedding generator, cache manager, similarity evaluator and post-processors as components. It is modular and supports multiple options for LLMs, embeddings, vector stores and cache stores. Using GPTCache involves the following steps:

- Build your cache: Choose an embedding function, similarity evaluation function, where to store your data, and the eviction policy.

- Choose your LLM: GPTCache currently supports OpenAI models and the LangChain framework. LangChain supports a variety of LLMs, such as Anthropic, Hugging Face, and Cohere models.

Below are the components of GPTCache:

- pre-process func: The pre-processing function extracts the information related to the user's question from the LLM request parameter list, converts it to a string and returns it. The returned value is the input to the embedding model in a subsequent step. The request parameters differ between LLMs, so some level of pre-processing is needed. Options for the pre-processing function include using the last message content, using the last content without the prompt template, concatenating the contents of all messages, and compressing the context through summarization. Pre-processing for image and audio inputs involves getting the filenames and reading the data.

    from gptcache.processor.pre import last_content

    # Use the content of the last message as the cache input
    content = last_content({"messages": [{"content": "foo1"}, {"content": "foo2"}]})
    # content = "foo2"

    from langchain import PromptTemplate
    from gptcache import Config
    from gptcache.processor.pre import last_content_without_template

    # Strip the prompt template so that only the variable parts are embedded
    template_obj = PromptTemplate.from_template("tell me a joke about {subject}")
    prompt = template_obj.format(subject="animal")
    value = last_content_without_template(
        data={"messages": [{"content": prompt}]},
        cache_config=Config(template=template_obj.template),
    )
    print(value)
    # ['animal']

- embedding: Converts the input into a multidimensional array of numbers based on the input type. Text, audio and image embeddings are supported. Supported text embeddings include ONNX, OpenAI embedding models, SBERT, Cohere, and Hugging Face models such as Rwkv and Distilbert-base-uncased. Timm and ViT image embeddings are also supported.

    from gptcache.embedding import LangChain
    from langchain.embeddings.openai import OpenAIEmbeddings

    test_sentence = 'Hello, world.'
    embeddings = OpenAIEmbeddings(model="your-embeddings-deployment-name")
    # Wrap the LangChain embedding model so that GPTCache can use it
    encoder = LangChain(embeddings=embeddings)
    embed = encoder.to_embeddings(test_sentence)

- data manager: Responsible for controlling the operation of both the Cache Storage and Vector Store and eviction policy to manage the memory.

- cache store: Cache Storage is used to store the response from LLMs. Cached responses are retrieved to assist in evaluating similarity and are returned to the requester if there is a good semantic match.

- vector store: The Vector Store module helps find the K most similar requests from the input request's extracted embedding.

- object store: If it is a multi-modal cache, object store is optionally required.

- similarity evaluation: This module collects data from both the Cache Storage and the Vector Store, and uses various strategies to determine the similarity between the input request and the requests from the Vector Store. Based on this similarity, it determines whether a request matches the cache. GPTCache provides a standardized interface for integrating various strategies, along with a collection of implementations to use. The evaluation scores the recalled cache data against the current user's LLM request and returns a float value. CohereRerankEvaluation, SearchDistanceEvaluation, OnnxModelEvaluation and SequenceMatchEvaluation are some of the similarity options available.

- post-process func: Used to get the final answer to the user's question from all cached data that meets the similarity threshold. The existing post-processing functions include:

- first, get the most similar cached answer

- random, randomly fetch a similar cached answer

- temperature_softmax, select according to the softmax strategy, which can ensure that the obtained cached answer has a certain randomness
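To illustrate the idea behind the softmax-based selection, here is a small standalone sketch. It is not GPTCache's implementation; `softmax` and `pick_answer` are hypothetical helpers, and the candidates are assumed to be `(answer, similarity_score)` pairs that already passed the similarity threshold.

```python
import math
import random


def softmax(scores, temperature):
    """Convert similarity scores into selection probabilities."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def pick_answer(candidates, temperature=0.0, rng=random):
    """Pick one cached answer from (answer, similarity_score) pairs."""
    if not candidates:
        raise ValueError("no candidates to choose from")
    answers, scores = zip(*candidates)
    if temperature <= 0:
        # No randomness requested: return the most similar cached answer
        return answers[scores.index(max(scores))]
    probabilities = softmax(scores, temperature)
    return rng.choices(answers, weights=probabilities, k=1)[0]
```

A higher temperature flattens the probabilities, so less similar cached answers are chosen more often; a temperature near zero behaves like always taking the best match.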

Usage with custom definition:

The example below uses SQLite for the scalar (cache) store, FAISS for the vector store, and ONNX model embeddings, with a similarity threshold of 0.75.

    import time

    from gptcache import Cache, Config
    from gptcache.adapter import openai
    from gptcache.adapter.api import init_similar_cache
    from gptcache.embedding import Onnx
    from gptcache.manager import manager_factory
    from gptcache.processor.post import random_one
    from gptcache.processor.pre import last_content
    from gptcache.similarity_evaluation import OnnxModelEvaluation

    openai_complete_cache = Cache()
    encoder = Onnx()

    # SQLite holds the cached responses; FAISS holds the query embeddings
    sqlite_faiss_data_manager = manager_factory(
        "sqlite,faiss",
        data_dir="openai_complete_cache",
        scalar_params={
            "sql_url": "sqlite:///./openai_complete_cache.db",
            "table_name": "openai_chat",
        },
        vector_params={
            "dimension": encoder.dimension,
            "index_file_path": "./openai_chat_faiss.index",
        },
    )

    onnx_evaluation = OnnxModelEvaluation()
    cache_config = Config(similarity_threshold=0.75)

    init_similar_cache(
        cache_obj=openai_complete_cache,
        pre_func=last_content,
        embedding=encoder,
        data_manager=sqlite_faiss_data_manager,
        evaluation=onnx_evaluation,
        post_func=random_one,
        config=cache_config,
    )

    questions = [
        "what's github",
        "can you explain what GitHub is",
        "can you tell me more about GitHub",
        "what is the purpose of GitHub",
    ]

    # The first question calls the LLM; similar follow-ups can be served from the cache
    for question in questions:
        start_time = time.time()
        response = openai.ChatCompletion.create(
            model="gpt-3.5-turbo",
            messages=[{"role": "user", "content": question}],
            cache_obj=openai_complete_cache,
        )
        print(f"Question: {question}")
        print("Time consuming: {:.2f}s".format(time.time() - start_time))
        print(f'Answer: {response["choices"][0]["message"]["content"]}\n')

Cache loading.....

Question: what's github

Time consuming: 3.16s

Answer: GitHub is a web-based platform used for version control and collaboration in software development projects. It allows developers to store, manage, and share their code with others. GitHub provides features such as bug tracking, feature management, task management, and wikis for documentation. It also offers a social networking element, allowing users to follow and contribute to projects, collaborate with others, and discover new code repositories.

Question: can you explain what GitHub is

Time consuming: 0.46s

Answer: GitHub is a web-based platform used for version control and collaboration in software development projects. It allows developers to store, manage, and share their code with others. GitHub provides features such as bug tracking, feature management, task management, and wikis for documentation. It also offers a social networking element, allowing users to follow and contribute to projects, collaborate with others, and discover new code repositories.

Question: can you tell me more about GitHub

Time consuming: 0.55s

Answer: GitHub is a web-based platform used for version control and collaboration in software development projects. It allows developers to store, manage, and share their code with others. GitHub provides features such as bug tracking, feature management, task management, and wikis for documentation. It also offers a social networking element, allowing users to follow and contribute to projects, collaborate with others, and discover new code repositories.

Question: what is the purpose of GitHub

Time consuming: 0.56s

Answer: GitHub is a web-based platform used for version control and collaboration in software development projects. It allows developers to store, manage, and share their code with others. GitHub provides features such as bug tracking, feature management, task management, and wikis for documentation. It also offers a social networking element, allowing users to follow and contribute to projects, collaborate with others, and discover new code repositories.

Please note that, for GPTCache's similarity_threshold setting, a lower threshold leads to a lower likelihood of hitting the cache.

A smaller value means stricter consistency with the content in the cache, a lower cache hit rate, and fewer incorrect hits; a larger value means higher tolerance, a higher cache hit rate, and also more incorrect hits.

2. LangChain:

LangChain supports caching through an in-memory cache, integration with GPTCache, or other backends and vector stores such as Cassandra, Redis and Azure Cosmos DB.

Implementing caching with LangChain and Azure Cosmos DB:

    import urllib.parse

    # Older LangChain releases: from langchain.cache import AzureCosmosDBSemanticCache
    from langchain.globals import set_llm_cache
    from langchain_community.cache import AzureCosmosDBSemanticCache
    from langchain_community.vectorstores.azure_cosmos_db import (
        CosmosDBSimilarityType,
        CosmosDBVectorSearchType,
    )
    from langchain_openai import AzureOpenAIEmbeddings

    # Read more about Azure CosmosDB Mongo vCore vector search here https://learn.microsoft.com/en-us/azure/cosmos-db/mongodb/vcore/vector-search
    INDEX_NAME = "langchain-test-index"
    NAMESPACE = "langchain_test_db.langchain_test_collection"

    # Replace <password> with your own credential; avoid hard-coding secrets in source
    CONNECTION_STRING = (
        "mongodb+srv://cosmoscachedemo:"
        + urllib.parse.quote_plus("<password>")
        + "@cosmos4mongo.mongocluster.cosmos.azure.com/?tls=true&authMechanism=SCRAM-SHA-256&retrywrites=false&maxIdleTimeMS=120000"
    )
    DB_NAME, COLLECTION_NAME = NAMESPACE.split(".")

    # Default value for these params
    num_lists = 3
    dimensions = 1536
    similarity_algorithm = CosmosDBSimilarityType.COS
    kind = CosmosDBVectorSearchType.VECTOR_IVF
    m = 16
    ef_construction = 64
    ef_search = 40
    score_threshold = 0.1

    # Register the semantic cache globally so that LangChain LLM calls use it
    set_llm_cache(
        AzureCosmosDBSemanticCache(
            cosmosdb_connection_string=CONNECTION_STRING,
            cosmosdb_client=None,
            embedding=AzureOpenAIEmbeddings(),
            database_name=DB_NAME,
            collection_name=COLLECTION_NAME,
            num_lists=num_lists,
            similarity=similarity_algorithm,
            kind=kind,
            dimensions=dimensions,
            m=m,
            ef_construction=ef_construction,
            ef_search=ef_search,
            score_threshold=score_threshold,
        )
    )

3. AutoGen:

AutoGen is a fully customizable open-source framework that helps orchestrate complex workflows by enabling multiple agents to interact with each other. AutoGen supports caching API requests so that they can be reused when the same request is issued again.

    from autogen import Cache, OpenAIWrapper

    # user, assistant and coding_task are assumed to be defined elsewhere

    # Use Redis as cache
    with Cache.redis(redis_url="redis://localhost:6379/0") as cache:
        user.initiate_chat(assistant, message=coding_task, cache=cache)

    # Use DiskCache as cache
    with Cache.disk() as cache:
        user.initiate_chat(assistant, message=coding_task, cache=cache)

    # The cache can also be passed directly to the model client's create call
    client = OpenAIWrapper(...)
    with Cache.disk() as cache:
        client.create(..., cache=cache)

For backward compatibility, DiskCache is on by default with cache_seed set to 41. To disable caching completely, set cache_seed to None in the llm_config of the agent.

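    from autogen import AssistantAgent

    # OAI_CONFIG_LIST is assumed to hold your model configurations
    assistant = AssistantAgent(
        "coding_agent",
        llm_config={
            "cache_seed": None,  # disables caching for this agent
            "config_list": OAI_CONFIG_LIST,
            "max_tokens": 1024,
        },
    )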
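Unlike the semantic caches discussed earlier, this kind of request cache only hits when the same request is repeated. The sketch below illustrates that idea with a hash of the request, namespaced by a seed. It is not AutoGen's internal implementation; `ExactMatchCache` and `get_or_create` are hypothetical names used only for this illustration.

```python
import hashlib
import json


class ExactMatchCache:
    """Exact-match request cache; entries are namespaced by a seed."""

    def __init__(self, seed=41):
        self.seed = seed
        self._store = {}

    def _key(self, request):
        # Canonical JSON so that the key order in the request does not matter
        payload = json.dumps(request, sort_keys=True)
        digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        return f"{self.seed}:{digest}"

    def get_or_create(self, request, create):
        """Return (response, was_cached); a seed of None disables caching."""
        if self.seed is None:
            return create(request), False
        key = self._key(request)
        if key in self._store:
            return self._store[key], True
        response = create(request)
        self._store[key] = response
        return response, False
```

Because the key is a hash of the full request, even a small rewording produces a miss, which is the main trade-off against a similarity-based cache.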

Caching scenarios:

Chat:

For conversational AI applications, previous turns are considered in order to understand the context of the current turn and generate an answer. In such scenarios, the current query alone may not be sufficient to retrieve the correct response. A summary of, or keywords extracted from, previous turns can be added to the current query for embedding generation, caching and retrieval. GPTCache supports concatenating multiple content elements in the input message payload, which can include previous turns.
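As a minimal sketch of this idea, the helper below builds the text to embed from the last few turns rather than from the latest message alone. `build_cache_text` and its `max_turns` parameter are hypothetical; summarization or keyword extraction could replace the simple concatenation used here.

```python
def build_cache_text(messages, max_turns=3):
    """Concatenate the most recent message contents into one string for embedding."""
    recent = messages[-max_turns:]
    return "\n".join(f'{m["role"]}: {m["content"]}' for m in recent)
```

Two conversations that end with the same follow-up question, such as "How much does it cost?", then produce different cache inputs when the earlier turns were about different products.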

Text To Image generation:

An object store is defined in addition to vector and cache stores to store and retrieve the images.

    from gptcache import cache
    from gptcache.adapter import openai
    from gptcache.processor.pre import get_prompt
    from gptcache.embedding import Onnx
    from gptcache.similarity_evaluation.distance import SearchDistanceEvaluation
    from gptcache.manager import get_data_manager, CacheBase, VectorBase, ObjectBase

    onnx = Onnx()
    cache_base = CacheBase('sqlite')
    vector_base = VectorBase('milvus', host='localhost', port='19530', dimension=onnx.dimension)
    # The object store keeps the generated images on local disk
    object_base = ObjectBase('local', path='./images')
    data_manager = get_data_manager(cache_base, vector_base, object_base)

    cache.init(
        pre_embedding_func=get_prompt,
        embedding_func=onnx.to_embeddings,
        data_manager=data_manager,
        similarity_evaluation=SearchDistanceEvaluation(),
    )
    cache.set_openai_key()

    # First request: the image is generated and stored
    response = openai.Image.create(
        prompt="a white siamese cat",
        n=1,
        size="256x256"
    )
    image_url = response['data'][0]['url']

    # Same prompt again: the image can be served from the cache
    response = openai.Image.create(
        prompt="a white siamese cat",
        n=1,
        size="256x256"
    )
    image_url = response['data'][0]['url']

NL2SQL / Codex scenarios:

In code generation scenarios, the generated query / code can be cached, while execution is still performed against the latest data.

    import time

    from gptcache import cache
    from gptcache.adapter import openai
    from gptcache.embedding import Onnx
    from gptcache.processor.pre import get_prompt
    from gptcache.manager import CacheBase, VectorBase, get_data_manager
    from gptcache.similarity_evaluation.distance import SearchDistanceEvaluation


    def response_text(openai_resp):
        return openai_resp["choices"][0]["text"]


    print("Cache loading.....")
    onnx = Onnx()
    data_manager = get_data_manager(CacheBase("sqlite"), VectorBase("faiss", dimension=onnx.dimension))
    cache.init(
        pre_embedding_func=get_prompt,
        embedding_func=onnx.to_embeddings,
        data_manager=data_manager,
        similarity_evaluation=SearchDistanceEvaluation(),
    )
    cache.set_openai_key()

    # Three phrasings of the same request; later ones can reuse the cached SQL
    questions = [
        "A query to list the names of the departments which employed more than 10 employees in the last 3 months\nSELECT",
        "Query the names of the departments which employed more than 10 employees in the last 3 months\nSELECT",
        "List the names of the departments which employed more than 10 employees in the last 3 months\nSELECT",
    ]

    for question in questions:
        start_time = time.time()
        response = openai.Completion.create(
            engine="gpt-35-turbo-instruct",
            prompt="### Postgres SQL tables, with their properties:\n#\n# Employee(id, name, department_id)\n# Department(id, name, address)\n# Salary_Payments(id, employee_id, amount, date)\n#\n### " + question,
            temperature=0,
            max_tokens=150,
            top_p=1.0,
            frequency_penalty=0.0,
            presence_penalty=0.0,
            stop=["#", ";"]
        )
        print(question, response_text(response))
        print("Time consuming: {:.2f}s".format(time.time() - start_time))

Response:

SELECT Department.name

FROM Department

INNER JOIN Employee ON Employee.department_id = Department.id

INNER JOIN Salary_Payments ON Salary_Payments.employee_id = Employee.id

WHERE Salary_Payments.date >= CURRENT_DATE - INTERVAL '3 months'

GROUP BY Department.name

HAVING COUNT(Employee.id) > 10

Time consuming: 0.71s
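The separation between caching the generated query and executing it on current data can be sketched with the standard-library `sqlite3` module. Everything here is illustrative: `generate_sql` is a hypothetical stand-in for the LLM call, the `employee` table is made up for the example, and a plain dictionary takes the place of the semantic cache.

```python
import sqlite3

sql_cache = {}  # question -> generated SQL (a semantic cache would sit here)


def generate_sql(question):
    """Hypothetical stand-in for the LLM call that writes the query."""
    return "SELECT COUNT(*) FROM employee"


def run_question(conn, question):
    """Reuse the cached SQL text, but always execute it against current data."""
    sql = sql_cache.get(question)
    if sql is None:
        sql = generate_sql(question)
        sql_cache[question] = sql
    return conn.execute(sql).fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employee (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO employee (name) VALUES ('Ada')")

first = run_question(conn, "how many employees are there")   # generates and caches the SQL
conn.execute("INSERT INTO employee (name) VALUES ('Grace')")
second = run_question(conn, "how many employees are there")  # cached SQL, fresh result
```

Only the query text is reused, so the second call reflects the newly inserted row even though no new generation took place.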

Key considerations when applying semantic caching:

- Frequent question and answer patterns emerge over time, and identifying them is likely to involve a feedback loop from users / subject matter experts. During initial production deployment, there will be a limited set of caching opportunities. As the application gains traction, there will be enough responses with customer feedback to populate the caching layer.

- Responses to personalized questions need to be handled with care. These include answers that depend on the persona of the user, answers that vary from user to user, and time-sensitive queries that involve fetching user-specific information. For example, an HR bot may answer leave balance queries; the response could differ by staff level / designation, or reflect an individual's specific leave balance.

- Improper cache management / design could lead to data and prompt leakage. For example, caching personalized responses as described above and serving them to other users is an avoidable situation of this kind.

- The similarity threshold needs to be chosen carefully. Assuming the threshold is a minimum similarity score, too low a threshold could cause accuracy and user experience issues, while too high a threshold would mean very limited use of the cached responses.

- Temperature settings are typically used to generate diverse responses to the same query. Caching is typically applied for default / zero temperature settings, while requests with a higher temperature invoke the LLM for response generation.

- For RAG-based use cases, documents in the indexing / search layer are likely to be updated. In such cases, cached responses become obsolete and need to be refreshed to avoid giving wrong / outdated responses.
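Several of these considerations can be reflected directly in how cache keys and lookups are designed. The sketch below is one possible approach under stated assumptions, not a prescribed design: `ScopedCache` is a hypothetical class, it uses exact keys instead of similarity search to stay short, and `user_id`, `index_version` and `ttl_seconds` are illustrative parameters for scoping personalized answers, invalidating entries when the search index changes, and expiring stale entries.

```python
import time


class ScopedCache:
    """Exact-key cache illustrating user scoping, expiry and temperature bypass."""

    def __init__(self, ttl_seconds=3600, clock=time.time):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}

    def _key(self, query, user_id, index_version):
        # user_id=None marks a shared (non-personalized) entry
        return (user_id, index_version, query.strip().lower())

    def get(self, query, *, user_id=None, index_version=0, temperature=0.0):
        if temperature > 0:
            return None  # diverse output requested: always regenerate
        entry = self._store.get(self._key(query, user_id, index_version))
        if entry is None:
            return None
        response, stored_at = entry
        if self.clock() - stored_at > self.ttl:
            return None  # stale entry: treat as a miss
        return response

    def put(self, query, response, *, user_id=None, index_version=0):
        self._store[self._key(query, user_id, index_version)] = (response, self.clock())
```

Including the user and the index version in the key means a personalized answer is never served to another user, and bumping the version after a document refresh makes older entries unreachable.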

