Building a SaaS Application on Azure AKS with GitHub Actions

SaaS is a business and software delivery model that enables organisations to offer their solution as a service, at scale, usually on a subscription basis. SaaS adoption usually targets growth-driven transformation, market expansion, operational simplicity and faster innovation through more frequent product releases.

SaaS infrastructures offer better cost efficiency by leveraging economies of scale, resource pooling and sharing, and generally higher utilisation of the infrastructure. The SaaS infrastructure pattern is especially attractive for ISVs: it accelerates tenant onboarding, enables data collection and mining across platform users (e.g. to calculate customer acquisition cost (CAC), customer lifetime value (CLTV), monthly recurring revenue (MRR) and product consumption metrics) and makes it much easier to deliver costly customer services centrally while enabling proactive, data-driven customer engagement.

Each customer onboarded to a SaaS application is called a “tenant”. A SaaS infrastructure hosts multiple isolated tenants, together with the tools and services to provision them automatically and to collect metrics and analytics for each. All the customers of a SaaS system run within a single Azure subscription/tenant using partially or fully shared resources; such an infrastructure is called a “multi-tenant” infrastructure.

In this blog we will explain how to build a multi-tenant SaaS infrastructure on Azure AKS. There are many ways to approach multi-tenant architecture in the cloud; we will focus on one deployment model, “cluster multi-tenancy”, for a cloud-native application built with a microservices architecture.

The blog will mostly focus on two of the largest challenges in building a SaaS infrastructure: (a) the tenant isolation capabilities of AKS and (b) the automation of tenant onboarding onto AKS.

SaaS Infrastructure requirements

A SaaS solution is by definition multi-tenant; however, multi-tenancy alone does not make a solution a SaaS solution. Reliability and security should be treated as priority infrastructure pillars, because failures in either can cause reputational damage, especially at product launch, and can make a startup's services and products fail.

There are four major infrastructure requirements for a SaaS solution:

- Multi-tenancy implementation

- Identity solution for SaaS users

- Automation of tenant onboarding

- Per-tenant metrics and analytics driving billing and metering

Let’s look at each in a bit more detail.

1. Multi-tenancy for compute

Containers are a good fit for SaaS infrastructures because they can scale rapidly and granularly with the load on the system, improving cost efficiency at the infrastructure layer.

Tenancy models cover a wide spectrum, from a dedicated cluster for each tenant to a single cluster shared by all tenants. Real deployments usually combine the two, keeping some infrastructure fully shared while dedicating infrastructure to tenants and services with special requirements.

In this blog we will focus on the “cluster multi-tenancy” model, where multiple instances of a workload are deployed onto the same compute cluster for a set of customers. Cluster multi-tenancy is an alternative to managing many single-tenant clusters. In some cases, services may still be deployed to dedicated clusters for “premium” customers to ensure service availability, while “freemium” services are deployed to a shared cluster.

Running multiple tenants on shared infrastructure lets a SaaS provider realise the economies of scale that pooling resources brings. However, it requires isolating each tenant’s resources and protecting them from neighbouring tenants on the same shared infrastructure (e.g. from unauthorised access, data exfiltration or DoS attacks initiated from within a neighbour tenant). Sharing infrastructure also raises “noisy neighbour” problems, causing “fairness” issues where one tenant monopolises resources at the expense of others. In these cases “hostile multi-tenancy” requires “hard tenant isolation”, which we explore here without assuming any level of trust between tenants. Tenant isolation requires implementing certain AKS / k8s capabilities at the control plane as well as at the data plane, which we cover in more depth in the configuration section. The blog is accompanied by a sample implementation of multi-tenancy on AKS with control plane and data plane isolation features.

2. Automating tenant onboarding

Tenants should be onboarded with a simple, repeatable process in which customisations are parameterised and automated (e.g. a namespace is created automatically for each tenant and the services are deployed into the new namespace). Azure Functions are well suited to automating onboarding: an Azure Function captures the tenant context after tenant registration and initiates IaC template runs with GitHub Actions for each tenant.
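As an example of a parameterised customisation, the sketch below derives a Kubernetes namespace name from a tenant's registered name. Namespace names must be valid DNS-1123 labels (lowercase alphanumerics and `-`, starting and ending with an alphanumeric, at most 63 characters). The function name `tenant_namespace` and the `tenant-` prefix are hypothetical conventions, not taken from the sample repository.

```python
import re

# Kubernetes namespace names must be DNS-1123 labels:
# lowercase alphanumerics or '-', start/end alphanumeric, max 63 chars.
DNS1123_LABEL = re.compile(r"^[a-z0-9]([-a-z0-9]*[a-z0-9])?$")
MAX_LEN = 63


def tenant_namespace(tenant_name: str, prefix: str = "tenant-") -> str:
    """Derive a namespace name from a tenant's display name (hypothetical convention)."""
    # Collapse every run of characters that are not allowed into a single '-'.
    slug = re.sub(r"[^a-z0-9]+", "-", tenant_name.lower()).strip("-")
    if not slug:
        raise ValueError(f"cannot derive a namespace from {tenant_name!r}")
    # Truncate to the label limit, then make sure it does not end with '-'.
    name = (prefix + slug)[:MAX_LEN].rstrip("-")
    if not DNS1123_LABEL.match(name):
        raise ValueError(f"invalid namespace name: {name!r}")
    return name


print(tenant_namespace("Contoso Ltd."))  # tenant-contoso-ltd
```

Two tenant names can map to the same namespace (e.g. "Contoso Ltd" and "Contoso, Ltd."), so the onboarding function would still need to check uniqueness before creating it.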

The remaining two requirements are covered below for the sake of completeness; their Azure implementations will be covered in separate blog posts.

3. Setting up an Identity solution for SaaS

Setting up an identity solution is key to the success of a multi-tenant architecture. Onboarded customers generally want an SSO experience when logging into SaaS services, through integration with their Active Directory deployments. Azure Active Directory B2C is a key solution here: it lets SaaS users use their preferred social, enterprise or local account identities to get single sign-on access to the SaaS applications or APIs.

4. Metering & Analytics

Hooks need to be embedded into SaaS infrastructure components so that each customer's consumption of compute resources is correctly metered and priced.

One way to do this is to inject tenant context into infrastructure logging and monitoring. A billing application can then treat these logs as call detail records (CDRs), processing the cost for each tenant and generating detailed individual tenant bills.
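A minimal sketch of the CDR approach, assuming each log record already carries a `tenant` field injected by the infrastructure and that usage is reported in vCPU-seconds and GiB-seconds of memory. The record shape and the unit prices are hypothetical placeholders, not Azure rates.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class UsageRecord:
    """One CDR-like usage record; the tenant context was injected at logging time."""
    tenant: str
    cpu_seconds: Decimal      # vCPU-seconds consumed
    mem_gib_seconds: Decimal  # GiB-seconds of memory held


# Hypothetical unit prices, for illustration only.
PRICE_PER_CPU_SECOND = Decimal("0.00001")
PRICE_PER_GIB_SECOND = Decimal("0.000002")


def bill(records: list[UsageRecord]) -> dict[str, Decimal]:
    """Aggregate usage per tenant and price it."""
    totals: dict[str, Decimal] = defaultdict(Decimal)
    for r in records:
        totals[r.tenant] += (r.cpu_seconds * PRICE_PER_CPU_SECOND
                             + r.mem_gib_seconds * PRICE_PER_GIB_SECOND)
    return dict(totals)


records = [
    UsageRecord("contoso", Decimal(3600), Decimal(7200)),
    UsageRecord("fabrikam", Decimal(1800), Decimal(1800)),
    UsageRecord("contoso", Decimal(600), Decimal(0)),
]
print(bill(records))
```

`Decimal` is used instead of `float` so that summing many small per-record charges does not accumulate binary rounding errors in the invoice.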

Other methods estimate consumption rather than calculate it. These models are less accurate but easier to implement. Some methods SaaS providers can leverage here are:

- Using per-tenant transaction metrics (e.g. the API calls a tenant receives) or the number of SaaS users for each tenant

- Using indicative consumption metrics (e.g. data accumulated for each tenant)

- Leveraging Azure cost analysis with Azure resource tags (e.g. AKS node-pool tags)
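For the estimation approach, one simple sketch is to split a shared cluster's cost across tenants in proportion to an indicative metric such as API calls. The monthly cost and the call counts below are made-up inputs; in practice the cost could come from Azure cost analysis and the counts from API gateway logs. `allocate_shared_cost` is a hypothetical helper.

```python
from decimal import Decimal, ROUND_HALF_UP


def allocate_shared_cost(total_cost: Decimal, usage: dict[str, int]) -> dict[str, Decimal]:
    """Split a shared cost proportionally to each tenant's usage metric (e.g. API calls).

    Amounts are rounded to cents; any rounding remainder is assigned to the
    largest consumer so the allocations always sum to total_cost.
    """
    total_usage = sum(usage.values())
    if total_usage == 0:
        raise ValueError("no usage recorded")
    cent = Decimal("0.01")
    shares = {
        tenant: (total_cost * count / total_usage).quantize(cent, rounding=ROUND_HALF_UP)
        for tenant, count in usage.items()
    }
    remainder = total_cost - sum(shares.values())
    largest = max(usage, key=usage.get)
    shares[largest] += remainder
    return shares


calls = {"contoso": 600_000, "fabrikam": 300_000, "northwind": 100_000}
print(allocate_shared_cost(Decimal("1000.00"), calls))
```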

Azure resource tags can be used for infrastructure components provisioned for individual tenants. As this blog focuses on AKS implementation details for SaaS, the example here is dedicated clusters, where tenant context can be injected in the form of AKS node-pool tags.

We will now dig deeper into tenant isolation, using both native Kubernetes constructs and AKS- and Azure-specific capabilities.

k8s / AKS for multi-tenancy

Multi-tenancy requires tenant isolation.

Tenant isolation requires:

- Control plane isolation

- Data plane isolation

Now let’s cover the K8s and AKS capabilities and constructs that address the control plane and data plane isolation requirements of multi-tenancy.

AKS Control Plane Isolation

k8s namespaces: namespaces provide a mechanism for isolating groups of API resources within a single AKS cluster. They can be used as logical containers to store and separate per-tenant resources on the shared cluster.

Resource quotas: can be used to limit the compute resources and the number of API objects (e.g. pods) that a tenant can create within a namespace. This helps alleviate “noisy neighbour” concerns within a cluster.

Service meshes: a service mesh is an infrastructure layer that handles service-to-service communication between microservices, typically through proxies. There are various service mesh implementations, such as Open Service Mesh, Istio and Linkerd. Service meshes can improve a SaaS infrastructure with the following capabilities:

- Providing observability into container communications

- Providing secure connections

- Traffic management with routing rules, circuit breakers, fault injection, retries and backoff for failed requests.

Below is a further control-plane feature that helps address a specific SaaS infrastructure requirement:

KEDA: fast autoscaling is an important requirement for SaaS infrastructure. It is especially important for B2C SaaS offerings where platform users tend to act in sync in response to certain events, e.g. a crypto exchange that must scale very fast when crypto assets move, or an online marketplace that announces discounts to its users at certain times. AKS support for KEDA lets Kubernetes scale on both external and enhanced internal metrics, which can act as leading indicators of scaling events and so speed up pod autoscaling by the Kubernetes HPA (horizontal pod autoscaler) (KEDA documentation). Scaling ahead based on events has a much greater effect than waiting for the workload to increase and resource consumption to spike before scaling starts. Proper application scaling makes a difference and can often, by itself, mitigate the pitfalls that come with node scaling; the best way to avoid scaling delays is to scale ahead with KEDA.
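Under the hood, KEDA feeds its metrics to a Kubernetes HPA, which computes the replica count. The sketch below reproduces the core rule documented for the HPA, `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, including the default 10% tolerance. It deliberately ignores stabilisation windows, scaling policies and pod readiness handling; the queue-length numbers are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 100,
                     tolerance: float = 0.1) -> int:
    """Core Kubernetes HPA scaling rule (simplified).

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within the tolerance band around 1.0.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


# A queue-length trigger reporting 2,000 pending messages (500 per replica)
# against a hypothetical target of 100 per replica, with 4 replicas running:
print(desired_replicas(4, current_metric=2000 / 4, target_metric=100))  # 20
```

The example shows why a leading-indicator metric helps: the replica count jumps from 4 to 20 in one step, as soon as the queue grows, rather than after CPU load has already spiked.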

For all the fast scaling KEDA can offer for multi-tenancy, at the time of writing KEDA does not support per-tenant (namespace) autoscaling and scales all pods in all namespaces when the scaling metrics are exceeded.

AKS Data Plane Isolation

Network policies: pod-to-pod communication can be controlled with network policies, which restrict traffic based on pod labels, namespace labels or IP address ranges.

QoS: different SLAs for different tenants (freemium vs paid) can be implemented with the Kubernetes (CNI) bandwidth plugin and with pod priority and preemption. For example, when cluster resources are not sufficient to schedule an additional pod for a paid customer, the higher-priority paid pod preempts (evicts) a lower-priority pod belonging to a freemium customer.
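To make the preemption behaviour concrete, here is a deliberately simplified, single-node model: a pending pod may evict strictly lower-priority pods, lowest priority first, until its CPU request fits. The real kube-scheduler also weighs PodDisruptionBudgets, multiple nodes, graceful termination and more; the pod names, priority values and `select_victims` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Pod:
    name: str
    priority: int         # value of the pod's PriorityClass
    cpu_millicores: int   # CPU request


def select_victims(capacity_m: int, running: List[Pod], pending: Pod) -> Optional[List[Pod]]:
    """Return pods to evict so `pending` fits, or None if preemption cannot help."""
    free = capacity_m - sum(p.cpu_millicores for p in running)
    if pending.cpu_millicores <= free:
        return []  # fits without evicting anything
    victims = []
    for pod in sorted(running, key=lambda p: p.priority):
        if pod.priority >= pending.priority:
            break  # never evict pods of equal or higher priority
        victims.append(pod)
        free += pod.cpu_millicores
        if pending.cpu_millicores <= free:
            return victims
    return None


running = [Pod("freemium-a", 0, 1500), Pod("freemium-b", 0, 1000), Pod("paid-a", 1000, 1000)]
victims = select_victims(4000, running, Pod("paid-b", 1000, 1500))
print([p.name for p in victims])  # ['freemium-a']
```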

Kata Containers: containers are processes running on a shared kernel; they mount file systems such as /sys and /proc from the underlying host, which makes them less secure than an application running in a virtual machine with its own kernel. Kata Containers are lightweight VMs (each with a dedicated kernel, using nested virtualisation) that integrate seamlessly with Kubernetes (e.g. for autoscaling or rolling updates). The design goal of Kata Containers is to provide the security benefit of a dedicated kernel without the performance penalty of running a full VM. Kata Containers provide stronger workload isolation by using hardware virtualisation as a second layer of defence, and they enable “confidential computing” use cases on AKS. Although Kata Containers are a heavy-handed approach to data plane isolation, they may be indispensable for workloads in highly regulated industries where isolation requirements are more stringent, and they show great potential for hostile multi-tenancy use cases.
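In Kubernetes, a pod opts into a sandboxed runtime such as Kata Containers through the `runtimeClassName` field of its spec, which refers to a RuntimeClass installed on the cluster. The sketch below sets that field only for tenants on a hypothetical "regulated" tier. The tier names and the helper are illustrative, and the runtime class name is passed in as a parameter because its value depends on how Kata is installed (check the AKS documentation for the name your cluster exposes; `"kata"` below is a placeholder).

```python
import copy


def with_tenant_runtime(pod_manifest: dict, tenant_tier: str,
                        kata_runtime_class: str) -> dict:
    """Return a copy of the pod manifest, sandboxed with Kata for regulated tenants.

    `tenant_tier` values and the runtime class name are assumptions for illustration.
    """
    pod = copy.deepcopy(pod_manifest)  # leave the caller's manifest untouched
    if tenant_tier == "regulated":
        # runtimeClassName selects the RuntimeClass (and so the container runtime) for the pod.
        pod["spec"]["runtimeClassName"] = kata_runtime_class
    return pod


base_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "app", "namespace": "tenant-contoso"},
    "spec": {"containers": [{"name": "app", "image": "nginx"}]},
}
# "kata" is a placeholder RuntimeClass name.
sandboxed = with_tenant_runtime(base_pod, "regulated", kata_runtime_class="kata")
```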

Defender: Microsoft Defender for Containers helps you run your containers securely with data plane hardening, vulnerability assessment and continuous monitoring capabilities.

SaaS Reference Architecture on Azure

Here is the proposed architecture for deploying a multi-tenant SaaS infrastructure on AKS, automated with GitHub Actions. An Azure Function gets the tenant context after registration and uses it to trigger a GitHub Actions workflow through GitHub's REST API. The REST API call can be made from an HTTP-triggered Azure Function; the GitHub Actions jobs it starts then push the tenant-specific configuration to AKS and launch the application pods.
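A minimal sketch of the call the Azure Function could make, assuming the workflow listens for a `repository_dispatch` event (GitHub's `POST /repos/{owner}/{repo}/dispatches` endpoint). It only builds the request with the standard library so that it can be inspected or tested. The owner and repository names, the `tenant-onboarding` event type and the payload fields are hypothetical, and in practice the token would come from a secret store such as Key Vault rather than from code.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"


def build_dispatch_request(owner: str, repo: str, token: str,
                           tenant_id: str, namespace: str) -> urllib.request.Request:
    """Build a repository_dispatch request that starts the tenant onboarding workflow."""
    body = {
        "event_type": "tenant-onboarding",  # hypothetical event name the workflow listens for
        "client_payload": {"tenant_id": tenant_id, "namespace": namespace},
    }
    return urllib.request.Request(
        url=f"{GITHUB_API}/repos/{owner}/{repo}/dispatches",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


req = build_dispatch_request("my-org", "saas-aks-config", "<token>", "contoso", "tenant-contoso")
# Inside the function: urllib.request.urlopen(req); GitHub answers 204 No Content on success.
```

On the workflow side, a trigger such as `on: repository_dispatch: types: [tenant-onboarding]` would receive the payload as `github.event.client_payload`, so each job can read the tenant's namespace from it.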

It is worth mentioning that throttling and traffic shaping at the API Management level can be used to protect the AKS backend. API keys can be bound to usage plans on the API gateway so that users are throttled at the gateway before they cause noisy-neighbour issues within the compute infrastructure.
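Gateways commonly implement this kind of per-key throttling with a token-bucket rate limiter. The sketch below models one bucket per API key to show the idea; it is not API Management's implementation, and the rate and burst values are hypothetical. Time is passed in explicitly so the behaviour is deterministic.

```python
class TokenBucket:
    """Refills at `rate` tokens/s up to `capacity`; each request spends one token."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an idle tenant can burst
        self.last = None        # timestamp of the previous request

    def allow(self, now: float) -> bool:
        if self.last is not None:
            elapsed = now - self.last
            self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # throttled: a gateway would typically answer HTTP 429


class GatewayThrottle:
    """One bucket per API key, so a noisy tenant only exhausts its own budget."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.buckets: dict = {}

    def allow(self, api_key: str, now: float) -> bool:
        if api_key not in self.buckets:
            self.buckets[api_key] = TokenBucket(self.rate, self.capacity)
        return self.buckets[api_key].allow(now)


throttle = GatewayThrottle(rate=1.0, capacity=2)
print([throttle.allow("noisy-tenant", 0.0) for _ in range(3)])  # [True, True, False]
print(throttle.allow("quiet-tenant", 0.0))                      # True
```

Because each key has its own bucket, the third request from the noisy tenant is rejected at the gateway while the quiet tenant is unaffected, which is exactly the noisy-neighbour protection described above.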

Deploying a cloud-native SaaS Application on Azure AKS with GitHub Actions

We will first launch the AKS cluster and apply the cluster-wide (“global”) configuration. Once the cluster is up and running, we will push the “per-tenant configuration”, described below, to the cluster.

To store container images we need to create an Azure Container Registry (ACR). Since all SaaS tenants need to run the same version of the software, we would not expect the registry to hold separate per-tenant image versions. As in standard DevOps deployments, the development team pushes versioned images to ACR and uses CI/CD to deploy them onto AKS.

Configuration Summary

AKS SaaS cluster Configuration

Below are the required configurations for the AKS cluster.

- Enable Azure CNI for advanced networking (Azure network policies require the Azure CNI plugin).

- Enable network policy. The network policy feature can only be enabled when the cluster is created, so it is important not to skip this step.

- Enable Azure Policy.

- Enable the Secrets Store CSI driver (secret stores are then created per tenant).

```shell
# Merge the cluster's credentials into the local kubeconfig so kubectl can reach it
az aks get-credentials \
  --resource-group aksbookrg01 \
  --name aks-saas-cluster
```

- Enable “Defender for Containers” for the cluster

Defender for Containers helps secure the infrastructure in three core areas:

- Environment hardening: every request to the Kubernetes API server is monitored against a predefined set of best practices.

- Vulnerability assessment: images in ACR are scanned for vulnerabilities.

- Run-time threat protection for nodes and clusters: suspicious activity in cluster nodes is detected.

- Enable KEDA: KEDA is enabled for the whole cluster as an AKS add-on. While the add-on is in preview, first register the preview feature with the following Azure CLI commands:

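```shell
# Install the aks-preview Azure CLI extension
az extension add --name aks-preview
# Register the KEDA preview feature flag
az feature register --namespace "Microsoft.ContainerService" --name "AKS-KedaPreview"
# Check the registration state (wait until it reports "Registered")
az feature show --namespace "Microsoft.ContainerService" --name "AKS-KedaPreview"
# Refresh the resource provider registration so the feature takes effect
az provider register --namespace Microsoft.ContainerService
```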

Then enable the add-on with an Azure Resource Manager template by setting the workloadAutoScalerProfile field of the managed cluster:

```json
"workloadAutoScalerProfile": {
  "keda": {
    "enabled": true
  }
}
```

Per tenant AKS configuration

- Separate namespace per tenant

When you host multiple tenant applications on a single AKS cluster, each in its own namespace, each workload should use a different Kubernetes service account and credentials to access downstream Azure services. Service account credentials are stored as Kubernetes secrets.

- Implement k8s resource quotas per tenant

Resource quotas are enforced at the namespace level. Monitor resource usage and adjust each tenant's (i.e. namespace's) quota as needed.

Resource quotas can be used to set limits for:

- Compute resources: e.g. CPU, memory or GPU

- Storage resources: the total number of volumes or the amount of disk space

- Object count: the maximum number of secrets, services or jobs that can be created

For example, the manifest below limits a namespace to 6 vCPUs, 20 GiB of memory and at most 3 pods:

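```yaml
# dev-app-team-quotas.yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: dev-app-team
spec:
  hard:
    cpu: "6"       # sum of CPU requests across the namespace
    memory: 20Gi   # sum of memory requests across the namespace
    pods: "3"      # maximum number of pods
```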

The resource quota is applied with:

```shell
kubectl apply -f dev-app-team-quotas.yaml --namespace dev-apps
```

When a compute resource quota is configured for a namespace, every pod created in it must specify requests or limits for the constrained resources (or receive defaults from a LimitRange); otherwise the API server rejects the pod.
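To illustrate why pods without resource settings are rejected, here is a simplified model of the quota admission check for the `dev-app-team` quota above (6 CPUs, 20Gi memory, 3 pods). It handles only plain, millicore (`500m`), `Mi` and `Gi` quantities, and it treats a container's limit as its request when only the limit is set, mirroring Kubernetes defaulting. The real admission controller covers many more cases; `admission_error` is a hypothetical helper.

```python
from fractions import Fraction
from typing import Optional

# Mirrors the dev-app-team ResourceQuota: cpu "6", memory 20Gi, pods "3".
QUOTA = {"cpu": Fraction(6), "memory": Fraction(20 * 1024**3), "pods": 3}
SUFFIXES = {"m": Fraction(1, 1000), "Mi": Fraction(1024**2), "Gi": Fraction(1024**3)}


def parse_quantity(q: str) -> Fraction:
    """Parse a small subset of Kubernetes quantities: '2', '500m', '512Mi', '1Gi'."""
    for suffix, factor in SUFFIXES.items():
        if q.endswith(suffix):
            return Fraction(q[: -len(suffix)]) * factor
    return Fraction(q)


def admission_error(pod: dict, used: dict) -> Optional[str]:
    """Return why the pod would be rejected by the quota, or None if it is admitted."""
    req = {"cpu": Fraction(0), "memory": Fraction(0)}
    for c in pod["spec"]["containers"]:
        res = c.get("resources", {})
        # If only limits are set, Kubernetes defaults the requests to the limits.
        effective = {**res.get("limits", {}), **res.get("requests", {})}
        for name in req:
            if name not in effective:
                return f"container {c['name']} sets no {name} request or limit"
            req[name] += parse_quantity(effective[name])
    if used["pods"] + 1 > QUOTA["pods"]:
        return "pod count quota exceeded"
    for name in req:
        if used[name] + req[name] > QUOTA[name]:
            return f"{name} quota exceeded"
    return None
```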

- Implement per tenant network policies

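With network policies you define, in a .yaml manifest, rules for the traffic that pods may send and receive, and apply them to the pods that match one or more label selectors, for example to confine traffic to the same namespace. The rules are additive allow-lists rather than an ordered list: once a pod is selected by a policy, only traffic allowed by some policy reaches it. The example below allows only pods labelled app: client to reach pods labelled app: server on TCP port 80 in the demo namespace.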

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: demo-policy
  namespace: demo
spec:
  podSelector:
    matchLabels:
      app: server
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: client
      ports:
        - port: 80
          protocol: TCP
```
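To make the semantics of `demo-policy` concrete, the sketch below evaluates a simplified version of NetworkPolicy ingress rules for two pods in the same namespace: a pod selected by no policy accepts all traffic, while a selected pod accepts only traffic matching at least one ingress rule. It assumes all policies are ingress policies and ignores namespaceSelector, ipBlock, named ports and egress. The function names are hypothetical and the policy is the manifest above transcribed as a Python dict.

```python
def matches(selector: dict, labels: dict) -> bool:
    """matchLabels semantics: every key/value in the selector must be on the pod ({} matches all)."""
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def ingress_allowed(policies: list, src_labels: dict, dst_labels: dict,
                    port: int, protocol: str = "TCP") -> bool:
    """Simplified ingress check for two pods in the same namespace (numeric ports only)."""
    selecting = [p for p in policies if matches(p["spec"]["podSelector"], dst_labels)]
    if not selecting:
        return True  # a pod selected by no policy is non-isolated: all ingress allowed
    for policy in selecting:
        for rule in policy["spec"].get("ingress", []):
            peers = rule.get("from", [])
            ports = rule.get("ports", [])
            # An empty or missing "from"/"ports" list matches all sources/ports.
            peer_ok = not peers or any(
                "podSelector" in peer and matches(peer["podSelector"], src_labels)
                for peer in peers)
            port_ok = not ports or any(
                p.get("port") == port and p.get("protocol", "TCP") == protocol
                for p in ports)
            if peer_ok and port_ok:
                return True  # rules are additive: one matching rule is enough
    return False


# demo-policy from the manifest above, as a dict.
DEMO_POLICY = {
    "metadata": {"name": "demo-policy", "namespace": "demo"},
    "spec": {
        "podSelector": {"matchLabels": {"app": "server"}},
        "ingress": [{
            "from": [{"podSelector": {"matchLabels": {"app": "client"}}}],
            "ports": [{"port": 80, "protocol": "TCP"}],
        }],
    },
}
```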

Deploying a Sample SaaS Application to AKS

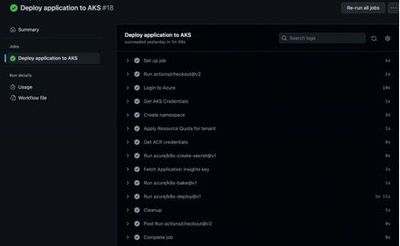

For configuration automation we will use GitHub Actions.

Once the cluster is up and running, tenants can be onboarded to the cluster through the registration process in the portal.

Tenant-specific configuration is automated with GitHub Actions, triggered by an Azure Function.

For GitHub Actions to push tenant-specific configuration, we need to connect GitHub Actions to Azure through Azure AD so that it can access the required services (e.g. AKS) for deployments.

This requires:

- Configuring a service principal for GitHub Actions and defining its scope

- Adding the principal's credentials to GitHub Actions as secrets

- Adding a “login” workflow step that logs GitHub Actions in to Azure

- Adding workflow steps so that you can use kubectl with GitHub Actions, as explained here.
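`az ad sp create-for-rbac` prints the new service principal's `appId`, `password` and `tenant`, while the `creds` input of the `azure/login` action expects a JSON secret with `clientId`, `clientSecret`, `subscriptionId` and `tenantId`. The hypothetical helper below sketches that mapping so the result can be stored as a GitHub secret (for example one named `AZURE_CREDENTIALS`, a common but arbitrary choice); all sample values are placeholders.

```python
import json

REQUIRED = ("appId", "password", "tenant")


def azure_login_creds(sp_output: str, subscription_id: str) -> str:
    """Convert `az ad sp create-for-rbac` JSON output into the azure/login `creds` format."""
    sp = json.loads(sp_output)
    missing = [k for k in REQUIRED if k not in sp]
    if missing:
        raise KeyError(f"service principal output lacks {missing}")
    return json.dumps({
        "clientId": sp["appId"],
        "clientSecret": sp["password"],
        "subscriptionId": subscription_id,
        "tenantId": sp["tenant"],
    }, indent=2)


sample = ('{"appId": "<app-id>", "displayName": "gh-saas-aks", '
          '"password": "<secret>", "tenant": "<tenant-id>"}')
print(azure_login_creds(sample, "<subscription-id>"))
```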

Configured with the correct access rights, GitHub Actions first creates the cluster and pushes the configuration that requires cluster-level scope.

Once the cluster is up and running, another GitHub Actions workflow, triggered either manually or by an Azure Function, pushes the tenant-specific configuration to the AKS cluster. The configuration will:

- Create an AKS namespace with kubectl embedded in a GitHub Actions job

- Configure resource quotas for the namespace

- Implement per-tenant network policies for tenant pods

- Create a secret store per tenant

- Fetch ACR credentials per tenant

- …and finally deploy the application instance pods for the tenant.
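The namespace, quota and network policy steps above, plus the per-tenant service account mentioned earlier, can be sketched as a single manifest that a workflow job pipes into `kubectl apply -f -`. kubectl accepts JSON as well as YAML, and a `v1` `List` wraps several objects, so the sketch builds the objects as Python dicts and prints JSON. The quota values reuse the earlier example; the network policy admits ingress only from pods in the same namespace. The `tenant` label key and the object names are hypothetical.

```python
import json


def tenant_manifests(namespace: str, cpu: str = "6", memory: str = "20Gi", pods: str = "3") -> dict:
    """Build the per-tenant objects as one kubectl-applicable v1 List."""
    items = [
        {"apiVersion": "v1", "kind": "Namespace",
         "metadata": {"name": namespace, "labels": {"tenant": namespace}}},
        {"apiVersion": "v1", "kind": "ServiceAccount",
         "metadata": {"name": "tenant-workload", "namespace": namespace}},
        {"apiVersion": "v1", "kind": "ResourceQuota",
         "metadata": {"name": "tenant-quota", "namespace": namespace},
         "spec": {"hard": {"cpu": cpu, "memory": memory, "pods": pods}}},
        {"apiVersion": "networking.k8s.io/v1", "kind": "NetworkPolicy",
         "metadata": {"name": "same-namespace-only", "namespace": namespace},
         # Select every pod in the namespace; allow ingress only from pods in this namespace.
         "spec": {"podSelector": {}, "policyTypes": ["Ingress"],
                  "ingress": [{"from": [{"podSelector": {}}]}]}},
    ]
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    # e.g. python tenant_manifests.py | kubectl apply -f -
    print(json.dumps(tenant_manifests("tenant-contoso"), indent=2))
```

The Namespace is listed first so that it exists before kubectl applies the namespaced objects that follow it.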

You can clone the code from the GitHub repository: https://github.com/torosgo/saas-aks

Authors

Ozgur Guler, Partner CSA Digital Natives

Toros Gokkurt, Partner CSA App Innovation

References:

Kubernetes multi-tenancy documentation

Architect multitenant solutions on Azure

Azure Kubernetes Service (AKS) considerations for multitenancy

Kata Containers: An introduction and overview
