Processing Millions of Events using Databricks Delta Live Tables, Azure Event Hubs and Power BI

This post is authored by Patrick Kragthorpe-Shirley (RBA) and Frank Munz (Databricks). RBA Inc is a digital and technology consultancy and is a Microsoft Solutions Partner and Databricks Partner. More about the authors at the end of this article.

As the Director of Software Engineering and Data at RBA, Patrick continually looks for ways to enhance our data and analytics offerings. Recently, he explored the capabilities of the Databricks Data Intelligence Platform to understand its potential for his organization and his clients. His immediate goal was to build an application that uses Databricks' real-time data streaming capabilities and its integration with Azure, and to present it as a showcase of Azure Databricks at an upcoming company meeting.

In this blog post, he will take us on the journey through the creation of the application using live data. How did he approach this? Let's dive into the technical specifics!

Inspiration

A colleague who attended the Data+AI Summit (DAIS) in San Francisco in the summer of 2023 brought to my attention an engaging demo by Frank Munz from Databricks. Frank created a POC of a distributed crowd-sourced seismograph. He captured accelerometer data from 150 mobile phones from attendees in the audience and streamed a remarkable volume of 60 million events per day with Delta Live Tables (DLT) and Databricks Workflows. All the data engineering was running on serverless compute (which was in preview at the time of this writing) and scaled without any configuration necessary.

To visualize the high throughput, Frank used Spark Structured Streaming for real-time analysis in a Databricks notebook. He computed the average acceleration across all participants' phones on the fly over 2-second sliding windows. The output was then displayed in real time as a moving bar graph.
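The 2-second sliding-window average can be illustrated with a small plain-Python sketch. This is not Frank's notebook code, which uses Spark Structured Streaming; it only mimics the windowing logic. The 1-second slide interval and the `(timestamp, magnitude)` event shape are assumptions, since the post does not state them.

```python
from collections import defaultdict
from statistics import fmean

WINDOW_S = 2  # window length in seconds (from the demo description)
SLIDE_S = 1   # slide interval in seconds (assumed; not stated in the post)


def sliding_window_averages(events, window_s=WINDOW_S, slide_s=SLIDE_S):
    """Average magnitude per sliding window.

    events: iterable of (timestamp_seconds, magnitude) tuples.
    Returns {window_start: mean magnitude} for windows
    [window_start, window_start + window_s).
    """
    buckets = defaultdict(list)
    for ts, magnitude in events:
        # Latest window start (a multiple of slide_s) at or before ts
        start = int(ts // slide_s) * slide_s
        # Walk back through every earlier window that still contains ts
        while start > ts - window_s:
            buckets[start].append(magnitude)
            start -= slide_s
    return {start: fmean(values) for start, values in sorted(buckets.items())}


# Three hypothetical readings; each one lands in two overlapping windows
result = sliding_window_averages([(10.2, 1.0), (10.8, 3.0), (11.5, 5.0)])
print(result)
```

Because the windows overlap, every reading contributes to more than one average, which is what makes the resulting bar graph move smoothly rather than jump once per window.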

The fun part was the gamification element. Once the app was running, Frank split attendees into two groups and let them compete against each other to see who could simulate a larger earthquake.

What is Delta Live Tables?

To give you a bit more context if you are new to Databricks: Delta Live Tables is an ETL tool to easily create low-cost ETL pipelines in SQL (or Python). You can write Python/SQL code and DLT manages all the operational tasks such as cluster management, upgrades, error handling, retries, data quality, and observability.

Data pipelines in DLT are composed of Streaming Tables and Materialized Views (MVs). Streaming Tables incrementally ingest data exactly-once from any source. Materialized Views, typically used for complex transformations, store the result of a precomputed query and are refreshed periodically (pretty much like what you might remember from the old days with databases such as Oracle).

Both Streaming Tables and Materialized Views can be queried just like any other Delta table. Additionally, they work with the Databricks SQL editor, which offers features like syntax highlighting, code completion, and Assistant-generated SQL code.

A Rough Idea

After reviewing Frank's DAIS session, I recognized the potential for a similar demo that we could adapt for our use. I embraced the challenge of translating Frank's application that was running Databricks on AWS to our stack on Microsoft Azure.

This entailed using Azure Databricks (a trial setup was sufficient for our exploration), switching from AWS Kinesis to Azure Event Hubs, and hosting the data-producing web app on Azure Static Web Apps.

This approach not only maintained the serverless architecture of Frank's original demo but also leveraged our expertise as a Microsoft Solutions partner. Additionally, I aimed to enhance the demo by incorporating real-time data visualization with Power BI.

Below, I outline the key steps taken to realize this project.

Putting it all Together: Streaming Data with Delta Live Tables on Azure

Step 1: Creating the Infrastructure: Azure Resources and Databricks Integration

To set up the environment, it was important to me to script the deployment of the Azure resources. The key components were Azure Databricks and Azure Event Hubs, which handles the data streaming. After exploring various ingestion methods for the Delta Live Tables pipeline in Databricks, I opted to replace Frank's Amazon Kinesis implementation with Azure Event Hubs, using its Kafka-compatible endpoint. This meant selecting at least the Standard tier for the Event Hubs deployment, because the Basic tier does not provide the Kafka endpoint.

Step 2: Developing the Web Application: Leveraging React and Azure Static Web App

The original static web application that captured the accelerometer data to generate events was a single page web application (SPA) hosted on Amazon S3. This is an elegant, low maintenance, low cost, and scalable approach.

I wanted to produce the same results with a more modern JavaScript framework, so I built the web application with React and used Webpack to bundle it. I deployed it to Azure Static Web Apps through an Azure DevOps pipeline.

Once I had created the repo and pipeline, I needed to solve the problem of writing events to Event Hubs. Importing the @azure/event-hubs module into the React application, along with the @azure/identity module, made it easy to produce events on the appropriate event hub in Azure.

Step 3: Migration from Kinesis to Azure Event Hubs

Frank's original pipeline was straightforward and easy to understand. It consumes the events, creates a structured object from the JSON event data, and stores it in a Delta table. To repurpose Frank's demo, all I had to do was change the original Amazon Kinesis endpoint to a Kafka / Azure Event Hubs endpoint, which was also fairly straightforward.

Below I list the changes I had to make. All of these settings are specific to either Kinesis or the flavor of the Kafka service, and I had to provide them in DLT for authentication. The core DLT logic did not require a single change.

I started with Frank's version for Amazon Kinesis, which looked as follows:

    # read AWS Kinesis STREAM
    myStream = (spark.readStream
        .format("kinesis")
        .option("streamName", STREAM)
        .option("region", "us-east-1")
        .option("awsAccessKey", awsKey)
        .option("awsSecretKey", awsSecretKey)
        .load()
    )

I modified that to work with Azure Event Hubs and Kafka as follows:

    # Event Hubs configuration
    # Kafka Consumer configuration
    KAFKA_OPTIONS = {
        "kafka.bootstrap.servers" : f"{EH_NAMESPACE}.servicebus.windows.net:9093",
        "subscribe" : EH_NAME,
        "kafka.sasl.mechanism" : "PLAIN",
        "kafka.security.protocol" : "SASL_SSL",
        "kafka.sasl.jaas.config" : f"kafkashaded.org.apache.kafka.common.security.plain.PlainLoginModule required username=\"$ConnectionString\" password=\"{EH_CONN_STR}\";",
        "kafka.request.timeout.ms" : 30000,
        "kafka.session.timeout.ms" : 45000,
        "maxOffsetsPerTrigger" : 10000,
        "failOnDataLoss" : False,
        "startingOffsets" : "latest"
    }

    # read the Event Hubs stream through its Kafka endpoint
    myStream = (spark.readStream
        .format("kafka")
        .options(**KAFKA_OPTIONS)
        .load()
    )
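The authentication settings are the part that is easiest to get wrong, so here is a minimal sketch of a helper that builds them. It uses only the option names from the configuration above; the function name and the sample values are hypothetical. Note that the SASL username is the literal string `$ConnectionString`, while the password is the actual Event Hubs connection string.

```python
def eventhubs_kafka_options(namespace: str, event_hub: str, connection_string: str) -> dict:
    """Build the Kafka source options needed to authenticate against Event Hubs."""
    jaas = (
        # The "kafkashaded." prefix is required on Databricks runtimes
        "kafkashaded.org.apache.kafka.common.security.plain.PlainLoginModule "
        # The username is a fixed literal, not a placeholder to fill in
        'required username="$ConnectionString" '
        f'password="{connection_string}";'
    )
    return {
        "kafka.bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "subscribe": event_hub,
        "kafka.sasl.mechanism": "PLAIN",
        "kafka.security.protocol": "SASL_SSL",
        "kafka.sasl.jaas.config": jaas,
    }


# Hypothetical values for illustration only; never hard-code a real key
options = eventhubs_kafka_options(
    "my-namespace",
    "motion-events",
    "Endpoint=sb://example/;SharedAccessKeyName=demo;SharedAccessKey=not-a-real-key",
)
print(options["kafka.bootstrap.servers"])
```

In a real pipeline the connection string would typically come from a secret store rather than from source code.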

During the conversion, I faced a challenge with the encoding of the JSON events. The events arrived base64 encoded, so when we parsed them into structured data, they appeared as encoded strings. This was because writing data into Event Hubs automatically converted the byte data into a base64 string.

To address this, we added a decoding step to our data pipeline, using unbase64(…) to decode the data, as demonstrated below.

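    from pyspark.sql.functions import col, from_json, unbase64

    # decode the base64 payload, then parse the JSON into a struct column
    decoded = myStream.select(
        from_json(unbase64(col("value")).cast("string"), motion_schema).alias("motion"))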
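The effect of the decoding step can be reproduced outside Spark with the Python standard library. This sketch is only an illustration of the base64-then-JSON round trip; the event fields are hypothetical and are not the demo's actual schema.

```python
import base64
import json


def decode_event(value: bytes) -> dict:
    """Decode a base64-wrapped JSON payload into a dict."""
    raw = base64.b64decode(value)            # undo the base64 wrapping
    return json.loads(raw.decode("utf-8"))   # parse the JSON text


# Hypothetical event; field names are illustrative only
original = {"device": "phone-1", "x": 0.1, "y": -0.2, "z": 9.8}

# What the consumer sees on the wire: base64 text, not readable JSON
wire_value = base64.b64encode(json.dumps(original).encode("utf-8"))

decoded = decode_event(wire_value)
print(decoded)
```

Parsing `wire_value` directly as JSON would fail, which matches the symptom described above: the payload only becomes structured data after the base64 layer is removed.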

Step 4: Data Pipeline: Databricks Delta Live Tables for Event Ingestion

Once events were being generated and pushed to Azure Event Hubs, the next step was to ingest those events with a DLT pipeline.

DLT itself doesn't need any compute configuration and spins up its own cluster. There is a serverless feature in preview now. For the Spark Streaming notebook running the visualization, I went with the preconfigured personal compute.

Then, I connected my code repository, which let me select the Python notebook for the DLT data pipeline through the DLT settings wizard. A single Python file does all the work of ingesting the data into Databricks and structuring it for the visualizations. Pipelines can span multiple notebooks if required.

Once the pipeline was running, I could see the number of records ingested per table.

While Frank used Python for DLT development because of the Kinesis ingestion, I learned that this can now be coded entirely in SQL. The read_kafka() SQL function consumes data from a Kafka topic so that it can be written to a table for querying.

Step 5: Making Sure it Makes Sense

The first question I asked myself here was: it can't really be that easy, can it? To verify that the data was coming through, I checked the auto-derived schema, the data quality, and the number of records dropped in the DLT pipeline under Data Quality, and only then ran the Spark Streaming notebook for visualization.

Step 6: Visualization

Despite migrating the whole app from AWS to Azure and from Kinesis to Kafka, the visualization notebook using Spark Streaming worked as is, since it displays the streaming of processed incoming events from an open format Delta table in a simple bar chart.

Feeling a sense of accomplishment in getting an application that fascinated 150 attendees at the Data + AI Summit converted to Azure, I thought it would be cool to add a Power BI dashboard to the mix to render the data outside of Databricks.

Step 7: Adding Real-Time Visualization with Power BI

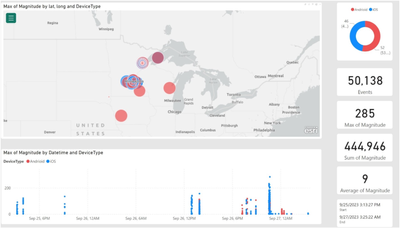

RBA has offices all over the US, and since my idea was to demonstrate the Databricks application at a company meeting, I wanted to take the demo one step further. I added GPS location and mobile device type data to the stream to take advantage of Power BI's map visualization. With this data, I was able to tell the device types apart and run an iOS vs Android shake-off competition in the demo.

It took only about 30 minutes to add the data to the stream in the web app and to update the structure in the data pipeline to receive the additional fields. Once the data was in the catalog, we built a Power BI report on top of it with auto-refresh, so that it showed the data as it streamed live into the system.

Conclusion

In conclusion, delving into the world of Databricks Delta Live Tables for our serverless data streaming application has been an exhilarating journey. Crafting a real application with live data that captivated our audience and opened new doors of exploration has been truly rewarding.

Running my application across various locations in the US for our company event demonstrated the efficiency of Delta Live Tables in processing large-scale, continuous data streams at low latencies.

As a first-timer interfacing with Databricks and its Data Intelligence Platform, I was pleasantly surprised by the ease with which we seamlessly connected Delta Live Tables to our data streams. The flexibility to make changes on the fly, adding additional data points, and enriching the analytical experience, showcased the agility and power the Databricks Data Intelligence Platform brings to the table.

We experienced hands-on how Databricks runs on multiple cloud platforms where it supports all kinds of data ingestion from message buses and cloud object stores.

Unlike cloud-specific solutions, which are often challenging to work with, the Databricks Lakehouse implements the same single, simple, and seamless governance model across all clouds. Unity Catalog works for all objects such as tables, functions, volumes, data pipelines and AI models.

Most of my efforts here were about changing the AWS-specific Kinesis streams to the de-facto Apache Kafka standard. After this POC, we are ready to explore the new features of the Data Intelligence Platform such as Databricks Assistant for configuration and code migration and discover the possibilities it holds for our data-driven initiatives.

This endeavor has sparked a curiosity in my team beyond this particular project. The fun we had generating an engaging, real-time demo has me looking forward to further collaboration with Databricks.

Additional Resources

I found the following resources useful while writing this article.

- A condensed version of Frank's original demonstration from DAIS 2023 in San Francisco can be found in the Databricks Demo Center, featuring a making-of. I recommend watching it, especially to see the DAIS audience's enthusiastic response as they simulate a minor earthquake by vigorously shaking their mobile phones: The Real-Time Serverless Lakehouse.

- The Databricks Demo Center also hosts a simple click-through Delta Live Tables product tour that lets you explore DLT from your browser, without signing up for any trial or creating a workspace.

- Frank's demo included Databricks Workflows. For an overview of orchestration, I recommend watching the demo about using Workflows with LLMs such as OpenAI.

About the authors

Patrick Kragthorpe-Shirley is a Director of Software and Data Engineering for RBA Inc., where he brings his passion for technology to modern software and data solutions. RBA Inc, a digital and technology consultancy, is a Microsoft Solutions Partner and Databricks Partner.

Frank Munz works as Principal TMM at Databricks where he brings the magic to Databricks' Data and AI products, pioneering practical solutions for practitioners.
