Multi-agent frameworks are the next evolution of single-agent chatbots and RAG (Retrieval-Augmented Generation) applications. Autogen is currently one of the most popular frameworks for multi-agent use cases. Promptflow is a tool that simplifies building, evaluating, and deploying Large Language Model (LLM) applications. This blog will guide you through building Autogen workflows with Promptflow.

What is Autogen?

AutoGen is a framework developed by Microsoft Research that simplifies the orchestration, optimization, and automation of workflows for large language models (LLMs). It enables complex LLM-based applications by allowing multi-agent conversations. Unlike traditional non-agentic or single-agent workflows, which operate under rigid, predefined responses, agentic workflows allow AI to engage in dynamic, iterative processes that mirror human cognitive practices. This paradigm shift lets AI undertake complex tasks in greater depth, improving both the quality of its output and its ability to tackle complex problems. Refer to the Autogen GitHub page for the latest information.

What is Promptflow?

Promptflow is a development tool designed to streamline the entire development cycle of AI applications powered by Large Language Models (LLMs). It simplifies prototyping, experimenting with, iterating on, and deploying your AI applications. Refer to the Microsoft documentation page for more information.

Why use Autogen with Promptflow:

When building LLM applications, there are several other factors to consider for production deployment, e.g. evaluation, running tests in batch mode, collecting and analysing test results, and deployment with appropriate monitoring and autoscaling. To address these requirements and organise the pipeline around LLMOps concepts, we will develop an Autogen workflow using Promptflow. In this blog, we will focus on build and deploy. Refer to the online documentation for further details on evaluation, monitoring, and deployment.

Problem Summary:

We will take an existing example, Solving Complex Tasks with Nested Chats using Autogen, and demonstrate how to deploy it using Promptflow. The primary goal of this workflow is to improve the quality of the LLM response by adding agents that give feedback on the original response. In short, `user_proxy` takes the input question, a `writer` agent answers it, and a `critic` agent iteratively provides feedback to the `writer` agent, helping it improve and refine its answer.
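To make the message flow concrete, here is a toy sketch of the write, critique, refine loop in plain Python. The `toy_writer` and `toy_critic` functions are hypothetical stand-ins for LLM-backed agents, and `refine_with_feedback` is an illustrative name rather than anything from Autogen; the real workflow delegates this loop to Autogen's nested chats.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical stand-ins: in the real workflow these are LLM-backed Autogen agents.
Writer = Callable[[str, Optional[str]], str]  # (question, feedback) -> draft
Critic = Callable[[str], str]                 # (draft) -> feedback


def refine_with_feedback(
    question: str, writer: Writer, critic: Critic, rounds: int = 1
) -> Tuple[str, List[str]]:
    """Return the final draft and the critiques collected along the way."""
    draft = writer(question, None)          # first answer, no feedback yet
    critiques: List[str] = []
    for _ in range(rounds):
        feedback = critic(draft)            # critic reviews the latest draft
        critiques.append(feedback)
        draft = writer(question, feedback)  # writer revises using the critique
    return draft, critiques


def toy_writer(question: str, feedback: Optional[str]) -> str:
    if feedback is None:
        return f"Draft answer to: {question}"
    return f"Revised answer to: {question} (addressed: {feedback})"


def toy_critic(draft: str) -> str:
    return "add a concrete example"


final, notes = refine_with_feedback("What is Promptflow?", toy_writer, toy_critic)
print(final)
```

Each extra round costs one critic call and one writer call, which is why a multi-agent answer usually takes noticeably longer than a single-agent one.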

Step 1: Build chat flow

1. Set up your environment and ensure all the dependencies are installed. Follow the Quick Start Guide for a detailed walkthrough.

2. Create a new Promptflow chat flow workspace. In this example, we will use Visual Studio Code (VSCode) for development:

pf flow init --flow pf-autogen --type chat

cd pf-autogen/

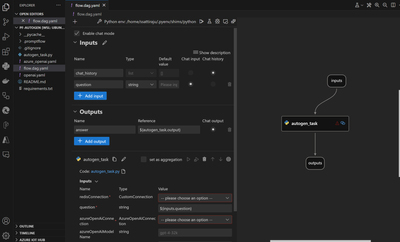

3. The default Promptflow chat flow includes `Input`, `Chat`, and `Output` steps. We will replace the `Chat` tool with the `Python` tool and name it `autogen_task`. Create Azure OpenAI and Redis resources, register them as connections, and update `autogen_task` with the input question and the created connections.

4. Promptflow allows secure registration and usage of connections. Here's an example of how to use Azure OpenAI or any other custom connection in your workflow:

    from promptflow.connections import AzureOpenAIConnection, CustomConnection
    from promptflow.core import tool


    @tool
    def my_python_tool(
        redisConnection: CustomConnection,
        question: str,
        azureOpenAiConnection: AzureOpenAIConnection,
        azureOpenAiModelName: str = "gpt-4-32k",
        autogen_workflow_id: int = 1,
    ) -> str:
        # Placeholder body; the Autogen workflow is wired in during the next step.
        print("Hello Promptflow")

After completing these steps, your Promptflow should look like this:

Click on the dropdown and select the connections created earlier.

Step 2: Create Autogen workflow

1. Create a Python file, `agentchat_nestedchat.py`, that defines the `AgNestedChat` class. This class initializes the required agents (a writer, a user proxy, and a critic), each with its own role. Each agent is registered with a reply function that updates Redis with the sender's name, the recipient's name, and the messages.

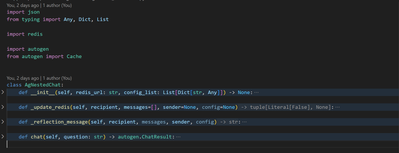

Here's a brief look at the structure of the `AgNestedChat` class:

- `__init__`: This is the constructor method that initializes the class. It sets up connections to Redis, creates a Redis cache, and initializes three agents: a writer, a user proxy, and a critic. Each agent is registered with a reply function that updates Redis with the sender's name, the recipient's name, and the messages.

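- `_update_redis`: This method is used to publish a message to Redis. It takes the recipient, messages, sender, and config as arguments, and returns the tuple `(False, None)`, which tells Autogen that this is not a final reply, so the agent's remaining reply functions still run.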

- `_reflection_message`: This method generates a reflection message. It takes the recipient, messages, sender, and config as arguments, and returns a string that prompts the recipient to reflect and provide critique on the last message from the sender.

- `chat`: This method registers nested chats for the user proxy agent and initiates a chat with the writer agent. It takes a question as an argument and returns the result of the nested chat.

Example structure of `AgNestedChat` class:
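As a rough illustration of the two callback methods described above, here is a dependency-free sketch. It is not the author's implementation: the channel name, the JSON payload keys, and the `make_update_redis` helper are assumptions made for this example, and the publisher is injected so the code runs without a Redis server. Reading the critique target from `messages[-1]` is also a simplification.

```python
import json
from types import SimpleNamespace
from typing import Any, Callable, List, Tuple

# Hypothetical channel name; the workflow described here does not specify one.
CHANNEL = "autogen-chat"


def make_update_redis(publish: Callable[[str, str], Any]):
    """Build a reply function that publishes each exchange and then steps aside.

    `publish` is injected so the sketch runs offline; with redis-py it could be
    the `publish` method of a `redis.Redis` client.
    """

    def _update_redis(recipient, messages=None, sender=None, config=None) -> Tuple[bool, None]:
        payload = {
            "sender": sender.name,
            "receiver": recipient.name,
            "messages": messages or [],
        }
        publish(CHANNEL, json.dumps(payload))
        # (False, None): not a final reply, so the next reply function still runs.
        return False, None

    return _update_redis


def reflection_message(recipient, messages, sender, config) -> str:
    """Ask the recipient (the critic) to critique the latest message."""
    last = messages[-1]["content"]
    return f"Reflect and provide critique on the following writing.\n\n{last}"


# Stand-in agents: only the `name` attribute is needed for this sketch.
published: List[Tuple[str, str]] = []
reply = make_update_redis(lambda channel, data: published.append((channel, data)))
writer = SimpleNamespace(name="writer")
user_proxy = SimpleNamespace(name="user_proxy")
critic = SimpleNamespace(name="critic")
msgs = [{"role": "user", "content": "First draft"}]

result = reply(user_proxy, messages=msgs, sender=writer)
prompt = reflection_message(critic, msgs, user_proxy, None)
print(result, published[0][0])
```

In Autogen itself, a function with this signature would typically be attached to each agent through `register_reply`, and the reflection function would be supplied as the `message` of a nested chat entry.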

2. Update `autogen_task` to import the `AgNestedChat` class and send the input question to the nested chat.

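    from agentchat_nestedchat import AgNestedChat
    from promptflow.connections import AzureOpenAIConnection, CustomConnection
    from promptflow.core import tool


    @tool
    def my_python_tool(
        redisConnection: CustomConnection,
        question: str,
        azureOpenAiConnection: AzureOpenAIConnection,
        azureOpenAiModelName: str = "gpt-4-32k",
    ) -> str:
        # Build the Autogen LLM config from the registered Azure OpenAI connection.
        OAI_CONFIG_LIST = [
            {
                "model": azureOpenAiModelName,
                "api_key": azureOpenAiConnection.api_key,
                "base_url": azureOpenAiConnection.api_base,
                "api_type": "azure",
                "api_version": azureOpenAiConnection.api_version,
            }
        ]
        # Assumption: the custom connection stores the Redis URL under a secret named "redis_url".
        redis_url = redisConnection.secrets["redis_url"]

        ag_workflow = AgNestedChat(config_list=OAI_CONFIG_LIST, redis_url=redis_url)
        res = ag_workflow.chat(question=question)
        return res.summary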

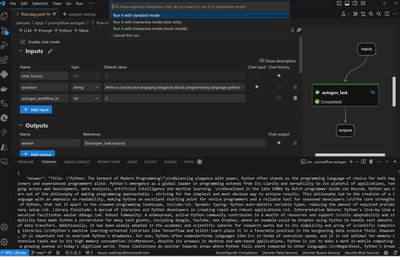

3. Enter an input question and select `Run it with standard mode` in the VSCode UI to execute the flow and see the results.

Step 3: Deployment

NOTE: For production deployments, Autogen tasks may take some time to return results. You might want to consider streaming interim responses (available in Redis) back to the user. Stay tuned for another blog post on how to do this.
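As a rough idea of what the consuming side could look like, the sketch below turns pub/sub events into readable progress lines. It assumes the event dictionaries have the shape that redis-py's `pubsub.listen()` yields (`type`, `channel`, `data`) and that each published payload is JSON with `sender`, `receiver`, and `messages` keys; the payload format and the `interim_updates` name are assumptions for illustration, not part of the workflow above.

```python
import json
from typing import Any, Dict, Iterable, Iterator


def interim_updates(events: Iterable[Dict[str, Any]]) -> Iterator[str]:
    """Yield one readable line per published agent exchange."""
    for event in events:
        if event.get("type") != "message":  # skip subscribe confirmations
            continue
        data = event["data"]
        if isinstance(data, bytes):
            data = data.decode("utf-8")
        payload = json.loads(data)
        messages = payload.get("messages") or [{}]
        content = messages[-1].get("content", "")
        yield f"{payload.get('sender')} -> {payload.get('receiver')}: {content}"


# Fake events standing in for a live Redis subscription.
fake_events = [
    {"type": "subscribe", "channel": b"autogen-chat", "data": 1},
    {
        "type": "message",
        "channel": b"autogen-chat",
        "data": json.dumps(
            {
                "sender": "writer",
                "receiver": "user_proxy",
                "messages": [{"content": "First draft"}],
            }
        ).encode("utf-8"),
    },
]
lines = list(interim_updates(fake_events))
print(lines)
```

Because the function only consumes an iterable, it can be exercised with fake events in a unit test and later pointed at a live subscription without changes.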

Summary