Azure OpenAI offering models - Explain it Like I'm 5

It's been almost 2 years since the release of our Azure OpenAI service, an Azure AI Service that enables customers to leverage the power of state-of-the-art generative AI models. These models have become ubiquitous in our daily lives, from Copilots and ChatGPT boosting productivity in our daily tasks to AI agents that apply artificial intelligence to solve business tasks with amazing speed and accuracy. The common thread behind these experiences is the model interaction, which for many enterprise customers has become paramount to their business applications. No matter the use case, the models are the engine providing astoundingly intelligent responses to our questions.

In the Azure OpenAI service, which provides Azure customers access to these models, there are fundamentally 2 different levels of service offerings:

1. Pay-as-you-go, priced based on usage of the service

2. Provisioned Throughput Units (PTU), fixed-term commitment pricing

Given the 2 options, you would probably gravitate toward pay-as-you-go pricing. This is a logical conclusion for customers just starting to use these models in proof-of-concept or experimental use cases. But as customer use cases become production-ready, the PTU model becomes the obvious choice.

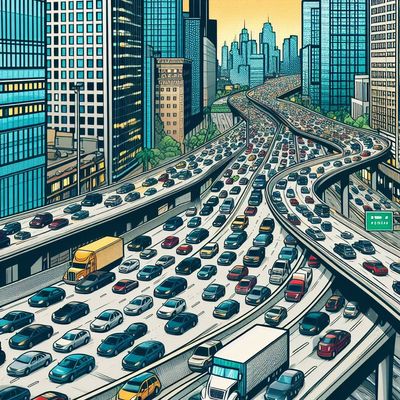

If you think of the Azure OpenAI service as a freeway, the service carries cars (requests) to the models and ultimately back to where they started. The funny thing about highways and interstates, like standard pay-as-you-go deployments, is that you cannot control who else is using the highway at the same time as you, which is akin to the service utilization we all experience during peak hours of the day. We all have a posted speed limit, like rate limits, but may never reach the speed we expect because of the factors mentioned above. Moreover, if you manage a fleet of vehicles (think of them as service calls), all using different parts of the highway, you cannot predict which lane each one gets stuck in. Some may luckily find the fast lane, but you can never prevent the circumstances ahead on the road. That's the risk we take when using the highway, even though tolls (token-based consumption) give us the right to use it whenever we want. While some high-demand times are foreseeable, such as rush hour, there may also be phantom slowdowns with no rhyme or reason behind them. Therefore, your estimated travel times (response latency) can vary drastically depending on the traffic conditions on the road.

With the retry-after-ms value returned from the 429 response, you can define how long you are willing to wait before you redirect traffic to pay-as-you-go or other models. This implementation also ensures you are getting the highest possible throughput out of your PTUs.
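As a rough illustration of that spillover decision, here is a minimal Python sketch. It assumes a 429 response carries a `retry-after-ms` header, as described above. Everything else is hypothetical and chosen only for illustration: the 500 ms wait budget, the function names `plan_after_429` and `send_with_spillover`, and the `call_ptu` / `call_paygo` callables, which stand in for whatever client code actually sends a request to each deployment and returns `(status, headers, body)`.

```python
import time
from typing import Any, Callable, Mapping, Tuple

# Assumption: the longest we are willing to wait on the PTU deployment.
MAX_WAIT_MS = 500

Response = Tuple[int, Mapping[str, str], Any]  # (status, headers, body)


def plan_after_429(headers: Mapping[str, str],
                   max_wait_ms: int = MAX_WAIT_MS) -> Tuple[str, int]:
    """Decide what to do after a 429 from the PTU deployment.

    Returns ("retry_ptu", wait_ms) when the suggested wait fits the budget,
    otherwise ("spill_over", 0).
    """
    # HTTP header names are case-insensitive, so normalize before lookup.
    lowered = {key.lower(): value for key, value in headers.items()}
    try:
        wait_ms = int(lowered.get("retry-after-ms"))
    except (TypeError, ValueError):
        # Header missing or unparsable: do not guess, just spill over.
        return ("spill_over", 0)
    if 0 <= wait_ms <= max_wait_ms:
        return ("retry_ptu", wait_ms)
    return ("spill_over", 0)


def send_with_spillover(call_ptu: Callable[[], Response],
                        call_paygo: Callable[[], Response],
                        max_wait_ms: int = MAX_WAIT_MS,
                        sleep: Callable[[float], None] = time.sleep) -> Any:
    """Try PTU first; on 429 either wait and retry once, or use pay-as-you-go."""
    status, headers, body = call_ptu()
    if status != 429:
        return body
    action, wait_ms = plan_after_429(headers, max_wait_ms)
    if action == "retry_ptu":
        sleep(wait_ms / 1000)
        status, headers, body = call_ptu()
        if status != 429:
            return body
    # Wait was too long, or the single retry was throttled again.
    _, _, body = call_paygo()
    return body
```

Keeping the decision in a small pure function makes the wait budget easy to tune per workload: a latency-sensitive chat experience might spill over almost immediately, while a batch job could tolerate a longer wait to stay on the PTU deployment.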

In summary, for Azure OpenAI use cases that require predictable, consistent, and cost-efficient usage of the service, the Provisioned Throughput Unit (PTU) offering becomes the most reasonable solution, especially for business-critical production workloads.