Advanced RAG with Azure AI Search and LlamaIndex

We’re excited to share a new collaboration between Azure AI Search and LlamaIndex, enabling developers to build better applications with advanced retrieval-augmented generation (RAG) using a comprehensive RAG framework and state-of-the-art retrieval system.

RAG is a popular way to incorporate company information into Large Language Model (LLM)-based applications. With RAG, AI applications access the latest information in near-real time, and teams can maintain control over their data.

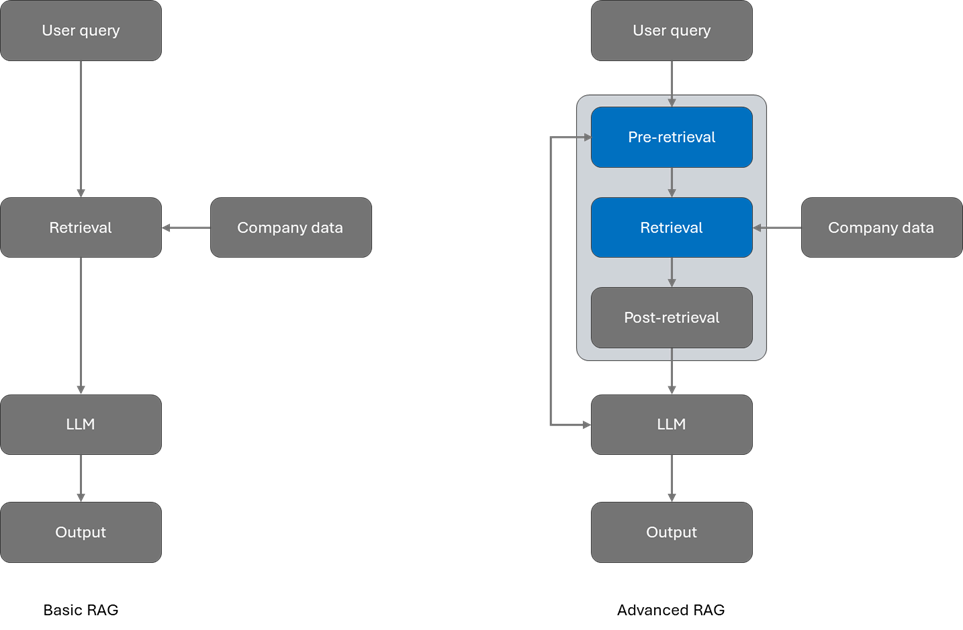

In RAG, there are various stages that you can evaluate and modify to improve your results. They fall into three categories: pre-retrieval, retrieval, and post-retrieval.

- Pre-retrieval enhances the quality of data retrieved using techniques such as query rewriting.

- Retrieval improves results using advanced techniques such as hybrid search and semantic ranking.

- Post-retrieval focuses on optimizing retrieved information and enhancing prompts.
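The three stages can be pictured as three functions chained together. The following is a toy sketch only, not LlamaIndex or Azure AI Search code: the function names, the tiny in-memory corpus, and the word-overlap scoring are all assumptions made for illustration.

```python
from typing import List

# Hypothetical in-memory corpus standing in for a real search index
CORPUS = [
    "Hybrid search combines keyword and vector retrieval.",
    "Semantic ranking promotes the most relevant results.",
    "Query rewriting reformulates the user question.",
]


def pre_retrieval(query: str) -> List[str]:
    """Pre-retrieval: transform the query (here, just add a lowercase variant)."""
    variants = [query, query.lower()]
    # Preserve order while removing duplicates
    return list(dict.fromkeys(variants))


def retrieval(queries: List[str], top_k: int = 2) -> List[str]:
    """Retrieval: score each document by word overlap with any query variant."""
    def score(doc: str) -> int:
        doc_words = set(doc.lower().rstrip(".").split())
        return max(len(doc_words & set(q.lower().split())) for q in queries)

    ranked = sorted(CORPUS, key=score, reverse=True)
    return ranked[:top_k]


def post_retrieval(query: str, docs: List[str]) -> str:
    """Post-retrieval: assemble the retrieved text into a prompt for the LLM."""
    context = "\n".join(f"- {d}" for d in docs)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


question = "What is hybrid search"
prompt = post_retrieval(question, retrieval(pre_retrieval(question)))
print(prompt)
```

In a real application, each function would be replaced by the corresponding component, for example a query transformation in place of `pre_retrieval` and a search index in place of the corpus scan.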

LlamaIndex provides a comprehensive framework and ecosystem for both beginner and experienced developers to build LLM applications over their data sources.

Azure AI Search is an information retrieval platform with cutting-edge search technology and seamless platform integrations, built for high-performance generative AI applications at any scale.

In this post, we will focus on the pre-retrieval and retrieval stages. We will show you how to use LlamaIndex in pre-retrieval for query transformations and use Azure AI Search for advanced retrieval techniques.

Figure 1: Pre-retrieval, retrieval, and post-retrieval in advanced RAG

Pre-retrieval Techniques and Optimizing Query Orchestration

To optimize pre-retrieval, LlamaIndex offers query transformations, a powerful feature that refines user input. Some query transformation techniques include:

- Routing: keep the query unchanged, but identify the relevant subset of tools that the query applies to. Output those tools as the relevant choices.

- Query rewriting: keep the tools unchanged, but rewrite the query in a variety of different ways to execute against the same tools.

- Sub-questions: decompose the query into multiple sub-questions over different tools, identified by their metadata.

- ReAct agent tool picking: given the initial query, identify (1) the tool to pick, and (2) the query to execute on the tool.

Take query rewriting as an example. Query rewriting uses the LLM to reformulate your initial query into several variants. This lets developers explore different aspects of the data, which can yield more nuanced and accurate responses. The rewritten queries can then feed ensemble retrieval and fusion retrieval, leading to higher-quality retrieved results. With Azure OpenAI, an initial query can be decomposed into multiple sub-queries.

Consider this initial query:

“What happened to the author?”

If the question is overly broad, or the corpus is unlikely to contain a direct match for it, it is advisable to break the question down into multiple sub-queries.

Sub-queries:

- “What is the latest book written by the author?”

- “Has the author won any literary awards?”

- “Are there any upcoming events or interviews with the author?”

- “What is the author’s background and writing style?”

- “Are there any controversies or scandals surrounding the author?”

Sub Question Query Engine

One of the great things about LlamaIndex is that advanced retrieval strategies like this are built into the framework. For example, the sub-queries above can be handled in a single step using the Sub Question Query Engine, which decomposes the question into simpler questions and then combines the answers into a single response for you.

response = query_engine.query("What happened to the author?")

Retrieval with Azure AI Search

To enhance retrieval, Azure AI Search offers hybrid search and semantic ranking. Hybrid search performs both keyword and vector retrieval, then applies a fusion step, Reciprocal Rank Fusion (RRF), to select the best results from each technique.
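To make the fusion step concrete, here is a minimal sketch of Reciprocal Rank Fusion in plain Python. It is not the Azure AI Search implementation: the function name, the sample document IDs, and the constant `k = 60` (a commonly used default for RRF) are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse several ranked lists of document IDs into one list.

    Each document receives 1 / (k + rank) from every list it appears in
    (rank starts at 1); documents are returned by descending total score.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by score (highest first); break ties by ID for a stable order
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


keyword_results = ["doc_a", "doc_b", "doc_c"]  # hypothetical keyword ranking
vector_results = ["doc_c", "doc_a", "doc_d"]   # hypothetical vector ranking

fused = reciprocal_rank_fusion([keyword_results, vector_results])
print(fused)  # ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

Documents that rank well in both lists (`doc_a`, `doc_c`) rise above documents that appear in only one, which is the intuition behind combining keyword and vector results.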

Semantic ranker adds a secondary ranking over an initial BM25-ranked or RRF-ranked result. This secondary ranking uses multi-lingual, deep learning models to promote the most semantically relevant results.

You can enable semantic ranker by setting the “query_type” parameter to “semantic”. Semantic ranking runs within the Azure AI Search stack, and our data shows that semantic ranker coupled with hybrid search is the most effective approach for improved relevance out of the box.
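As a rough illustration of that parameter, the sketch below collects keyword arguments that could be passed to `SearchClient.search` in the `azure-search-documents` Python SDK. The helper name and the semantic configuration name are hypothetical, and the argument names assume a recent stable SDK version; the index must already define a semantic configuration for the query to succeed.

```python
from typing import Any, Dict


def build_semantic_query_kwargs(
    query: str,
    semantic_configuration_name: str,
    top: int = 5,
) -> Dict[str, Any]:
    """Collect keyword arguments for a semantically ranked search call."""
    return {
        "search_text": query,
        "query_type": "semantic",  # switches on the semantic ranker
        "semantic_configuration_name": semantic_configuration_name,
        "top": top,
    }


kwargs = build_semantic_query_kwargs(
    "What is inception about?",
    semantic_configuration_name="my-semantic-config",  # hypothetical name
)
print(kwargs)
# With a configured client: results = search_client.search(**kwargs)
```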

In addition, Azure AI Search supports filters in vector queries. You can set a filter mode to apply filters before or after vector query execution:

- Pre-filter mode: apply filters before query execution, reducing the search surface area over which the vector search algorithm looks for similar content. Pre-filtering is generally slower than post-filtering but favors recall and precision.

- Post-filter mode: apply filters after query execution, narrowing the search results. Post-filtering favors speed over selection.
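The selection trade-off between the two modes can be seen in a toy example. The sketch below uses a brute-force search over a handful of hypothetical documents; it does not call Azure AI Search and does not model the speed difference, only how the order of filtering and ranking changes what comes back.

```python
# Hypothetical documents with a filterable field and a 2-D embedding
DOCS = [
    {"id": "1", "author": "alice", "vector": (1.0, 0.0)},
    {"id": "2", "author": "bob", "vector": (0.9, 0.1)},
    {"id": "3", "author": "bob", "vector": (0.8, 0.2)},
    {"id": "4", "author": "alice", "vector": (0.0, 1.0)},
]


def dot(a, b):
    """Dot product used as the similarity score."""
    return sum(x * y for x, y in zip(a, b))


def top_k(docs, query, k):
    """Brute-force nearest neighbours by dot product (highest first)."""
    return sorted(docs, key=lambda d: dot(d["vector"], query), reverse=True)[:k]


def pre_filter_search(docs, query, k, keep):
    # Filter first, then rank: returns up to k documents that match the filter
    return top_k([d for d in docs if keep(d)], query, k)


def post_filter_search(docs, query, k, keep):
    # Rank first, then filter: matches outside the top k are lost
    return [d for d in top_k(docs, query, k) if keep(d)]


def is_alice(doc):
    return doc["author"] == "alice"


query = (1.0, 0.0)
pre = [d["id"] for d in pre_filter_search(DOCS, query, 2, is_alice)]
post = [d["id"] for d in post_filter_search(DOCS, query, 2, is_alice)]
print(pre)   # ['1', '4']
print(post)  # ['1']
```

With `k = 2`, pre-filtering returns both of Alice's documents, while post-filtering returns only one because the other never made it into the unfiltered top two.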

We are excited to be collaborating with LlamaIndex to offer easier ways to optimize pre-retrieval and retrieval when implementing advanced RAG. Advanced RAG doesn’t stop at pre-retrieval and retrieval optimization, and we are just getting started! Stay tuned for future approaches we are exploring together.

Get Started and Next Steps

- azure-search-vector-samples/demo-python/code/community-integration/llamaindex/azure-search-vector-py...

- Azure AI Search - LlamaIndex 🦙 v0.10.3

- Azure AI Search code samples

- Azure AI Search documentation

- LlamaIndex documentation

Examples

Setup Azure OpenAI

from llama_index.embeddings.azure_openai import AzureOpenAIEmbedding
from llama_index.llms.azure_openai import AzureOpenAI

# Replace the placeholders with your own Azure OpenAI settings
aoai_api_key = "YourAzureOpenAIAPIKey"
aoai_endpoint = "YourAzureOpenAIEndpoint"
aoai_api_version = "2023-05-15"

llm = AzureOpenAI(
    model="YourAzureOpenAICompletionModelName",
    deployment_name="YourAzureOpenAICompletionDeploymentName",
    api_key=aoai_api_key,
    azure_endpoint=aoai_endpoint,
    api_version=aoai_api_version,
)

# You need to deploy your own embedding model as well as your own chat completion model
embed_model = AzureOpenAIEmbedding(
    model="YourAzureOpenAIEmbeddingModelName",
    deployment_name="YourAzureOpenAIEmbeddingDeploymentName",
    api_key=aoai_api_key,
    azure_endpoint=aoai_endpoint,
    api_version=aoai_api_version,
)

Setup Azure AI Search

from azure.core.credentials import AzureKeyCredential
from azure.search.documents import SearchClient
from azure.search.documents.indexes import SearchIndexClient

search_service_api_key = "YourAzureSearchServiceAdminKey"
search_service_endpoint = "YourAzureSearchServiceEndpoint"
search_service_api_version = "2023-11-01"
credential = AzureKeyCredential(search_service_api_key)

# Index name to use
index_name = "llamaindex-vector-demo"

# Use index client to demonstrate creating an index
index_client = SearchIndexClient(
    endpoint=search_service_endpoint,
    credential=credential,
)

# Use search client to demonstrate using an existing index
search_client = SearchClient(
    endpoint=search_service_endpoint,
    index_name=index_name,
    credential=credential,
)

Create a new index

from llama_index.vector_stores.azureaisearch import (
    AzureAISearchVectorStore,
    IndexManagement,
    MetadataIndexFieldType,
)

# Metadata keys to expose as filterable fields in the index
metadata_fields = {
    "author": "author",
    "theme": ("topic", MetadataIndexFieldType.STRING),
    "director": "director",
}

vector_store = AzureAISearchVectorStore(
    search_or_index_client=index_client,
    filterable_metadata_field_keys=metadata_fields,
    index_name=index_name,
    index_management=IndexManagement.CREATE_IF_NOT_EXISTS,
    id_field_key="id",
    chunk_field_key="chunk",
    embedding_field_key="embedding",
    embedding_dimensionality=1536,
    metadata_string_field_key="metadata",
    doc_id_field_key="doc_id",
    language_analyzer="en.lucene",
    vector_algorithm_type="exhaustiveKnn",
)

Load documents

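from llama_index.core import (
    Settings,
    SimpleDirectoryReader,
    StorageContext,
    VectorStoreIndex,
)

documents = SimpleDirectoryReader("../data/paul_graham/").load_data()
storage_context = StorageContext.from_defaults(vector_store=vector_store)

# Use the Azure OpenAI models configured above for generation and embeddings
Settings.llm = llm
Settings.embed_model = embed_model

index = VectorStoreIndex.from_documents(
    documents, storage_context=storage_context
)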

Vector search

from llama_index.core.vector_stores.types import VectorStoreQueryMode

default_retriever = index.as_retriever(
    vector_store_query_mode=VectorStoreQueryMode.DEFAULT
)
response = default_retriever.retrieve("What is inception about?")

# Loop through each NodeWithScore in the response
for node_with_score in response:
    node = node_with_score.node  # The TextNode object
    score = node_with_score.score  # The similarity score
    chunk_id = node.id_  # The chunk ID

    # Extract the relevant metadata from the node
    file_name = node.metadata.get("file_name", "Unknown")
    file_path = node.metadata.get("file_path", "Unknown")

    # Extract the text content from the node
    text_content = node.text if node.text else "No content available"

    # Print the results in a user-friendly format
    print(f"Score: {score}")
    print(f"File Name: {file_name}")
    print(f"Id: {chunk_id}")
    print("\nExtracted Content:")
    print(text_content)
    print("\n" + "=" * 40 + " End of Result " + "=" * 40 + "\n")

Hybrid search

from llama_index.core.vector_stores.types import VectorStoreQueryMode

hybrid_retriever = index.as_retriever(
    vector_store_query_mode=VectorStoreQueryMode.HYBRID
)
hybrid_retriever.retrieve("What is inception about?")

Hybrid search and semantic ranking

hybrid_retriever = index.as_retriever(
    vector_store_query_mode=VectorStoreQueryMode.SEMANTIC_HYBRID
)
hybrid_retriever.retrieve("What is inception about?")

Query rewriting

from llama_index.core import PromptTemplate

query_gen_str = """\
You are a helpful assistant that generates multiple search queries based on a \
single input query. Generate {num_queries} search queries, one on each line, \
related to the following input query:
Query: {query}
Queries:
"""
query_gen_prompt = PromptTemplate(query_gen_str)


def generate_queries(query: str, llm, num_queries: int = 5):
    response = llm.predict(
        query_gen_prompt, num_queries=num_queries, query=query
    )
    # Assume the LLM properly puts each query on its own line
    queries = response.split("\n")
    queries_str = "\n".join(queries)
    print(f"Generated queries:\n{queries_str}")
    return queries


queries = generate_queries("What happened to the author?", llm)

Generated queries:

- What is the latest book written by the author?

- Has the author won any literary awards?

- Are there any upcoming events or interviews with the author?

- What is the author's background and writing style?

- Are there any controversies or scandals surrounding the author?
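Splitting on newlines assumes a well-behaved response. In practice, LLM output can include blank lines, list markers, or repeated queries, so a small cleanup step may help before the queries are sent to a retriever. The helper below is a hypothetical sketch, not part of LlamaIndex, and the sample response text is made up for illustration.

```python
import re
from typing import List


def clean_queries(raw_response: str) -> List[str]:
    """Split an LLM response into queries, dropping blanks and list markers."""
    queries: List[str] = []
    for line in raw_response.splitlines():
        # Remove leading bullets ("-", "*", "•") or numbering ("1.", "2)")
        text = re.sub(r"^\s*(?:[-*•]|\d+[.)])\s*", "", line).strip()
        # Skip empty lines and exact duplicates, keeping first-seen order
        if text and text not in queries:
            queries.append(text)
    return queries


raw = """- What is the latest book written by the author?

2. Has the author won any literary awards?
- What is the latest book written by the author?
"""
print(clean_queries(raw))
```

The cleaned list contains two queries here, because the blank line and the repeated first query are dropped.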

Sub question query engine

from llama_index.core.query_engine import SubQuestionQueryEngine
from llama_index.core.tools import QueryEngineTool, ToolMetadata

# Set up the base query engine as a tool
query_engine_tools = [
    QueryEngineTool(
        query_engine=index.as_query_engine(),
        metadata=ToolMetadata(
            name="pg_essay",
            description="Paul Graham essay on What I Worked On",
        ),
    ),
]

# Build a sub-question query engine over this tool.
# This decomposes the question into sub-questions, which then execute against the tool.
query_engine = SubQuestionQueryEngine.from_defaults(
    query_engine_tools=query_engine_tools,
    use_async=True,
)

response = query_engine.query("What happened to the author?")

Generated 1 sub questions.

[pg_essay] Q: What did the author work on?

[pg_essay] A: The author worked on writing and programming before college. They wrote short stories and also tried programming on an IBM 1401 computer using an early version of Fortran. Later, they worked with microcomputers, building one themselves and eventually getting a TRS-80. They wrote simple games, a program to predict rocket heights, and a word processor. In college, the author initially planned to study philosophy but switched to AI because of their interest in intelligent computers.
