Consistent Thin Client Disconnection from WVD Pool

Dec 12 2019 11:59 AM - edited Dec 12 2019 12:06 PM

Hello, we have been experiencing random but consistent disconnects from our WVD pool. We have roughly 10 users, and the Event Viewer logs differ from one disconnect to the next. Our thin clients run Windows 10 version 1607. When disconnects occur, they happen multiple times per day; on some days there are none at all. The Event Viewer logs are attached.

Mar 16 2020 07:17 AM

Hi Marco,

I just tested more on our environment.

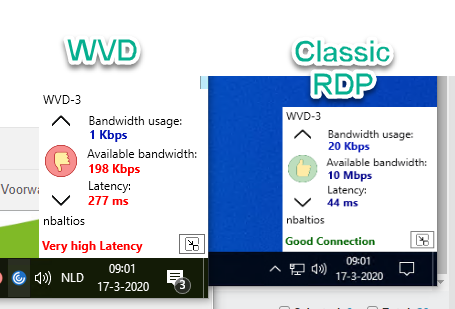

- RDP to APP server running in same vnet

bandwidth 20mbit, fast speed, amazing! - Then I logged in through Remote desktop app, I ran the tool from Bram Wolfs.

This immediately gave a warning, high latency.

The only difference is that I am now going through MS RD Gateway infrastructure.

This is exactly the issue that our users are experiencing (I even think this is also related to the disconnect issue)

Mar 16 2020 08:28 AM

We have 27 thin clients, and changed 12 of them to each connect directly to one of our 4 WVD hosts (so classic RDP, through site-to-site VPN to the WVD host).

Not a single session was disconnected, and users report less lag in their sessions when using this "direct RDP" workaround.

THE PROBLEM IS IN THE AZURE WVD GATEWAY.

Mar 16 2020 08:43 AM

Can someone at MSFT pick up this issue, please? This is not specific to one customer.

Thank you in advance!

Mar 16 2020 09:11 AM

@knowlite We've had multiple reports of high latency in the Europe region and are investigating. Thanks for letting us know. I will circle back to this thread once I know more.

Mar 16 2020 09:55 AM

Status update for everyone:

An MS engineer told me that the PG (product group) team has applied a policy change to mitigate the latency issues we are seeing. They asked us to reboot the WVD hosts to apply this change, so he advises everyone on this thread to do the same.

After the reboot, the WVD deployments I rebooted perform decently, but given the time of day, performance was already decent before the reboot as well. I allowed him to downgrade the case to severity B, and we will check tomorrow morning whether the problem persists.

He also acknowledged that, given the coronavirus outbreak, WVD infrastructure usage is peaking and the infrastructure team is looking at improvements in that area.

So I'm not sure whether their fix actually resolves the issue or whether it is simply a matter of adding WVD capacity after the increased usage of the last few weeks. If it's the latter, I assume the problems will persist for some time. I can move single-server customers to vanilla RDP over VPN without many changes, but customers who require load balancing will need a special temporary solution :-S

Mar 16 2020 11:32 AM

Thanks, Pieter, for the confirmation. Following @XxArkayxX's advice, I will reboot our WVD hosts tonight and check tomorrow whether anything has improved.

Mar 16 2020 11:34 AM

Our session hosts will also be rebooted tonight. Hope this helps...

Mar 16 2020 11:39 AM

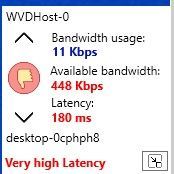

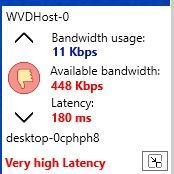

While I know it is after business hours and after rebooting the host. Current connection status:

Old:

New:

Mar 16 2020 01:20 PM

We have the same issue in Canada, with different customers, some in Canada Central and some in Canada East. Both are getting random but consistent disconnects. This is not related to a specific VM: I can RDP directly to port 3389 the whole day without any disconnect, while my customers complain of multiple disconnects (over 5 per user per day) that take anywhere from 3 to 30 minutes to recover. When a disconnect occurs, it affects several users at the same time, on different servers and with different session start times, with either error 14 "Unexpected network disconnect" or "50331694 AutoReconnect due to Network Error".
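The reasoning here is that when users on different session hosts, with different session start times, drop at the same moment, a shared component (such as the gateway) is a more likely cause than any single VM. A minimal Python sketch of that check, assuming disconnect events have already been collected as (host, user, timestamp) tuples; the sample events, the 2-minute window and the function name `simultaneous_clusters` are hypothetical, not part of any WVD tooling:

```python
from datetime import datetime, timedelta

# Hypothetical disconnect events: (session host, user, disconnect time).
# Illustrative values only; in practice they might be exported from
# Event Viewer on each session host.
events = [
    ("host1", "alice", datetime(2020, 3, 16, 10, 15, 5)),
    ("host2", "bob", datetime(2020, 3, 16, 10, 15, 40)),
    ("host3", "carol", datetime(2020, 3, 16, 10, 16, 10)),
    ("host1", "dave", datetime(2020, 3, 16, 14, 2, 0)),
]


def simultaneous_clusters(events, window=timedelta(minutes=2), min_hosts=2):
    """Group disconnects that fall within `window` of a cluster's first event
    and keep only clusters that span at least `min_hosts` distinct hosts."""
    clusters = []
    current = []
    for event in sorted(events, key=lambda e: e[2]):
        # Start a new cluster once an event is too far from the cluster start.
        if current and event[2] - current[0][2] > window:
            clusters.append(current)
            current = []
        current.append(event)
    if current:
        clusters.append(current)
    return [c for c in clusters if len({host for host, _, _ in c}) >= min_hosts]


for cluster in simultaneous_clusters(events):
    hosts = sorted({host for host, _, _ in cluster})
    print(cluster[0][2], "->", len(cluster), "disconnects on", hosts)
```

With the sample data this reports one cluster of three disconnects on host1, host2 and host3 within about a minute; the later single-host disconnect is filtered out. Anchoring the window at each cluster's first event keeps the sketch simple; a sliding window would work too.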

Do you have an ETA for a fix? Customers need to work remotely more than ever due to the current situation, and the Azure gateway is very unreliable. Is there a supported way to connect a Windows Server 2019 Gateway to WVD?

Mar 16 2020 02:56 PM

For the last few weeks I have been checking for disconnects with this command:

`Get-RdsDiagnosticActivities -Outcome Failure -starttime 13-03-2020 -detailed -tenant <<TENANTNAME>> | sort-object endtime | ft`

Now it suddenly gives me this error:

`Get-RdsDiagnosticActivities : There were no activities found.`

Is anyone else seeing this too?

Mar 16 2020 03:27 PM

Hmm, I didn't have this issue today. I usually retrieve everything and then filter for what I want.

Maybe it is related to the date. You might have better luck using `(Get-Date)` instead:

`$Errorlogs = Get-RdsDiagnosticActivities -TenantName $myTenantName -StartTime (get-date).AddHours(-8) -Detailed`

Mar 17 2020 12:37 AM

I rebooted all hosts yesterday evening. Feedback after hours was very good; unfortunately, once office hours started again, latency went up.

Mar 17 2020 12:58 AM - edited Mar 17 2020 01:09 AM

Same here. Very, very slow connection.

Mar 17 2020 01:24 AM

It's everywhere again (at least in West EU). I raised the ticket with MS back to severity A.

I'm reverting to vanilla RDP on the same infrastructure while MS fixes this. The problem isn't present on the same Azure infrastructure over standard RDP, bypassing the WVD Gateway. If you're running a single-server WVD deployment, all you need to do is reconfigure the client. Oh, and grant Remote Desktop access on the Windows 10 multi-session box. For multi-server deployments I haven't found a solution yet, except to forgo load balancing and point individual groups of users to individual machines. What a mess.

Mar 17 2020 06:52 AM

Another update:

Still no news from MS. They asked me to reboot the VM again, but I won't accept that kind of "fix" again. I wanted to speak to the genius on their team who came up with that lame "fix".

Here's what I came up with so far:

1) Continuous psping from my location to rdweb.wvd.microsoft.com, which resolves to 51.136.28.200: spikes galore! And by spikes I mean 1000-3000 ms. Ouch!

2) Continuous psping directly to the WVD VM (I opened up port 3389 during this crisis): 20-30 ms, except for a period around 11:00-12:00 this morning when the entire West EU Azure datacenter apparently had congestion.

3) Continuous psping from inside a VM in the West Europe region to rdweb.wvd.microsoft.com, resolving to 51.136.28.200: 1-10 ms, again with regular spikes to 3000 ms. That traffic should stay inside the West EU datacenter. As stated before, the issue is the WVD Gateway service.

4) For **bleep**s 'n giggles I also ran a continuous ping to 52.177.123.162, which should be the IP for rdweb.wvd.microsoft.com in a large portion of the US: 100-200 ms with... regular spikes of 1000-3000 ms.

5) A psping to 137.116.248.148, which should be the endpoint for part of Ireland/UK: 30-50 ms with no or very rare spikes. Compared to the other endpoints, though, very few countries should use this one.
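Each result above is a typical round-trip time plus occasional spikes, and a median alone would hide exactly the spikes that matter here. A minimal Python sketch for summarizing such a series, assuming the round-trip times have already been extracted from psping output (parsing is not shown); the sample lists, the 1000 ms spike threshold (taken from the spike range quoted above) and the helper name `summarize_latency` are hypothetical:

```python
import math
import statistics


def summarize_latency(samples_ms, spike_threshold_ms=1000):
    """Summarize round-trip times (ms): median, nearest-rank p95, spike count."""
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(0.95 * len(ordered)))  # nearest-rank percentile
    spikes = sum(1 for s in ordered if s >= spike_threshold_ms)
    return {
        "median": statistics.median(ordered),
        "p95": ordered[rank - 1],
        "spikes": spikes,
        "spike_pct": 100.0 * spikes / len(ordered),
    }


# Hypothetical RTT samples in ms, NOT measured values from this thread.
via_gateway = [25, 30, 22, 2800, 28, 1500, 24, 27, 31, 26]
direct_rdp = [22, 25, 21, 24, 23, 26, 22, 25, 24, 23]

print("gateway:", summarize_latency(via_gateway))
print("direct: ", summarize_latency(direct_rdp))
```

With these illustrative numbers both paths have a similar median (27.5 ms vs 23.5 ms), but the gateway series has a p95 of 2800 ms and 20% spikes, which is the shape of the pattern described in points 1, 3 and 4.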

My conclusion: it's obviously a capacity issue in the WVD Gateway itself. Should we, the customers, really be doing this kind of performance monitoring, or can we expect MS to do it themselves? Does it take multiple weeks to acknowledge "Aha, looks like we have capacity issues"? I'm willing to accept that they need days or weeks to add infrastructure, especially if they don't monitor this (well) themselves. But we're wasting time if it takes 2 weeks to get to this point when it's crystal clear.

Mar 17 2020 08:39 AM

We've sent the following message to customers using WVD in the West Europe region; it should appear in your Azure portal:

Starting at 7:00 UTC on 17 Mar 2020 you have been identified as a customer who may experience latency issues when using Virtual Machines via Windows Virtual Desktop in West Europe.

Engineers are aware of this issue and are actively investigating.

Engineers recommend disconnecting and reconnecting to the Virtual Machines which may help.

The next update will be provided in 60 mins or as events warrant.

@XxArkayxX - I'm not sure this is the same issue you are seeing, as this one was only recently identified.

I'll circle back with more information when available.

Mar 17 2020 09:01 AM

Yes, we see the health issue. Great that the issue has been escalated to this level and recognized as a West-Europe-wide problem!

Please be aware that MS already asked us to reboot the WVD hosts after an earlier change MS made in the gateway, and that this did not solve the issue.

Greets

Mar 17 2020 09:41 AM

For some reason I can't reply to Pieter.

Yes, it is the same issue. I have sent the logs to your colleague under MS Case 120031223001948 to prove it. They asked me to disconnect/reconnect sessions and re-evaluate, but that's simply pointless given that the problem disappears around 17:00 GMT+1 every day regardless of what MS does, and then returns from 08:00. That has been the case for the past 2 weeks, as this thread demonstrates. The fact that it has only recently been "identified" just proves that your monitoring of the WVD Gateway infrastructure is insufficient. Any remote probe with PSPING clearly pinpoints the problem. I've been telling them it's capacity since last Friday, and it has taken them until today to at least acknowledge that. I actually asked your colleague myself to open that Health Advisory, so at least we have something to show our customers other than psping logs demonstrating that it's MS's issue and not ours.

I'm now collecting logs over longer periods, just to keep proving the issue. And the issue is simply a capacity problem in the WVD Gateway infrastructure. At the end of every business day I get an MS engineer telling me to reboot VMs, and today to disconnect/reconnect sessions. But as long as no server/hardware/network capacity gets added, nothing will be fixed. It's just the load.
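The claim that the problem disappears around 17:00 and returns from 08:00 can be checked by bucketing long-running latency logs by local hour of day. A minimal Python sketch, assuming samples are (timestamp, RTT in ms) pairs already converted to local time; the sample data, the 1000 ms threshold and the name `spike_rate_by_hour` are hypothetical:

```python
from collections import defaultdict
from datetime import datetime


def spike_rate_by_hour(samples, threshold_ms=1000):
    """samples: iterable of (local timestamp, rtt_ms).
    Returns {hour of day: fraction of samples at or above threshold_ms}."""
    totals = defaultdict(int)
    spikes = defaultdict(int)
    for ts, rtt_ms in samples:
        totals[ts.hour] += 1
        if rtt_ms >= threshold_ms:
            spikes[ts.hour] += 1
    return {hour: spikes[hour] / totals[hour] for hour in sorted(totals)}


# Hypothetical samples (local time, GMT+1), NOT measurements from this thread.
samples = [
    (datetime(2020, 3, 17, 9, 0), 2500),
    (datetime(2020, 3, 17, 9, 1), 30),
    (datetime(2020, 3, 17, 9, 2), 1800),
    (datetime(2020, 3, 17, 9, 3), 28),
    (datetime(2020, 3, 17, 18, 0), 25),
    (datetime(2020, 3, 17, 18, 1), 27),
    (datetime(2020, 3, 17, 18, 2), 24),
    (datetime(2020, 3, 17, 18, 3), 26),
]

for hour, rate in spike_rate_by_hour(samples).items():
    print(f"{hour:02d}:00  {rate:.0%} of samples >= 1000 ms")
```

A load-driven pattern like the one described would show high spike rates between 08:00 and 17:00 and near zero outside those hours, independent of host reboots.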

Mar 18 2020 04:56 AM

@Marco Brouwer @oiab_nl @knowlite @Sbarnet

Apparently they added capacity and I'm now seeing performance back in line with expectations. Are you guys seeing the same?