Running containers based on different platforms side-by-side with Docker preview

Published

Jan 15 2019 03:11 PM

First published on MSDN on Sep 20, 2018

When we have configured Docker in the first post , you'll remember that at some point we had to choose between Windows containers and Linux containers. The first ones can run natively on the host machine, while the second ones are executed inside a Linux virtual machine based on Hyper-V.

However you can't mix both architectures at the same time. If your machine is setup for using Linux containers, you can't deploy and run a container running IIS on Windows Server.

However, things are about to change! Thanks to the last preview of Docker for Windows, you can now finally run containers side-by-side even if they're based on different platforms.

Let's see how to do it!

Docker Edge is the name of the channel which delivers preliminary versions of Docker. You can switch from the stable to the edge channel by using the link you will find in General section of the Docker for Windows settings:

If you click on the another version link, you will see a popup where you'll be able to switch from the stable to the edge channel. You'll notice that the edge version is identified by a different logo:

Once the installation is completed, you'll be able to start playing with the last beta version of Docker. At the time of writing, its version number is 2.0.0.0-beta1 , which includes version 18.09.0-ce-beta1 of the Docker Engine.

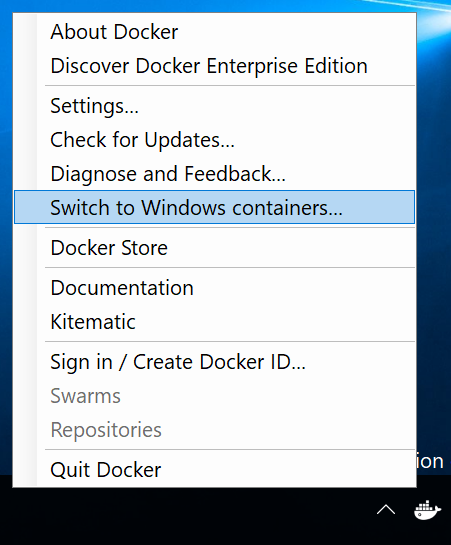

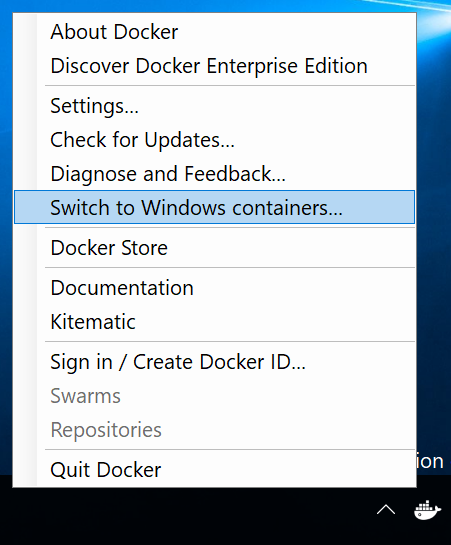

As first step, we need to switch to Windows containers. We can run, in fact, Linux and Windows containers side-by-side only if Windows containers are the default option. In order to achieve this goal, you need to right click on the Docker icon in the system tray and choose Switch to Windows containers .

If you don't have the Windows feature called Containers turned on, Docker for Windows will activate it for you. If that's the case, you will need also to reboot the machine at the end of the process.

If you open now a PowerShell prompt and you run docker images , you will notice that the list is empty, even if you have previously used Docker. The reason is that, when you switch to a different container, they are stored in a different way and in a different location. Windows containers, in fact, are stored directly on the host and not inside a virtual machine. As such, you can't share the same images between the two configurations.

Let's start with something simple: running a basic Linux and a basic Windows container side-by-side. Open PowerShell and type the following command:

The command will pull the container image for Windows Server, which is the command-line based version without any user interface. By using tags, we can specify which Windows version we want to use. In this case, we're pulling the most recent one (April 2018 Update). Since we have specified the -it parameter, we will directly connect with the terminal to the container. Since we are on a Windows machine, we will see the familiar command prompt:

What we did, actually, is nothing special. Since we are running Docker using Windows containers, it shouldn't be a surprise that we are able to pull a Windows-based image it and run it as a container. We would have been able to achieve the same goal with the stable Docker version.

Now let's do something more interesting. Open another PowerShell window, so that we can keep the Windows container up & running. This time, type the following command:

This time we're running a Ubuntu container and we're connecting to it using a terminal.

As you can see, we're now connected to the Ubuntu container and we can execute standard UNIX commands. What if we list all the running containers now using a third PowerShell window?

Both containers are running at the same time, despite the first one is based on Windows while the second one on Linux.

Microsoft offers an image for IIS for Docker called microsoft/iis , which you can use as a web server to host your web applications. Let's give it a try, by running the following command:

The image will be pulled from Docker Hub, then Docker will spin a new container for it. By using the -p parameter we're exposing the internal 80 port to the 8080 port of the host. If we open with the browser the URL http://localhost:8080 we will see the default IIS page:

Now let's repeat the experiment we did in the first post of the series . Let's run a new instance of nginx, a popular Linux based web server. You can use the following command:

The command is the same as the previous one. We have just changed the image to use ( nginx ) and the port where to expose the web server to 8181, since 8080 is already used by IIS. Now point your browser to http://localhost:8181 to see the default NGINX page.

And if you run again now docker ps , you can indeed see that the two containers are running side-by-side:

How can we be sure that the two containers are indeed running on two different platforms? We can get some help from Visual Studio Code to check this. If you remember when we have talked about the Docker integration in Visual Studio Code, we have an option to connect to the shell of a running container. Open Visual Studio Code and move to the Docker tab. Under the Containers section you should see the same output of the previous docker ps command:

Now right click on the microsoft/iis container and choose Attach Shell . Under the hood, Visual Studio Code will perform the following command for you:

docker exec is used to perform a command on a specific container. In this specific example:

As expected, we have now a PowerShell terminal open on the Windows instance which is running IIS:

Now let's try to do the same with the NGINX container. Right click on it and choose Attach Shell :

Well, this doesn't look good. The reason is that Visual Studio Code has tried to execute the same exact command we have seen with the IIS container, which means that we have tried to open a PowerShell terminal on a machine running Linux. Now it's pretty clear why it didn't work =)

We can fix this by manually repeating the command (just press the up arrow in your PowerShell prompt to show it again) and change powershell with /bin/sh .

This time we're using the UNIX shell and, as such, we can properly connect to the Linux machine running nginx. This is a clear demonstration that the two containers are indeed based on different platforms!

Let's resume back the work we have done in the previous posts . If you remember, we had built a solution made by three applications:

The first two applications were built using a custom image, built on top of the official one provided by Microsoft which contains the .NET Core SDK and runtime. When you pull an image from a repository, by default Docker will get the most appropriate one for your Docker configuration.

We can see this by rebuilding the two custom images. We're going to use the same exact Dockerfile we have seen in the other posts , without changing anything. We'll just run the standard build command from the root of the project. First move to the root of the web app project and run the following command:

Then move to the root of the Web API project and run the following command:

Now let's leverage the Docker Compose file we have built in the previous post to boot the whole solution. Open a command prompt on the same folder where you have the docker-compose.yml file and run:

You should get, at the end, the same outcome of the previous post . By hitting http://localhost:8080 you should see the test website, which is retrieving the list of articles from the Web API. If you refresh the page, this time the list will be retrieved from the Redis cache. If you execute docker ps , you will see the three containers running together:

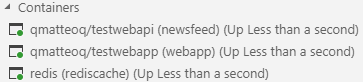

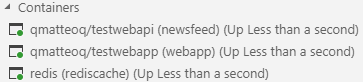

However, there's a difference compared to the last post, even if it isn't noticeable out of the box. Open again the Docker section in Visual Studio Code and move to the Containers section:

Right click on the web application ( qmatteoq/testwebapp ) and choose Attach Shell :

Can you spot the difference? We have connected to the container running our .NET Core application using PowerShell. This is happening because Docker supports the concept of multi-platform images . This means that a developer can publish multiple versions of the same image for different platforms. Microsoft does this for the .NET Core images, since .NET Core is cross-platform.

Since we are running Docker with Windows containers, when we have built the image for our web application Docker has pulled the Windows version of the .NET Core SDK and runtimes. However, this is completely transparent to us. We didn't change anything in the Dockerfile, we're still using the same FROM statements we were using in the previous posts:

Is there a way to force the usage of a specific platform, in case our machine is able to run multi-platform containers? The answer is yes, thanks to a new flag called --platform which has been added to the latest beta of the Docker engine. In fact, you will find in the documentation marked as experimental . After the flag you must specify the platform you want to use, which can be linux or windows .

You can specify the platform in three ways:

If you want to give this a try, delete the testwebapp and testwebapi custom images you have previously built, plus the standard dotnet images that has been automatically pulled by the Dockerfile.

To delete an image you need to grab the identifier first with the docker images command, then you can call docker rmi followed by the identifier. Alternatively, you can use the Docker tab in Visual Studio Code, right click on one of the images under the Images section and choose Remove image .

Now let's rebuild our two custom images starting from the Dockerfile. This time, however, we will run the following commands:

Thanks to the --platform switch, when Docker will parse the FROM command it will pull the Linux-based images for .NET Core.

We can verify this by running the docker-compose up command again after we have rebuilt the image for the web application and the Web APIs. If we right click on the running containers in Visual Studio Code and we choose again the Attach Shell option, we will see the same error as before:

Now our web application is running on Linux and, as such, we can't use PowerShell. We need to execute the /bin/sh command:

This time we can properly connect to the terminal and see the lists of files inside the container using UNIX commands:

In this post we have seen how the next version of Docker for Windows will bring support for running multi-platform containers on the same Windows machine. In the post we have seen multiple examples in action:

You can play with this feature today thanks to the Docker Edge channel, which delivers preliminary versions of the platform before releasing them in the stable channel.

Happy coding!

When we configured Docker in the first post, you'll remember that at some point we had to choose between Windows containers and Linux containers. The former run natively on the host machine, while the latter are executed inside a Linux virtual machine based on Hyper-V.

However, you can't mix both platforms at the same time. If your machine is set up to use Linux containers, you can't deploy and run a container running IIS on Windows Server.

Things are about to change, though! Thanks to the latest preview of Docker for Windows, you can now finally run containers side-by-side even if they're based on different platforms.

Let's see how to do it!

Switching to Docker Edge

Docker Edge is the name of the channel which delivers preliminary versions of Docker. You can switch from the stable to the edge channel by using the link you will find in the General section of the Docker for Windows settings.

If you click on the "another version" link, you will see a popup where you can switch from the stable to the edge channel. You'll notice that the edge version is identified by a different logo.

Once the installation is completed, you'll be able to start playing with the latest beta version of Docker. At the time of writing, its version number is 2.0.0.0-beta1, which includes version 18.09.0-ce-beta1 of the Docker Engine.

Switching to Windows containers

As a first step, we need to switch to Windows containers. We can run Linux and Windows containers side-by-side only if Windows containers are the default option. To do this, right click on the Docker icon in the system tray and choose Switch to Windows containers.

If you don't have the Windows feature called Containers turned on, Docker for Windows will activate it for you. If that's the case, you will also need to reboot the machine at the end of the process.

If you now open a PowerShell prompt and run docker images, you will notice that the list is empty, even if you have previously used Docker. The reason is that, when you switch to a different container type, images are stored in a different way and in a different location. Windows container images, in fact, are stored directly on the host and not inside a virtual machine. As such, you can't share the same images between the two configurations.
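If you want to confirm from the command line which container type the engine is currently set up for, you can query the daemon. The following is a minimal sketch, assuming a bash-compatible shell (for example Git Bash; the docker command itself works the same from PowerShell). The helper names daemon_os and require_windows_mode are hypothetical; the sketch relies on the OSType field that docker info exposes through --format.

```shell
# Hypothetical helper: print the OS type reported by the Docker daemon
# ("windows" when Windows containers are the default, "linux" otherwise).
daemon_os() {
  docker info --format '{{.OSType}}'
}

# Hypothetical helper: succeed only if Windows containers are the default,
# which is the configuration this post relies on.
require_windows_mode() {
  if [ "$(daemon_os)" = "windows" ]; then
    echo "Windows containers are the default"
  else
    echo "Switch to Windows containers first" >&2
    return 1
  fi
}
```

Calling require_windows_mode before the next steps gives an early hint if the switch has not been applied yet.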

Running our first containers side-by-side

Let's start with something simple: running a basic Linux and a basic Windows container side-by-side. Open PowerShell and type the following command:

docker run --rm -it microsoft/nanoserver:1803

The command pulls the Nano Server container image, a minimal command-line based version of Windows Server without any user interface. By using tags, we can specify which Windows version we want to use. In this case, we're pulling the most recent one (1803, the April 2018 Update). Since we have specified the -it parameter, our terminal is connected directly to the container. Since this is a Windows container, we will see the familiar command prompt:

What we did, actually, is nothing special. Since we are running Docker using Windows containers, it shouldn't be a surprise that we are able to pull a Windows-based image and run it as a container. We would have been able to achieve the same goal with the stable Docker version.

Now let's do something more interesting. Open another PowerShell window, so that we can keep the Windows container up & running. This time, type the following command:

docker run --rm -it ubuntu

This time we're running an Ubuntu container and connecting to it with a terminal.

As you can see, we're now connected to the Ubuntu container and we can execute standard UNIX commands. What if we list all the running containers now using a third PowerShell window?

    PS C:\Users\mpagani> docker ps
    CONTAINER ID   IMAGE                  COMMAND                    CREATED          STATUS         PORTS   NAMES
    344687a13103   microsoft/nanoserver   "c:\\windows\\system32…"   22 seconds ago   Up 9 seconds           naughty_perlman
    0ffb5ec1d7de   ubuntu                 "/bin/bash"                2 minutes ago    Up 2 minutes           youthful_jepsen

Both containers are running at the same time, even though the first one is based on Windows and the second one on Linux.
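The docker ps output doesn't say which platform each container targets. As a rough sketch (again assuming a bash-compatible shell, with the hypothetical helper name container_platforms), you could look up the image behind each running container and print the Os field that docker image inspect reports for it:

```shell
# Hypothetical helper: for every running container, print its name and the
# OS of the image it was created from ("linux" or "windows").
container_platforms() {
  docker ps --format '{{.ID}} {{.Names}}' | while read -r id name; do
    # The container's .Image field holds the ID of its image.
    image_id=$(docker inspect --format '{{.Image}}' "$id")
    os=$(docker image inspect --format '{{.Os}}' "$image_id")
    echo "$name $os"
  done
}
```

With the two containers above running, the Nano Server container should be reported as windows and the Ubuntu one as linux.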

Testing another scenario with a web server

Microsoft offers an image for IIS for Docker called microsoft/iis , which you can use as a web server to host your web applications. Let's give it a try, by running the following command:

docker run --rm -p 8080:80 -d microsoft/iis

The image will be pulled from Docker Hub, then Docker will spin up a new container for it. With the -p parameter we're mapping port 80 inside the container to port 8080 on the host. If we open the URL http://localhost:8080 in the browser, we will see the default IIS page:

Now let's repeat the experiment we did in the first post of the series. Let's run a new instance of nginx, a popular Linux-based web server. You can use the following command:

docker run --rm -p 8181:80 -d nginx

The command is the same as the previous one. We have just changed the image (nginx) and the host port where the web server is exposed to 8181, since 8080 is already used by IIS. Now point your browser to http://localhost:8181 to see the default NGINX page.
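Since the two commands differ only in the host port and the image, they can be captured in a small wrapper. This is only an illustrative sketch for a bash-compatible shell; run_web is a hypothetical name, and it assumes the web server inside the container listens on port 80, as both images used here do.

```shell
# Hypothetical helper: start a detached web server container and publish
# the container's port 80 on the given host port.
# Usage: run_web <host_port> <image>
run_web() {
  host_port=$1
  image=$2
  # -p takes HOST_PORT:CONTAINER_PORT, so the host side comes first.
  docker run --rm -d -p "${host_port}:80" "$image"
}

# run_web 8080 microsoft/iis   # IIS on http://localhost:8080
# run_web 8181 nginx           # NGINX on http://localhost:8181
```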

And if you now run docker ps again, you can indeed see that the two containers are running side-by-side:

    PS C:\Users\mpagani> docker ps
    CONTAINER ID   IMAGE           COMMAND                    CREATED         STATUS         PORTS                  NAMES
    657d728b3f53   nginx           "nginx -g 'daemon of…"     2 minutes ago   Up 2 minutes   0.0.0.0:8181->80/tcp   recursing_pare
    0f72c8b4438d   microsoft/iis   "C:\\ServiceMonitor.e…"    6 minutes ago   Up 4 minutes   0.0.0.0:8080->80/tcp   elated_bardeen

How can we be sure that the two containers are indeed running on two different platforms? We can get some help from Visual Studio Code to check this. As you may remember from when we talked about the Docker integration in Visual Studio Code, there is an option to connect to the shell of a running container. Open Visual Studio Code and move to the Docker tab. Under the Containers section you should see the same containers listed by the previous docker ps command:

Now right click on the microsoft/iis container and choose Attach Shell . Under the hood, Visual Studio Code will perform the following command for you:

docker exec -it 0f72c8b4438da48cf5af9335aa86c3d719961cdf9c60a4abf33e1629e9f16085 powershell

docker exec is used to run a command inside a specific container. In this example:

- We're using the -it parameter so that we can create an interactive connection

- The long series of letters and numbers is the full identifier of our IIS container

- Visual Studio Code has automatically detected that we have configured Docker to run Windows containers, so it invokes the powershell command

As expected, we now have a PowerShell terminal open on the Windows instance which is running IIS:

Now let's try to do the same with the NGINX container. Right click on it and choose Attach Shell:

Well, this doesn't look good. The reason is that Visual Studio Code has tried to execute the exact same command we have seen with the IIS container, which means that we have tried to open a PowerShell terminal on a machine running Linux. Now it's pretty clear why it didn't work =)

We can fix this by manually repeating the command (just press the up arrow in your PowerShell prompt to show it again) and replacing powershell with /bin/sh. Make sure the identifier is the one of the NGINX container; the short form listed by docker ps works as well:

docker exec -it 657d728b3f53 /bin/sh

This time we're using the UNIX shell and, as such, we can properly connect to the Linux machine running nginx. This is a clear demonstration that the two containers are indeed based on different platforms!
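The manual fix can also be automated by picking the shell from the platform of the container's image. The sketch below is an assumption-laden illustration for a bash-compatible shell: shell_for and attach_shell are hypothetical names, and it assumes that Windows images ship PowerShell, which is not true for every image (the Nano Server container earlier showed the classic command prompt).

```shell
# Hypothetical helper: print the shell to use for a container, based on the
# OS of the image it was created from.
shell_for() {
  image_id=$(docker inspect --format '{{.Image}}' "$1")
  os=$(docker image inspect --format '{{.Os}}' "$image_id")
  if [ "$os" = "windows" ]; then
    echo "powershell"   # assumption: the Windows image includes PowerShell
  else
    echo "/bin/sh"
  fi
}

# Hypothetical helper: open an interactive shell in the given container.
attach_shell() {
  docker exec -it "$1" "$(shell_for "$1")"
}
```

For example, attach_shell 657d728b3f53 would open /bin/sh in the NGINX container, while the same call with the IIS container identifier would open PowerShell.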

Building an image for a specific platform

Let's pick up the work we did in the previous posts. If you remember, we built a solution made of three applications:

- A web app based on ASP.NET MVC Core 2.1

- A web API based on .NET Core 2.1

- A Redis cache

The first two applications run from custom images, built on top of the official ones provided by Microsoft which contain the .NET Core SDK and runtime. When you pull an image from a repository, by default Docker gets the variant that best matches your Docker configuration.

We can see this by rebuilding the two custom images. We're going to use the exact same Dockerfile we have seen in the other posts, without changing anything. We'll just run the standard build command from the root of the project. First move to the root of the web app project and run the following command:

docker build -t qmatteoq/testwebapp .

Then move to the root of the Web API project and run the following command:

docker build -t qmatteoq/testwebapi .

Now let's leverage the Docker Compose file we built in the previous post to boot the whole solution. Open a command prompt in the folder that contains the docker-compose.yml file and run:

docker-compose up

You should get, at the end, the same outcome as in the previous post. By opening http://localhost:8080 you should see the test website, which retrieves the list of articles from the Web API. If you refresh the page, this time the list will be retrieved from the Redis cache. If you execute docker ps, you will see the three containers running together:

    PS C:\Users\mpagani> docker ps
    CONTAINER ID   IMAGE                 COMMAND                   CREATED          STATUS          PORTS                  NAMES
    5999bb0ed94c   qmatteoq/testwebapi   "dotnet TestWebApi.d…"    34 seconds ago   Up 26 seconds   80/tcp                 newsfeed
    15e273ff1be7   qmatteoq/testwebapp   "dotnet TestWebApp.d…"    34 seconds ago   Up 25 seconds   0.0.0.0:8080->80/tcp   webapp
    890a8839a1c3   redis                 "docker-entrypoint.s…"    34 seconds ago   Up 26 seconds   6379/tcp               rediscache
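If you prefer a scripted check over reading the table, a small helper can verify that all three containers are up. This is a minimal sketch for a bash-compatible shell; all_running is a hypothetical name, and the container names are the ones shown in the NAMES column above.

```shell
# Hypothetical helper: succeed only if every named container is running.
all_running() {
  running=$(docker ps --format '{{.Names}}')
  for name in "$@"; do
    # -x matches the whole line, -F treats the name as a fixed string
    if ! printf '%s\n' "$running" | grep -qxF "$name"; then
      echo "not running: $name" >&2
      return 1
    fi
  done
}

# all_running newsfeed webapp rediscache && echo "solution is up"
```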

However, there's a difference compared to the last post, even if it isn't noticeable at first glance. Open the Docker section in Visual Studio Code again and move to the Containers section:

Right click on the web application (qmatteoq/testwebapp) and choose Attach Shell:

Can you spot the difference? We have connected to the container running our .NET Core application using PowerShell. This happens because Docker supports the concept of multi-platform images. This means that a developer can publish multiple versions of the same image for different platforms. Microsoft does this for the .NET Core images, since .NET Core is cross-platform.

Since we are running Docker with Windows containers, when we built the image for our web application Docker pulled the Windows version of the .NET Core SDK and runtime. However, this is completely transparent to us. We didn't change anything in the Dockerfile; we're still using the same FROM statements we were using in the previous posts:

FROM microsoft/dotnet:2.1-aspnetcore-runtime AS base

WORKDIR /app

FROM microsoft/dotnet:2.1-sdk AS build

WORKDIR /src

Is there a way to force the usage of a specific platform, in case our machine is able to run multi-platform containers? The answer is yes, thanks to a new flag called --platform which has been added to the latest beta of the Docker engine. In fact, you will find it marked as experimental in the documentation. After the flag you must specify the platform you want to use, which can be linux or windows.

You can specify the platform in three ways:

- By adding the --platform parameter to the docker build command. This way, every FROM statement included in the Dockerfile will pull an image based on the specified platform, if it exists.

- By adding the --platform parameter to the docker pull command, in case you want to grab a single image for the specified platform. When you do this, you can keep using the docker build command without any extra flag since Docker will automatically use the image already in cache instead of downloading a new one.

- By adding the --platform parameter to the docker run command. This way, Docker will pull the right image for the selected platform before running a container based on it.
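The three options above share the same shape: a docker subcommand followed by --platform and the platform name. As an illustration only (docker_on is a hypothetical wrapper name, written for a bash-compatible shell), they could be expressed through one function:

```shell
# Hypothetical wrapper: run a docker subcommand with an explicit platform.
# Usage: docker_on <platform> <subcommand> [args...]
docker_on() {
  platform=$1
  subcommand=$2
  shift 2
  docker "$subcommand" --platform "$platform" "$@"
}

# The three options from the list above:
# docker_on linux build -t qmatteoq/testwebapp .
# docker_on linux pull microsoft/dotnet:2.1-sdk
# docker_on linux run --rm -it ubuntu
```

Keep in mind that the flag is experimental in this beta, so the commands only work when the engine supports it.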

If you want to give this a try, delete the testwebapp and testwebapi custom images you have previously built, plus the standard dotnet images that have been automatically pulled while building the Dockerfile.

To delete an image you need to grab its identifier first with the docker images command, then you can call docker rmi followed by the identifier. Alternatively, you can use the Docker tab in Visual Studio Code, right click on one of the images under the Images section and choose Remove image.
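docker rmi also accepts image names, so the cleanup can be scripted. The helper below is a hedged sketch for a bash-compatible shell; remove_images is a hypothetical name, and the image names in the usage comment are the ones used in this series.

```shell
# Hypothetical helper: remove each image given by name (or ID).
# An image that cannot be removed (missing, or still used by a container)
# is reported and skipped.
remove_images() {
  for image in "$@"; do
    if docker rmi "$image" >/dev/null 2>&1; then
      echo "removed $image"
    else
      echo "skipped $image"
    fi
  done
}

# remove_images qmatteoq/testwebapp qmatteoq/testwebapi \
#   microsoft/dotnet:2.1-sdk microsoft/dotnet:2.1-aspnetcore-runtime
```

Stop the running solution first, otherwise the images are still in use and will be skipped.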

Now let's rebuild our two custom images starting from the Dockerfile. This time, however, we will run the following commands:

docker build -t qmatteoq/testwebapp --platform linux .

docker build -t qmatteoq/testwebapi --platform linux .

Thanks to the --platform switch, when Docker parses the FROM statements it pulls the Linux-based images for .NET Core.
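A quick way to confirm the result before starting the containers is to read the Os field of the freshly built images. This is a small sketch for a bash-compatible shell, with check_image_os as a hypothetical helper name:

```shell
# Hypothetical helper: check that a local image targets the expected OS.
# Usage: check_image_os <image> <linux|windows>
check_image_os() {
  actual=$(docker image inspect --format '{{.Os}}' "$1")
  if [ "$actual" = "$2" ]; then
    echo "$1: $actual"
  else
    echo "$1: expected $2, got $actual" >&2
    return 1
  fi
}

# check_image_os qmatteoq/testwebapp linux
# check_image_os qmatteoq/testwebapi linux
```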

We can verify this by running the docker-compose up command again after we have rebuilt the images for the web application and the Web API. If we right click on the running containers in Visual Studio Code and choose the Attach Shell option again, we will see the same error as before:

Now our web application is running on Linux and, as such, we can't use PowerShell. We need to execute the /bin/sh command against the web application container, which we can reference by the name shown by docker ps (webapp):

docker exec -it webapp /bin/sh

This time we can properly connect to the terminal and see the list of files inside the container using UNIX commands:

Wrapping up

In this post we have seen how the next version of Docker for Windows will bring support for running multi-platform containers on the same Windows machine. We have seen multiple examples in action:

- An Ubuntu container running side-by-side with a Windows Server container

- An IIS on Windows container running side-by-side with an NGINX on Linux container

- A .NET Core solution running in both Linux and Windows containers, since it's a cross-platform technology

You can play with this feature today thanks to the Docker Edge channel, which delivers preliminary versions of the platform before releasing them in the stable channel.

Happy coding!
