The Coming .NET World – I’m scared

Published Jun 26 2019 11:13 PM

First published on TechNet on Apr 16, 2005

A few years ago Microsoft embarked on an anti-Java campaign called .NET, spinning .NET as a revolutionary technology (while failing to explain that it’s really Microsoft’s own implementation of the JVM concept with new languages layered on top of it). The .NET hype has died down somewhat in the last couple of years, but the multiyear marketing blitz has had its inevitable effect: lots of people are writing .NET applications (also known as managed applications). In fact, my Dell laptop includes a managed Dell utility that tells me when there are Dell security alerts.

Both the client and server side of Web applications are ideal candidates for a managed environment, since the .NET runtime handles much of the complexity of cross-network communication and simplifies memory management. However, the proliferation of managed applications has extended to client-only applications. Microsoft already includes several with Windows XP Media Center Edition and Tablet PC Editions and many more are coming in Longhorn.

The reason I’m scared at the prospect is that managed applications have essentially no consideration for performance or system footprint. I’ll use Notepad as an example because I can compare a managed Notepad with the unmanaged one that ships with Windows XP. (Unmanaged applications are also known as native applications, which is not the same as a native application from the point of view of Windows NT, where the term means an application that uses the native API.) You can download the managed Notepad with source code at http://www.csharpfriends.com/Articles/getArticle.aspx?articleID=84.

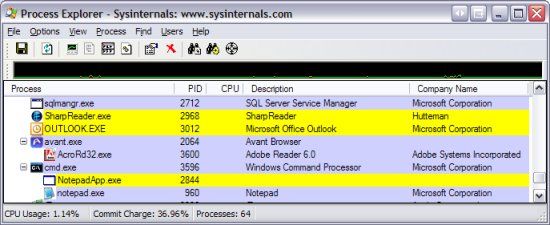

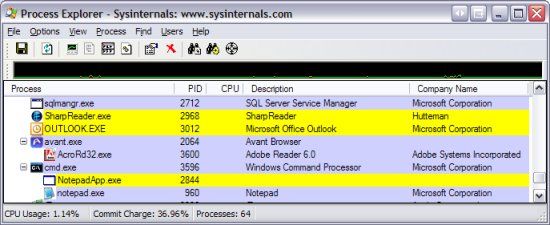

In this experiment I’ve simply launched both versions and will look at their performance-related information with Process Explorer. In Process Explorer’s process view you’ll see the managed version highlighted in yellow, which is the default highlight color for processes that use the .NET runtime:

You can see that Process Explorer has also highlighted Outlook and SharpReader as managed applications. Outlook isn’t a managed application (at least not the Office 2003 version), but I installed Lookout (http://www.lookoutsoft.com/Lookout/lookoutinfo.html), which is a managed Outlook search add-on that loads as a DLL into the Outlook process. SharpReader (http://www.versiontracker.com/dyn/moreinfo/windows/28759) is the RSS feed aggregator that I use, and it’s obviously written in .NET.

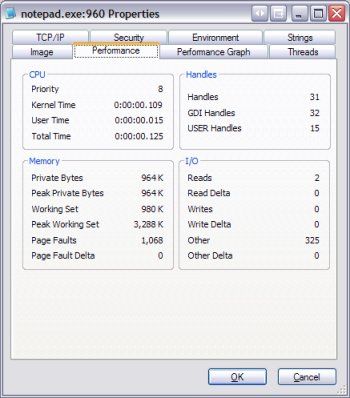

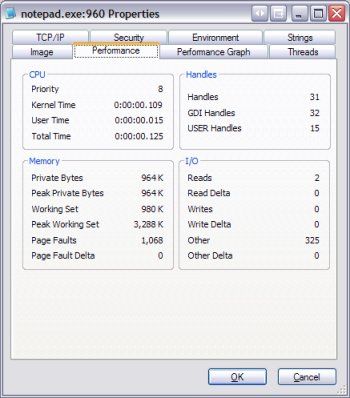

If you open the process properties of both Notepad applications and compare the performance pages side-by-side you’ll see why I hope that Independent Software Vendors (ISVs) and Microsoft stay away from .NET for client-side-only applications. The properties for the managed version are on top, with those of the native instance below:

First notice the total CPU time consumed by each process. Remember, all I’ve done is launch the programs; I haven’t interacted with either one of them. The managed Notepad has taken twice the CPU time of the native one to start. Granted, a tenth of a second isn’t large in absolute terms, but it represents 200 million cycles on the 2 GHz processor that they are running on. Next notice the memory consumption, which is really where the managed code problem is apparent. The managed Notepad has consumed close to 8 MB of private virtual memory (memory that can’t be shared with other processes) whereas the native version has used less than 1 MB. That’s a 10x difference! And the peak working set, which is the maximum amount of physical memory Windows has assigned a process, is almost 9 MB for the managed version and 3 MB for the unmanaged version, close to a 3x difference.
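The arithmetic behind these figures can be checked with a short sketch. The Python below is only an illustration: the inputs are the rounded numbers quoted above (a tenth of a second, 2 GHz, roughly 8 MB versus 1 MB, and 9 MB versus 3 MB), not exact Process Explorer readings, and the function and constant names are made up for this example.

```python
def cycles_consumed(cpu_seconds: float, clock_hz: float) -> int:
    """Return the number of clock cycles that fit into the given CPU time."""
    return round(cpu_seconds * clock_hz)


def ratio(managed: float, native: float) -> float:
    """Return how many times larger the managed figure is than the native one."""
    return managed / native


# Rounded figures quoted in the text (assumptions, not exact measurements).
CLOCK_HZ = 2_000_000_000          # 2 GHz processor
CPU_SECONDS = 0.1                 # "a tenth of a second"
PRIVATE_MB = (8.0, 1.0)           # managed vs. native private bytes, approximate
PEAK_WORKING_SET_MB = (9.0, 3.0)  # managed vs. native peak working set, approximate

if __name__ == "__main__":
    print(f"cycles: {cycles_consumed(CPU_SECONDS, CLOCK_HZ):,}")
    print(f"private bytes ratio: {ratio(*PRIVATE_MB):.1f}x")
    print(f"peak working set ratio: {ratio(*PEAK_WORKING_SET_MB):.1f}x")
```

With the native figure rounded up to a full 1 MB the private-bytes ratio comes out at 8x; the 10x quoted in the text follows from the native process using somewhat less than 1 MB.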

Is 8 MB of private memory a big deal? Not really, but then again this is basically a zero-functionality application (it doesn’t even have search or replace). Once you start to implement features in .NET the private byte usage really grows. SharpReader, for example, consumes close to 42 MB of private bytes to store data related to around 20 different feeds, and the user interface for SQL Server 2005 beta 3 (written in managed code) consumes 80 MB when run on a fresh installation.

Compare that managed application bloat with the memory usage of fully-featured unmanaged applications that have 10 times the complexity of the managed examples I’ve listed: Microsoft Word weighs in at around only 6 MB after starting with no document loaded and Explorer on my system is consuming only 12 MB.

Now imagine a future where a significant percentage of applications are written in .NET. Each managed application, ignoring the memory overhead of document data and shareable memory like code and file system data, will eat somewhere between 10 and 100 MB of your virtual memory. If you think your system is slow now, wait until several of these applications are active at the same time and competing for physical memory.
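To put rough numbers on that scenario, the sketch below multiplies the 10 to 100 MB per-application range from the paragraph above by a count of simultaneously running managed applications and compares the result with an amount of physical RAM. The application count of 8 and the 512 MB machine are illustrative assumptions, not figures from this post, and the function names are hypothetical.

```python
from typing import Tuple


def private_memory_range_mb(app_count: int,
                            low_mb: int = 10,
                            high_mb: int = 100) -> Tuple[int, int]:
    """Return the (best case, worst case) total private memory in MB
    for app_count managed applications, using the per-app range from the text."""
    return app_count * low_mb, app_count * high_mb


def fits_in_ram(required_mb: int, physical_ram_mb: int) -> bool:
    """Return True if the required memory fits in physical RAM without paging."""
    return required_mb <= physical_ram_mb


if __name__ == "__main__":
    APP_COUNT = 8          # assumed number of active managed applications
    PHYSICAL_RAM_MB = 512  # assumed machine, chosen only for illustration

    low, high = private_memory_range_mb(APP_COUNT)
    print(f"{APP_COUNT} managed apps need between {low} and {high} MB")
    print(f"best case fits in {PHYSICAL_RAM_MB} MB: {fits_in_ram(low, PHYSICAL_RAM_MB)}")
    print(f"worst case fits in {PHYSICAL_RAM_MB} MB: {fits_in_ram(high, PHYSICAL_RAM_MB)}")
```

The estimate ignores document data and shareable memory, as the paragraph above does, so it is a lower bound on what each process would actually touch.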

It makes me wonder if Microsoft gets a cut of every RAM chip sold…

Originally by Mark Russinovich on 4/16/2005 8:08:00 AM

Migrated from original Sysinternals.com/Blog

# re: The Coming .NET World – I’m scared

It would be interesting if you could compare it also with a Java application.

4/16/2005 3:30:00 AM by Camillo

# re: The Coming .NET World – I’m scared

i had to indulge only two examples of .net code so far but my senses told me the same thing you've published here.

pinnacle's mediacenter software starts so ridiculously slow on my 3.2 ghz machine that i first thought the task crashed silently.

adding to this annoyance is the fact that starting managed applications seems to be 'invisible' through the absence of the 'application starting' cursor.

autodesk's autocad 2005 starts considerably slower than its predecessor and seems to need more ram for the very same tasks.

4/16/2005 5:55:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

I agree with your analysis though I disagree with your conclusions.

The startup overhead is due to the runtime setup. This process, if you have a look at the Rotor source code, is a little bit heavy, especially because several classes that are part of the base class library are jitted.

This implies that starting a managed application always requires that overhead to be paid. Moreover, if you run two managed applications in parallel, the two running instances of the runtime make no attempt to share resources, leading to considerable overhead.

In some respects this situation reminds me of when the C runtime wasn't shared through DLLs and imposed unnecessary overhead on the working set of running applications. I'm thinking of the C runtime because David Stutz introduces the CLR as a modern C runtime.

However from these considerations one may conclude that CLR should become smarter, trying to learn from lessons of the past. Perhaps Jitted code could be shared among different CLR instances.

My point is that I don't really see any real issue in the .NET model, though I see several aspects where the CLR can be improved.

For instance, I once developed JavaStarter, an application to improve resource usage across different Java applications: the trick was to start a JVM and use it as a loader. This can be done in .NET too: with a few lines of code one may start a runtime to be used as a loader of .NET applications. Each .NET application would run in a different Application Domain, achieving an appropriate level of isolation. This is only a patch, though an effective one, until the MS guys improve the CLR in this respect.

When MS announced that the CLR would go into the OS and that in fact side-by-side execution would not be supported in Longhorn, these were the considerations driving their decision. If you make the OS aware of the runtime it is possible to do amazing optimizations that would make CLR applications indistinguishable from unmanaged ones.

I have to make a last comment: your considerations assume well-written applications. I have plenty of applications running on my laptop that are C++ and suck. Moreover, the C++ inlining strategy tends to code-bloat applications, whereas the CLR tends to favor code sharing.

I think that VMs are inevitable if we are to manage the complexity of the applications we need. Services like reflection and dynamic loading of types are fundamental tools. Thus we should look at how to use these new tools to make the CLR viable, and we must ask MS for more efficient versions of the CLR.

4/16/2005 7:36:00 AM by Antonio Cisternino

# re: The Coming .NET World – I’m scared

At last, someone who publicly rejects VM technologies. I know they are the future of computing, very powerful, bla bla bla.

But I am (as you seem to be) wondering why Moore's law applies to CPU power and network bandwidth but not to application responsiveness or features.

Please, someone explain to me why Amiga OS 4 seems more responsive on a G4 800 MHz than Windows XP on my Athlon 2 GHz. Are programmers really programming gods that we have to worship?

4/16/2005 8:23:00 AM by Vincent

# re: The Coming .NET World – I’m scared

If you check out how SharpReader allocates memory under the CLR Profiler, you'll notice it isn't the best written application when it comes to object usage.

4/16/2005 8:52:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

I wonder if the resource usage you are seeing comes from using the CLR, or from being written in C#.

I don't see what it is with vendors trying to set up their own language (Java, C#, Objective-C, ...) these days. I guess there is a real need for a language that doesn't make it as easy to shoot yourself (and not just in the foot either...) as C++ does? I can understand this for writing GUIs for example, which can be tedious work (in any language). But I'm confident that a good part will stay in C/C++ for a long time, since that can be made to compile on several platforms more or less easily, has decent speed generally, and allows you to do many things to speed it up even further (e.g. where I work we use a custom memory allocator for performance).

But if Java/C# is easier, it may get more people writing software. Not sure if that is a good thing if there will then be more of the 'resource-hog' type of software :-(

4/16/2005 9:43:00 AM by legolas

# re: The Coming .NET World – I’m scared

I agree with Antonio's comments. You are assuming that the current state of the CLR and .NET in general is fixed. Just because it has certain undesirable characteristics now does not mean they are set in stone for all time. There is work being done to optimize many things and I doubt you are the only one to notice that .NET uses a lot of memory currently. Also, as someone else already pointed out, managed or unmanaged if you code poorly it will be very evident to all. Managed code is not a license to ignore common practices and to stop thinking about what is happening "under the covers", in fact managed code makes it so much more important because you are able to do much more powerful things with fewer lines of code. This of course means you NEED to be aware of the cost of these things and alternatives that can make it less costly. Just my 2 cents.

4/16/2005 10:14:00 AM by Ryan

# re: The Coming .NET World – I’m scared

SharpReader (which I also use) has been knocked for memory usage for quite a while. The creator, Luke Hutteman, has even admitted that the current implementation, which keeps all feeds and feed text in memory, is not good. I remember a post from him saying that it shouldn't be used as an indication of .NET memory usage, but of course I can't find that now...

That said, I'm not disagreeing with what you said. Managed applications definitely use more resources. And they start slower. And they are a pain to get installed, requiring a 22 meg "redistributable". I've also seen those problems in unmanaged applications. Poor development is a problem with every language. I think that in a lot of cases if developers really tried to make the most performant and svelte managed applications, you could have some applications that do compare pretty well against unmanaged counterparts. Of course I think this is harder to do for managed apps than it is for unmanaged ones.

(I also happen to think that the best app will be a combination of managed and unmanaged code.)

I do think managed applications have a place today though, and that’s in backend server-side applications. I think that if you are generating web front ends (on Windows) and you aren’t using ASP.NET, then you are horribly behind the curve. Even though I’ve done very little in the area, I think that the concepts behind ASP.NET should make the development process much smoother and make the applications much more maintainable, which in this case, I think is enough to counteract some of the other concerns. Of course, once again, it falls upon the developers to take the best advantage of the platform, and this still takes having good developers.

4/16/2005 10:16:00 AM by CaseyS

# re: The Coming .NET World – I’m scared

I recall an article by Pietrek in MSDN a couple of years ago where he traced the calls made by setting up a simple .Net app. It was impressive to see how many calls got made for creating an app domain and checking security.

So, as long as you know where this "overhead" is going, you can plan accordingly.

As for chewing cycles or bytes, I also recall in the mid-eighties when we were hearing about X-Window and its required 8 meg of RAM and thinking "these guys are nuts". The lesson I learned, then, was: if you have a nice application that people want then the hardware will catch up (sooner or later).

4/16/2005 1:42:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

The basic problem is that in CLR 1.x assemblies don't share pages across processes like regular DLLs do. Microsoft knows about this, and this is remedied in 2.x. So the difference will not be as dramatic anymore.

Even with 1.x it's well worth running managed client apps due to their inherent resistance against security attacks like buffer overruns.

I respect your work A LOT, but this post is just a mix of some bitterness, FUD and plain bad homework.

4/16/2005 3:43:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

It's surprising how many are coming to the defense of .NET bloat. It doesn't matter if it is due to the existing architecture. It doesn't matter if it is theoretically fixed in version now+n. The current incarnation of .NET leads to apps that have a bloated footprint before they are even launched, far more bloat than native code when they ARE launched, and generally worse performance than native code from launch to shutdown. Sure, it's better than Java. But it's still miles apart from well written native code.

4/16/2005 4:34:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

Well said Mark, I've been wondering why authors haven't seen the overhead...

4/16/2005 9:08:00 PM by Hari

# re: The Coming .NET World – I’m scared

>But it's still miles apart from well

>written native code.

Comparing fully managed code to native code is ridiculous. Wow there are size and performance differences? Are you sure? I just can't imagine that something that is dynamically compiled and subject to security/safety verification could possibly be any slower than optimized native code.

4/17/2005 10:45:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

I agree 100% with your analysis. There is only one place to go.

www.borland.com

Look for delphi. Ease of use plus unmanaged code performance!

4/17/2005 6:55:00 PM by Kwadwo Seinti

# re: The Coming .NET World – I’m scared

Not exactly a fair comparison between .NET and other development tools.

Please note that a true .NET application will always load a few runtimes when it loads, which can perhaps explain the memory consumption difference. But when we add functionality to the applications, the difference would become much closer (relatively).

This also reminds me of another comparison, between compiled ASM .com and .exe binaries and C, Pascal and BASIC .exe files in the days of DOS. The size difference between the ASM executables and the others is, of course, very large. But if someone says that because of that we should keep away from those higher-level languages, I'll say that the savings in development complexity outweigh the size and speed advantage when choosing a development tool.

4/17/2005 9:00:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

The .NET version might be 10 times more bloated than the C version. But I'm sure I can write an assembly version that is 10 times faster.

But it doesn't matter. Hardware will catch up - 10 GHz machines will make the extra overhead unimportant.

4/17/2005 10:21:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

This post has been removed by the author.

4/18/2005 10:40:00 AM by Cullen Waters

# re: The Coming .NET World – I’m scared

My response

4/18/2005 10:40:00 AM by Cullen Waters

# re: The Coming .NET World – I’m scared

Software gets slower faster than hardware gets faster.

--

Wirth's law

4/18/2005 10:43:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

IMHO comparing C# and C++ is wrong, because C# was never meant to replace C++, it is meant to replace VB.

If you think of .Net as the new VB, you'll see there are a lot of improvements: great library, good speed, real OO, garbage collection, reliability, and none of the VB stigma attached to it. Is it a surprise that ASP.Net made ASP and VBScript disappear?

Microsoft Word won't be written in C#, it will still be a C++ application that you can extend with .Net, just as you used to extend it with VBA.

State of the art games will still be written in C++ to squeeze every microsecond they can from the machine.

Simple applications meant to be sold on the Internet will still be written in C++ or VB or Delphi or whatever results in a small downloadable, until the end of the decade, when everybody will have the .Net runtime in their machines and networks will be so fast that downloading 20+ Megs will be a non issue anyway.

4/18/2005 11:24:00 AM by M Rubinelli

# re: The Coming .NET World – I’m scared

It would have also been worthwhile to see what numbers you get after NGen-ing NotepadApp.exe.

4/19/2005 3:22:00 AM by Atif Aziz

# re: The Coming .NET World – I’m scared

I don't think .NET is just a better VB. There are already real world 3D .NET based games like:

- ALEXANDER THE GREAT

- Arena Wars

As for perf, I would like to remind everyone that IronPython has proven faster than the C interpreter of the Python language. This has been possible because the .NET implementation has taken advantage of several features of the platform that have compensated for the efficiency loss introduced by the virtual machine.

Everybody here praises C++; I would like to point out that there are programming languages faster than C++. Nevertheless, the benefits introduced by the language are worth it, and compiler writers always tend to push the limit with improved compilers. I think the same will happen with VMs.

4/19/2005 7:24:00 AM by Antonio Cisternino

# re: The Coming .NET World – I’m scared

As a developer of commercial software I am always stuck with the problem of deciding what language to use for development. I skipped MFC since it never seemed to be able to keep up with the latest Windows look as expressed in the MS Office apps. I've learned that I cannot force corporate support types to load the latest .Net runtimes just for my app or even the latest version of IE to support whatever Internet things my app may do. So I have stuck with native C++ code with no dependence on need-to-install-also runtimes.

4/19/2005 8:57:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

C++ is way faster today than any managed app! The reason is simple: C++ compilers have been out there for decades, and are an order of magnitude more advanced.

But this will change very soon! When you compile a C++ program, you compile it at the minimum supporting CPU code, and probably use cpuid or something similar for fine-tuned architecture-specific code.

But the .NET runtime can, upon managed app installation, optimize the app to the best that the machine it is running on can give. MMX/SSE2/whatever. The CLR JIT-er will only get better!

And memory isn't so expensive nowadays. When LH ships, most new machines will have 1-2Gigs of RAM anyway..

And it isn't true that .NET code is always bloated! For example, generics in C# 2.0 are implemented natively in the CLR, which reduces code bloat and increases JIT-ed (binary) code reusability. In C++/Java, templates lose their identity once they are compiled.

Moreover, .NET supremacy will very shortly come out, once the multicore CPUs become common. We'll see what's faster - i386 C++ binary or managed app :)

4/19/2005 4:50:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

Don't be scared, people: if VMs do become supreme, they will still need us C++ developers to write these VMs ;-)

4/19/2005 6:43:00 PM by Vincent

# re: The Coming .NET World – I’m scared

I just hope that Microsoft will still allow native programs in the next versions of Windows. I would dislike going back to the BASIC days, even if the modern name is dotNET.

4/19/2005 7:37:00 PM by ISV

# re: The Coming .NET World – I’m scared

"And memory isn't so expensive nowadays. When LH ships, most new machines will have 1-2Gigs of RAM anyway.."

Uh, in what country? Is Longhorn even more delayed than I thought? 1GB can't be assumed across the board with all new PCs shipping in all markets around the world for MANY years...

4/19/2005 8:10:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

I think you're barking up the wrong tree. The benefits of .NET are so compelling in any environment that everybody should be using it. So what do we do about the overhead? Avoiding .NET isn't a good answer. Microsoft should optimize the overhead out; I don't see any reason it can't be brought down to being a slight overhead.

4/21/2005 2:47:00 PM by David Douglass

# re: The Coming .NET World – I’m scared

I posted a response, including some .NET 2.0 beta 2 footprint comparisons for Console and WinForms apps.

The bloated world of Managed Code

4/22/2005 12:10:00 AM by Jeff Atwood

# re: The Coming .NET World – I’m scared

It would be interesting to know if the time/space differences are constant or proportional. .Net compiles code and loads metadata that isn't there in C, but it only does it once.

The sad fact (or maybe it's a happy one) is that .net is a whole lot easier to develop to than, say, C++/COM; the libraries are more regular, the infrastructure is more sophisticated (c.f. ATL), and the tools have improved since VC6 (although you could do unmanaged C++ in VS.Net). Oh, and the languages have a shorter learning curve.

Seems like VMs are going to be a fact of life.

4/25/2005 5:55:00 AM by James

# re: The Coming .NET World – I’m scared

Comparing the two Notepads purely based upon startup time is like trying to compare car performance without taking the cars off the lot.

If you load a 4 MB file into each of the Notepads, you may be surprised that the .NET version uses 1/3 the CPU time of the regular Notepad. Granted, the .NET version does use more memory, but given that software licenses are generally priced by CPU, this might not necessarily be a bad thing.

Two tweaks I would make to help the .NET performance numbers:

1) When opening the StreamReader, provide a large buffer size. This will take care of most of the I/O difference.

2) After loading the TextBox with the file contents, make a call to GC.Collect(). This will free up a lot of the extra memory the .NET version used to load the file.

Bottom line, the programmer always needs to be aware of performance trade-offs being made. But even for what is effectively a training exercise with little time given to tweaking the performance, at least in terms of CPU, the .NET Notepad greatly outperforms the regular Notepad.

4/26/2005 8:50:00 AM by Greg

# re: The Coming .NET World – I’m scared

Have no fear 64 bit computing is here....

4/30/2005 5:56:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

people here keep talking about shared dlls... this was a windows 9x concept!!!

in nt4 onwards each process loads an image of the system dlls (including the c runtime) into its own memory space. no sharing is allowed, to increase system stability.

5/4/2005 2:16:00 AM by gareth

# re: The Coming .NET World – I’m scared

Those of you coming to .net's rescue: Given a choice between targeting Win64 or .net -- which platform do you think MS' own Office team will choose?

This discussion is far from new. Recently Java was THE Language to use. Many of our competitors switched. They typically employ more developers, have bigger deployments, use more memory, definitively eat more CPU -- but they usually (always) end up with fewer features than our application. We don't feel the urge to switch to Java at all...

The only problem with the tool of our choice (Borland Delphi) is that Win64 isn't yet a supported platform. .net is a supported target though, but I don't quite see how we will be able to make that transition. We need more speed, not less.

--

Rune

5/24/2005 10:44:00 AM by Rune

# re: The Coming .NET World – I’m scared

First, Mark, are you a programmer? How much do you know about .Net beyond just comparing Notepad and looking at bytes used?

dotNet rocks! I can't wait for the new .Net 2.0.

If I were you, I would say "The Coming .NET World - I'm extremely happy"

5/28/2005 5:30:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

You see Mark, why is ASM language obsolete? Does any programmer bother to write ASM code for faster speed? NO! Spend a year to write a simple database application in ASM? Hell NO! I'd rather spend a few minutes in .Net.

Long live .Net!

5/28/2005 5:40:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

idiot .NETers... open ur eyes...

5/31/2005 1:56:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

I would like to comment on the use of computer languages in general. For instance, why did sane people ever use VB? The answer is, just because it was pushed and advertised by Microsoft. Delphi was far better and still is. Why do we use C#, which was developed by the originators of Delphi? Same answer. Why do we use Java? Because "computer scientists" are in love with its complexity, just as mechanical engineers love complex machines regardless of whether they perform better.

Personally, I think Microsoft and Intel set the world of computing back 10 years. The Amiga operating system, although buggy, was years ahead of Microsoft. The 8088 chip was a short-sighted design compared to the Motorola processors.

6/5/2005 1:42:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

This is not shocking. I've been using personal computers since the mid-80's and even owned a bona fide IBM PC (one of the originals). Back then, the fastest apps were all those written in assembly language and manipulated the hardware directly (device driver? what's that?).

Knowledge of assembly language had such a cool factor with people back then that PC Magazine used to publish all the source code to their utilities, often in 8086 assembly.

You would be hard pressed to see such a thing today.

While I do agree with Mark to an extent, the reality is, Moore's Law has continually eroded the need to program at the metal of the day (before too long).

I've seen it on my own desktop in various ways but the one thing I do that has been pretty dramatic in this regard is the fact that I run an X server. This is so I can see LINUX applications on my Windows desktop. I maintain a LINUX server and will readily run GUI based applications (logically there) and display the resultant windows on my Windows desktop. The free X server I use on Windows has gotten better with every motherboard upgrade I've made. Now, with a 64 bit Athlon system and with switched Ethernet, I have to say, that LINUX Mozilla on my Windows XP desktop runs nearly as well as if it was running locally. It truly is impressive to see the gains from a couple of years ago.

I remember the resistance to high level languages among the assembly language folks. The argument against anything before the advent of C was always speed. I know, since I was one of them. I learned 80x86 assembly and retain a good amount of knowledge from those days. My favorite instruction was REP MOVSx. Just set up your SI, DI, CX registers and rip away (think BitBlt). And who can forget XLAT.

But programming in assembly today is of course largely a waste of time. It's great that I know it since I can hang with the best of them but with computing power ever increasing, the argument Mark is making will weaken more and more until it becomes insignificant.

Today I have 1.5 gigs of physical RAM on my system. If you think that is a lot, I'm considering going to 3 gigs of physical RAM. Why? Funny you should ask, I'm tired of loading contemporary games like UT2004 and DoomIII then seeing my disk thrash as virtual memory is called upon a bit too much. I tend to have a lot on my desktop and I don't quit applications to launch these games when I need a break. I have a dual monitor system and I've monitored how much physical RAM is left when launching DoomIII with a typical suite of applications that I leave open. After the main DoomIII game fires up and loads a save point, I have 110KB of physical memory free out of 1.5 gigs.

So it is no surprise that when I quit the game, the disk is a bit busy as the virtual memory subsystem kicks in. Yes, I can get faster drives, but faster drives won't beat the effect of doubling my memory. (Aside: Though I must say, Serial ATA is nice, unfortunately my Maxtor Serial ATA drive died recently and I've been operating with older backup drives. No more Maxtor drives for this fellow, two brand new Maxtor drives failed within six months on me.)

If I do carry this out, this will severely mitigate any negative notions I may have of .NET applications being pigs.

I remember all the noise when IBM and Microsoft released the very first version of OS/2 - everyone was complaining of the fact that you needed four megabytes to run it well. All the usual suspects in PC Magazine wrote about it, Bill Machrone, Dvorak, the late Jim Seymour and the ever faithful Zachmann ("OS/2 is gonna rule!!!").

Think about how foolish that sounds today, i.e., "Four megabytes is too demanding!!!"

Funny thing is, I had a Compaq Deskpro 386 with an unheard of 10 MB of physical memory. Needless to say, my experience with computing back then was quite different from that of most people. Or rather, my perception of what seemed demanding was. Yet time left that once mighty system in the dust.

Moore's Law will erode this argument a lot sooner than you think. Anyway, just my 2 cents.

-M

6/6/2005 4:01:00 AM by Mario

# re: The Coming .NET World – I’m scared

1 MB of RAM costs 10 cents.

40 MB of RAM costs 4 dollars.

soft costs more than memory.

don't fear.

your everyday dinner costs more than that damn memory.

it's cheaper to boost your productivity as a developer than to squeeze out memory.

6/10/2005 10:12:00 AM by Sergey

# re: The Coming .NET World – I’m scared

Raymond Chen and Rico Mariani recently did a 'dueling blogs' series where Raymond was trying to optimize the performance of an algorithm in raw Win32/C++ vs. Rico optimizing the same functionality in .Net/C#.

Check out this post in Rico's blog for the links to both sides of the conversation.

Very interesting reading.

--

In a world where you can buy 2GB of RAM for ~$175, and where for any truly performance-sensitive areas of your code you can still code in C++ and seamlessly integrate with any of the other supported .Net languages for less sensitive areas, why wouldn't you take the free programmer productivity that comes with a modern VM environment? (#1 on the list being automatic memory management.)

Never mind the inherent security benefits, buffer overrun checks, and cross-platform compatibility that come standard.

6/23/2005 4:32:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

Delphi was always the king of rapid Windows app development. And no 22mb .NET framework. Word.

6/24/2005 8:56:00 AM by Dave

# re: The Coming .NET World – I’m scared

I think Quake II is a far better comparison to use than Notepad.

7/28/2005 5:57:00 PM by RyanLeeSchneider

# re: The Coming .NET World – I’m scared

I think some of you seem to have missed an important point Mark has made here when you mention various .NET apps in isolation. A lot of the arguments go something like, "sure the app is a bit slower and takes a bit more memory but it was easier to write". But what would happen if the majority of applications on the system were written with .NET and not just the odd one? If every process on the whole system is a bit slower and takes a bit more memory, the machine as a whole will need to be faster and have more memory. I’m no expert on .NET but I often find myself wondering what the price of its success is. For example, it's probably OK to have the odd application lazily freeing memory during a garbage collection run, but if all the applications on the system did this, what would happen?

I am not trying to say that either managed or native code are evil or the way to go. But I have found it difficult to find an informed opinion on the costs and benefits of .NET that isn’t a religious war. In this respect I found this article refreshing.

8/16/2005 10:57:00 PM by Steve

# re: The Coming .NET World – I’m scared

Hello,

since we're developing a large multiuser DB application and we have a direct comparison with its predecessor coded in native VB, I can draw some conclusions:

- the language itself (C#) is fine; some features, compared to C++, are only in .NET 2.0 (generics), but I can live without them. Other language concepts are fine

- memory management and GC is poor. It's good for simple tasks, but it's nondeterministic, and as a result it forces us to reuse instances as much as possible, since we're not able to ensure that Dispose is equal to freeing the memory.

- boxing is a rumour of memory. I hoped boxing would be improved in .NET 2.0, but it's not. Since generics seemed to be a fine way to remove untyped ArrayLists and Hashtables, it's a problem. Direct access to typed values using generic ArrayLists is not possible (look at Ian Griffiths' blog).

- JIT is a duty of CLR. We all hope NGEN in 2.0 will be improved as promised. I tested it only slightly

- ease-of-use concepts force senior developers to do more supervision over junior developers

- ease-of-use concepts can result in a poor application with bad performance - you still have to know exactly what you're doing (e.g. VB late bindings - one big sh...)

- the 2003 IDE, especially the designer, is poor.
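The memory-management bullet above separates two things: releasing a resource deterministically (what Dispose does) and the runtime actually reclaiming the object's memory, which happens later at the collector's discretion. The sketch below is a loose analogy in Python, not .NET code. `Buffer` and `demo` are hypothetical names, the context manager stands in for Dispose, and a reference cycle is used so that only the garbage collector can reclaim the object.

```python
import gc
import weakref


class Buffer:
    """Hypothetical resource holder; __exit__ plays the role of Dispose."""

    def __init__(self, size):
        self.data = bytearray(size)
        self.closed = False
        self.self_ref = self  # reference cycle: only the GC can reclaim this

    def __enter__(self):
        return self

    def __exit__(self, *exc):
        self.closed = True  # resource released; memory is NOT freed here
        return False


def demo():
    gc.disable()  # keep the collector from running on its own during the demo
    try:
        with Buffer(1024) as buf:
            probe = weakref.ref(buf)  # lets us check whether the object still exists
        closed = buf.closed
        del buf
        alive_after_dispose = probe() is not None
        gc.collect()  # explicit collection reclaims the cycle
        alive_after_collect = probe() is not None
    finally:
        gc.enable()
    return closed, alive_after_dispose, alive_after_collect


print(demo())
```

The object is marked closed as soon as the `with` block ends, yet it stays in memory until a collection runs, which is the gap the bullet complains about.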

My opinion is that, as of .NET 2.0, it is good enough to replace native applications.

If you can, go for 2005.

Ondra

9/1/2005 2:49:00 AM by ospilka@elinkx.cz

# re: The Coming .NET World – I’m scared

One important point that is overlooked here, in my opinion, is that MS is more or less *forcing* managed code execution down our throats.

It's going to get harder and harder to develop C++ applications for the Windows platform. MS already strongly deprecates C++ for the vast majority of software development tasks, and that isn't going to change.

Gradually, third-party component support for C++ development on the Windows platform will also slow to a trickle. It will become very challenging to develop a new Windows app with a modern look & feel using C++.

Bottom line: MS isn't going to reverse course at this point.

10/4/2005 7:50:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

I like coding in C# .net because I find it faster and easier to find the functionality that I need and I don't have to worry about memory management so much.

I have a toolbar app that periodically wakes up and kills useless processes (like ctfmon) or adjusts other processes to a desired priority.

Process Explorer was reporting the working set as 24 megs, but in the debug version, calls into the GC for the working set were reporting 600k. To try to reduce the working set I now explicitly call the GC after every timed process check. However, there is still an order of magnitude difference between the memory usage reported by the GC and by ProcExp.

10/6/2005 3:14:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

I am willing to bet that nobody will remember .net, java or C# in 20 years.

11/3/2005 7:53:00 AM by msekler

# re: The Coming .NET World – I’m scared

Mark,

Not to argue on this topic, but just to make an adjustment on importance of good tools vs. good programmers.

Here is a screenshot of PROCEXP.

Note it takes 27 MB of Private memory. It was also consuming 62% of processor time at that moment doing pretty much nothing related to my main tasks at work.

This is obviously abnormal and should be considered a bug, but my point is that good tools reduce the likelihood of such bugs and thus ultimately, with a good OS will lead to better use of computer resources.

P.S. I just reread my comment and realized that in all the projects I worked on, good programmers were more important than good tools :) Still, .NET should not be underestimated.

12/2/2005 4:26:00 AM by Egor Shokurov

# re: The Coming .NET World – I’m scared

What is interesting is we always talk about the "coming .NET world" or "the future".

It's not the future though. It was released what, some 5 years ago.

So why isn't it being used? What's the last useful piece of software you used that was written in .NET? All the main applications that I use every day are still 32-bit Windows API based apps.

On the other hand, did it take 5 years for 32-bit to even begin to kill 16-bit? Maybe .Net takes 10 years to propagate? But I thought technology was supposed to move fast, and not at a snail's pace, i.e. ten years.

12/31/2005 11:31:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

Someone mentioned "Development Complexity" as a good reason to choose the tool, probably implicitly pointing to .NET as the candidate... Well, I completely disagree. Development Complexity will "choose" the Development Model and Architecture to follow. You can make simple apps difficult to maintain if the architecture is bad, or extremely complex and large apps (our case, with over 2 million lines of code) really EASY to maintain and grow. The key is in the architecture and the development model, in my experience... If the tool you use supports the architecture and development model, you are on the right path. In our case, we use Delphi plus a myriad of scripting languages on top, and it has worked like a charm so far.

2/14/2006 5:41:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

VMs are, in my view, just another unnecessary layer of indirection. In reality I believe there isn't any advantage. Cross-platform? Busted, see Qt. Security? Busted, see the MS Java VM: they weren't able to deliver a secure VM, heck, they aren't even able to deliver a secure system based on the NT kernel. How can one believe it will be better with .NET? The responsiveness of today's very powerful PCs, eaten up by .NET apps, is exactly the same as that of a tuned 386 with Win 3.0 from 1992. The software does almost exactly the same work. Only the first one requires hardware that is billions of times faster.

2/23/2006 10:03:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

As computers get faster, developers can write in a higher-level language and be less concerned about performance.

We have moved from assembly to C to C++ and are now moving to .NET.

An interesting counterexample is Torrent applications. Azureus was the most popular client, but has recently been passed by UTorrent.

Why? Because UTorrent is much faster, and people like to leave Torrents running in the background.

The difference between Azureus and UTorrent is that Azureus is written in Java, while UTorrent is written in C++ using Win32 directly.

3/25/2006 6:58:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

VMs are great for cross-platform apps, it's just that .NET runs under Windows only... Still, on my PDA I HATE .NET apps; it takes ages to start even the simplest one (same with the RAM too). Someone was saying most PCs have 1-2G of RAM... Do you know that Windows Vista takes up 1.5G just after booting up?.. How does THAT compare to your PC? I have just that amount on my laptop and I thought it was plenty of RAM...

3/26/2006 1:15:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

Has anyone noticed Windows hasn't been updated in 5 yrs? It's the OS that sets the maximum memory. I'm fine with "managed" apps eating more memory as long as the OS isn't limited to 3-4 gigs. The talk about Moore's law is fine and all, but the platform defines many of the "hardware" features by abstracting them for use by applications. Software is not bound by Moore's law. So yeah, there's more memory and the CPU's are faster and have more features. But is the OS letting you take advantage of all those increases?

And come on .Net is bigger and fatter, admit it. Comparing ASM to HLLs in the 1980's is not even close to the same. C++ is harder to work in than C#, but it's not memorized Op Codes vs oddly structured english. It's oddly structured english vs less oddly structured english.

The memory bloat bothers me, but the file access bloat is much worse. Storage access times do not double in speed every 18 mo. Windows runs to the HDD way too much as it is. Encouragement from .Net is not necessary.

And shifting the blame to the CLR is a cop-out. MS wrote the CLR; if it was this bad at bloat they should have fixed that before publishing 1.0, not waited till 2.0 or whatever.

I'm surprised how many people say this is an unfair comparison. It's the most basic comparison and the first one I would start with if profiling. And it's the one that counts the most. I'm so glad the apps can be optimized internally or this or that can be done, but the simple fact is the .Net app is bigger and slower from the start.

The talk of loading all the libraries and not sharing between other .Net apps is rather humorous. Didn't MS sell all the COM/DLL love to us in the mid-to-late '90s? Now all those assertions are false? And it takes 3 or more versions to add that to the Framework?

3/28/2006 12:54:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

My laptop has 1.5GB of RAM.

Feel free to code in assembly language, if you want.

3/29/2006 10:07:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

Well, I'd like to say a few words about developing new apps on the Windows platform. I'm really disappointed in Microsoft. For more than 10 years they haven't made any relevant changes to the way you write good-looking, easy-to-code GUI apps using the native APIs (Win32 and MFC). I always spend lots of time when I need to design something with a modern GUI, and I'm shocked every time I have to search MSDN for hours for the next LPNMHDR-like structure or for the side effects of function X. .NET will probably solve this problem. I haven't tried it yet, but I surely will.

Our team develops a complex cross-platform 3D graphics framework (using a self-implemented COM-based architecture). We have implemented all the easy-to-use HashTables, Arrays, Strings, Tokenizers, Trees, Lists and RTTI ourselves. It was hard work. We use C++ only because we need to support systems of every kind (Symbian smartphones, PDAs, Pocket PCs, Linux, Windows). Java and .NET are not a good choice for this task (because of portability and speed issues). But we need the ease of C#, which is why we have invested years in implementing some of its nice features.

We also need tools, which are mainly Windows GUI-based apps. But I'm tired of using the idiotic old MFC for that. I will try .NET, but I'm not sure how I could call a function from our DLL like Search(IN CONST StxString& rSearchPattern, IN StxArrayT<StxStorageEntry*>& rResults) in C#. I do not want to write any wrappers. Is it possible? I don't know, but if it is possible, it is OK.

I would not switch to .NET to write the core functionality of such a framework, because our everyday scenario is "Load 40-50 models, each with more than 500 000 polygons, create the BSPs, octrees and so on, and then create the scene graph where one geometry object is shared among different graph nodes (which means incrementing the ref counters)". This would mess up the GC of any VM language and the memory would not be properly freed. New attempts to add objects to the scene would then lead to "Out of memory". The garbage collection would be slow, too. For time-critical jobs this is fatal. Although C++ is harder than C# for memory management and for the coding itself, you have full control over what is happening in the app.

More and more students today are lazy and don't have the excitement for coding that earlier ones had. Such people study computer science just because of the "big money". Knowing what is happening behind the code is important but hard. If it were easy, everybody would be a "computerman" and most of us would suffer the "benefits" of unemployment. Even having some knowledge of ASM is not that bad. Some compiler optimizations will make the code fail in release mode, so you have to look at the asm to see what causes the problem.

It is bad to forget the old things and do only the new ones, especially when the new ones are based on the old stuff. Just a small example of what I mean: in math we have addition, "+". It was developed thousands of years ago. In the 20th century matrices were introduced. Should we forget addition? No, because matrices use addition. It is the same here. The VM stuff uses C/C++ for translation, C/C++ is translated into ASM, and ASM into hex (machine words). I think the new generation of coders must learn more than we did and not easily say "C++ is shit, I do not need it, VM rulez". I have lots of friends saying this. And they are always on the phone asking me, "Oh, man, help, how can I implement this or that feature? Language X has no/poor support for that." They have problems just because they are too lazy to read that "old and unneeded" stuff, as they call it.

Last thing - my personal opinion:

C++ for time critical, crossplatform and memory dependent code, else VM.

P.S. Windows Vista is written mainly in C++. Only irrelevant piece of code is .NET (probably to enable its support). MS Visual Studio 2005 is 90% C++, rest is .NET only to support it. The new MFC80 redistributables are shit!
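The shared-geometry scenario described above (one geometry object referenced by several scene-graph nodes, each bumping a ref counter) can be sketched roughly as follows. This is Python used purely for illustration, not the commenter's C++ framework; `Geometry`, `Node`, `add_ref`, `release` and `detach` are hypothetical names, and the `freed` flag stands in for actually releasing vertex data.

```python
class Geometry:
    """A mesh shared by several scene-graph nodes (hypothetical type)."""

    def __init__(self, name, polygon_count):
        self.name = name
        self.polygon_count = polygon_count
        self.ref_count = 0
        self.freed = False

    def add_ref(self):
        self.ref_count += 1

    def release(self):
        self.ref_count -= 1
        if self.ref_count == 0:
            # A real engine would free the vertex and index data here.
            self.freed = True


class Node:
    """Scene-graph node that holds one counted reference to a geometry."""

    def __init__(self, geometry):
        self.geometry = geometry
        geometry.add_ref()

    def detach(self):
        if self.geometry is not None:
            self.geometry.release()
            self.geometry = None


mesh = Geometry("model", 500_000)
nodes = [Node(mesh) for _ in range(3)]  # three nodes share one mesh
count_when_shared = mesh.ref_count

nodes[0].detach()
nodes[1].detach()
freed_early = mesh.freed  # still referenced by the last node

nodes[2].detach()  # last reference gone: released immediately
print(count_when_shared, freed_early, mesh.freed)
```

The point of the design is that the release happens at a known moment, when the last node lets go, rather than whenever a collector gets around to it.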

3/29/2006 10:53:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

To respond to the method of testing... I guess this says that if I were to compare a java app built by Sun Developers and then compare it to the performance of an app written by some joe in an article forum I should think my results are valid? I think this little experiment is bogus.

4/25/2006 3:46:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

I think the "computers will get faster" argument is a joke. Is the idea that we are supposed to run as fast as an 8080 at a few MHz the point? Why, when there are thousands of developers, is my PC still a DOG when it comes to performance?

When the 486/66 boots faster than my 2.8Ghz XP box then there are some serious design problems.

.NET is but one of the fat bloated slow application platforms that will continue to make my PC look like its running at 66mhz.

So what if my PC runs at 10ghz if it acts like it runs at 66mhz whats the point? What am I gaining?

Yeah hardware will get faster so we as developers are supposed to get sloppier? No No No..

5/2/2006 11:01:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

.Net vs. native code is the same argument I heard MANY years ago -- just different languages.

I used to code 360 BAL (basic assembler language) and also protected mode stuff. We used to laugh at the run-times for FORTRAN and COBOL.

The early days of PC's had the assembly guys laughing at any and all high level languages.

Then, we had the C guys laughing at the ASM guys because they could develop faster.

I suspect that the my language vs. your language argument will never go away. It is, after all, just a slight step above the Ford vs. Chevy stuff I heard in H.S.

Stuff has changed over time. When I started, we had programmers earning $5,000 per year working on multi-million dollar machines. Now, we have people earning up-wards of $100k programming a $1,000 machine. The economics have been turned completely upside down. At one point, we had to optimize the machine -- now we optimize the developer.

Also, one needs to look at the economics of software development. Software companies that see a market for their wares numbering millions of seats couldn't care less about developer productivity. That cost is amortized over a million-plus copies and becomes inconsequential.

There is a large difference between that model and the one where you build a copy of one -- the typical corporate environment. Often, the major cost element is the time associated with development time and the subsequent opportunity cost of not automating the latest need.
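A rough way to see the two models side by side is to spread the same development cost over different numbers of copies. The sketch below is Python; the $100k figure is the salary mentioned above, while treating the whole project as one developer-year and the exact seat counts are assumptions made only for illustration.

```python
def dev_cost_per_copy(developer_cost, copies):
    """Development cost carried by each shipped copy."""
    return developer_cost / copies


one_developer_year = 100_000  # salary figure from the comment; one year is an assumption

mass_market = dev_cost_per_copy(one_developer_year, 1_000_000)  # shrink-wrap product
one_off = dev_cost_per_copy(one_developer_year, 1)              # in-house "copy of one"

print(mass_market, one_off)
```

With a million seats the developer's time costs about ten cents per copy; with a single in-house copy it is the whole bill, which is why developer productivity dominates there.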

The bottom line is there is no answer to the Language "A" vs. Language "B" argument or Environment "A" vs. Environment "B" argument because the questions are nonsensical.

BTW, the first system I worked on did not have an OS. It used an I/O monitor that was a total of 1022 bytes. If you know why 1022 instead of 1024, you know which system I speak of.

5/10/2006 2:31:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

.NET is not a higher level language than C++, this is not the same as "assembler vs. C++" IMHO. It is more like interpreted vs. native code or something like that...

6/1/2006 8:54:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

I think your analysis is not complete. People, we should not argue without concrete facts. Please provide some experiments you have done with .Net code and native code that could give us a meaningful look inside.

Wohoo, I agree .Net is cool, but I want its performance as rock as native code too. lol

6/2/2006 9:10:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

Hi Mark,

Well, this seems to be a year-old post that probably predates .NET 2.0. I got here while googling for a memory footprint comparison between .NET and C++ applications. I've been a professional C# programmer since the first days of .NET 1.0.

I took a look at your post as well as the comments, and I think you are spot on about being scared of .NET.

The fact is, in my day job, we develop enterprise solutions and customized applications for client businesses. Clients such as these do not necessarily care about adding an extra bit of RAM to their workstations, as long as they are delivered well-behaved, fully functional solutions in the shortest amount of time. .NET of course is the perfect platform to do this on. So in the whole debate of whether .NET should be chosen over C++ or not, I'll have to say, well, .NET does have its uses...

But in my own spare time, I do work a lot on what can be called "consumer" applications: apps such as web browsers, word processors, media players and games. Now these applications need to run on end-user PCs and obviously, you shouldn't be writing applications expecting the user to fetch extra RAM cards if he can't run your app. As an end user, think of the number of applications you've thrown away just because they were too sluggish.

Last year I was at MS TechEd, where they were unveiling .NET 2.0. There was this guy who was doing the session on C++/CLI (ain't that what they call it?). At the end of the session, I went up to him and asked, "hey, what've you done with WTL?" and the guy says, "WTL? Oh... That's obsolete, legacy... drop it... upgrade to C++/CLI". And I left TechEd that day thinking that MS is full of morons if they give up on something as efficient as WTL... (Well, I can assure you that that isn't the case, because I believe after a month or so they released WTL 8.0.)

So, before I go ranting out a long comment, lemme just say this in conclusion...

As a programmer, I love .NET. It helps me get my work done in record time. But the software world is not about us programmers having it easy, is it? It's about what our clients want from us... If they want systems done in record time, and aren't bothered about the sys resources, then a .NET app is what they will get from us.

But those programmers who write apps that are going to run on my mom's PC, stay the hell away from .NET... please! RAM is precious to them. They didn't go out and buy RAM so that you could have it easy and do it .NET style; they bought it to run media players, play games, burn DVDs...

catch my drift?

6/21/2006 11:56:00 PM by Sameera

# re: The Coming .NET World – I’m scared

Hi all

I am a professional programmer and I have used C/C++, ASM, VB6, Java, C# and VB.NET for various projects. I see people arguing like kids, for no reason. Each language has its purpose. .NET applications can give shorter development times, and for some companies (especially small to medium ones) that don't have the luxury of time, this is important. For example, for the last year I have been working on ERP applications. When a client of our company needs an alteration, I can do it (depending, though, on what he/she needs) in much less time than it would take me with VC++. In a case where I needed better speed I would definitely choose VC++. And something else: people don't abandon a language and start learning a new one from the beginning. So people who have been coding for (let's say) 10 years in C++ won't change that. The same goes for someone who works in a .NET language. I would suggest that younger people learn C++ and then a .NET language if it is needed.

PS:

No one mentioned that .NET implementations exist for Linux and Mac OS. See dotGNU

http://en.wikipedia.org/wiki/DotGNU

7/19/2006 4:42:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

While I am a hardcore assembly programmer who today writes more C++ than asm for obvious reasons, this "from asm to C++" then "from C++ to .NET" makes no sense at all.

C was the answer to the intrinsic limitations of asm, about portability and productivity, and C++ was the answer to the limitations of C in big projects.

While C++ can be improved in many regards, .NET is not the genuine answer of the computer scientists to C++ problems, .NET is rather the Nth (with N very large) attempt of Microsoft to make big money while ruining its competitors and thus the computer industry in general with crap, bloated products.

Name one big defect of C++ that C# "fixes" and couldn't have been fixed by another, non-managed, language maybe defined by an ISO committee, rather than a single, greedy and known-to-like-mob-practices stinkin' company.

.NET and the languages on top of it are just another attempt of Microsoft to kill better competitors using ugly practices, it's not a gift from God to improve the computer industry or the users' experience.

If C++ is not perfect, improve it. Java wasn't an improvement over C++ (wasn't even meant to be, but today some pretend it is), it was a different thing. C# pretends to be a replacement for C++ (and in Microsoft original dreams, probably also a weapon to kill cross-platform development and make Windows the only supported platform in the world. Yes, it wasn't Microsoft who produced Linux and Mac versions (still very limited) of the .NET crap).

Ok, .NET is not totally crap, because it steals ideas from Java which is not totally crap and C# is not totally crap because it steals ideas from C++ and also implements things that C++ should have had but couldn't to retain the full C compatibility (and for other stupid reasons at times).

But IMHO Microsoft is truly mob, period, and everything that comes out of them is to be taken with suspicion. If you know the company. Otherwise, you're probably just a kid who doesn't know the history of computer development and didn't learn from it, and sees us old farts as paranoid, while we just have open eyes and experience.

Good luck, world!

When I think that a Commodore 64 had better scrolling than most of today's PC games, I get shivers down my back. Modern PCs are like 100000 times more powerful, in terms of raw computing power, with their GPUs and CPUs.

8/7/2006 11:54:00 AM by Mav

# re: The Coming .NET World – I’m scared

I've been using C/C++ for 10 years...

In these 10 years I saw how microsoft technologies changed..

If you wanted to write Windows components, you had to learn COM, then COM+, then VB, then Win9x API, then WinNT API, then ATL, and so on.... each year Microsoft launched a new technology...

now it's .net's time.

people will learn .net and in 5 years .net will be history....

People say that C/C++ has a larger learning curve... but once you learn how to code in C/C++ you just work... you don't need to discard what you have learned 5 years ago, you still use it.

You can now learn .net and be sure that in 5 years you will not use .net at all...

8/9/2006 7:29:00 AM by Pablo

# re: The Coming .NET World – I’m scared

Well said, Pablo. One thing that experience certainly taught me is NOT to write applications, but to extend my collection of thousands of modules (which cover all the possible needs of all kinds of applications).

If a contractor asks me to write a word processor, for example, I don't write a word processor, but I extend my own collection of modules in its well defined hierarchy to add all the functions that a word processor needs.

In the end, for that customer I don't write a whole application but only a small source that is just some glue for my own modules, and hand it over to him/her. So 99% of the work I get paid for will be reusable, only 1% not.

He will get his word processor, and I will get my improved own framework, which I will know better than my pockets.

Someday a new contractor will ask me to write e.g. an IRC client and, guess what, I will just write a small source which is the glue for already existing modules of my framework. Yes, I already own those related to text editing, the previous contractor paid for them! ;) Holy s*it, I realize that I don't have a TCP/IP hierarchy of modules! So I'll write them, and add them to my framework.

But the applications I hand over to the customer must be only glue that links together my own, well known modules.

Next time, a new contractor will ask me to write a multiplayer videogame. Guess what, I already have networking and text editing modules, all I need to do is to write those modules that the customer's application needs and I don't already have. A graphics library for example.

But that graphics library he paid for, will be used in future projects that maybe have nothing to do with videogames (a graph plotting program for example?).

Contract after contract, my framework gets bigger and bigger, and my confidence in it grows, much better than any manual or online documentation or tutorial would allow (because *I* wrote it all!).

But if you use that messy .NET hierarchy you're in the hands of sata.. of Microsoft. Good luck then!

I've been growing my own C++ modules for 10+ years now, and I *know* I am much more productive than any C# or Java baby out there. I also feel some serious limitations in the C++ language, which is why I'm writing my own language's compiler now. Will it take thousands of man-years of work? No, as long as it's just.. glue.

You know, I had to write that x86 assembler class when, many many years ago, I needed to write an extremely fast (compiled fonts) DOS text routine.

How can I live without C# and Java and VisualBasic.. hehe. :)

8/11/2006 4:01:00 AM by Mav

A few years ago Microsoft embarked on an anti-Java campaign called .NET, spinning .NET as a revolutionary technology (while failing to explain that it’s really Microsoft’s own implementation of the JVM concept with new languages layered on top of it). The .NET hype has died down somewhat in the last couple of years, but the multiyear marketing blitz has had its inevitable effect: lots of people are writing .NET applications (also known as managed applications). In fact, my Dell laptop includes a managed Dell utility that tells me when there are Dell security alerts.

Both the client and server side of Web applications are ideal candidates for a managed environment, since the .NET runtime handles much of the complexity of cross-network communication and simplifies memory management. However, the proliferation of managed applications has extended to client-only applications. Microsoft already includes several with Windows XP Media Center Edition and Tablet PC Editions and many more are coming in Longhorn.

The reason I’m scared at the prospect is that managed applications have essentially no consideration for performance or system footprint. I’ll use Notepad as an example because I can compare a managed Notepad with the unmanaged one (unmanaged applications are also known as native applications – not the same as a native application from the point of view of Windows NT, which are applications that use the native API) that ships with Windows XP. You can download the managed Notepad with source code at http://www.csharpfriends.com/Articles/getArticle.aspx?articleID=84.

In this experiment I’ve simply launched both versions and will look at their performance-related information with Process Explorer. In Process Explorer’s process view you’ll see the managed version highlighted in yellow, which is the default highlight color for processes that use the .NET runtime:

You can see that Process Explorer has also highlighted Outlook and SharpReader as managed applications. Outlook isn’t a managed application (at least not the Office 2003 version), but I installed Lookout (http://www.lookoutsoft.com/Lookout/lookoutinfo.html), which is a managed Outlook search add-on that loads as a DLL into the Outlook process. SharpReader (http://www.versiontracker.com/dyn/moreinfo/windows/28759) is the RSS feed aggregator that I use, and it’s obviously written in .NET.

If you open the process properties of both Notepad applications and compare the performance pages side-by-side you’ll see why I hope that Independent Software Vendors (ISVs) and Microsoft stay away from .NET for client-side-only applications. The properties for the managed version are on the top with those of the native instance below:

First notice the total CPU time consumed by each process. Remember, all I’ve done is launch the programs – I haven’t interacted with either one of them. The managed Notepad has taken twice as much CPU time as the native one to start. Granted, a tenth of a second isn’t large in absolute terms, but it represents 200 million cycles on the 2 GHz processor that they are running on. Next notice the memory consumption, which is really where the managed code problem is apparent. The managed Notepad has consumed close to 8 MB of private virtual memory (memory that can’t be shared with other processes) whereas the native version has used less than 1 MB. That’s a 10x difference! And the peak working set, which is the maximum amount of physical memory Windows has assigned a process, is almost 9 MB for the managed version and 3 MB for the unmanaged version, close to a 3x difference.
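The figures in that paragraph follow from simple arithmetic, sketched below in Python. The inputs are the rounded numbers quoted in the text (a tenth of a second, 2 GHz, roughly 8 MB versus under 1 MB, roughly 9 MB versus 3 MB); the exact Process Explorer readings are not reproduced here, so the ratios are approximate.

```python
CLOCK_HZ = 2_000_000_000  # 2 GHz processor, as stated in the text
cpu_seconds = 0.1         # "a tenth of a second" of CPU time

# Cycles represented by that CPU time.
cycles = round(CLOCK_HZ * cpu_seconds)


def ratio(managed_mb, native_mb):
    """How many times larger the managed figure is than the native one."""
    return managed_mb / native_mb


# Peak working set: almost 9 MB managed vs 3 MB native.
working_set_ratio = ratio(9, 3)

# Private bytes rounded to whole megabytes: close to 8 MB vs less than 1 MB.
# The native figure is below 1 MB, which is how the text arrives at about 10x.
private_bytes_ratio_rounded = ratio(8, 1)

print(cycles, working_set_ratio, private_bytes_ratio_rounded)
```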

Is 8 MB of private memory a big deal? Not really, but then again this is basically a zero-functionality application (it doesn’t even have search or replace). Once you start to implement features in .NET the private byte usage really grows. SharpReader, for example, consumes close to 42 MB of private bytes to store data related to around 20 different feeds, and the user interface for SQL Server 2005 beta 3 (written in managed code) consumes 80 MB when run on a fresh installation.

Compare that managed application bloat with the memory usage of fully-featured unmanaged applications that have 10 times the complexity of the managed examples I’ve listed: Microsoft Word weighs in at around only 6 MB after starting with no document loaded and Explorer on my system is consuming only 12 MB.

Now imagine a future where a significant percentage of applications are written in .NET. Each managed application, ignoring the memory overhead of document data and shareable memory like code and file system data, will eat somewhere between 10 and 100 MB of your virtual memory. If you think your system is slow now, wait until several of these applications are active at the same time and competing for physical memory.
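To put numbers on that projection, here is a small Python sketch under stated assumptions. The 10 MB and 100 MB bounds come from the paragraph above. The count of five simultaneous applications and the 512 MB of physical RAM are hypothetical values chosen for illustration.

```python
def private_memory_range_mb(app_count, low_mb=10, high_mb=100):
    """Return (low, high) total private memory in MB for app_count managed apps."""
    return app_count * low_mb, app_count * high_mb


def fraction_of_ram(total_mb, ram_mb):
    """Fraction of physical RAM that total_mb would occupy."""
    return total_mb / ram_mb


# Hypothetical scenario: five managed applications on a machine with 512 MB of RAM.
low, high = private_memory_range_mb(5)
print(f"{low}-{high} MB of private memory")
print(f"{fraction_of_ram(low, 512):.0%} to {fraction_of_ram(high, 512):.0%} of RAM")
```

This ignores shareable memory and document data, just as the estimate in the text does, so it only bounds the non-shareable overhead.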

It makes me wonder if Microsoft gets a cut of every RAM chip sold…

Originally by Mark Russinovich on 4/16/2005 8:08:00 AM

Migrated from original Sysinternals.com/Blog

# re: The Coming .NET World – I’m scared

It would be interesting if you could compare it also with a Java application.

4/16/2005 3:30:00 AM by Camillo

# re: The Coming .NET World – I’m scared

i had to endure only two examples of .net code so far but my senses told me the same thing you've published here.

pinnacle's mediacenter software starts so ridiculously slow on my 3.2 ghz machine that i first thought the task crashed silently.

adding to this annoyance is the fact that starting managed applications seem to be 'invisible' through the absence of the 'application starting' cursor.

autodesk's autocad 2005 starts considerably slower than its predecessor and seems to need more ram for the very same tasks.

4/16/2005 5:55:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

I agree with your analysis though I disagree with your conclusions.

The startup overhead is due to the runtime setup. This process, if you have a look at the Rotor source code, is a little bit heavy, especially because several classes that are part of the base class library are JIT-compiled.

This implies that starting a managed application always requires that overhead to be paid. Moreover, if you run two managed applications in parallel, the two running instances of the runtime make no attempt to share resources, which leads to considerable overhead.

In some respect this situation reminds me when C runtime wasn't shared through DLLs and it was imposing unnecessary overhead over working set of running applications. I'm thinking of C runtime because David Stutz introduces CLR as a modern C runtime.

However from these considerations one may conclude that CLR should become smarter, trying to learn from lessons of the past. Perhaps Jitted code could be shared among different CLR instances.

My point is that I don't really see any real issue in the .NET model, though I see several aspects where the CLR can be improved.

For instance, I once developed JavaStarter, an application to improve resource usage across different Java applications: the trick was to start a JVM and use it as a loader. This can also be done in .NET: with a few lines of code one may start a runtime to be used as a loader of .NET applications. Each .NET application would run in a different Application Domain, achieving an appropriate level of isolation. This is only a patch, though an effective one, until the MS guys improve the CLR in this respect.

When MS announced that the CLR would go into the OS and that in fact side-by-side execution would not be supported in Longhorn, these were the considerations driving their decision. If you make the OS aware of the runtime it is possible to do amazing optimizations that would make CLR applications indistinguishable from unmanaged ones.

I have one last comment: your considerations assume well-written applications. I have plenty of applications running on my laptop that are written in C++ and suck. Moreover, the C++ inlining strategy tends to bloat application code, whereas the CLR tends to favor code sharing.

I think that VMs are inevitable if we are to master the complexity of the applications we need. Services like reflection and dynamic loading of types are fundamental tools. Thus we should look at how to use these new tools to make the CLR viable, and we must ask MS for more efficient versions of the CLR.

4/16/2005 7:36:00 AM by Antonio Cisternino

# re: The Coming .NET World – I’m scared

At last, someone who publicly rejects VM technologies. I know they are the future of computing, very powerful, bla bla bla.

But I am (as you seem to be) wondering why Moore's law applies to CPU power and network bandwidth but does not apply to application responsiveness or features.

Please, someone explain to me why Amiga OS 4 seems more responsive on a G4 800 MHz than Windows XP on my Athlon 2 GHz. Are programmers really programming gods that we have to worship?

4/16/2005 8:23:00 AM by Vincent

# re: The Coming .NET World – I’m scared

If you check out how Sharpreader allocates memory under the CLR Profiler, you'll notice it isn't the best written application when it comes to object usage.

4/16/2005 8:52:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

I wonder if the resource usage you are seeing comes from using the CLR, or from being written in C#.

I don't see what it is with vendors trying to set up their own language (Java, C#, Objective-C, ...) these days. I guess there is a real need for a language that doesn't make it as easy to shoot yourself (and not just in the foot either...) as C++ does? I can understand this for writing GUI's for example, which can be tedious work (in any language). But I'm confident that there will be a good part C/C++ for a long time, since that can be made to compile on several platforms more or less easily, has decent speed generally, and allows you to do many things to speed it up even further (eg where I work we use a custom memory allocator for performance).

But if Java/C# is easier, it may get more people writing software. Not sure if that is a good thing if there will then be more of the 'resource-hog' type of software :-(

4/16/2005 9:43:00 AM by legolas

# re: The Coming .NET World – I’m scared

I agree with Antonio's comments. You are assuming that the current state of the CLR and .NET in general is fixed. Just because it has certain undesirable characteristics now does not mean they are set in stone for all time. There is work being done to optimize many things and I doubt you are the only one to notice that .NET uses a lot of memory currently. Also, as someone else already pointed out, managed or unmanaged if you code poorly it will be very evident to all. Managed code is not a license to ignore common practices and to stop thinking about what is happening "under the covers", in fact managed code makes it so much more important because you are able to do much more powerful things with fewer lines of code. This of course means you NEED to be aware of the cost of these things and alternatives that can make it less costly. Just my 2 cents.

4/16/2005 10:14:00 AM by Ryan

# re: The Coming .NET World – I’m scared

SharpReader (which I also use) has been knocked for memory usage for quite a while. The creator, Luke Hutteman, has even admitted that the current implementation, which keeps all feeds and feed text in memory, is not good. I remember a post from him saying that it shouldn't be used as an indication of .NET memory usage, but of course I can't find that now...

That said, I'm not disagreeing with what you said. Managed applications definitely use more resources. And they start slower. And they are a pain to get installed, requiring a 22 meg "redistributable". I've also seen those problems in unmanaged applications. Poor development is a problem with every language. I think that in a lot of cases if developers really tried to make the most performant and svelte managed applications, you could have some applications that do compare pretty well against unmanaged counterparts. Of course I think this is harder to do for managed apps than it is for unmanaged ones.

(I also happen to think that the best app will be a combination of managed and unmanaged code.)

I do think managed applications do have a place to be used today though, and that’s in backend server side applications. I think that if you are generating web front ends(on Windows) and you aren’t using ASP.NET, then you are horribly behind the curve. Even though I’ve done very little in the area, I think that the concepts behind ASP.NET should make the development process much smoother and make the applications much more maintainable, which in this case, I think is enough to counteract some of the other concerns. Of course, once again, it falls upon the developers to take the best advantage of the platform, and this still takes having good developers.

4/16/2005 10:16:00 AM by CaseyS

# re: The Coming .NET World – I’m scared

I recall an article by Pietrek in MSDN a couple of years ago where he traced the calls made by setting up a simple .Net app. It was impressive to see how many calls got made for creating an app domain and checking security.

So, as long as you know where this "overhead" is going, you can plan accordingly.

As for chewing cycles or bytes, I also recall in the mid-eighties when we were hearing about X-Window and its required 8 meg of ram and thinking "these guys are nuts". The lesson I learned, then, was: if you have a nice application that people want then the hardware will catch up (sooner or later).

4/16/2005 1:42:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

The basic problem is that in CLR 1.x assemblies don't share pages across processes like regular DLLs do. Microsoft knows about this, and this is remedied in 2.x. So the difference will not be as dramatic anymore.

Even with 1.x it's well worth running managed client apps due to their inherent resistance to security attacks like buffer overruns.

I respect your work A LOT, but this post is just a mix of some bitterness, FUD and plain bad homework.

4/16/2005 3:43:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

It's surprising how many are coming to the defense of .NET bloat. It doesn't matter if it is due to the existing architecture. It doesn't matter if it is theoretically fixed in version now+n. The current incarnation of .NET leads to apps that have a bloated footprint before they are even launched, far more bloat than native code when they ARE launched, and generally worse performance than native code from launch to shutdown. Sure, it's better than Java. But it's still miles apart from well written native code.

4/16/2005 4:34:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

Well said Mark, I've been wondering why authors haven't seen the overhead...

4/16/2005 9:08:00 PM by Hari

# re: The Coming .NET World – I’m scared

>But it's still miles apart from well

>written native code.

Comparing fully managed code to native code is ridiculous. Wow there are size and performance differences? Are you sure? I just can't imagine that something that is dynamically compiled and subject to security/safety verification could possibly be any slower than optimized native code.

4/17/2005 10:45:00 AM by Anonymous

# re: The Coming .NET World – I’m scared

I agree 100% with your analysis. There is only one place to go.

www.borland.com

Look for delphi. Ease of use plus unmanaged code performance!

4/17/2005 6:55:00 PM by Kwadwo Seinti

# re: The Coming .NET World – I’m scared

Not exactly a fair comparison between .NET and other development tools.

Please note that a true .NET application will always load a few runtimes when it loads, which can perhaps explain the memory consumption difference. But when we add functionality to the applications, the difference would become much smaller (relatively).

This also reminds me of another comparison, between compiled binaries of ASM .com and .exe files and C, Pascal and BASIC .exe files in the days of DOS. The size difference between the ASM executables and the others is, of course, very large. But if someone says that because of that we should keep away from those higher-level languages, I'll say that development complexity outweighs the size and speed advantage when choosing a development tool.

4/17/2005 9:00:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

The .NET version might be 10 times more bloated than the C version. But I'm sure I can write an assembly version that is 10 times faster.

But it doesn't matter. Hardware will catch up - 10 GHz machines will make the extra overhead unimportant.

4/17/2005 10:21:00 PM by Anonymous

# re: The Coming .NET World – I’m scared

My response

4/18/2005 10:40:00 AM by Cullen Waters

# re: The Coming .NET World – I’m scared

Software gets slower faster than hardware gets faster.

--

Wirth's law