How to Guide for SC- Virtual Machine Manager 2019 UR1 features

The first update rollup for System Center Virtual Machine Manager (SCVMM) 2019 is now available. The release contains several features requested by customers on VMM UserVoice and other forums. Thanks for engaging with us and helping us improve VMM. We know you have been eagerly waiting for this release and are excited to learn what's new in SCVMM 2019 UR1. This release improves resiliency and introduces new feature capabilities.

In this blog, we will talk about the new features and answer most of the "Why" and "How" questions you may have. For details of all the bug fixes in this release, refer to the KB article.

New Features Introduced in SCVMM 2019 UR1

Support for replicated library shares

Large enterprises usually have multi-site datacenter deployments to cater to various offices across the globe. These enterprises typically have a central VMM server but locally available library servers at each datacenter, so that files for VM deployment are accessed locally rather than from a remote location. This avoids the network-related issues one might experience while accessing library shares remotely. However, library files need to be consistent across all the datacenters to ensure uniform VM deployments. To keep library contents uniform, organizations use replication technologies such as DFSR.

If you want to manage replicated library shares through VMM, you need to disable the use of alternate data streams for both the source and destination shares. VMM generates a GUID for every library object it manages and writes this metadata into an alternate data stream of the file. VMM uses the alternate data stream to recognize a library object as the same object when it is moved across folders in library shares or renamed. To manage replicated library shares effectively with VMM, you must disable the alternate data stream option.

You can do this while adding new library shares or by editing the properties of existing library shares. The alternate data stream option is enabled by default. The video below can be used as a guide to configuring replicated library shares through the VMM console.
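To see why a per-file GUID lets VMM recognize a library object after a rename or a move, here is a minimal conceptual sketch in Python. It is not VMM's implementation: the `LibraryFile` and `LibraryIndex` names and the stream name are hypothetical, and the `streams` dictionary only stands in for an NTFS alternate data stream.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class LibraryFile:
    """A file on a share; `streams` stands in for NTFS alternate data streams."""
    path: str
    streams: dict = field(default_factory=dict)


class LibraryIndex:
    """Hypothetical index that tracks library objects by GUID, not by path."""

    STREAM = "object-guid"  # made-up stream name, for illustration only

    def __init__(self):
        self.objects = {}  # GUID -> last known path

    def refresh(self, files):
        for f in files:
            # Stamp a GUID on files seen for the first time, reuse it afterwards.
            guid = f.streams.setdefault(self.STREAM, str(uuid.uuid4()))
            self.objects[guid] = f.path


vhd = LibraryFile(r"\\lib01\share\base.vhdx")
index = LibraryIndex()
index.refresh([vhd])

vhd.path = r"\\lib01\share\templates\base-2019.vhdx"  # moved and renamed
index.refresh([vhd])
```

After the second refresh the index still holds a single object, now pointing at the new path, because the identity travels with the file rather than with its name.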

Watch the Demo here:

Simplifying Logical Network Creation

We have heard your feedback about the networking experience in VMM and have embarked on a journey to make it simpler and easier. As a first step, SCVMM 2019 UR1 provides a simplified experience for creating logical networks. This has been achieved through the following improvements:

- Grouping of logical network types based on their use cases.

- In-product descriptions and illustrations of the logical network types.

- A connected experience to create IP pools within the logical network wizard.

- A dependency graph to assist in troubleshooting scenarios and in understanding network dependencies before a logical network is deleted.

Improvements in detail

Grouping of Logical Network types based on their use cases.

Logical network types are grouped into 3 categories.

1. Connected Network

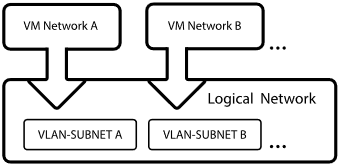

The VLAN and Subnet pairs of the underlying physical networks are logically equivalent. A single VM network will be created on top of this logical network and this VM network provides access to all the underlying VLAN-Subnet pairs.

This network type was earlier known as ‘One Connected Network’.

When to use this type of network?

Scenario:

Contoso is an enterprise that needs a network to host its DevTest workloads. This network may have multiple VLANs / subnets. Contoso creates a logical network of type ‘Connected network’. VMM is responsible for assigning the VLAN / subnet to each VM based on the host group on which the VM is placed.
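The host-group-based assignment in this scenario can be pictured with a small Python sketch. This is only an illustration of the idea, not how VMM does placement: the `NetworkSite` type, the host group names, and the VLAN and subnet values are all made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NetworkSite:
    """A VLAN/subnet pair scoped to one or more host groups."""
    vlan: int
    subnet: str
    host_groups: frozenset


# Illustrative values only; not taken from any real deployment.
DEVTEST_SITES = [
    NetworkSite(vlan=10, subnet="10.0.10.0/24", host_groups=frozenset({"Seattle"})),
    NetworkSite(vlan=20, subnet="10.0.20.0/24", host_groups=frozenset({"London"})),
]


def site_for_host_group(sites, host_group):
    """Return the first site scoped to the host group the VM was placed on."""
    for site in sites:
        if host_group in site.host_groups:
            return site
    raise LookupError(f"no network site is scoped to host group {host_group!r}")


site = site_for_host_group(DEVTEST_SITES, "London")
print(site.vlan, site.subnet)  # prints: 20 10.0.20.0/24
```

The VM owner only picks the single VM network; which VLAN / subnet the VM lands on follows from where the VM is placed.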

2. Independent Network

Multiple VM networks can be created on top of this logical network. Each VM network provides access to a specific VLAN-Subnet pair. The VM networks are independent of each other.

There are two types of ‘Independent’ networks.

- VLAN based independent networks

- PVLAN based independent networks

When to use this type of network?

Scenario:

Woodgrove IT is a hoster. Woodgrove IT has ‘Contoso’ and ‘Fabrikam’ as its tenants. Both ‘Contoso’ and ‘Fabrikam’ need a DevTest network. Contoso’s network should be isolated from Fabrikam’s. All VMs of Contoso should be connected to the ‘Contoso-DevTest’ VM network and all VMs of Fabrikam should be connected to the ‘Fabrikam-DevTest’ VM network.

Woodgrove IT creates a logical network of type ‘Independent’ network and names it ‘DevTest’. This logical network has two VLAN-Subnet pairs. Two VM networks are created on top of this logical network, with each VM network getting access to a specific VLAN-Subnet pair. One VM network is named ‘Contoso-DevTest’ and is provided for Contoso’s use, and the other is named ‘Fabrikam-DevTest’ and is provided for Fabrikam’s use.

3. Virtualized Network

This is a logical network which has network virtualization/SDN. Multiple virtualized VM networks can be created on top of this logical network. Each VM network will have its own virtualized address space.
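The difference between the three categories comes down to how VM networks map onto VLAN-Subnet pairs. The Python sketch below models that mapping under simplifying assumptions: `LogicalNetworkType` and `vm_networks_for` are hypothetical names, the VLAN and subnet values are invented, and a virtualized VM network is represented simply as having no physical pair of its own.

```python
from enum import Enum


class LogicalNetworkType(Enum):
    CONNECTED = "connected"
    INDEPENDENT = "independent"
    VIRTUALIZED = "virtualized"


def vm_networks_for(net_type, pairs, tenants):
    """Map each VM network name to the VLAN-Subnet pairs it can reach."""
    if net_type is LogicalNetworkType.CONNECTED:
        # One VM network with access to every underlying pair.
        return {"DevTest": list(pairs)}
    if net_type is LogicalNetworkType.INDEPENDENT:
        # One VM network per pair; tenants never share a pair.
        if len(tenants) > len(pairs):
            raise ValueError("each independent VM network needs its own pair")
        return {f"{t}-DevTest": [p] for t, p in zip(tenants, pairs)}
    # Virtualized: each tenant gets its own virtualized address space
    # instead of a physical VLAN-Subnet pair, so no pair is mapped here.
    return {f"{t}-DevTest": [] for t in tenants}


pairs = [(100, "192.168.100.0/24"), (200, "192.168.200.0/24")]
tenants = ["Contoso", "Fabrikam"]

connected = vm_networks_for(LogicalNetworkType.CONNECTED, pairs, tenants)
independent = vm_networks_for(LogicalNetworkType.INDEPENDENT, pairs, tenants)
```

Here `connected` holds a single VM network that sees both pairs, while `independent` reproduces the Woodgrove IT scenario with one isolated VM network per tenant.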

In-product descriptions and Illustration of the logical network types

Each type of logical network has an in-product description and an illustration describing the use case.

A connected experience to create IP pools within the logical network wizard.

An IP pool can be created right after a logical network site is created. An IP pool is now a sub-object of a logical network definition (network site).

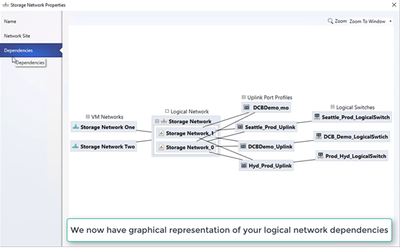
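Because an IP pool now lives under a network site, its address range has to make sense for that site's subnet. The following Python sketch shows one way such a check could look, using only the standard `ipaddress` module. The `validate_ip_pool` function and the addresses are assumptions for illustration and do not describe the checks the VMM wizard actually performs.

```python
import ipaddress


def validate_ip_pool(subnet, start, end):
    """Check that a pool's address range lies inside the site's subnet."""
    network = ipaddress.ip_network(subnet)
    first = ipaddress.ip_address(start)
    last = ipaddress.ip_address(end)
    if first > last:
        raise ValueError("pool start must not come after pool end")
    if first not in network or last not in network:
        raise ValueError(f"pool {start}-{end} is outside subnet {subnet}")
    return int(last) - int(first) + 1  # number of addresses in the pool


size = validate_ip_pool("10.0.10.0/24", "10.0.10.50", "10.0.10.99")
```

With these example values the pool covers 50 addresses, all inside the site's subnet.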

Dependency graph

A ‘Dependencies’ tab has been added to the logical network object. It displays the dependencies between different VMM networking artefacts. This is helpful in troubleshooting scenarios and helps you understand the network dependencies when a logical network needs to be deleted.
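Conceptually, the dependencies view answers the question "what still relies on this logical network?". A minimal Python sketch of that idea is a breadth-first walk over a dependency graph. The object names and the `USED_BY` structure below are hypothetical and are not how VMM stores its data.

```python
from collections import deque

# Hypothetical dependency edges: each key is used by the objects listed.
USED_BY = {
    "LogicalNetwork:DevTest": ["NetworkSite:DevTest_0", "VMNetwork:Contoso-DevTest"],
    "NetworkSite:DevTest_0": ["IPPool:DevTest-Pool"],
    "VMNetwork:Contoso-DevTest": ["VM:web01"],
}


def dependents(graph, root):
    """Breadth-first walk returning everything that depends on `root`."""
    seen, queue, order = {root}, deque([root]), []
    while queue:
        for child in graph.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


blockers = dependents(USED_BY, "LogicalNetwork:DevTest")
```

In this example `blockers` lists the network site, the VM network, the IP pool, and the VM, which are the objects that would have to be dealt with before the logical network could be deleted.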

Here is a video demo of the new experience for creating logical networks in VMM.

Demo Video:

Configuration of DCB settings on S2D clusters

Traditionally, datacenters used a SAN for their enterprise storage needs and a dedicated network for storage traffic. This network was a Fibre Channel network with a dedicated switch. It was a lossless network with a very good SLA / performance for storage traffic.

With the advent of converged networking, customers are using an Ethernet network as a converged network for their management as well as storage traffic. Hence, it is important for Ethernet networks to offer a level of performance and losslessness similar to that of dedicated Fibre Channel networks. This becomes even more important when you consider a Storage Spaces Direct (S2D) cluster.

The East-West traffic needs to be lossless for the Storage Spaces Direct cluster to perform well. Remote Direct Memory Access (RDMA) in conjunction with Data Center Bridging (DCB) helps an Ethernet network achieve a level of performance and losslessness similar to that of a Fibre Channel network.

The DCB settings need to be configured consistently across all the hosts and the fabric network (switches). A misconfigured DCB setting on any one host or fabric device is detrimental to S2D performance.

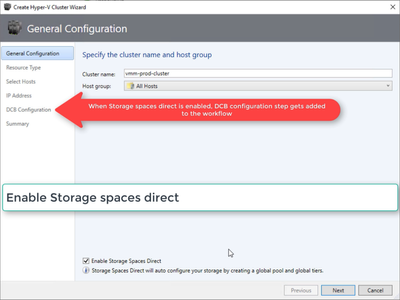

VMM now supports configuring DCB settings right in the S2D cluster creation workflow.

Prerequisites

- Each node of the S2D cluster should have RDMA capable network adapters.

- In a converged NIC scenario, all the underlying physical NICs should be RDMA capable.

- The DCB settings should be configured consistently across the physical network fabric along with the S2D cluster nodes. S2D admins need to coordinate with the networking team to configure this.

What is supported and what is not supported

- Configuration of DCB settings is supported on both Hyper-V S2D clusters (hyperconverged) and SOFS S2D clusters (disaggregated).

- You can configure the DCB settings during the cluster creation workflow or on an existing cluster.

Note: Configuration of DCB settings during SOFS cluster creation is NOT supported. You can only configure DCB settings on an already existing SOFS cluster. All the nodes of the SOFS cluster need to be managed by VMM.

- Configuration of DCB settings during cluster creation is supported only when the cluster is created from servers on which Windows Server has already been deployed. It is not supported with the Bare Metal / Operating System deployment workflow.
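The support rules above can be condensed into a small decision function. The Python sketch below simply encodes the statements in this section. The function name, parameter names, and string values are made up for illustration and do not correspond to any VMM API.

```python
def dcb_config_supported(cluster_type, stage, deployment="existing_os",
                         all_nodes_managed=True):
    """Return True if the rules listed above allow configuring DCB settings.

    cluster_type: "hyperv" (hyperconverged) or "sofs" (disaggregated)
    stage:        "creation" or "existing"
    deployment:   "existing_os" or "bare_metal" (only matters at creation)
    """
    if cluster_type not in ("hyperv", "sofs"):
        raise ValueError(f"unknown cluster type: {cluster_type!r}")
    if stage not in ("creation", "existing"):
        raise ValueError(f"unknown stage: {stage!r}")
    if stage == "existing":
        # An existing SOFS cluster also needs every node managed by VMM.
        return cluster_type == "hyperv" or all_nodes_managed
    if cluster_type == "sofs":
        # DCB cannot be configured while creating a SOFS cluster.
        return False
    # Hyper-V cluster creation: only on servers that already run Windows Server.
    return deployment == "existing_os"
```

For example, `dcb_config_supported("sofs", "creation")` returns `False`, while `dcb_config_supported("sofs", "existing")` returns `True` as long as all nodes are managed by VMM.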

How to configure DCB Settings

1. Create a new Hyper-V cluster. When the ‘Enable Storage Spaces Direct’ option is selected, a new step named ‘DCB configuration’ is added to the Hyper-V cluster creation workflow.

2. In the ‘Resource Type’ step, select an account that has admin permissions on the S2D nodes as the Run As account.

3. Select ‘Existing servers running a Windows Server operating system’.

Note: Configuration of DCB settings is not supported in the Bare Metal deployment / Operating System Deployment scenario.

4. In the ‘Select Hosts’ step, select the S2D nodes.

5. Navigate to the ‘DCB configuration’ step and enable ‘Configure Data Center Bridging’.

6. Enter the Priority and Bandwidth for SMB-Direct and Cluster Heartbeat traffic.

Note: A default value is assigned to Priority and Bandwidth. Customize it based on the needs of your environment.

Default values:

| Traffic Class | Priority | Bandwidth (%) |
|---|---|---|
| Cluster Heartbeat | 7 | 1 |
| SMB-Direct | 3 | 50 |

7. Select the network adapters used for storage traffic. RDMA is enabled on these network adapters.

Note: In a converged NIC scenario, select the storage vNICs. The underlying pNICs should be RDMA capable for the vNICs to be displayed and available for selection.

8. Review the summary and finish. An S2D cluster will be created and the DCB parameters will be configured on all the S2D nodes.
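If you customize the default Priority and Bandwidth values, it can help to sanity-check them before applying them. The Python sketch below shows one possible check. The 0 to 7 priority range follows IEEE 802.1p; the requirements that priorities be distinct and that the bandwidth shares stay within 100% in total are assumptions of this sketch rather than documented VMM validations, and the `TrafficClass` and `validate` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrafficClass:
    name: str
    priority: int       # 802.1p priority, 0-7
    bandwidth_pct: int  # share of link bandwidth reserved for this class


# Default values listed in this post.
DEFAULTS = [
    TrafficClass("Cluster Heartbeat", priority=7, bandwidth_pct=1),
    TrafficClass("SMB-Direct", priority=3, bandwidth_pct=50),
]


def validate(classes):
    """Return a list of problems; an empty list means the settings look sane."""
    problems = []
    for tc in classes:
        if not 0 <= tc.priority <= 7:
            problems.append(f"{tc.name}: priority {tc.priority} is not in 0-7")
        if not 1 <= tc.bandwidth_pct <= 100:
            problems.append(f"{tc.name}: bandwidth {tc.bandwidth_pct}% is not in 1-100")
    priorities = [tc.priority for tc in classes]
    if len(set(priorities)) != len(priorities):
        problems.append("traffic classes must use distinct priorities")
    if sum(tc.bandwidth_pct for tc in classes) > 100:
        problems.append("bandwidth reservations exceed 100% in total")
    return problems
```

Calling `validate(DEFAULTS)` returns an empty list, since the defaults use distinct priorities and reserve 51% of the bandwidth in total.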

Note: DCB settings can be configured on existing Hyper-V S2D clusters by opening the cluster properties page and navigating to the ‘DCB configuration’ page.

Note: Configuration of DCB settings is not supported during the ‘SOFS cluster creation’ workflow. DCB settings can be configured on an existing SOFS cluster provided all the nodes in the SOFS cluster are managed by VMM.

Note: Any out-of-band change to the DCB settings on any of the nodes will cause the S2D cluster to be non-compliant in VMM. A ‘Remediate’ option is provided on the ‘DCB configuration’ page of the cluster properties, which customers can use to enforce the DCB settings configured in VMM on the cluster nodes.
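The compliance behaviour described in the last note is a form of desired-state checking. The Python sketch below models it in the simplest possible way: compare each node's settings to the desired ones, then overwrite the ones that drifted. The node names, the data layout, and both function names are assumptions for illustration and say nothing about how VMM implements ‘Remediate’.

```python
# Desired settings as (priority, bandwidth %) per traffic class.
DESIRED = {"SMB-Direct": (3, 50), "Cluster Heartbeat": (7, 1)}


def non_compliant_nodes(desired, actual_by_node):
    """Return the sorted names of nodes whose settings differ from `desired`."""
    return sorted(n for n, actual in actual_by_node.items() if actual != desired)


def remediate(desired, actual_by_node):
    """Overwrite drifted nodes with the desired settings (in this model only)."""
    for node in non_compliant_nodes(desired, actual_by_node):
        actual_by_node[node] = dict(desired)


nodes = {
    "s2d-node1": dict(DESIRED),
    # SMB-Direct bandwidth changed out of band on this node.
    "s2d-node2": {"SMB-Direct": (3, 40), "Cluster Heartbeat": (7, 1)},
}

drifted = non_compliant_nodes(DESIRED, nodes)
remediate(DESIRED, nodes)
```

Before remediation `drifted` contains only `s2d-node2`; afterwards every node matches the desired settings again.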

We love bringing these features to you and hope you find them helpful in your VMM deployment. We look forward to your feedback!
