Storage Innovations in Windows Server 2022

We are excited to release new features in Storage for Windows Server 2022. Our focus is to provide customers with greater resiliency, performance, and flexibility. While some of these topics have been covered elsewhere, we wanted to provide a single place where our Server 2022 customers can read about these innovations and features.

Advanced Caching in Storage Spaces

During the Windows Server 2019 timeframe, we realized that many deployments run on single, standalone (that is, non-clustered) servers. We wanted to deliver innovations that specifically target this popular segment of our customer base.

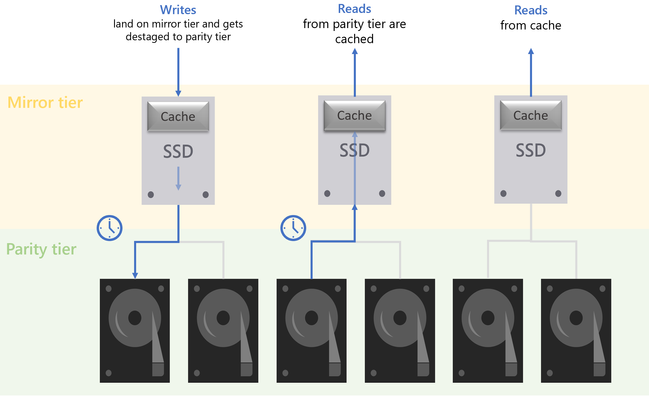

We developed a new storage cache for standalone servers that can significantly improve overall system performance, while maintaining storage efficiency and keeping the operational costs low. Similar to caching in Storage Spaces Direct, this feature binds together faster media (for example, SSD) with slower media (for example, high-capacity HDD) to create tiers.

Windows Server 2022 contains new, advanced caching logic that can automatically place critical data on the fastest storage devices, while placing less-critical data on the slower, high-capacity storage devices. Although the cache is highly configurable, Windows ships with an optimized set of defaults that lets an IT admin “set and forget” while still achieving significant performance gains.
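To make the tiering idea concrete, here is a minimal Python sketch of a small fast tier sitting in front of a large slow tier. It is an illustrative toy, not the Storage Spaces caching logic: the `TieredStore` class, the least-recently-used eviction policy, and the block-number keys are all assumptions made for this example.

```python
from collections import OrderedDict


class TieredStore:
    """Toy model: a small fast tier caching blocks from a large slow tier.

    Hypothetical names and LRU policy; not the Windows caching logic.
    """

    def __init__(self, slow_tier, fast_capacity):
        self.slow_tier = slow_tier          # dict: block number -> data
        self.fast_capacity = fast_capacity  # max blocks held on fast media
        self.fast_tier = OrderedDict()      # key order tracks recency of use
        self.fast_hits = 0
        self.slow_reads = 0

    def read(self, block):
        if block in self.fast_tier:
            self.fast_tier.move_to_end(block)  # mark as most recently used
            self.fast_hits += 1
            return self.fast_tier[block]
        # Miss: fetch from the slow tier and keep a copy on fast media.
        data = self.slow_tier[block]
        self.slow_reads += 1
        self.fast_tier[block] = data
        if len(self.fast_tier) > self.fast_capacity:
            self.fast_tier.popitem(last=False)  # evict least recently used
        return data


def simulate():
    slow = {n: f"block-{n}" for n in range(100)}
    store = TieredStore(slow, fast_capacity=4)
    # A workload that re-reads a small hot set and touches cold blocks once.
    for block in [1, 2, 1, 2, 50, 1, 2, 60, 1, 2]:
        store.read(block)
    return store.fast_hits, store.slow_reads


fast_hits, slow_reads = simulate()
```

In this toy workload, the hot blocks 1 and 2 are served from the fast tier after their first read, so most reads never touch the slow tier.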

Learn more: Storage bus cache on Storage Spaces

Faster Repair/Resync

We heard your feedback. Storage resync and rebuilds after events like node reboots and disk failures are now twice as fast. Repair times also vary less, so you can be more confident about how long a repair will take. No more long, hanging repair jobs. We achieved this by adding more granularity to our data tracking, which allows us to move only the data that needs to be moved. Less data moved means less work, fewer system resources used, and less time taken. It also means less stress for you!
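The effect of tracking granularity can be sketched with a small model. The function below assumes changes are tracked per fixed-size region and that a resync copies every region that was touched. The `bytes_to_resync` helper and both region sizes are hypothetical values picked for illustration, not the sizes Windows uses.

```python
def bytes_to_resync(dirty_ranges, region_size):
    """Return bytes copied when changes are tracked per fixed-size region.

    dirty_ranges: list of (offset, length) writes missed by the stale copy.
    region_size: tracking granularity in bytes (hypothetical parameter).
    """
    dirty_regions = set()
    for offset, length in dirty_ranges:
        if length <= 0:
            continue
        first = offset // region_size
        last = (offset + length - 1) // region_size
        dirty_regions.update(range(first, last + 1))
    # Every touched region is copied in full, however small the write was.
    return len(dirty_regions) * region_size


MiB = 1024 * 1024
# Three small writes that landed while one data copy was offline.
writes = [(0, 4096), (300 * MiB, 8192), (900 * MiB + 100, 4096)]

coarse = bytes_to_resync(writes, 256 * MiB)  # coarse tracking
fine = bytes_to_resync(writes, 64 * 1024)    # finer tracking
```

With these made-up numbers, the same three small writes cost 768 MiB of resync traffic under coarse tracking and 192 KiB under fine tracking, which is the intuition behind moving only the data that needs to be moved.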

Adjustable Storage Repair/Resync Speed

We know that resiliency and availability are incredibly important for our users. Waiting for storage to resync after a node reboot or a replaced disk shouldn't be a nail-biting experience. We also know that storage rebuilds can consume system resources that users would rather keep reserved for business applications. For these reasons we have focused on improving storage resync and rebuilds.

In Windows Server 2022 we cut repair times in half! And you can go even faster with adjustable storage repair speeds.

This means you have more control over the data resync process: you can allocate resources either to repairing data copies (resiliency) or to running active workloads (performance). You can now prioritize rebuilds during servicing hours, or run the resync in the background to keep your business apps the priority. Faster repairs increase the availability of your cluster nodes and let you service clusters more flexibly and efficiently.
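The trade-off can be illustrated with a deliberately simple model in which a fixed I/O budget is split between repair traffic and workload traffic. Everything here is an assumption for illustration: the `repair_share` knob, the linear throughput model, and the numbers are hypothetical, and the actual setting in Windows Server is exposed differently (see the linked documentation).

```python
def split_throughput(total_mbps, repair_share):
    """Split a fixed I/O budget between repair and workload traffic.

    repair_share is a hypothetical knob in (0, 1]; higher favors repair.
    """
    if not 0 < repair_share <= 1:
        raise ValueError("repair_share must be in (0, 1]")
    repair_mbps = total_mbps * repair_share
    workload_mbps = total_mbps - repair_mbps
    return repair_mbps, workload_mbps


def repair_minutes(data_mb, total_mbps, repair_share):
    """Estimate repair duration under the linear model above."""
    repair_mbps, _ = split_throughput(total_mbps, repair_share)
    return data_mb / repair_mbps / 60


# Made-up example: 120,000 MB to repair on a system that sustains 1,000 MB/s.
background = repair_minutes(120_000, 1_000, 0.25)   # favor business apps
maintenance = repair_minutes(120_000, 1_000, 0.75)  # favor the rebuild
```

Under these assumptions, a larger repair share shortens the rebuild at the cost of workload throughput, which is why a higher setting suits servicing windows and a lower one suits business hours.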

Learn more: Adjustable storage repair speed in Azure Stack HCI and Windows Server clusters

ReFS File Snapshots

Microsoft’s Resilient File System (ReFS) now includes the ability to snapshot files using a quick metadata operation. Snapshots differ from ReFS block cloning in that clones are writable, whereas snapshots are read-only. This functionality is especially handy in virtual machine backup scenarios with VHD files. ReFS snapshots are unique in that they take constant time irrespective of file size.
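The following Python toy shows one way a metadata-only snapshot can take constant time regardless of file size, and how a read-only snapshot differs from a writable clone. The layered extent-map design and the `Layer` and `ToyFile` names are assumptions made for this sketch. It does not describe ReFS internals or the ReFS API.

```python
class Layer:
    """One layer of an extent map; older layers are never modified."""

    def __init__(self, parent=None):
        self.extents = {}     # extent index -> data written in this layer
        self.parent = parent  # older, frozen layer (or None)

    def lookup(self, index):
        layer = self
        while layer is not None:
            if index in layer.extents:
                return layer.extents[index]
            layer = layer.parent
        return None


class ToyFile:
    def __init__(self, top=None, read_only=False):
        self.top = top if top is not None else Layer()
        self.read_only = read_only

    def write(self, index, data):
        if self.read_only:
            raise PermissionError("snapshots are read-only")
        self.top.extents[index] = data

    def read(self, index):
        return self.top.lookup(index)

    def _fork(self, read_only):
        # Constant work: freeze the current layer and add two empty layers
        # on top of it. No extent data is copied, whatever the file size.
        frozen = self.top
        self.top = Layer(parent=frozen)
        return ToyFile(Layer(parent=frozen), read_only=read_only)

    def snapshot(self):
        return self._fork(read_only=True)

    def clone(self):
        return self._fork(read_only=False)


vhd = ToyFile()
for i in range(1000):
    vhd.write(i, f"v1-{i}")

snap = vhd.snapshot()    # read-only, point-in-time view
copy = vhd.clone()       # writable, diverges independently

vhd.write(0, "v2-0")     # later write to the live file
copy.write(1, "clone-1")
```

After these writes, `snap` still reads the original contents, while `vhd` and `copy` each see only their own changes. The price of this toy design is that reads walk the layer chain, so it trades some read cost for a cheap snapshot.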

Snapshot functionality is currently built into ReFSUtil and is also available as an API.

Other improvements:

- ReFS Block Cloning performance improvements.

- ReFS improvements to background storage mapping to reduce latency.

- Various other performance and scale improvements.
