- Home

- Startups at Microsoft

- Startups at Microsoft

- Part 1 - Building a Data Lakehouse using Azure Data Explorer

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

As businesses continue to generate massive amounts of data, the need for efficient data management solutions becomes increasingly important. This is where a data lakehouse comes in - a hybrid solution that combines the best features of a datalake and a data warehouse.

This article is Part 1 in the series, here we will explore how to build a data lakehouse using Azure Data Explorer (ADX) where the data flows from Azure SQL DB using Change Data Capture (CDC) through Azure Data Factory and events flowing from events hub.

In Part 2 we will show you how to deploy this solution using Bicep, a powerful infrastructure as code (IaC) tool from Microsoft. With this guide, you'll be able to create a data lakehouse that can handle large volumes of data and provide valuable insights for your business.

For the actual code on "how to guide" for the deployment see part 2:

Part 2 - Building a Data Lakehouse using Azure Data explorer - the deployment

Background

What is a Data Lake House?

The Data Lakehouse term was coined by Databricks on an article in 2021 and it describes an open data management architecture that combines the flexibility, cost-efficiency, and scale of data lakes with the data management, data mutability and performance of data warehouses.

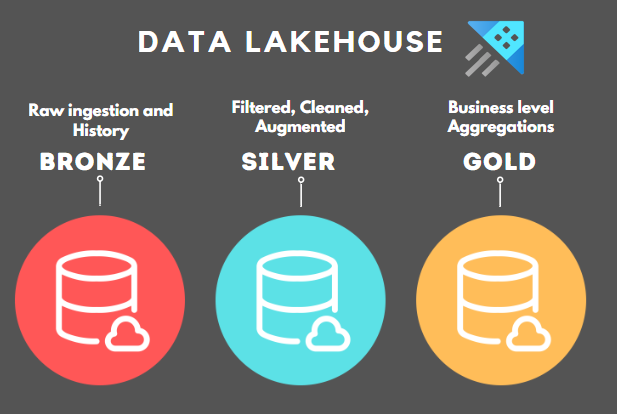

What is a Medallion Architecture?

A medallion architecture (also coined by Databricks) is a data design pattern used to logically organize data.

The goal is to incrementally improve the structure and quality of data as it flows through each layer of the architecture.

Medallion architectures are sometimes also referred to as "multi-hop" architectures.

Creating a multi layer data platform allow companies to improve data quality across the layers and at the same time provide for their business needs.

Unstructured and raw data are ingested using scalable pipelines to output the highest quality enriched data.

It contains 3 basic layers.

Bronze layer:

- Contains raw data, sometimes referenced as the data staging area.

- Not accessible to consumers only to engineers.

- Contains PII data.

Silver layer:

- Contains deduplicated, enriched data.

- Accessible to all consumers.

- Contains “anonymized data” (no PII).

- Consumers: Data analysts, Data scientists, Engineers.

Gold layer:

- Contains aggregated data.

- Accessible to all consumers.

- Built for dashboards.

What is CDC?

CDC (Capture Data Change) is the process of capturing changes made to data in a database and then delivering them to a downstream system. It is commonly used for data replication.

We will use CDC by capturing changes made to an Azure SQL and store the changes in Azure Data Explorer.

What is Azure Data Explorer (ADX)?

Azure Data Explorer is a fast and highly scalable data service for analytics.

The Azure Data Explorer analytics runtime is optimized for efficient analytics using powerful indexing technology to automatically index free-text and semi-structured data.

What is Azure Data Explorer and when is it a good fit?

Azure Data Explorer is a fully managed, high-performance, big data analytics platform that makes it easy to analyze high volumes of data in near real time. The Azure Data Explorer toolbox gives you an end-to-end solution for data ingestion, query, visualization, and management.

By analyzing structured, semi-structured, and unstructured data across time series, and by using Machine Learning, Azure Data Explorer makes it simple to extract key insights, spot patterns and trends, and create forecasting models. Azure Data Explorer is scalable, secure, robust, and enterprise-ready, and is useful for log analytics, time series analytics, IoT, and general-purpose exploratory analytics.

Azure Data Explorer key features

- Fast query performance: Azure Data Explorer is designed to handle high volumes of data in real-time and to provide fast query performance for ad-hoc analysis and visualization.

- Scalability: Azure Data Explorer is highly scalable and can handle large amounts of data from a variety of sources.

- Kusto Query Language (KQL): Azure Data Explorer uses KQL, a powerful query language that allows you to query and analyze data quickly and easily.

- Built-in visualization tools: Azure Data Explorer provides built-in visualization tools that allow you to easily create charts and graphs to visualize your data.

- Integration with other Azure services: Azure Data Explorer integrates with other Azure services, such as Azure Stream Analytics and Azure Event Hubs, to provide a complete data analytics solution.

Why use Azure Data Explorer for a Business Analytics Platform?

The reason to use ADX for a Business Analytics Platform is that we get the best of both worlds: Data lakes and Data warehouses.

|

|

Data Lake |

Data Warehouse |

Azure Data Explorer |

|

Suitable for raw data |

Y |

N |

Y |

|

Suitable for BI |

N |

Y |

Y |

|

Long Data retention |

Y |

N |

Y |

|

Suitable for DS |

Y |

N |

Y |

|

Cost |

Low |

High |

Low |

- Better query performance than data lakes through indexing.

- ADX implements fast indexing of all the columns including free-text and dynamic columns.

- ADX enables processing, aggregating and analyzing data.

- Mixed data types: structured, semi-structured and unstructured.

- ADX allows ingesting structured, semi-structured, and unstructured (free text) data.

- Direct access to source data.

- ADX stores immutable data (as in all data lakes).

- ADX allows transforming and exposing the increasingly “clean” data in the silver and gold layers.

- Choice of languages for processing (not only SQL)

- ADX has its own language (KQL) but it also supports SQL

- ADX provides native advanced analytics capabilities for:

- Time series analysis

- Pattern recognition

- Anomaly detection and forecasting

- Machine learning

- ADX allows you to build near real-time analytics dashboards using:

- Azure Data Explorer dashboards

- Power BI

- Grafana

- ADX can be accessed using a Spark Connector.

Part 2 – The Infrastructure

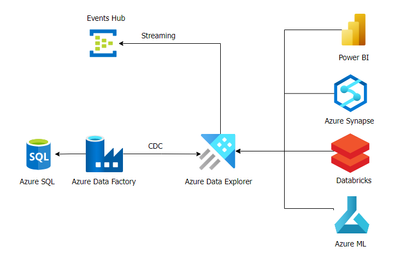

Architecture

- Azure SQL: our data source. In real life you can use any Database that can be connected to Azure Data Factory for CDC. For example: Cosmos DB, MySQL, Postgres, etc.

- Azure Events Hub: our data streaming and event ingestion service. It can process millions of events per second with low latency. Azure Data explorer offers “one click ingestion” from events hub.

- Azure Data Factory: our ETL service. Used for scale-out serverless data integration and data transformation. In this article we will use Azure Data Factory CDC capabilities to copy the changes in the Azure SQL DB into Azure Data Explorer.

- Azure Data Explorer: is the actual data lake house in our data platform.

- Power BI can be used for dashboards and reporting or you can leverage Azure Data Explorer’s built in reporting capabilities.

- Any of the following technologies can be used to build transformations and copy data between the layers in ADX.

- Azure Synapse

- Databricks

- Azure ML

- Apache Spark

Ingestion

We will ingest events from an Events hub into ADX using “one click ingestion”.

CDC (Capture Data Change) data will be written into ADX using Azure Data factory using periodically triggered ETLs.

The data layers

- Bronze layer:

- Contains the raw data arriving from Events hub and Azure Data Factory (CDC) from Azure SQL DB.

- ADX tables in this layer can be named “bronze<tbl-name>” or any other naming convention that suits you. I recommend using some prefix to identify all tables in this layer.

- Operational database changes (CDC) can be indefinitely stored for auditing purposes in the bronze layer or removed after usage by using a retention policy.

- Silver layer:

- Contains clean and anonymized data after enrichment and processing.

- ADX tables in this layer can be named “silver<tbl-name>” or any other naming convention that suits you. I recommend using some prefix to identify all tables in this layer.

- After ingesting data to the target tables in the silver layer, you may want to remove it from the source table in the bronze layer. Set a soft-delete period of 0 sec (or 00:00:00) in the source table's retention policy, and the update policy as transactional.

- Gold layer:

- Contains aggregated data used in dashboards and applications.

- ADX tables in this layer can be named “gold<tbl-name>” or any other naming convention that suits you.

Data processing

To build the Medallion Architecture in Azure Data Explorer, data needs to be transformed and copied between the layers (Bronze->Silver->Gold).

- Update policies instruct Azure Data Explorer to automatically append data to a target table whenever new data is inserted into the source table, based on a transformation query that runs on the data inserted into the source table. So, the data transformations between the layers can be done using update policies for cleanup, anonymization, etc.

More information can be found here:

- ADX Materialized views expose an aggregation query over a source table, or over another materialized view. Materialized views always return an up-to-date result of the aggregation query (always fresh). Querying a materialized view is more performant than running the aggregation directly over the source table. Materialized views can be used to calculate aggregations in the Silver layer to be stored in the Gold layer.

- Databricks, Azure Synapse or Azure ML can be used for data transformation and aggregation.

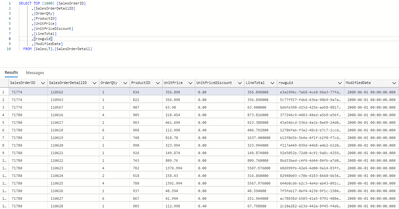

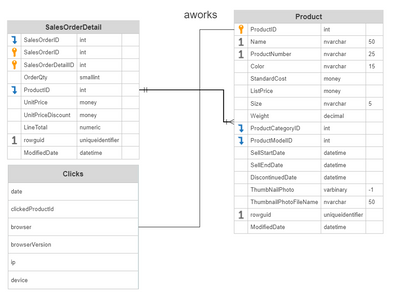

Data sources

- An Azure SQL DB containing 2 tables:

Products

Order Details

- An Event hub called “clicks-stream” with clicks events of the form:

{

"date": "2023-04-03 13:58:11",

"clickedProductId": 836,

"browser": "Safari",

"browserVersion": "4.5",

"ip": "202.254.96.230",

"device": "computer",

"source": "facebook"

}

All changes made to the tables in the Azure SQL DB are periodically copied to ADX using Azure Data Factory CDC.

All events in the event hub are ingested into ADX using ADX built-in ingestion.

Data diagram - ERD

Data Explorer tables

Bronze Layer

.create table [bronzeProducts] (ProductID:int,Name:string,ProductNumber:string,Color:string,StandardCost:real,ListPrice:real,Size:string,Weight:string,ProductCategoryID:long,ProductModelID:long,rowguid:guid,ModifiedDate:datetime, OrderDate:datetime)

.create table [bronzeOrders] (SalesOrderID:long,SalesOrderDetailID:long,OrderQty:long,ProductID:int,UnitPrice:real,UnitPriceDiscount:real,LineTotal:real,ModifiedDate:datetime)

.create table [bronzeClicks] (['date']:datetime,ProductID:int,browser:string,browserVersion:string,ip:string,device:string,source:string)

.create table bronzeClicks ingestion json mapping "bronzeClicks_mapping"

'[{"column":"date","path":"$[\'date\']","datatype":"","transform":null},{"column":"ProductID","path":"$[\'ProductID\']","datatype":"","transform":null},{"column":"browser","path":"$[\'browser\']","datatype":"","transform":null},{"column":"browserVersion","path":"$[\'browserVersion\']","datatype":"","transform":null},{"column":"ip","path":"$[\'ip\']","datatype":"","transform":null},{"column":"device","path":"$[\'device\']","datatype":"","transform":null},{"column":"source","path":"$[\'source\']","datatype":"","transform":null}]'

Silver Layer

.create table [silverProducts] (ProductID:int,Name:string,ProductNumber:string,Color:string,StandardCost:real,ListPrice:real,Size:string,Weight:string,ProductCategoryID:long,ProductModelID:long,rowguid:guid,ModifiedDate:datetime,OrderDate:datetime, WeightCategory:string)

.create table [silverOrders] (SalesOrderID:long,SalesOrderDetailID:long,OrderQty:long,ProductID:int,UnitPrice:real,UnitPriceDiscount:real,LineTotal:real,ModifiedDate:datetime, UnitFinalPrice:real, PurchaseDate:datetime)

.create table [silverClicks] (['date']:datetime,ProductID:int,browser:string,browserVersion:string,ip:string,device:string,source:string)

- silverProducts

For the sake of the example we will create a silver layer table for the products and we will add a column called WeightCategory with 4 possible values: S,M,L,U=unknown.

The Weight category will be calculated as follows:

S- weight<100

M- 100<weight<1000

L- weight>1000

U – unknown

We will use an update policy to copy the data between the bronze and silver layers.

First, let’s create a table in the silver layer.

.create function

with (docstring = 'Ingest raw products data and calculate product weight category', folder='ingestprojection')

ParseProducts ()

{

bronzeProducts

| extend weightCategory = case(todouble(Weight) <= 100, "S",

todouble(Weight) <= 1000 and todouble(Weight) > 100 , "M",

todouble(Weight) > 1000, "L",

"U")

}

.alter table

silverProducts

policy update @'[{"Source": "bronzeProducts", "Query": "ParseProducts", "IsEnabled" : true, "IsTransactional": true }]'

- silverOrders

For the sake of the example we will create a silver layer table for the orders and we will add 2 columns:

UnitFinalPrice to show the final unit price after discount.

PurchaseDate containing only the purchase date without the time.

.create function

with (docstring = 'Ingest raw orders data and calculate the final unit price', folder='ingestprojection')

ParseOrders ()

{

bronzeOrders

| extend UnitFinalPrice = round(UnitPrice * (1-UnitPriceDiscount),2)

| extend PurchaseDate = todatetime(split(ModifiedDate, " ")[0])

}

.alter table

silverOrders

policy update @'[{"Source": "bronzeOrders", "Query": "ParseOrders", "IsEnabled" : true, "IsTransactional": true }]'

- silverClicks

For the clicks table we will copy it “as-is” to the silver layer.

.create function

with (docstring = 'Ingest raw clicks', folder='ingestprojection')

ParseClicks ()

{

bronzeClicks

}

.alter table

silverClicks

policy update @'[{"Source": "bronzeClicks", "Query": "ParseClicks", "IsEnabled" : true, "IsTransactional": true }]'

CDC data includes all the changes from the operational database.

So every update will show as a new row in ADX.

This requires we create materialized views that show only the latest values for an item.

We will create materialized views to show only the latest changes to the products and orders tables.

.create materialized-view silverOrdersLatest on table silverOrders

{

silverOrders

| summarize arg_max(ModifiedDate, *) by SalesOrderID

}

.create materialized-view silverProductsLatest on table silverProducts

{

silverProducts

| summarize arg_max(ModifiedDate, *) by ProductID

}

- Gold Layer

In the gold layer we will create a single materialized view to show daily aggregations. This view can be used in dashboards in ADX or Power BI.

- goldDailySales

silverOrdersLatest

| join kind=inner silverProductsLatest on $left.ProductID == $right.ProductID

| summarize DailySales = sum(LineTotal) by PurchaseDate, ProductID, Name

silverClicks

| join kind=inner silverProductsLatest on $left.ProductID == $right.ProductID

| summarize DailyClicks = count() by 'date', ProductID, browser, browserVersion, device, source

That’s it, we are done.

I hope you enjoyed reading this.

For the actual code on "how to guide" for the deployment see part 2:

Part 2 - Building a Data Lakehouse using Azure Data explorer - the deployment

Thanks

Denise

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.