Dear SSIS Users,

Starting with version 1.15.0, the Azure Feature Pack supports SQL Server 2019. This release also includes the following new features.

- Delete operation in Flexible File Task.

  - Supports deleting a folder or file from the local file system or Azure Data Lake Storage Gen2.

  - Supports deleting a file from Azure Blob Storage.

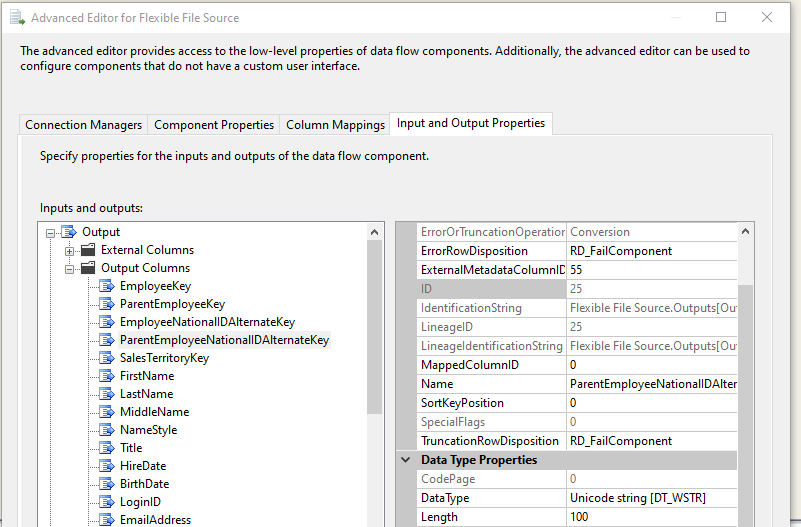

- External and output data type conversion in Flexible File Source. To convert data types, change the DataType property of the output columns on the Input and Output Properties tab of the Advanced Editor for Flexible File Source dialog, as shown below.

You can download this new version of Azure Feature Pack from the following links: