- Protecting email Data and Services

Electronic communication and collaboration services[1] such as Outlook.com, Skype, Gmail, Slack, and OneDrive carry valuable private and confidential communications that need protection. But these same services also provide a means for attackers to steal information or seize control of users’ computers for nefarious purposes, via viruses, worms, spam, phishing attacks, and other forms of malware.

Preventing the theft of user information and the dissemination of malware is a core feature of electronic communication and collaboration services. This requires significant processing of users' communications and data both in-transit and after delivery. This processing can and should be done without compromising the user’s privacy or the confidentiality of their communications[2].

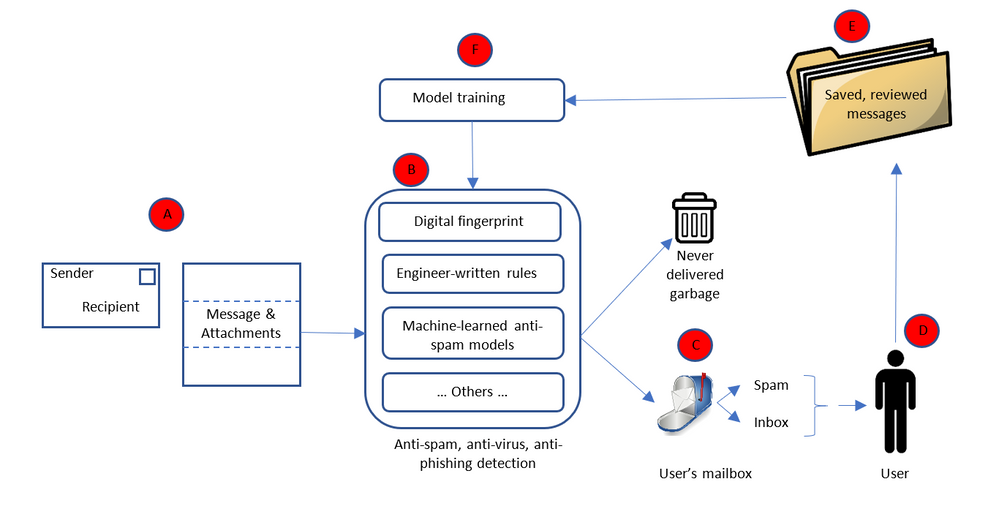

Message processing data flow:

To protect against malware distributed via electronic communications and collaboration systems such as email servers[3], the content follows a conceptually simple flow.

Starting with “A”, the message is received by the recipient’s email service. The message’s envelope, contents, and any attachments are passed to the anti-malware portion of the email service (“B”), which determines whether or not the message is malware. Based on that determination, the message is delivered to the user as appropriate (“C”). Some messages are determined to be malware with near certainty and are never delivered to the user; instead they are deleted as quickly as possible[4]. Other messages are likely to be spam, but the service is not certain, so they are delivered to the user’s mailbox but placed in the spam/junk-mail folder. The remaining valid messages are delivered to the user’s inbox. The service can never determine with absolute certainty whether or not a message is malicious; it is always a probabilistic assessment. If the service is wrong in either direction, i.e. the user is exposed to malware or the user indicates that a message originally thought to be spam is not, the message may be added to a database of messages (“E”) used to train the next version of the anti-malware logic (“F”), thus permitting the system to “learn” over time and become more effective.
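The three delivery outcomes described above can be sketched as a simple thresholding step on the probabilistic assessment. This is a minimal illustration only: the `Verdict` names, the `triage` function, and both threshold values are assumptions made for the example, not values used by any real service.

```python
from enum import Enum


class Verdict(Enum):
    DELETE = "delete"  # near-certain malware: never delivered
    JUNK = "junk"      # probably spam: delivered to the junk folder
    INBOX = "inbox"    # probably valid: delivered to the inbox


# Hypothetical thresholds, chosen only for illustration.
DELETE_THRESHOLD = 0.99
JUNK_THRESHOLD = 0.50


def triage(malicious_probability: float) -> Verdict:
    """Map the anti-malware service's probability estimate to a delivery decision."""
    if not 0.0 <= malicious_probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if malicious_probability >= DELETE_THRESHOLD:
        return Verdict.DELETE
    if malicious_probability >= JUNK_THRESHOLD:
        return Verdict.JUNK
    return Verdict.INBOX
```

Because the input is a probability rather than a certainty, any choice of thresholds trades missed malware against valid messages sent to junk, which is why the feedback loop into “E” and “F” matters.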

Clearly this entire process entails considerable processing of the messages. The types of processing are diverse and done in two key phases: message processing (“A” – “D”) and model building (“E” – “F”).

Anti-malware is particularly important for in-transit messages because attacks most typically enter the service through communications being transmitted. It is, however, also performed against already received and processed messages. As new attacks are identified – usually after the attack has been launched and some infected content has evaded the filters and been delivered to users – the anti-malware service is updated to defend against those attacks, and the service is rerun against recently delivered messages to retroactively remove instances of said attack.

Processing messages:

The types of processing done on messages to determine whether or not they are malicious include simple rules (e.g. messages without senders are likely not valid), reputation systems (messages from a certain set of IP addresses or senders are likely not valid, such as lists managed by the Spamhaus project[5]), digital thumbprints (comparing the thumbprint of the message or attachment to the thumbprints of known bad messages), honey pots (email addresses with no user and thus mailboxes that could never get any valid messages – anything delivered to them is spam or malware), and complex machine-learned models which process the contents of the message or the attachment[6]. As new forms of attack are encountered, new forms of defense must be quickly developed to keep users safe.
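The simpler checks listed above (rules, reputation, thumbprints, and honey pots) can be sketched in a few lines. Everything named here is hypothetical: the `Message` fields, the blocklist, the honey pot address, and the known-bad thumbprint set are made up for the example, and an exact SHA-256 hash is only one simple way to compute a thumbprint.

```python
import hashlib
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    sender: str
    recipient: str
    source_ip: str
    body: bytes


# Hypothetical data sets, for illustration only.
BLOCKED_IPS = {"203.0.113.7"}
HONEYPOT_ADDRESSES = {"trap@example.com"}
KNOWN_BAD_THUMBPRINTS = {hashlib.sha256(b"known bad payload").hexdigest()}


def thumbprint(content: bytes) -> str:
    """Exact-match thumbprint of a message body or attachment."""
    return hashlib.sha256(content).hexdigest()


def suspicious_signals(msg: Message) -> List[str]:
    """Return the names of every simple check that the message trips."""
    signals = []
    if not msg.sender:                                   # simple rule
        signals.append("missing-sender")
    if msg.source_ip in BLOCKED_IPS:                     # reputation system
        signals.append("bad-reputation")
    if thumbprint(msg.body) in KNOWN_BAD_THUMBPRINTS:    # digital thumbprint
        signals.append("known-bad-thumbprint")
    if msg.recipient.lower() in HONEYPOT_ADDRESSES:      # honey pot
        signals.append("honeypot-recipient")
    return signals
```

In this sketch the signals are only collected; how they are weighted and combined with a machine-learned score is left open.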

This requires processing of the message envelope, body, and attachments. For example:

- Message envelopes indicate not only the message recipient but also the supposed sender, and the server path which the message followed to reach the recipient. This is critical, not only to determine the recipient (needed to ensure the message is delivered to the intended recipients), but also to determine how probable it is that the sender is who they claim to be. It is common for attackers to “spoof” a sender, i.e. pretend they are a certain sender even though they are not. Knowing the path taken by the message can help determine if it has been sent by a spoofed sender. For example, if a message originates from a trusted sending service which verifies the identity of the sender, then passes through a series of trusted intermediate services to the destination, the probability that the sender has been spoofed is much lower.

- Message bodies are among the most critical elements of the message which need to be processed to determine if the message is likely spam or a phishing attack. For example, text about “great deals on pharmaceuticals” is often indicative of spam. Without processing the body of the message, it is impossible to determine this. Attackers know that defenders watch out for such phrases, so they often try to hide them as text within an image. To the reader this looks similar, but defenders must run the images through optical character recognition algorithms to convert the images into text that can then be compared against a set of suspicious phrases.

Another example of message body analysis is comparing the text of hyperlinks to the URL. If a hyperlink’s text says “Click here to reset your Facebook password” but the URL points to “http://12345.contoso.com”, it is likely a phishing attack because a Facebook password reset link should never point to anything other than a genuine Facebook URL. Because most users do not check the URL before clicking on the hyperlink, it is important to protect them from such attacks. Without processing the message body, this is impossible.

- It is equally important to analyze attachments; otherwise attackers can use them to deliver malicious payloads or contents to users. Attachments can be executables that open a user’s computer to attacker control. They can also be phishing attacks with the mismatched hyperlink text and URL scenario we described above embedded into the attachment. Any attack in the body of a message can appear in an attachment, and attachments can include additional forms of attack.
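The mismatched hyperlink check described above, which applies equally to message bodies and to HTML attachments, can be sketched with the Python standard library. The `BRAND_DOMAINS` table and the function names are assumptions made for this example; a real service would need a far richer notion of which domains legitimately belong to which brand.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical mapping from a brand named in link text to its legitimate domain.
BRAND_DOMAINS = {"facebook": "facebook.com"}


class LinkCollector(HTMLParser):
    """Collect (href, visible text) pairs for every <a> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def host_matches(host, domain):
    """True if host is the domain itself or one of its subdomains."""
    return host == domain or host.endswith("." + domain)


def mismatched_links(html):
    """Return links whose text names a brand but whose URL points elsewhere."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    flagged = []
    for href, text in parser.links:
        host = (urlparse(href).hostname or "").lower()
        for brand, domain in BRAND_DOMAINS.items():
            if brand in text.lower() and not host_matches(host, domain):
                flagged.append((href, text))
                break
    return flagged
```

Applied to the example above, a link whose text mentions Facebook but whose URL is `http://12345.contoso.com` is flagged, while the same text pointing at a `facebook.com` host is not.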

Model building:

Model building is the portion of machine learning in which the logic that does the evaluation is updated. It is the “learning” part of machine learning, where the evaluation algorithm is updated based on new data so that it produces the desired output, not only based on data and results it has previously seen and been trained on, but also based on any new data or results.
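To make the idea of updating the evaluation logic concrete, here is a toy naive Bayes classifier, a technique with a long history in spam filtering, that refines its estimate each time a newly labeled message is added. It is a sketch under simplifying assumptions (whitespace tokenization, add-one smoothing, the class and label names are invented for the example) and is not a description of how any production service builds its models.

```python
import math
from collections import Counter


class TinySpamModel:
    """Toy naive Bayes model that is updated one labeled message at a time."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.message_counts = {"spam": 0, "ham": 0}

    def learn(self, text, label):
        """Fold one labeled message ("spam" or "ham") into the model."""
        self.message_counts[label] += 1
        self.word_counts[label].update(text.lower().split())

    def spam_probability(self, text):
        """Estimate the probability that the text is spam."""
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_messages = sum(self.message_counts.values())
        log_scores = {}
        for label in ("spam", "ham"):
            # Add-one smoothing keeps unseen labels and words from scoring zero.
            score = math.log((self.message_counts[label] + 1) / (total_messages + 2))
            total_words = sum(self.word_counts[label].values())
            for word in text.lower().split():
                count = self.word_counts[label][word]
                score += math.log((count + 1) / (total_words + len(vocab) + 1))
            log_scores[label] = score
        # Normalize the two log scores into a probability.
        top = max(log_scores.values())
        weights = {k: math.exp(v - top) for k, v in log_scores.items()}
        return weights["spam"] / (weights["spam"] + weights["ham"])
```

A user correction, such as marking a junked message as valid, would be fed back as `model.learn(text, "ham")`, shifting later estimates for similar messages.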

The entire computer science sub-discipline of machine learning is the science of learning algorithms, and of this training phase, so a full treatise is beyond the scope of this paper. Generally speaking however, learning algorithms for detecting malware can be developed that respect the privacy of recipients because what is necessary is an understanding of the attack and the pattern of the attack, not the victims of that attack.

Privacy: data protection and confidentiality implications

Protecting the personal data of both sender and recipients, and communications confidentiality, during message processing (“A” – “D” above) can be done without diminishing the efficacy of the anti-malware service because that service acts as a stateless function. The service processes the message and creates new metadata indicating whether or not the message should be delivered, without retaining any knowledge of the contents of the message and without exposing the message to anyone except the intended recipients. The recipient’s communication service processes and accesses the content on behalf of the recipient, and it is the recipient’s expectation to be protected against spam and malicious communications. Failing to process every user’s every message would expose the entire service, and all its users, to known infections, which would be irresponsible.

Protecting personal data and confidentiality during the model building phase (“E” – “F” above) is done in one of three ways: by selecting algorithms and approaches that preserve privacy and confidentiality, i.e. those in which personal data is not retained or exposed (for example, selection of privacy preserving machine learning feature vectors); by restricting the use of the communication to building anti-malware capabilities[7]; or by building user-specific models which solely benefit that user. The first two techniques have been used historically, but the third is becoming increasingly common as users’ expectations of what qualifies as nuisance communications (i.e. spam) become more individualized[8].
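One way to picture a feature vector that does not retain the original text is feature hashing: each token is mapped to a bucket index and only the bucket counts are kept. This is an illustrative assumption about what such a vector could look like, not the technique the service uses, and hashing alone is not a formal privacy guarantee. The bucket count and function name are hypothetical.

```python
import hashlib

NUM_BUCKETS = 1024  # hypothetical vector size, chosen for illustration


def hashed_features(text, num_buckets=NUM_BUCKETS):
    """Turn text into bucket counts; the words themselves are not stored."""
    vector = [0] * num_buckets
    for token in text.lower().split():
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:8], "big") % num_buckets
        vector[bucket] += 1
    return vector
```

The resulting vector records how often each bucket was hit, which is enough to learn the pattern of an attack, while word order and the recipient's identity are not part of it.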

Using communication data for model building in anti-malware capabilities is done without explicit user consent. Malware is an ongoing struggle between attackers and the people providing the communications safety service, with attackers trying to find ways to get messages past the safety service. As new attacks emerge the safety service must respond quickly[9], using as much information as is available (which often necessitates sharing information with the anti-malware elements of other services). Attackers are increasingly using machine learning to create and launch attacks[10], requiring defenders to respond in kind with increasingly advanced machine learning-based defenses. One way to do this is to automate the creation of new versions of the anti-malware model so the service quickly inoculates all users against new attacks. The effectiveness of anti-malware depends on knowing about, and inoculating all users against, these attacks. This data can be used without exposing personal data or compromising confidentiality. All users of a service, and the entire service itself, are at risk if we fail to constantly process content in order to detect new forms of infection for every user. Similar to failing to inoculate a few members of a large population against an infectious disease, failing to process all users against these attacks would be irresponsible and would ultimately put the entire population at risk.

For anti-malware it is important to note that the sender is generally malicious, and unlikely to grant consent to build better defenses against their attack. Requiring consent from all parties will make it impossible for services to provide a safe, secure, and nuisance-free communications and collaboration environment for all.

[1] It is difficult to differentiate between communication and collaboration, or between messages and other collaboration artifacts. Consider a document jointly authored by many people, each of whom leaves comments in the document to express ideas and input. Those same comments could be transmitted as email, chats, or through voice rather than comments in a document. Rather than treat them as separate, we recognize collaboration and communication as linked and refer to them interchangeably.

[2] Privacy, protection of personal data and confidentiality are frequently treated as synonymous, but we draw a distinction. For the purposes of this paper we treat data protection as the act of protecting knowledge of who a piece of data is about, and confidentiality as protecting that information. For example, consider a piece of data that indicates Bob is interested in buying a car. Data protection can be achieved by removing any knowledge that the data is about Bob; knowing simply that someone is interested in buying a car protects Bob’s privacy. Protecting confidentiality is preventing Bob’s data from being exposed to anyone but him. In this specific instance, Bob may only be concerned with protecting his personal data. However, if the information related to Microsoft’s interest in buying LinkedIn, Microsoft would be very interested in protecting the confidentiality of that data.

[3] Henceforth in this paper we will refer to this as an email service, but it is understood that similar problems and solutions apply to other communication and collaboration services.

[4] A staggering 77.8% of all messages sent to an email service are spam, with 90.4% of them being identified as such with sufficient certainty to prevent them ever being delivered to users.

[6] Use of machine learning in anti-spam services is one of the oldest, most pervasive, and most useful applications of that technology, dating back to at least 1998 (http://robotics.stanford.edu/users/sahami/papers-dir/spam.pdf)

[7] Services like Office365 that provide subscription-funded productivity services to users are incentivized to preserve the confidentiality of user data; the user is the customer, not the product.

[8] To my daughter, communications about new Lego toys are not a nuisance; they are interesting and desirable. However, to me they are an imposition.

[9] Today, a typical spam campaign lasts under an hour. Yet in that time it gets through often enough to make it worthwhile to the attacker.

[10] https://erpscan.com/press-center/blog/machine-learning-for-cybercriminals

- Jim Kleewein, Technical Fellow, Microsoft
