- Home

- Planner

- Planner Blog

- Microsoft Planner: Considerations for Reporting–Part 2

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

A little longer getting to this than I’d hoped – but some of that time (as well as the day job) was spent doing a better job of what will be part 3 – so looking forward to writing that up! For Part 2 though I’m looking at Flow to start with – and then seeing what I can do with the data. Don’t expect to see a finished example here that will fit your needs – I’m really just putting ideas out there – see what might work for you.

Flow is really gathering momentum and I was surprised at the variety of triggers and actions available for both Microsoft and 3rd Party applications. Unrelated to reporting – but one example I showed at Ready was taking a picture with Adobe’s Creative Cloud, which when saved as an asset triggered the Flow and then this in turn created a Planner task in my ‘Photos’ plan. Cool. You can imagine taking it a bit further and getting the image attached and maybe even reading the EXIF GPS and adding that. One extended scenario could be fixing potholes in roads – take a picture and the task to fix it gets created and assigned.

But back to reporting. The flow I decided to use was triggered by a daily schedule (unfortunately no triggers in Planner yet), then this flows to the List my plans action – and this created a Blob in my Azure Blob storage account. In this case just writing the value from the plan.

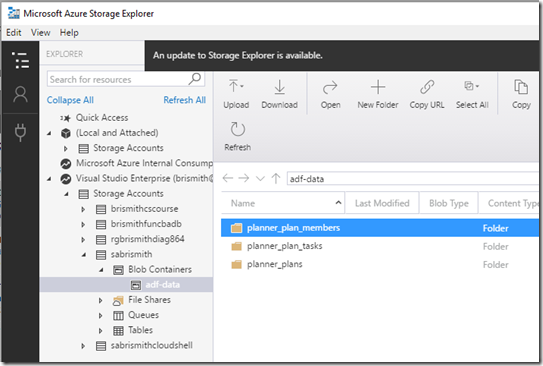

Looking at my Azure storage through the Microsoft Azure Storage Explorer I can see I have three ‘directories’ – and the one I was writing to was planner_plans.

Opening up that directory I see my 31 plans, where the name is the ID of the Plan and the contents is just the ID and title.

I then have another flow triggered by new blobs in this directory.

A new Blob makes a call to List tasks – using the Plan ID, then this writes to the planner_plan_tasks directory a blob with the name being the ID of the task, then a comma separated list with the task, plan and bucket IDs, then the start and due dates and the percent complete – and finally the title – and in this case a hard-coded timestamp. The idea of the time stamp was to have snapshots – but I’m not really taking it that far yet. So the contents would look something like:

-gNBHh_yukGpXdlmqy3IO2QAGiF8,lX-TnekUv0e8nVLMP4q6X2QABVGT,naTONxTjREe2F7SzoP9Fr2QAMS_w,2017-06-19T12:00:00Z,,100,Satisfaction,2017-07-01

The other directory isn’t in these flows but I did have did push the ‘members’ json into there just to play around with it.

And this was for the plan with the ID starting GmA – here we see the first few members of the group associated with that plan.

Now I have the data in blob storage there are a number of things I could do – either manually – or using Azure Data Factory to orchestrate moving this somewhere else. I’ll admit this may well be adding more steps and process than is actually needed – and Part 3 shows a smoother approach – but one aim for me was using some of the cool new Azure capabilities just to get my head around how they work and how they could be used.

I initially looked at the wizard approach using the preview ‘Copy Data’ option in Data Factory – and you can see I already have 6 linked services.

The basic stage are to enter the properties (and I just named my sample and set it to one time, then you get to choose your Source and Destination – either from the full list of data stores (which includes 3rd Party source too) or from existing connections – and I’d already created sources for my blob storage and destinations for my SQLAzure database. In this example I’ll choose the json file of members shown above (and I’m not screenshotting all pages – there are some full tutorials out there .

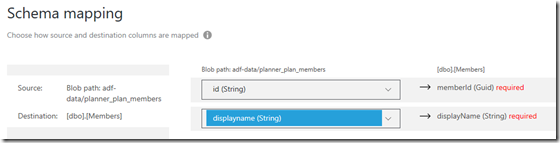

In formatting the file settings I say it is a set of objects (my members) and then I want to cross-apply nested JSON array of value. This then presents the columns and I have removed all but displayname and id.

I selected my destination as SQLAzure from an existing connection, then chose my ‘Members’ table. I can then map my schema for source and destination..

I take the default for other settings around performance – then I can review my settings.

I go to the deploy stage – and all looks good!

All completed ok, and I can see the results in my database.

Obviously you wouldn’t do this file by file as I have – and the pipelines can be defined in json and applied across many files. So for my tasks for example I would define the pipeline and how to handle the CSV file – and it could then be set to process any files that arrived in a certain blob. Reporting against my data once it is in SQL Server would then be very straightforward using any of a number of tools.

As an example of what the pipeline might look like in its json format – here is my activity that did that copy. There will be similar json files representing the source and destination, as well as the linked services:

{

"name": "Activity-0-GmA-1oD6tUC27IgEEuENRWQAGrMQ-2017-07-01_json->[dbo]_[Members]",

"linkedServiceName": null,

"inputs": [

{

"name": "InputDataset-g18"

}

],

"outputs": [

{

"name": "OutputDataset-g18"

}

],

"type": "Copy",

"policy": {

"concurrency": 1,

"timeout": "1.00:00:00",

"retry": 3,

"delay": "00:00:00",

"executionPriorityOrder": "NewestFirst",

"longRetry": 0,

"longRetryInterval": "00:00:00",

"style": "StartOfInterval"

},

"scheduler": {

"frequency": "Day",

"interval": 1

},

"typeProperties": {

"source": {

"type": "BlobSource",

"recursive": false

},

"sink": {

"type": "SqlSink",

"writeBatchSize": 0,

"writeBatchTimeout": "00:00:00"

},

"translator": {

"type": "TabularTranslator",

"columnMappings": "id:memberId,displayname:displayName"

}

}

}

Take a look at Flow, and Azure Data Factory – there is some cool technology that can help you move and consolidate your various data elements, wherever they may currently sit.

There are also some very cool json capabilities in SQL Server 2016 if you hadn’t already seen them – so plenty of options for loading data into SQL even if Azure isn’t on your radar (but hopefully I’ve piqued your interest). See the Getting Started information if you want to have a play – with $200 free credit - https://azure.microsoft.com/en-us/get-started/ – and plenty of free options.

There are a few limitations on the data you can get out via Flow right now but there are further triggers and activities planned. (OK, pun intended

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.