Troubleshooting Kubernetes Networking on Windows: Part 1

We’ve all been there: Sometimes things just don’t work the way they should even though we followed everything down to a T.

Kubernetes, in particular, is not easy to troubleshoot, even if you’re an expert. There are multiple components involved in the creation and deletion of containers that must all interoperate end-to-end. For example:

- Inbox platform services (e.g. WinNAT, HNS/HCS, VFP)

- Container runtimes & Go-wrappers (e.g. Dockershim, ContainerD, hcsshim)

- Container orchestrator processes (e.g. kube-proxy, kubelet)

- CNI network plugins (e.g. win-bridge, win-overlay, azure-cni)

- IPAM plugins (e.g. host-local)

- Any other host-agent processes/daemons (e.g. FlannelD, Calico-Felix, etc.)

- … (more to come!)

This, in turn, also means that the potential problem space to investigate can grow overwhelmingly large when things do end up breaking. We often hear the phrase: “I don’t even know where to begin.”

The intent of this blog post is to educate the reader on the available tools and resources that can help unpeel the first few layers of the onion; it is not intended to be a fully exhaustive guide to root-cause every possible bug for every possible configuration. However, by the end one should at least be able to narrow down on an observable symptom through a pipeline of analytical troubleshooting steps and come out with a better understanding of what the underlying issue could be.

NOTE: Most of the content in this blog is taken directly from the amazing KubeCon Shanghai ’18 video “Understanding Windows Container Networking in Kubernetes Using a Real Story” by Cindy Xing (Huawei) and Dinesh Kumar Govindasamy (Microsoft).

Table of Contents

- Ensure Kubernetes is installed and running correctly

- Use a script to validate basic cluster connectivity

- Query the built-in Kubernetes event logs

- Analyze kubelet, kube-proxy logs

- Inspect CNI network plugin configuration

- Verify HNS networking state

- Take a snapshot of your network using CollectLogs.ps1

- Capture packets and analyze network flows

Step 1: Ensure Kubernetes is installed and running correctly

As mentioned in the introduction, there are a lot of different platform and open-source actors that are needed to operate a Kubernetes cluster. It can be hard to keep track of all of them, especially given that they release at different cadences.

One quick sanity-check that can be done without any external help is to employ a validation script that verifies supported bits are installed:

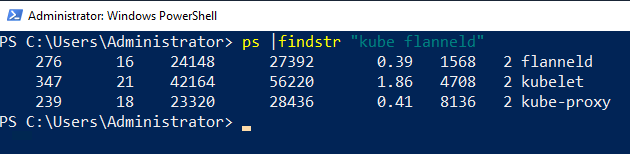

While trivial, another step that is just as easily overlooked is ensuring that all the components are indeed running. Any piece of software can crash or enter a deadlock-like state, including host-agent processes such as kubelet.exe or kube-proxy.exe. This can result in unexpected cluster behavior and detached node/container states, but thankfully it’s easy to check. Running a simple ps command usually suffices:

Unfortunately, the above command won’t capture that the processes themselves could be stuck waiting in a deadlock-like state; we will cover this case in step 4.
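As a rough illustration of the same check in scripted form, here is a minimal Python sketch. It assumes the process list is obtained from the standard Windows `tasklist /FO CSV /NH` command; the helper name `missing_processes` and the sample output are hypothetical, and the list of expected processes should be adjusted to your deployment (for example, adding flanneld.exe on Flannel clusters).

```python
import csv
import io

# Host-agent processes named in the text above; extend as needed for your cluster.
EXPECTED = ("kubelet.exe", "kube-proxy.exe")

def missing_processes(tasklist_csv, expected=EXPECTED):
    """Return the expected image names absent from `tasklist /FO CSV /NH` output."""
    rows = csv.reader(io.StringIO(tasklist_csv))
    running = {row[0].lower() for row in rows if row}
    return [name for name in expected if name.lower() not in running]

# Hypothetical sample; on a node this text would come from something like
# subprocess.run(["tasklist", "/FO", "CSV", "/NH"], capture_output=True, text=True).stdout
sample = (
    '"kubelet.exe","4312","Services","0","61,204 K"\n'
    '"dockerd.exe","2980","Services","0","48,112 K"\n'
)
print(missing_processes(sample))
```

Like the ps command, this only tells you whether a process exists, not whether it is making progress.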

Step 2: Use a script to validate basic cluster connectivity

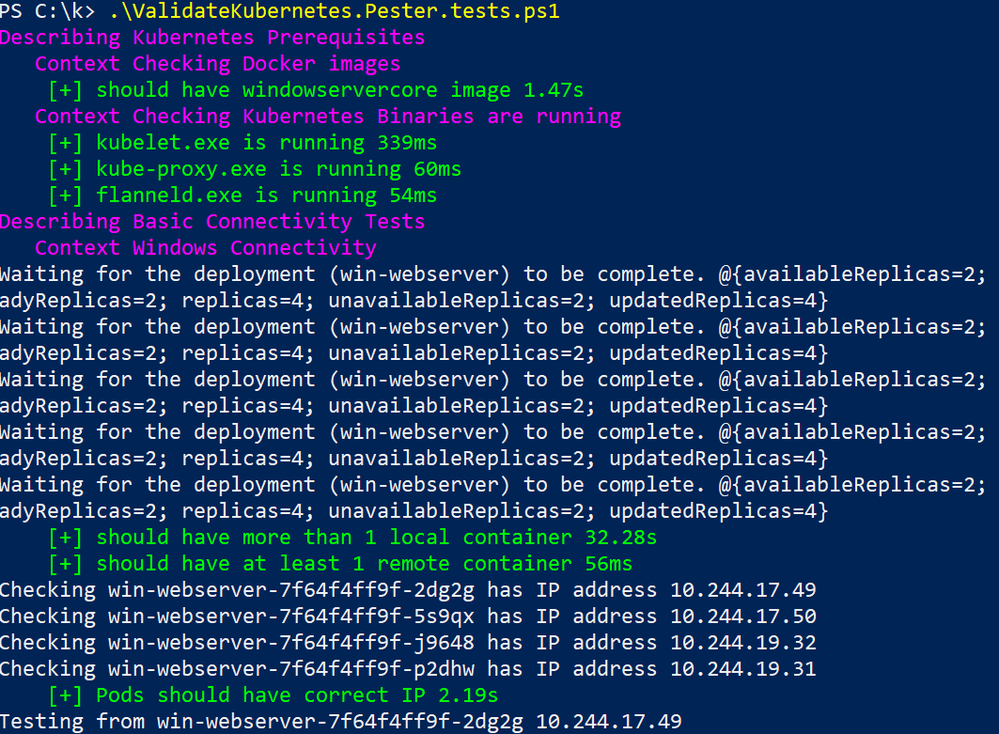

Before diving head-first into analyzing HNS resources and verbose logs, there is a handy Pester test suite which allows you to validate basic connectivity scenarios and report on success/failure here. The only prerequisites for running it are that you are using Windows Server 2019 (other versions require a minor fix-up) and that you have more than one node for the remote pod test:

The intent of running this script is to get a quick overview of overall networking health and, ideally, to accelerate subsequent steps by knowing what to look for.

Step 3: Query the built-in Kubernetes event logs

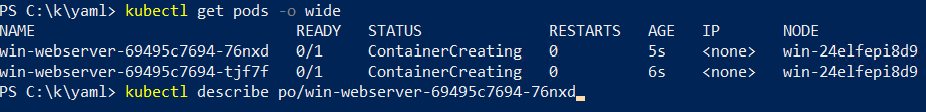

After verifying that all the processes are running as expected, the next step is to query the built-in Kubernetes event logs and see what the basic built-in health-checks that ship with K8s have to say:

More information about misbehaving Kubernetes resources, such as event logs, can be viewed using the “kubectl describe” command. For example, one frequent misconfiguration on Windows is a “pause” container with a kernel version that doesn’t match the host OS:

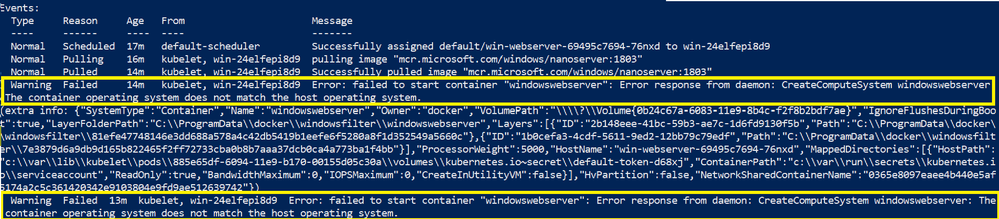

Here are the corresponding event logs from kubectl describe output, where we accidentally built our “kubeletwin/pause” image on top of a Windows Server, version 1803 container image and ran it on a Windows Server 2019 host:

(On a side note, this specific issue can be avoided altogether by referencing the multi-arch pause container image mcr.microsoft.com/k8s/core/pause:1.0.0, which will run on both Windows Server, version 1803 and Windows Server 2019.)
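When a cluster produces many events, it can help to filter for warnings only. The following is a minimal sketch, assuming the input is the JSON printed by `kubectl get events -o json`; the function name `warning_events`, the pod name, and the event messages are hypothetical sample data rather than output from a real cluster.

```python
import json

def warning_events(events_json):
    """Return (object name, reason, message) for every event of type Warning."""
    items = json.loads(events_json).get("items", [])
    return [
        (
            event.get("involvedObject", {}).get("name", ""),
            event.get("reason", ""),
            event.get("message", ""),
        )
        for event in items
        if event.get("type") == "Warning"
    ]

# Hypothetical sample shaped like `kubectl get events -o json` output.
sample = json.dumps({"items": [
    {"type": "Normal", "reason": "Scheduled",
     "message": "Successfully assigned pod to node",
     "involvedObject": {"name": "win-webserver-1"}},
    {"type": "Warning", "reason": "FailedCreatePodSandBox",
     "message": "Failed to create pod sandbox",
     "involvedObject": {"name": "win-webserver-1"}},
]})

for name, reason, message in warning_events(sample):
    print(f"{name}: {reason}: {message}")
```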

Step 4: Analyze kubelet, kube-proxy logs

The kubelet, FlannelD, and kube-proxy logs are another useful source of information for root-cause analysis of failing container creations.

These components all have different responsibilities. Here is a very brief summary of what they do, which should give you a rough idea of what to watch out for:

| Component | Responsibility | When to inspect? |
| --- | --- | --- |
| Kubelet | Interacts with container runtime (e.g. Dockershim) to bring up containers and pods. | Erroneous pod creations/configurations |
| Kube-proxy | Manages network connectivity for containers (programming policies used for NAT’ing or load balancing). | Mysterious network glitches, in particular for service discovery and communication |
| FlannelD | Responsible for keeping all the nodes in sync with the rest of the cluster for events such as node removal/addition. This consists of assigning IP blocks (pod subnets) to nodes as well as plumbing routes for inter-node connectivity. | Failing inter-node connectivity |

Log files for all of these components can be found in different locations; by default a log dump for kubelet and kube-proxy is generated in the C:\k directory, though some users opt to log to a different directory.

If the logs appear not to have been updated in a long time, then perhaps the process is stuck, and a simple restart or sending the right signal can kick things back into place.
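The staleness check can be automated by comparing a log file's last-modified time against a threshold. This is a minimal sketch; the helper name `is_stale` and the 10-minute default are assumptions chosen for illustration, not values taken from any Kubernetes component, and the log path is whatever your deployment uses.

```python
import os
import time

def is_stale(log_path, max_age_seconds=600, now=None):
    """Return True if the file was last modified more than max_age_seconds ago."""
    now = time.time() if now is None else now
    return (now - os.path.getmtime(log_path)) > max_age_seconds

# Example usage on a node (the path is an assumption based on the default C:\k directory):
# if is_stale(r"C:\k\kubelet.err.log"):
#     print("kubelet log has not been updated recently; the process may be stuck")
```

A quiet log is only a hint: an idle but healthy process may also log infrequently, so treat a stale file as a reason to look closer rather than as proof of a hang.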

Step 5: Inspect CNI network plugin configuration

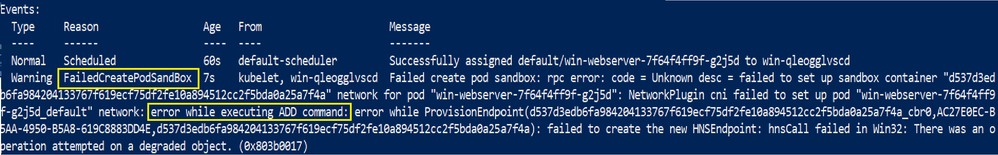

Another common source of problems that can cause containers to fail to start with errors such as “FailedCreatePodSandbox” is having a misconfigured CNI plugin and/or config. This usually occurs whenever there are bugs or typos in the deployment scripts that are used to configure nodes:

Thankfully, the network configuration that is passed to CNI plugins in order to plumb networking into containers is a very simple static file that is easy to access. On Windows, this configuration file is stored under the “C:\k\cni\config\” directory. On Linux, a similar file exists in “/etc/cni/net.d/”.
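Because the file is plain JSON, many typos can be caught mechanically before a pod is ever scheduled. The sketch below is one way to do that, assuming a single-plugin network configuration in which the CNI specification requires the `cniVersion`, `name`, and `type` keys; the function name `check_cni_config` and the sample values are hypothetical, and plugin-specific fields are not validated.

```python
import json

# Keys required by the CNI specification for a single-plugin network configuration.
REQUIRED_KEYS = ("cniVersion", "name", "type")

def check_cni_config(text):
    """Return a list of problems found in a CNI config; an empty list means none were found."""
    try:
        conf = json.loads(text)
    except json.JSONDecodeError as err:
        return [f"not valid JSON: {err.msg} at line {err.lineno}, column {err.colno}"]
    if not isinstance(conf, dict):
        return ["top-level value is not a JSON object"]
    return [f"missing required key: {key}" for key in REQUIRED_KEYS if key not in conf]

# Hypothetical samples: one well-formed config and one with a stray trailing comma.
good = '{"cniVersion": "0.2.0", "name": "cbr0", "type": "win-bridge"}'
broken = '{"cniVersion": "0.2.0", "name": "cbr0",}'
print(check_cni_config(good))
print(check_cni_config(broken))
```

A check like this only catches structural mistakes; a wrong subnet or policy value still parses cleanly and has to be compared against the plugin documentation by hand.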

Here is the corresponding typo that caused pods to fail to start due to degraded networking state:

Whenever there are failing pod creations or unexpected network plumbing, we should always inspect the CNI config file for typos and consult the CNI plugins documentation for more details on what is expected. Here are the docs for the Windows plugins:

Step 6: Verify HNS networking state

Having exhaustively examined Kubernetes-specific event logs and configuration files previously, the next step usually consists of collecting network information programmed on the networking stack (control plane and data plane) used by containers. All of the information can be collected conveniently by the “CollectLogs.ps1” script, which will be done in step 7.

Before reviewing the contents of the “CollectLogs.ps1” tool, the Windows container networking architecture needs to be understood at a high level.

| Windows Component | Responsibilities | Linux Counterpart |
| --- | --- | --- |
| Network Compartment | | Network namespace |
| vSwitch and HNS networks | | Bridge and IP routing |
| vNICs, HNS endpoints, and vSwitch ports | | IP Links and virtual network interfaces |
| HNS policies, VFP rules, Firewall | | iptables |

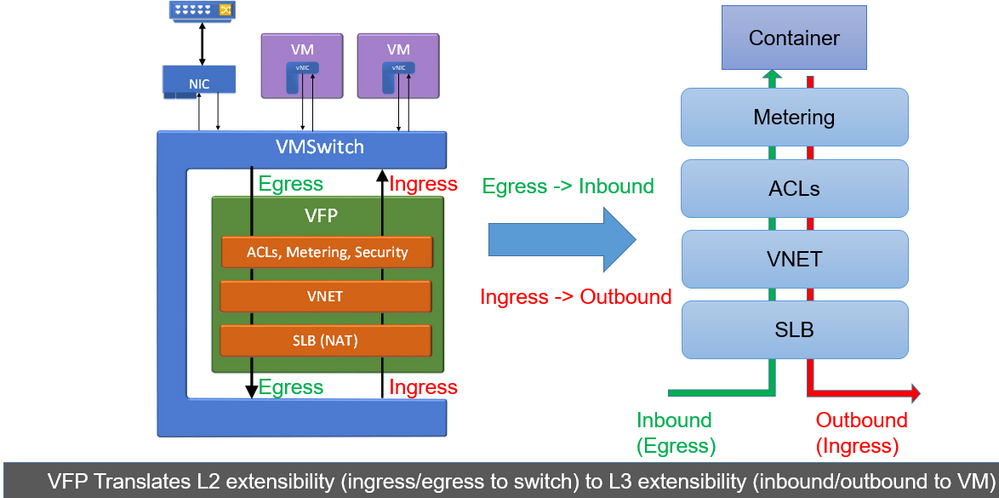

One particular component to highlight is VFP (Virtual Filtering Platform), which is a vSwitch extension containing most of the decision logic used to route packets correctly from source to destination by defining operations to be performed on packets such as:

- Encapsulating/Decapsulating packets

- Load balancing packets

- Network Address Translation

- Network ACLs

Many more details on VFP can be found here.

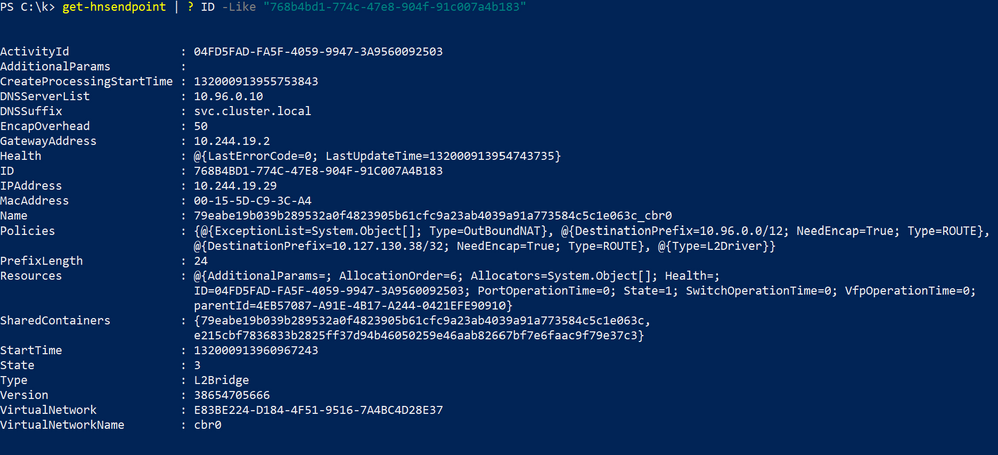

Our first starting point should be to check that all the HNS resources indeed exist. Here is an example screenshot that shows the HNS networking state for a cluster with kube-DNS service (10.96.0.10) and a sample Windows web service (10.104.193.123) backed by 2 endpoint DIPs ("768b4bd1-774c-47e8-904f-91c007a4b183", "048cd973-b5db-45a6-9c65-16dec22e871d"):

We can take a closer look at the network object representing a given endpoint DIP using Get-HNS* cmdlets (this even works for remote endpoints!).

The information listed here (DNSSuffix, IPAddress, Type, VirtualNetworkName, and Policies) should match what was passed in through the CNI config file.

Digging deeper, to view VFP rules we can use the inbox “vfpctrl” command-line tool. For example, to view the layers of the endpoint:

Similarly, to print the rules belonging to a specific layer (e.g. SLB_NAT_LAYER) that each packet goes through:

The information programmed into VFP should match what was specified in the CNI config file and HNS Policies.

Step 7: Analyze snapshot of network using CollectLogs.ps1

Now that we have familiarized ourselves with the state of the network and its basic constituents, let’s take a look at some common symptoms and correlate them with the likely locations of the culprit.

Our tool of choice to take a snapshot of our network is CollectLogs.ps1. It collects the following information (amongst a few other things):

| File | Contains |
| --- | --- |
| endpoint.txt | Endpoint information and HNS Policies applied to endpoints. |
| ip.txt | All NICs in all network compartments (and which compartment each is in) |
| network.txt | Information about HNS networks |
| policy.txt | Information about HNS policies (e.g. VIP -> DIP Load Balancers) |
| ports.txt | Information about vSwitch (ports) |
| routes.txt | Route tables |
| hnsdiag.txt | Summary of all HNS resources |
| vfpOutput.txt | Verbose dump of all the VFP ports used by containers listing all layers and associated rules |

Example #1: Inter-node communication Issues

L2bridge / Flannel (host-gw)

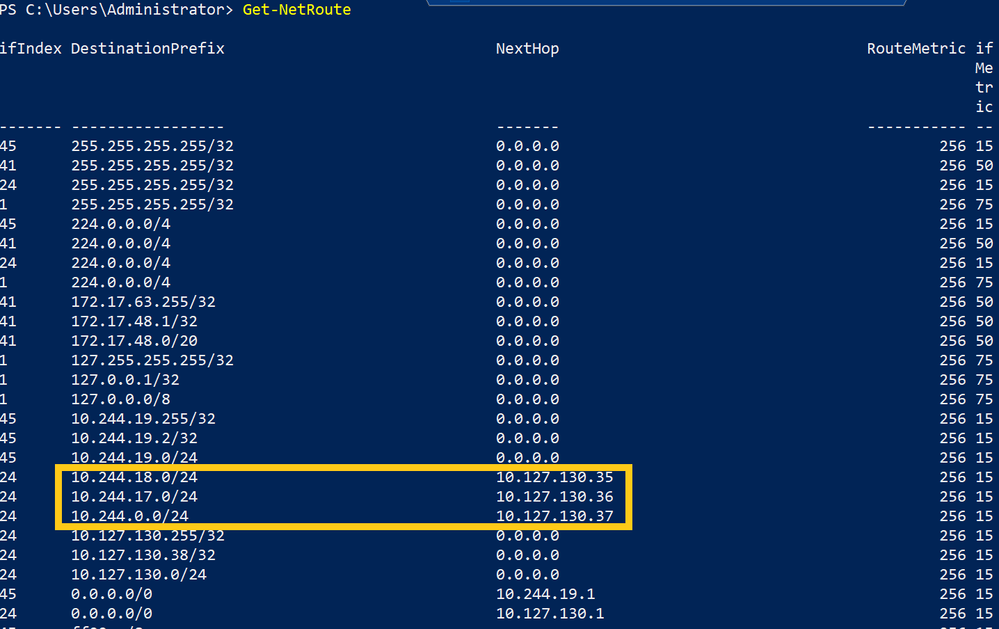

When dealing with inter-node communication issues such as pod-to-pod connectivity across hosts, it is important to check that static routes are programmed. This can be achieved by inspecting routes.txt or using Get-NetRoute:

There should be routes programmed for each pod subnet (e.g. 10.244.18.0/24) => container host IP (e.g. 10.127.130.35).
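The route check above can be expressed as a small comparison between the routes found on a node and the pod-subnet-to-host mapping you expect. This is a minimal sketch: the helper name `missing_routes` is hypothetical, the route list would have to be extracted from routes.txt or Get-NetRoute output by hand or by a separate parser, and the second subnet/host pair in the sample is invented for illustration.

```python
import ipaddress

def missing_routes(routes, expected):
    """Return the expected {pod subnet: host IP} entries that have no matching route.

    routes   -- iterable of (destination prefix, next hop) strings found on the node
    expected -- dict mapping each remote pod subnet to its container host IP
    """
    programmed = {
        (ipaddress.ip_network(prefix), ipaddress.ip_address(next_hop))
        for prefix, next_hop in routes
    }
    return {
        subnet: host
        for subnet, host in expected.items()
        if (ipaddress.ip_network(subnet), ipaddress.ip_address(host)) not in programmed
    }

# The first pair is the example from the text; the second is a hypothetical extra node.
routes_on_node = [("10.244.18.0/24", "10.127.130.35")]
expected = {"10.244.18.0/24": "10.127.130.35", "10.244.20.0/24": "10.127.130.40"}
print(missing_routes(routes_on_node, expected))
```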

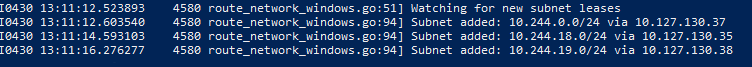

When using Flannel, users can also consult the FlannelD output to watch for the appropriate events for adding the pod subnets after launch:

Overlay (Flannel vxlan)

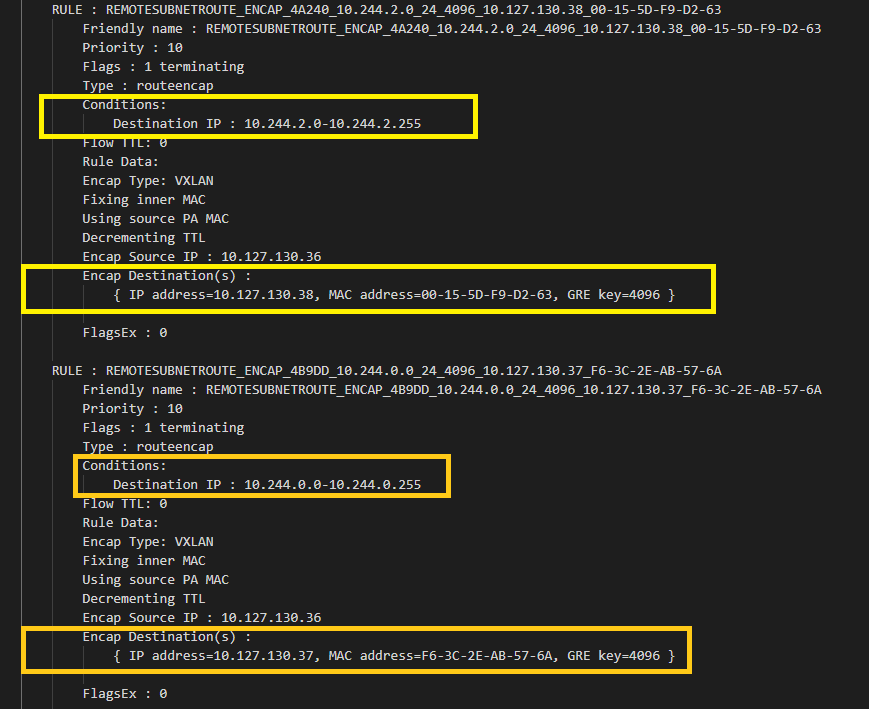

In overlay, inter-node connectivity is implemented using "REMOTESUBNETROUTE" rules in VFP. Instead of checking static routes, we can reference "REMOTESUBNETROUTE" rules directly in vfpOutput.txt, where each pod subnet (e.g. 10.244.2.0/24) assigned to a node should have its corresponding destination IP (e.g. 10.127.130.38) specified as the destination in the outer packet:

For additional details on inter-node container to container connectivity in overlay, please take a look at this video.

When can I encounter this issue?

One common configuration problem that manifests in this symptom is having mismatched networking configuration on Linux/Windows.

To double-check the network configuration on Linux, users can consult the CNI config file stored in /etc/cni/net.d/. In the case of Flannel on Linux, this file can also be embedded into the container, so users may need to exec into the Flannel pod itself to access it:

kubectl exec -n kube-system kube-flannel-ds-amd64-<someid> -- cat /etc/kube-flannel/net-conf.json

kubectl exec -n kube-system kube-flannel-ds-amd64-<someid> -- cat /etc/kube-flannel/cni-conf.json
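Once net-conf.json has been retrieved, its backend settings can be compared against what the Windows nodes expect. The sketch below assumes the usual Flannel layout with a `Backend` object containing `Type`, and it maps l2bridge to the host-gw backend and overlay to the vxlan backend as described in this post. The VNI 4096 and port 4789 values for vxlan are an assumption drawn from the upstream Kubernetes guidance for Windows nodes, not from this post; the function name `check_net_conf` is hypothetical.

```python
import json

def check_net_conf(text, expected_backend):
    """Return a list of mismatches between Flannel's net-conf.json and the expected backend."""
    backend = json.loads(text).get("Backend", {})
    problems = []
    if backend.get("Type") != expected_backend:
        problems.append(f"backend type is {backend.get('Type')!r}, expected {expected_backend!r}")
    if expected_backend == "vxlan":
        # Assumption: Windows overlay interoperability needs VNI 4096 and UDP port 4789.
        if backend.get("VNI") != 4096:
            problems.append(f"VNI is {backend.get('VNI')!r}, expected 4096")
        if backend.get("Port") != 4789:
            problems.append(f"Port is {backend.get('Port')!r}, expected 4789")
    return problems

# Hypothetical net-conf.json content without explicit VNI/Port settings.
linux_conf = '{"Network": "10.244.0.0/16", "Backend": {"Type": "vxlan"}}'
for problem in check_net_conf(linux_conf, "vxlan"):
    print(problem)
```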

Example #2: Containers cannot reach the outside world

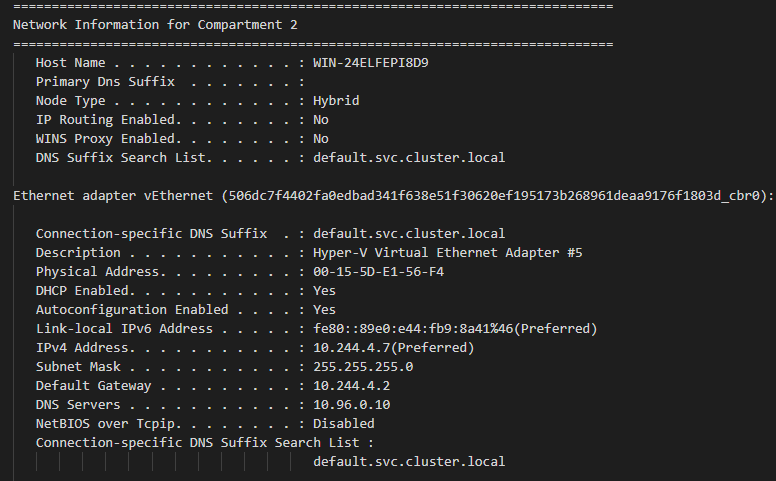

Whenever outbound connectivity does not work, one of the first starting points is to ensure that there exists a NIC in the container. For this, we can consult the “ip.txt” output and compare it with the output of a “docker exec <id> ipconfig /all” in the problematic (running) container itself:

In l2bridge networking (used by Flannel host-gw backend), the container gateway should be set to the .2 address exclusively reserved for the bridge endpoint (cbr0_ep) in the same pod subnet.

In overlay networking (used by Flannel vxlan backend), the container gateway should be set to the .1 address exclusively reserved for the DR (distributed router) vNIC in the same pod subnet.
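The two gateway conventions above are simple address arithmetic on the pod subnet. As a small illustration (the helper name `expected_gateway` is hypothetical), the expected value can be computed and then compared with the gateway reported by ipconfig in the container:

```python
import ipaddress

# Offsets from the pod subnet's network address, as described in the text:
# l2bridge reserves .2 for the bridge endpoint, overlay reserves .1 for the DR vNIC.
GATEWAY_OFFSET = {"l2bridge": 2, "overlay": 1}

def expected_gateway(pod_subnet, network_type):
    """Return the gateway address a container in pod_subnet should be configured with."""
    network = ipaddress.ip_network(pod_subnet)
    return str(network.network_address + GATEWAY_OFFSET[network_type])

print(expected_gateway("10.244.19.0/24", "l2bridge"))  # 10.244.19.2
print(expected_gateway("10.244.3.0/24", "overlay"))    # 10.244.3.1
```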

L2bridge

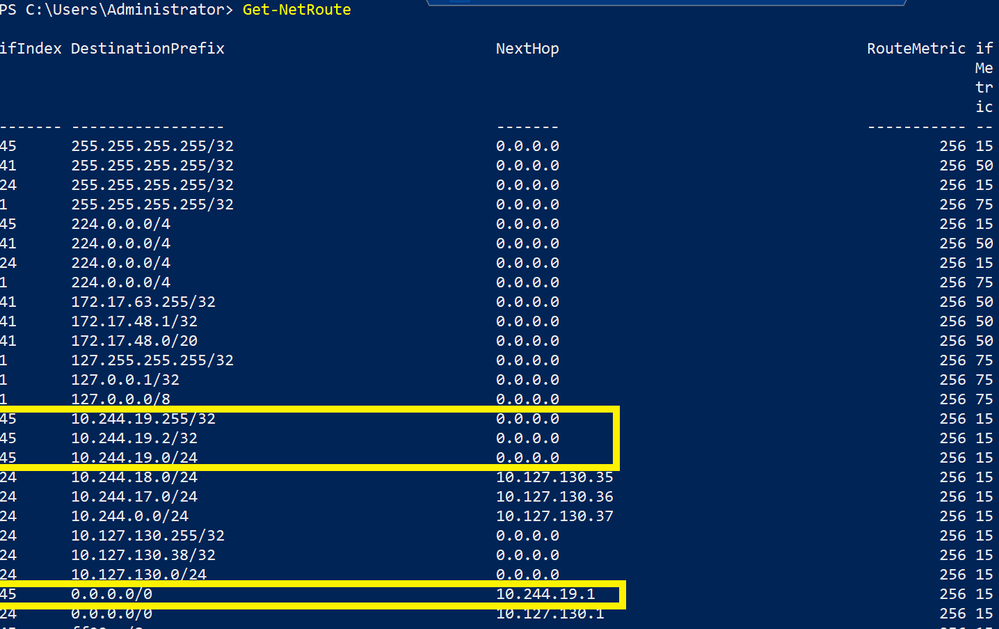

Going outside of the container, on l2bridge one should also verify that the route tables on the node itself are set up correctly for the bridge endpoint. Here is a sample with the relevant entries containing quad-zero routes for a node with pod subnet 10.244.19.0/24:

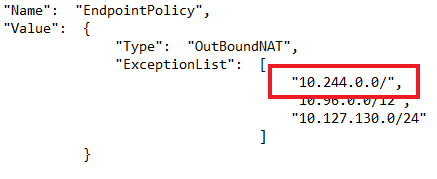

The next thing to check on l2bridge is that the OutboundNAT policy and the ExceptionList are programmed correctly. For a given endpoint (e.g. 10.244.4.7) we should verify in endpoint.txt that there exists an OutboundNAT HNS Policy and that the ExceptionList matches what we entered into the deployment scripts originally:

Finally, we can also consult the vfpOutput.txt to verify that the L2Rewrite rule exists so that the container MAC is rewritten to the host’s MAC as specified in the l2bridge container networking docs.

In the EXTERNAL_L2_REWRITE layer, there should be a rule which matches the container’s source MAC (e.g. "00-15-5D-AA-87-B8") and rewrites it to match the host’s MAC address (e.g. "00-15-5D-05-C3-0C"):

Overlay

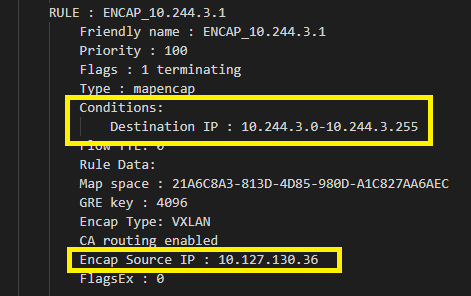

For overlay, we can check whether there exists an ENCAP rule that encapsulates outgoing packets correctly with the host’s IP. For example, for a given pod subnet (10.244.3.0/24) with host IP 10.127.130.36:

When can I encounter this issue?

One example configuration error for Flannel (vxlan) overlay that may result in failing east/west connectivity is failing to delete the old SourceVIP.json file whenever the same node is deleted and re-joined to a cluster.

NOTE: When deploying L2bridge networks on Azure, users also need to configure user-defined routes for each pod subnet allocated to a node for networking to work. Some users opt to use overlay in public cloud environments for that reason instead, where this step isn’t needed.

Example #3: Services / Load-balancing does not work

Let’s say we have created a Kubernetes service called “win-webserver” with VIP 10.102.220.146:

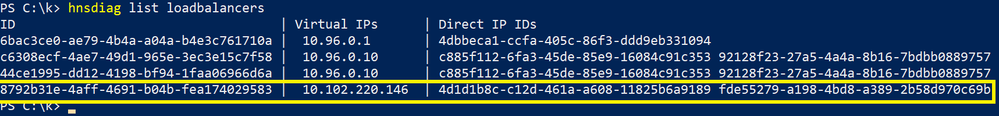

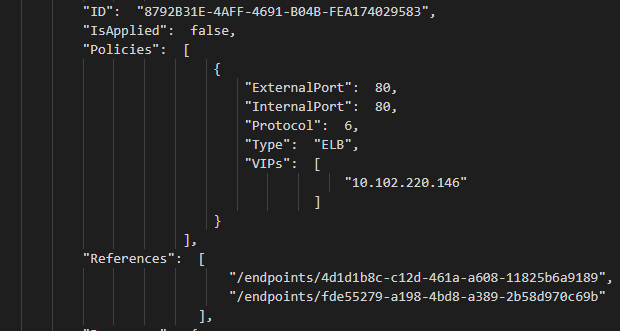

Load balancing is usually performed directly on the node itself by replacing the destination VIP (Service IP) with a specified DIP (pod IP). HNS Loadbalancers can be viewed using the “hnsdiag” command-line tool:

For a more verbose output, users can also inspect policy.txt to check for “ELB” policies (LoadBalancers) for additional information:

The next step usually consists of verifying that the endpoints (e.g. "4d1d1b8c-c12d-461a-a608-11825b6a9189") still exist in endpoint.txt and are reachable by IP from the same source:

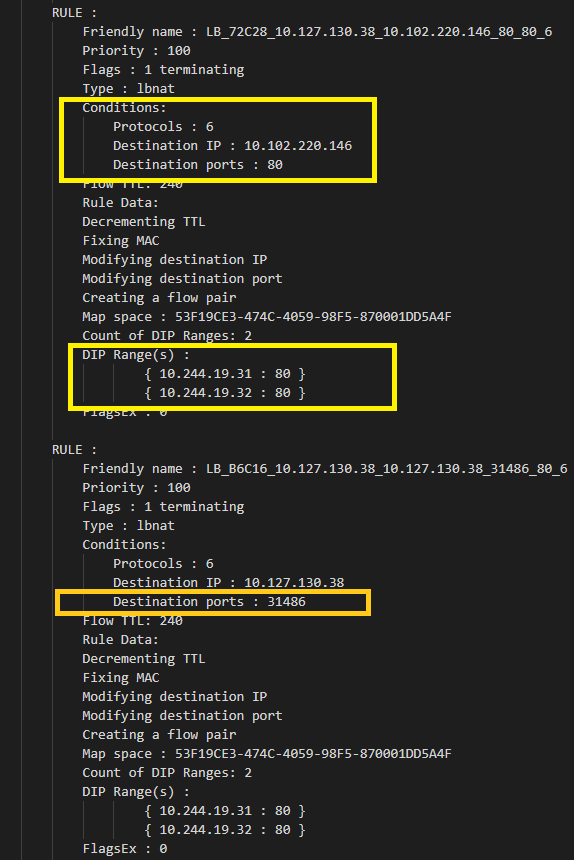

Finally, we can also check whether the VFP "lbnat" rules exist in the "LB" layer for our service IP 10.102.220.146 (with NodePort 31486):

When can I encounter this issue?

One possible issue that can cause erroneous load balancing is a misconfigured kube-proxy which is responsible for programming these policies. For example, one may fail to pass in the --hostname-override parameter, causing endpoints from the local host to be deleted.

NOTE that service VIP resolution from the Windows node itself is not supported on Windows Server 2019, but planned for Windows Server, version 1903.

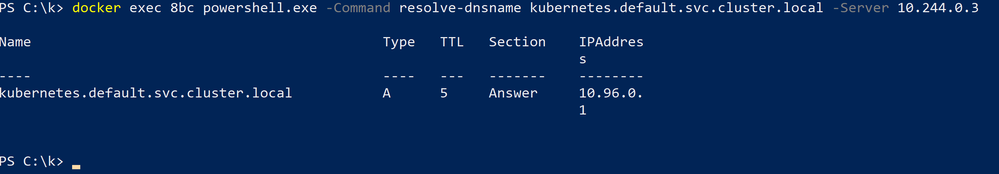

Example #4: DNS resolution is not working from within the container

For this example, let’s assume that the kube-DNS cluster addon is configured with service IP 10.96.0.10.

Failing DNS resolution is often a symptom of one of the previous examples. For example, (external) DNS resolution would fail if outbound connectivity isn’t present or resolution could also fail if we cannot reach the kube-DNS service.

Thus, the first troubleshooting step should be to analyze whether the kube-DNS service (e.g. 10.96.0.10) is programmed as an HNS LoadBalancer correctly on the problematic node:

Next, we should also check whether the DNS information is set correctly in the ip.txt entry for the container NIC itself:

We should also check whether it’s possible to reach the kube-DNS pods directly. If that works while resolution through the service IP fails, the problem likely lies in resolving the DNS service VIP itself. For example, assuming that one of the DNS pods has IP 10.244.0.3:

When can I encounter this issue?

One possible misconfiguration that results in DNS resolution problems is an incorrect DNS suffix or DNS service IP specified in the CNI config here and here.
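A quick way to rule this out is to compare the DNS block in the CNI config against the values the cluster actually uses. The sketch below assumes the CNI-style `dns` object with `nameservers` and `search` lists, located either at the top level or under a `delegate` object (as in Flannel-style configs); key names are matched case-insensitively because published samples vary in capitalization. The function name `check_dns` and the `svc.cluster.local` suffix are assumptions for illustration.

```python
import json

def check_dns(cni_text, service_ip, suffix):
    """Return a list of DNS mismatches between a CNI config and the expected cluster values."""
    conf = json.loads(cni_text)
    dns = conf.get("dns") or conf.get("delegate", {}).get("dns") or {}
    dns = {key.lower(): value for key, value in dns.items()}
    problems = []
    if service_ip not in dns.get("nameservers", []):
        problems.append(f"DNS service IP {service_ip} not in nameservers {dns.get('nameservers', [])}")
    if suffix not in dns.get("search", []):
        problems.append(f"DNS suffix {suffix} not in search list {dns.get('search', [])}")
    return problems

# Hypothetical config with a typo in the DNS service IP (10.96.0.1 instead of 10.96.0.10).
sample_conf = json.dumps({
    "cniVersion": "0.2.0", "name": "cbr0", "type": "flannel",
    "delegate": {"type": "win-bridge",
                 "dns": {"Nameservers": ["10.96.0.1"], "Search": ["svc.cluster.local"]}},
})
print(check_dns(sample_conf, "10.96.0.10", "svc.cluster.local"))
```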

Step 8: Capture packets and analyze flows

The last step requires in-depth knowledge of the operations that packets from containers undergo and the network flow. As such, it is also the most time-consuming to perform and will vary depending on the observed issue. At a high level, it consists of:

- Running startpacketcapture to start the trace

- Reproducing the issue – e.g. sending packets from source to destination

- Running stoppacketcapture to stop the trace

- Analyzing correct processing by the data path at each step

Here are a few example animations that showcase some common container network traffic flows:

Pod to Pod:

Pod to Internet:

Animated visualization showing pod to outbound connectivity

Pod to Service:

Showing how to analyze and debug these packet captures will be done in future part(s) of this blog post series through scenario-driven videos showing packet captures for supported networking flows on Windows.

For a quick teaser, here is a video recording taken at KubeCon that shows debugging an issue live using startpacketcapture.cmd: https://www.youtube.com/watch?v=tTZFoiLObX4&t=1733

Summary

We looked at:

- Automated scripts that can be used to verify basic connectivity and correct installation of Kubernetes

- HNS networking objects and VFP rules used to network containers

- How to query event logs from different Kubernetes components

- How to analyze the control path at a high level for common configuration errors using CollectLogs.ps1

- Typical network packet flows for common connectivity scenarios

Performing the above steps can go a long way towards understanding the underlying issue for an observed symptom, improving efficacy when it comes to implementing workarounds, and accelerating the speed at which fixes are implemented by others, since the initial investigation work has already been done.

What’s next?

In the future, we will go over supported connectivity scenarios and specific steps on how to troubleshoot each one of them in-depth. These will build on top of the materials presented here but also contain videos analyzing packet captures, data-path analysis as well as other traces (e.g. HNS tracing).

We are looking for your feedback!

Last but not least, the Windows container networking team needs your feedback! What would you like to see next for container networking on Windows? Which bugs are preventing you from realizing your goals? Share your voice in the comments below, or fill out the following survey and influence our future investments!

Thank you for reading,

David Schott

*Special thanks to Dinesh Kumar Govindasamy (Microsoft) for his fantastic work creating & presenting many of the materials used as a basis for this blog at KubeCon Shanghai '18!
