Teaming in Azure Stack HCI

Windows Server currently has two inbox teaming mechanisms with two very different purposes. In this article, we’ll describe several reasons why you should use Switch Embedded Teaming (SET) for Azure Stack HCI scenarios, and we’ll discuss several long-held teaming myths. We’d love to hear your feedback in the comments below. Let's get started!

In Windows Server 2012 we released LBFO (Load Balancing and Failover) as an inbox teaming mechanism, and many customers use it to provide load balancing and failover between network adapters. Since then, the rise of software-defined storage and software-defined networking has brought the performance and compatibility challenges of the LBFO architecture (outlined in this article) to the forefront, and those challenges required a change in direction.

This new direction is called Switch Embedded Teaming (SET) and was introduced in Windows Server 2016. SET is available when Hyper-V is installed on any Server OS (Windows Server 2016 and higher) and Windows 10 version 1809 (and higher). You’re not required to run virtual machines to use SET, but the Hyper-V role must be installed.
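
As a minimal sketch of what this looks like on a Windows Server host, the commands below install the Hyper-V role and then create a virtual switch with an embedded team. The adapter names `NIC1` and `NIC2` and the switch name `SETswitch` are placeholders chosen for illustration, not values from this article.

```powershell
# Install the Hyper-V role (Windows Server). The host restarts to finish the install.
Install-WindowsFeature -Name Hyper-V -IncludeManagementTools -Restart

# After the restart: create a vSwitch with an embedded team.
# 'NIC1' and 'NIC2' are placeholder adapter names; list yours with Get-NetAdapter.
New-VMSwitch -Name 'SETswitch' -NetAdapterName 'NIC1', 'NIC2' -EnableEmbeddedTeaming $true -AllowManagementOS $true

# Confirm the team members and teaming settings.
Get-VMSwitchTeam -Name 'SETswitch' | Format-List
```

No virtual machines are created here; the switch and its team exist on their own.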

In summary, LBFO is our older teaming technology that will not see future investment, is not compatible with numerous advanced capabilities, and has been exceeded in both performance and stability by our new technology (SET). We'd like to discuss why you should move off LBFO for virtualized and cloud scenarios. Let's dig into this paragraph a bit.

LBFO is our older teaming technology that will not see future investment

With the intent to bring software-defined technologies like SDNv2 and containers to Windows Server, it became clear that we needed an alternative teaming solution and so we set off creating SET, circa 2014. Simultaneously reaching feature parity and stability with LBFO took time; several early adopters of SET will remember some of these pains. However, SET’s stability, performance, and features have now far surpassed LBFO.

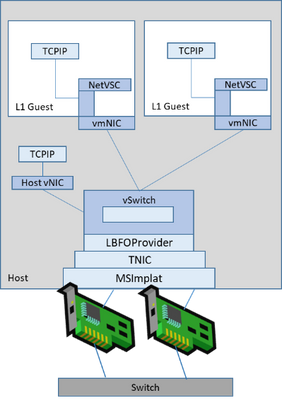

All new features released since Windows Server 2016 (see below) were developed and tested with SET in mind. This includes all Azure Stack HCI solutions you may have purchased; Azure Stack HCI is not tested or certified with LBFO. This is largely a matter of development and testing simplicity. Without diving too far into unimportant details, LBFO teams adapters inside NDIS, which is a large and complex component whose roots date back to Windows 95 (updated considerably since then, of course). If your system has a NIC, you’re using NDIS. In the picture shown above, each component below the vSwitch is part of NDIS.

The size and complexity of the scenarios that NDIS covers made for very complex testing requirements, which virtualized and software-defined technologies only compounded, and this considerably hampered feature innovation. You might think that this is just a Microsoft problem, but it affects NIC vendors' driver development time and stability as well.

All in all, we’re not focusing on LBFO much these days, particularly as software-defined Windows Server networking scenarios become more exotic with the rise of containers, software-defined networking, and much more. There’s a faster, more stable teaming solution: Switch Embedded Teaming.

LBFO is not compatible with several advanced capabilities

Here’s a smattering of scenarios and features that are supported with SET but NOT LBFO:

Windows Admin Center - WAC is the de facto management tool for Windows Server and Azure Stack HCI, with millions of nodes under management. You can create and manage a SET team for a single host, or deploy a SET team to multiple hosts with the new Cluster Creation UI, which we released at Microsoft Ignite this year to help you deploy Azure Stack HCI solutions (watch the session, try it out, and give us feedback).

LBFO is not available for configuration in Windows Admin Center.

RDMA Teaming - Only SET can team RDMA adapters. RDMA is used, for example, with Storage Spaces Direct (S2D), which requires a reliable, high-bandwidth, low-latency network connection between nodes. High bandwidth? Low latency? That’s RDMA’s bag, so it is the recommended pattern with S2D. Reliability? That’s SET's claim to fame, so these two are a logical pairing.
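
For illustration, here is a rough sketch of adding RDMA-enabled host vNICs on top of a SET switch. It assumes a SET switch named `SETswitch` already exists and that the physical adapters support RDMA; the vNIC names `SMB1` and `SMB2` are placeholders.

```powershell
# Placeholder names: switch 'SETswitch', host vNICs 'SMB1' and 'SMB2'.
Add-VMNetworkAdapter -ManagementOS -SwitchName 'SETswitch' -Name 'SMB1'
Add-VMNetworkAdapter -ManagementOS -SwitchName 'SETswitch' -Name 'SMB2'

# Host vNICs surface as "vEthernet (<name>)"; enable RDMA on each of them.
Enable-NetAdapterRdma -Name 'vEthernet (SMB1)', 'vEthernet (SMB2)'

# Check which adapters (physical and virtual) report RDMA as enabled.
Get-NetAdapterRdma | Format-Table Name, InterfaceDescription, Enabled
```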

Guest RDMA: SET supports RDMA into a virtual machine. This doesn’t work with LBFO for two reasons:

- RDMA adapters cannot be teamed with LBFO; this applies to both host adapters and virtual adapters.

- RDMA uses SMB multichannel which requires multiple adapters to balance traffic across. Since you can’t assign a vNIC to pNIC affinity with LBFO, neither the SMB nor non-SMB traffic can be made highly available.

Guest Teaming is a strange one; you could add multiple virtual NICs to a Hyper-V VM; inside the VM, you could use LBFO to team the virtual NICs. However, you cannot affinitize a virtual NIC (vNIC) to a physical NIC (pNIC), so it’s possible that both vNICs added in the VM are sending and receiving traffic out of the same pNIC. If that pNIC fails, you lose both of your virtual NICs.

SET allows you to map each vNIC to a pNIC to ensure that they don’t overlap, ensuring that a Guest Team is able to survive an adapter outage.
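
A minimal sketch of that mapping for a guest team follows. The VM name `VM01`, the vNIC names, the switch name `SETswitch`, and the physical adapter names `NIC1` and `NIC2` are all placeholders.

```powershell
# Placeholder names: VM 'VM01', switch 'SETswitch', physical adapters 'NIC1' and 'NIC2'.
# Give each vNIC a distinct name so it can be mapped individually.
Add-VMNetworkAdapter -VMName 'VM01' -Name 'vNIC1' -SwitchName 'SETswitch'
Add-VMNetworkAdapter -VMName 'VM01' -Name 'vNIC2' -SwitchName 'SETswitch'

# Pin each vNIC to a different physical team member.
Set-VMNetworkAdapterTeamMapping -VMName 'VM01' -VMNetworkAdapterName 'vNIC1' -PhysicalNetAdapterName 'NIC1'
Set-VMNetworkAdapterTeamMapping -VMName 'VM01' -VMNetworkAdapterName 'vNIC2' -PhysicalNetAdapterName 'NIC2'

# Review the resulting mappings.
Get-VMNetworkAdapterTeamMapping -VMName 'VM01'
```

With this in place, the loss of `NIC1` affects only `vNIC1`, so a team built inside the guest still has a working member.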

Microsoft Software Defined Networking - SDN was first released in its modern form in Windows Server 2016 and requires a virtual switch extension called the Virtual Filtering Platform (VFP). VFP is the brains behind SDN and is the same extension that runs our public cloud, Azure. VFP can only be added to a SET team.

This means that any of the SDN features (which are included with a Datacenter Edition license) like the Software Load Balancer, Gateways, Distributed Firewall (ACLs), and our modern network QoS capability are also unavailable if you’re using LBFO.

Container Networking - Containers rely on a service called the Host Network Service (HNS). HNS also leverages VFP and, as mentioned in the SDN section, VFP can only be added to a Switch Embedded Team (SET). For more information on Container Networking, please see this link.

Virtual Machine Multi-Queues - VMMQ is a critical performance feature for Azure Stack HCI. VMMQ allows you to assign multiple VMQs to the same virtual NIC. Without it, you rely on expensive software spreading operations (the OS spreads packets across multiple CPUs without hardware (NIC) assistance), which greatly increase CPU utilization on the host and reduce the number of virtual machines you can run.

Moreover, if your vNIC doesn’t get a VMQ, all traffic is processed by the default queue. With SET you can assign multiple VMQs to the default queue which can be shared as needed by any vNIC allowing more VMs to get the bandwidth they need.
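
As a hedged sketch, VMMQ can be requested per vNIC and for the default queue as shown below. The names `VM01` and `SETswitch` are placeholders, and the queue-pair count of 4 is only an illustrative value; what the NIC and driver actually grant depends on the hardware.

```powershell
# Placeholder names: VM 'VM01', switch 'SETswitch'. The value 4 is illustrative only.
# Request VMMQ with multiple queue pairs for the VM's virtual NICs.
Set-VMNetworkAdapter -VMName 'VM01' -VmmqEnabled $true -VmmqQueuePairs 4

# Let the default queue (shared by vNICs without their own VMQ) use VMMQ as well.
Set-VMSwitch -Name 'SETswitch' -DefaultQueueVmmqEnabled $true -DefaultQueueVmmqQueuePairs 4

# Inspect the VMMQ settings on the vNICs and the queues allocated on the physical adapters.
Get-VMNetworkAdapter -VMName 'VM01' | Format-List Name, Vmmq*
Get-NetAdapterVmqQueue
```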

In this video, you can see the performance (throughput) benefits of Switch Embedded Teaming over that of LBFO. The video demonstrates a 2x throughput improvement with SET over LBFO, while consuming ~10% additional CPU (a result of double the throughput).

Dynamic VMMQ - d.VMMQ won’t work either. Dynamic VMMQ is an intelligent queue scheduling algorithm for VMMQ that recognizes when CPU cores are overworked by network traffic and reassigns that network traffic processing to other cores automatically so your workloads (e.g. VMs, applications, etc.) can run without competing for processor time.

Here's an example of some of the benefits of Dynamic VMMQ. In the video, you can see the host spending CPU resources processing packets for a specific virtual NIC. When a competing workload begins on the system (which would prevent the virtual NIC from reaching maximum performance), we automatically tune the system by moving one of the workloads to an available processor.

RSC in the vSwitch is an acceleration that coalesces segments destined for the same virtual NIC into a larger segment.

Outbound network traffic is segmented to fit the MTU size of the physical network (default of ~1500 bytes). However, inbound traffic can be coalesced into one big segment. That one big segment takes far less processing than multiple small segments, so once traffic is received by the host, we can combine the segments and deliver them to the vNIC all at once. SET was made aware of RSC coalescing and supports this acceleration as of Windows Server 2019.
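
To check or change this setting on a Windows Server 2019 host, something like the following should work. The switch name `SETswitch` is a placeholder.

```powershell
# Placeholder switch name: 'SETswitch'.
# Show the RSC-related properties of the virtual switch.
Get-VMSwitch -Name 'SETswitch' | Select-Object Name, *Rsc*

# Turn RSC in the vSwitch on (use $false to turn it off).
Set-VMSwitch -Name 'SETswitch' -EnableSoftwareRsc $true
```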

We’re continuing to improve this feature for even better performance in the next version of Windows Server and Azure Stack HCI by enabling RSC in the vSwitch to extend over the VMBus. In the video below, we show one VM sending traffic to another VM with the improved acceleration disabled. This uses only the original RSC in the vSwitch capabilities from Windows Server 2019.

Next we enable the Windows Server vNext improvements; throughput is improved by ~17 Gbps while CPU resourcing is reduced by approximately 12% (20 cores on the system). This type of traffic pattern is specifically valuable for container scenarios that reside on the same host.

LBFO has been exceeded in both performance and stability by SET

Note: Guest RDMA, RSC in the vSwitch, VMMQ, and Dynamic VMMQ belong in this category as well.

Certified Azure Stack and Azure Stack HCI solutions test only SET

If all that wasn’t enough, both Microsoft and our partners validate and certify their solutions on SET, not LBFO. If you bought a certified Azure Stack HCI solution from one of our partners OR a standard or premium logo’d NIC, it was tested and validated with Switch Embedded Teaming. That means all certification tests were run with SET.

Link Aggregation Control Protocol (LACP)

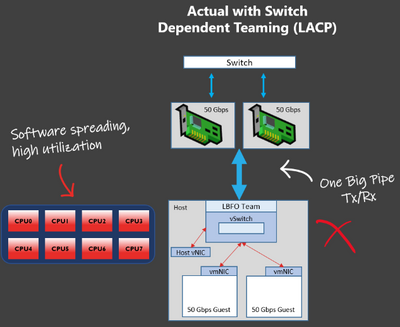

OK, so this one is a little counter-intuitive. LACP allows port-channels, or switch-dependent teams, to send traffic to the host over more than one physical port simultaneously.

For native hosts this means that every port in the port-channel can send traffic simultaneously – for the system on the right with 2 x 50 Gbps NICs, it looks like one big pipe with a native host potentially receiving 100 Gbps. Naturally, you'd expect that this capability could extend to virtual NICs as well.

But things change with virtualization. When the traffic gets to the host, the NICs need to interrupt multiple, independent processors to exceed what a single CPU core can process – This is what VMMQ does, and as mentioned, VMMQ does not work with LBFO.

LBFO limits you to a single VMQ, so despite having (in the picture) 100 Gbps of inbound bandwidth, you would only receive about 5 Gbps per virtual NIC (or up to ~20 Gbps per vNIC at the painful expense of OS-based software spreading, which consumes CPU cycles that could otherwise be used for running virtual machine workloads).

With SET, switch-independent teaming, and the hardware assistance of VMMQ and enough CPUs in the system, you could receive all 100 Gbps of data into the host.

In summary, LACP provides no throughput benefits for Azure Stack HCI scenarios, incurs higher CPU consumption, and cannot auto-tune the system to avoid competition between workloads for virtualized scenarios (Dynamic VMMQ).

Asymmetric Adapters

While we're myth-busting, let’s talk about adapter symmetry, which describes the degree to which adapters have the same make, model, speed, and configuration. SET requires adapter symmetry for Microsoft support. Usually the easiest way to identify this symmetry is by the device Interface Description (with PowerShell, use Get-NetAdapter). If the interface descriptions match (with the exception of the unique number given to each adapter, e.g. Intel NIC #1, Intel NIC #2, etc.), then the adapters are symmetric.
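
A quick way to eyeball symmetry is sketched below. The grouping strips a trailing ` #N` suffix from the interface description, which assumes the numbering convention described above; it is a convenience check, not a formal support test.

```powershell
# List physical adapters side by side for comparison.
Get-NetAdapter -Physical |
    Sort-Object InterfaceDescription |
    Format-Table Name, InterfaceDescription, LinkSpeed, DriverVersion

# Group adapters by description (minus any trailing " #N") and link speed.
# Adapters that land in the same group are candidates for a symmetric team.
Get-NetAdapter -Physical |
    Group-Object { $_.InterfaceDescription -replace '\s*#\d+$', '' }, LinkSpeed |
    Format-Table Count, Name -AutoSize
```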

Prior to Windows Server 2016, conventional wisdom stated that you should use different NICs with different drivers in a team. The thinking was that if one driver had an issue, another team member would survive, and the team would remain up. This is a common benefit customers cite in favor of LBFO: it supports asymmetric adapters.

However, two drivers mean twice as many things can go wrong, which in fact increases the likelihood of a problem. In our review of customer support cases, a properly designed infrastructure with symmetric adapters is far more stable. As a result, support for asymmetric teams is no longer a differentiator for LBFO, nor do we recommend it for Azure Stack HCI scenarios where reliability is the #1 requirement.

LBFO for management adapters

Some customers I’ve worked with have asked if they should use LBFO for management adapters when the vSwitch is not attached. Our recommendation is to always use SET whenever available. A management adapter’s goal in life is to be stable, and we see fewer support cases with SET.

To be clear, if the adapter is not attached to a virtual switch, LBFO is acceptable. However, you should endeavor to use SET whenever possible due to the support reasons outlined in this article.

vSwitch on LBFO Deprecation Status

Recently, we publicly announced our plans to deprecate the use of LBFO with the Hyper-V virtual switch. Moving forward, and due to the various reasons outlined in this article, we have decided to block the binding of the vSwitch on LBFO.
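
To see whether a host is affected, a rough check like the one below can list virtual switches that are bound to an LBFO team interface. It assumes the switch's `NetAdapterInterfaceDescription` matches the interface description of the LBFO team NIC, which is how this sketch ties the two together; treat the output as a starting point rather than a definitive inventory.

```powershell
# For each LBFO team on the host, find vSwitches bound to one of its team interfaces.
foreach ($team in (Get-NetLbfoTeam)) {
    $teamNics = Get-NetLbfoTeamNic -Team $team.Name

    Get-VMSwitch |
        Where-Object { $teamNics.InterfaceDescription -contains $_.NetAdapterInterfaceDescription } |
        Select-Object Name, NetAdapterInterfaceDescription,
            @{ Name = 'LbfoTeam'; Expression = { $team.Name } }
}
```

An empty result suggests no vSwitch is currently attached to an LBFO team on that host.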

Prior to upgrading from Windows Server 2019 to vNext, or if you have a fresh install of vNext, you will need to convert any LBFO team that is attached to a Hyper-V virtual switch to a SET team. To make this simpler, we're releasing a tool (available on the PowerShell gallery) called Convert-LBFO2SET.

You can install this tool using the command:

Install-Module Convert-LBFO2SET

Or for disconnected systems:

Save-Module Convert-LBFO2SET -Path C:\SomeFolderPath

Please see the wiki for instructions on how to use the tool. Here's an example where we convert a system with 10 host vNICs, 10 generation 1 VMs, and 10 generation 2 VMs.

Summary

LBFO remains our teaming solution when Hyper-V is not installed. If, however, you are running virtualized or cloud scenarios like Azure Stack HCI, you should give Switch Embedded Teaming serious consideration. As we’ve described in this article, SET has been the Microsoft recommended teaming solution and focus since Windows Server 2016, as it brings better performance, stability, and feature support compared to LBFO.

Are there other questions you have about SET and LBFO? Please submit your questions in the comments below!

Thanks for reading,

Dan “All SET for Azure Stack HCI” Cuomo