Synthetic Accelerations in a Nutshell – Windows Server 2016

Hi folks,

Dan Cuomo back for our next installment in this blog series on synthetic accelerations. Windows Server 2016 marked an inflection point in the synthetic acceleration world on Windows, so in this article we’ll talk about the architectural changes, new capabilities, and changes in configuration requirements compared to the last couple of operating systems.

Before we begin, here are the pointers to the previous blogs:

- Synthetic Accelerations in a Nutshell – Windows Server 2012

- Synthetic Accelerations in a Nutshell - Windows Server 2012 R2

Keep in mind that due to changes in Windows Server 2016, many of the details in Windows Server 2012/R2 become irrelevant while some become even more important. For example, vRSS becomes more important, while the benefits of Dynamic VMQ, as originally implemented in Windows Server 2012 R2, are surpassed in this release. We’ll wrap up this series in a future post that covers Windows Server 2019 (and the new and improved Dynamic VMMQ)!

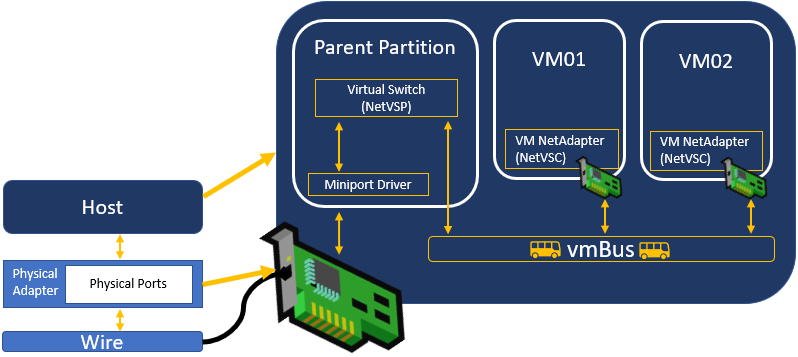

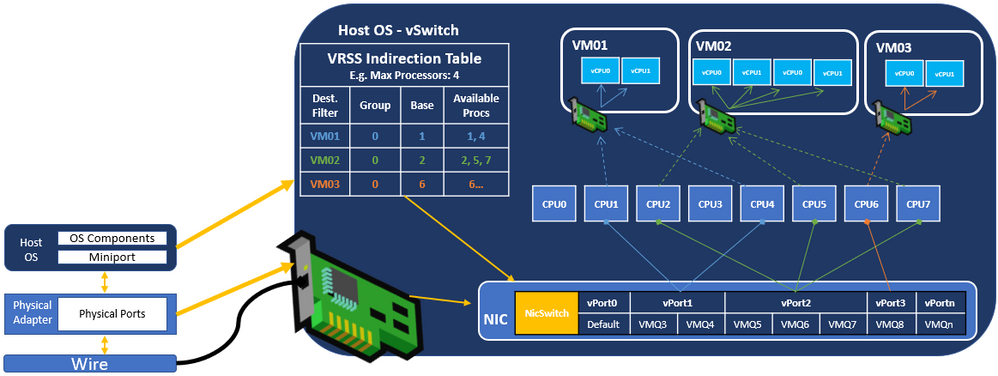

But before we get to the grand finale in Windows Server 2019, let’s talk about the groundwork laid in Windows Server 2016 and the big advances it brought in synthetic (through the virtual switch) network performance. As a quick review, this is the synthetic datapath; all ingress traffic must traverse the virtual switch (vSwitch) in the parent partition before being received by the guest:

NIC Architecture in Windows Server 2016

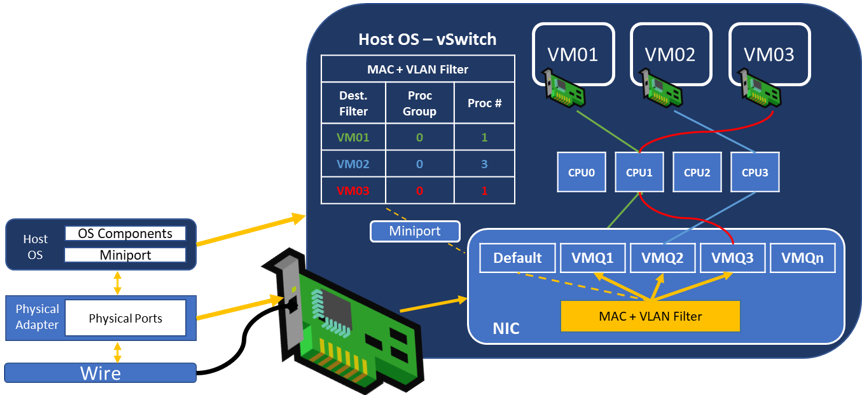

Windows Server 2016 brought a new architecture in the NIC that affects the implementation of VMQ. If you read the article on Windows Server 2012, you may remember that the NIC creates a filter based on each vmNIC’s MAC and VLAN combination on the Hyper-V vSwitch. Ergo, every MAC and VLAN combination registered on the Hyper-V vSwitch would be passed to the NIC with a request that a VMQ be mapped.

Note: Well, not exactly every combination. VMQs must be requested using the virtual machine properties, so only VMs that have the appropriate properties are passed to the NIC for allocation of a queue. The rest, as you may recall, land in the default queue.
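To make the Legacy VMQ behavior concrete, here is a toy Python model of it. The class `LegacyVmqNic` and its methods are hypothetical illustrations, not a real driver or Windows API: each requested (MAC, VLAN) pair gets its own queue until the NIC runs out, and anything unmatched falls back to the default queue (modeled here as queue 0).

```python
from typing import Dict, Optional, Tuple

DEFAULT_QUEUE = 0  # queue 0 stands in for the shared default queue in this toy model


class LegacyVmqNic:
    """Toy model of Legacy VMQ: one hardware queue per (MAC, VLAN) filter."""

    def __init__(self, max_queues: int) -> None:
        self.max_queues = max_queues
        self.filters: Dict[Tuple[str, int], int] = {}
        self._next_queue = 1  # queue 0 is reserved for the default queue

    def request_vmq(self, mac: str, vlan: int) -> Optional[int]:
        """Called only for vmNICs whose VM properties request a VMQ."""
        if self._next_queue > self.max_queues:
            return None  # out of queues: this vmNIC stays on the default queue
        queue = self._next_queue
        self.filters[(mac.lower(), vlan)] = queue
        self._next_queue += 1
        return queue

    def classify(self, dst_mac: str, vlan: int) -> int:
        """Return the receive queue for an incoming frame."""
        return self.filters.get((dst_mac.lower(), vlan), DEFAULT_QUEUE)
```

Note that the filter key is the MAC and VLAN together, so the same MAC on a different VLAN lands in the default queue.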

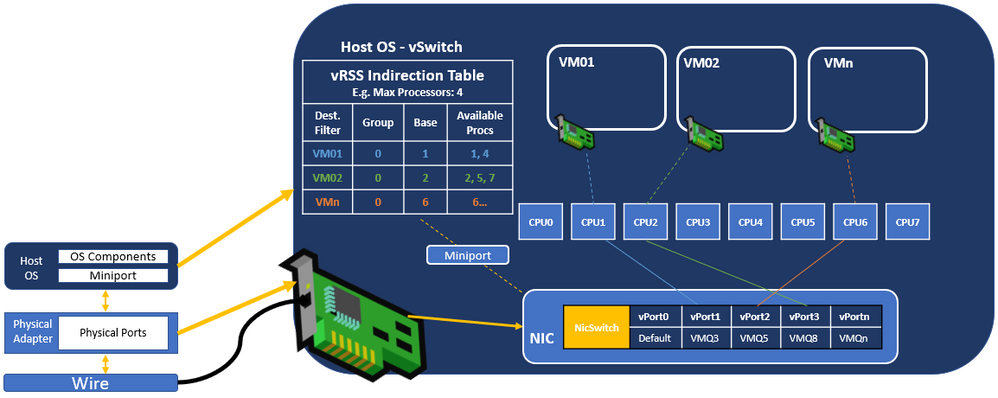

Now, however, some added intelligence in the form of an embedded Ethernet switch (NIC Switch) has been built into physical network adapters. When Windows detects that a NIC has a NIC Switch, it asks the NIC to map a NIC Switch port (vPort) to a queue (instead of assigning a queue to the MAC + VLAN filter mentioned previously). Here’s a look at the Legacy VMQ (MAC + VLAN filtered) architecture:

Here’s the new architecture. If you look at the NIC at the bottom of the picture, you can see a queue is now mapped to a vPort and that vPort maps to a processor.
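The difference from Legacy VMQ is an extra level of indirection: a frame is switched to a vPort first, and the queue (and its processor) hangs off that vPort. Below is a hypothetical Python sketch of that lookup. `NicSwitch`, `VPort`, and the idea of a default vPort 0 are assumptions made for illustration only. Each vPort holds a single queue here, which is the situation before VMMQ.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class VPort:
    """A NIC Switch port; here it owns a list of (queue_id, processor) pairs."""
    port_id: int
    queues: List[Tuple[int, int]] = field(default_factory=list)


class NicSwitch:
    """Hypothetical model: switch the frame to a vPort, then use that vPort's queue."""

    def __init__(self) -> None:
        # (MAC, VLAN) -> vPort id; used to pick the vPort, not the queue directly
        self.mac_table: Dict[Tuple[str, int], int] = {}
        # vPort 0 stands in for the default vPort/queue (an assumption of this sketch)
        self.vports: Dict[int, VPort] = {0: VPort(0, [(0, 0)])}

    def create_vport(self, port_id: int, mac: str, vlan: int,
                     queue_id: int, processor: int) -> None:
        """Create a vPort for a vmNIC and map one queue (and its processor) to it."""
        self.vports[port_id] = VPort(port_id, [(queue_id, processor)])
        self.mac_table[(mac.lower(), vlan)] = port_id

    def receive(self, dst_mac: str, vlan: int) -> Tuple[int, int, int]:
        """Return (vport_id, queue_id, processor) for an incoming frame."""
        port_id = self.mac_table.get((dst_mac.lower(), vlan), 0)
        queue_id, processor = self.vports[port_id].queues[0]
        return port_id, queue_id, processor
```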

In previous operating systems, the NIC Switch was used only when SR-IOV was enabled on the virtual switch. However, in Windows Server 2016 we split the use of the NIC Switch away from SR-IOV and now leverage it to enable some great advantages.

vRSS without the offload

If you’re running Windows Server 2016, you’re likely NOT in this exact situation. However, this section is important for understanding the great leap in performance you’ll see in the next section (so don’t skip this section 😊).

Virtual Receive Side Scaling (vRSS) was first introduced in Windows Server 2012 R2. As a review, vRSS has two primary responsibilities on the host:

- Creating the mapping of VMQs to processors (known as the indirection table)

- Packet distribution onto processors
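Here is a rough Python sketch of those two responsibilities. The function names are hypothetical and the table size of 128 is an assumption for illustration. Real RSS uses a keyed Toeplitz hash, and `zlib.crc32` stands in for it here. Building the table is a one-time mapping. Distribution is the per-packet lookup that, without the offload, runs in software on the host.

```python
import zlib
from typing import List, Tuple

Flow = Tuple[str, str, int, int]  # (src_ip, dst_ip, src_port, dst_port)


def build_indirection_table(processors: List[int], size: int = 128) -> List[int]:
    """Fill the indirection table round-robin with the processors vRSS may use."""
    if not processors:
        raise ValueError("need at least one processor")
    return [processors[i % len(processors)] for i in range(size)]


def flow_hash(flow: Flow) -> int:
    """Stand-in hash; real RSS uses a Toeplitz hash with a secret key."""
    return zlib.crc32("|".join(map(str, flow)).encode())


def pick_processor(table: List[int], flow: Flow) -> int:
    """Packet distribution: index the table with the hash modulo its size.

    When the table size is a power of two, this equals masking the low bits.
    Every packet of a flow hashes the same way, so it stays on one processor.
    """
    return table[flow_hash(flow) % len(table)]
```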

With Legacy VMQ in Windows Server 2012 R2, vRSS handles packet distribution by performing RSS spreading onto additional processors, surpassing the throughput that a single VMQ and CPU could deliver.

While this enabled much improved throughput over Windows Server 2012, the packet distribution (RSS spreading) was performed in software and incurred CPU overhead (this is covered in the 2012 R2 article, so I won’t spend much time on it here). This capped the potential throughput to a virtual NIC at about 15 Gbps in Windows Server 2012 R2.

Note: If you simply compare vRSS and VMQ on Windows Server 2012 R2 to Windows Server 2016, you’ll notice that your throughput with the same accelerations enabled naturally improves. This is for a couple of reasons but predominantly boils down to the improvements in drivers and operating system efficiency. This is one benefit of upgrading to the latest operating system and installing the latest drivers/firmware.

VMMQ

(I said don’t skip the last section! If you did, go back and read that first!)

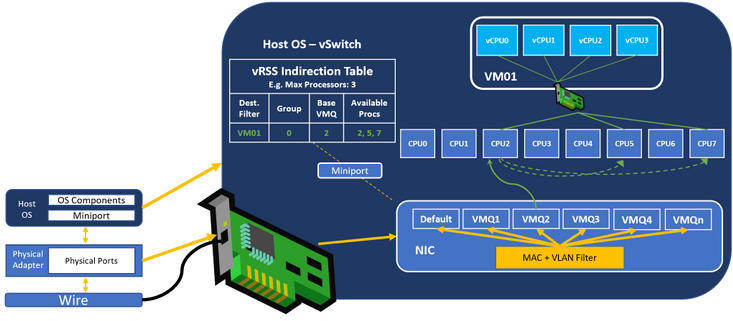

The big advantage of the NIC Switch is the ability to offload the packet distribution function performed by vRSS to the NIC. This capability is known as Virtual Machine Multi-Queues (VMMQ) and allows us to assign multiple VMQs to the same vPort in the NIC Switch, which you can see in the picture below.

Now instead of a messy software solution that incurs overhead on the CPU and processes the packets in multiple places, the NIC simply places the packet directly onto the intended processor. RSS hashing is used to spread the incoming traffic between the queues assigned to the vPort.
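Compared with the software path, VMMQ collapses "pick a queue" and "pick a processor" into a single step in the NIC. Here is a hypothetical Python model of one VMMQ vPort. `VmmqVPort` is an illustrative name, `zlib.crc32` again stands in for the real RSS hash, and the model ignores details such as a per-vPort indirection table.

```python
import zlib
from typing import List, Tuple

Flow = Tuple[str, str, int, int]  # (src_ip, dst_ip, src_port, dst_port)


class VmmqVPort:
    """Toy VMMQ vPort: several queues on one vPort, each bound to a processor."""

    def __init__(self, queue_processors: List[int]) -> None:
        if not queue_processors:
            raise ValueError("a vPort needs at least one queue")
        # queue i is serviced by queue_processors[i]
        self.queue_processors = queue_processors

    def place(self, flow: Flow) -> Tuple[int, int]:
        """Pick (queue, processor) in one step, as the NIC does with VMMQ."""
        h = zlib.crc32("|".join(map(str, flow)).encode())  # stand-in for Toeplitz
        queue = h % len(self.queue_processors)
        return queue, self.queue_processors[queue]
```

The packet arrives already sitting in a queue whose processor is the one that handles it, so there is no second software hop.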

In the last article, we discussed how VMQ and RSS had a budding friendship (see the “Orange Mocha Frappuccinos” reference ;)). Now you should see just how integral RSS is to VMQ’s vitality; they’re like a frog and a bear singing America. They’re movin' right along; they're truly birds of a feather; they’re in this together.

Now the packets only need to be processed once before heading towards their destination – the virtual NIC. The result is a much more performant host and increased throughput to the virtual NIC. With enough available processing power, you can exceed 50 Gbps.

Management of a Virtual NIC’s vRSS and VMMQ Properties

In Windows Server 2016, VMMQ was disabled by default. It was, after all, a brand-new feature, and after the “Great Windows Server 2012 VMQ” debacle, we thought it best to disable new offloads by default until the NIC and OS had an opportunity to prove they could play nicely together.

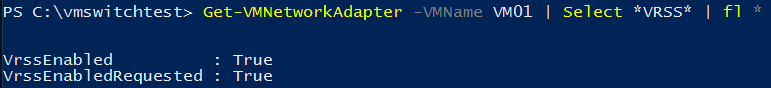

Verify vRSS is Enabled for a vNIC

This should already be enabled (remember, this is the OS component that directs the hardware offload, VMMQ), but you can see the settings by running this command for a vmNIC:

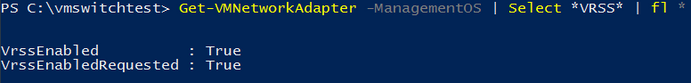

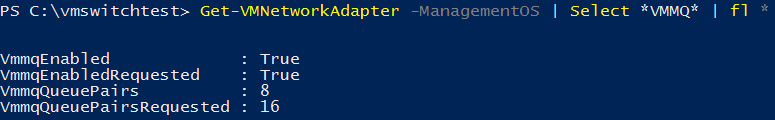

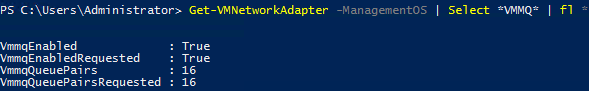

And now a host vNIC. Oh yeah! We enabled vRSS for host vNICs in this release as well! This was not previously available on 2012 R2.

Note: If this was accidentally disabled and you’d like to reenable it, please see Set-VMNetworkAdapter.

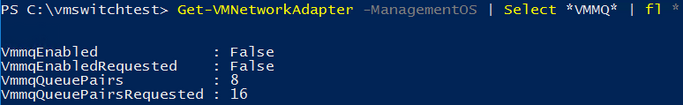

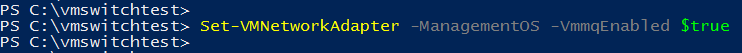

To Enable VMMQ

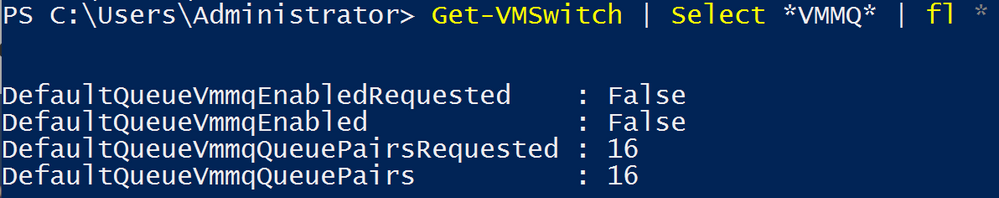

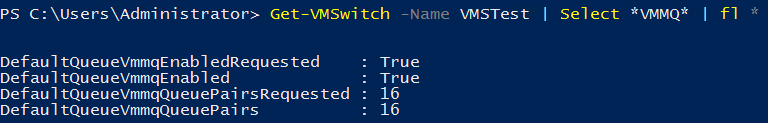

First, check to see if VMMQ is enabled. As previously mentioned, it is not enabled by default on Server 2016.

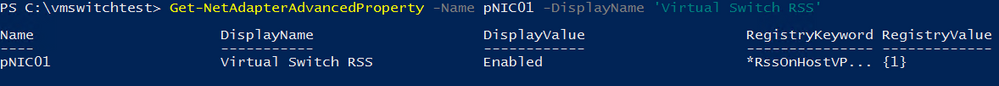

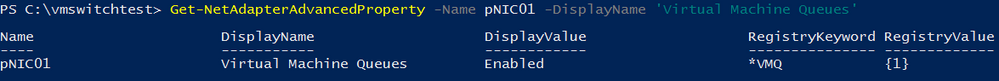

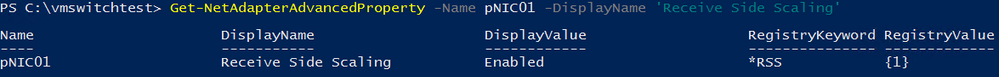

Now let’s check that the prerequisite hardware features are enabled. To do this, use Get-NetAdapterAdvancedProperty to query the device (or use the device manager properties for the adapter) for the following three properties:

- Virtual Switch RSS

- Virtual Machine Queues

- Receive Side Scaling

If any of the above settings are disabled (0), make sure to enable them with Set-NetAdapterAdvancedProperty.
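If you audit many hosts, the check above is easy to express as code. Here is a minimal Python sketch that assumes you have already exported each adapter's properties into a dictionary of DisplayName to a single RegistryValue string. The export step, the function name, and the choice to treat a missing property as "not enabled" are all assumptions for illustration.

```python
from typing import Dict, List

# Display names of the three prerequisites listed above
REQUIRED = ("Virtual Switch RSS", "Virtual Machine Queues", "Receive Side Scaling")


def disabled_prerequisites(advanced_props: Dict[str, str]) -> List[str]:
    """Return the prerequisites that are disabled ("0") or missing.

    advanced_props maps DisplayName -> RegistryValue for one adapter.
    A missing entry is treated as not enabled (an assumption of this sketch).
    """
    return [name for name in REQUIRED if advanced_props.get(name, "0") == "0"]
```

Anything this returns is a candidate for Set-NetAdapterAdvancedProperty.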

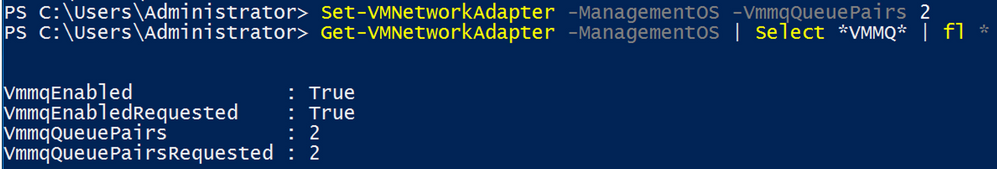

Now let’s enable VMMQ on the vNIC by running Set-VMNetworkAdapter:

Finally, verify that VMMQ is enabled:

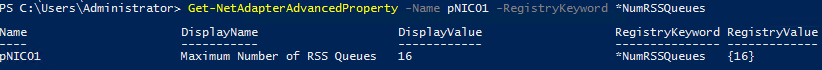

One last thing… In the picture above, you can see the number of VMMQ Queue Pairs (see the 2012 article on NIC Architecture – a queue is technically a queue pair) assigned for this virtual NIC ☹. It shows the request was for 16, so why were only 8 allocated?

First, understand the perspective of each property’s output. VMMQQueuePairRequested is what vRSS requested on your behalf – in this case 16. VMMQQueuePairs is the actual number granted by the hardware.

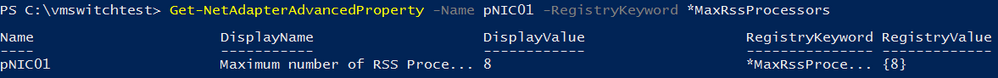

As you know from our previous Get-NetAdapterAdvancedProperty cmdlet above, each network adapter has defined defaults that govern its properties. The *MaxRssProcessors property (intuitively) defines the maximum number of RSS processors that can be assigned for any adapter, virtual NICs included.

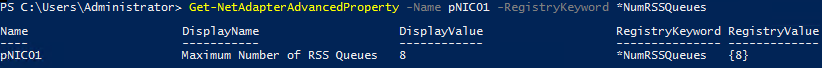

Lastly, *NumRSSQueues defines how many queues can be assigned.
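One way to reason about the "16 requested, 8 granted" puzzle is to treat the grant as the smallest of the request and the two adapter limits. This Python sketch is a deliberate simplification. The function name is hypothetical, and real hardware may apply other limits as well (for example, queues already handed out to other vPorts). It also reports which value was the binding constraint.

```python
from typing import Tuple


def granted_queue_pairs(requested: int, max_rss_processors: int,
                        num_rss_queues: int) -> Tuple[int, str]:
    """Simplified model: the grant is capped by *MaxRssProcessors and *NumRSSQueues.

    Returns (granted, binding_limit). On a tie, the first name listed wins.
    """
    limits = {
        "requested": requested,
        "*MaxRssProcessors": max_rss_processors,
        "*NumRSSQueues": num_rss_queues,
    }
    binding = min(limits, key=lambda name: limits[name])
    return limits[binding], binding
```

For example, a request of 16 with *MaxRssProcessors at 8 comes back as (8, "*MaxRssProcessors"), which points at the property to raise.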

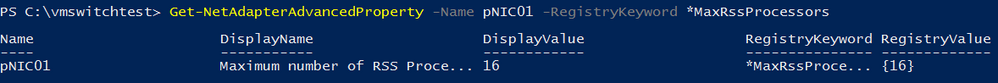

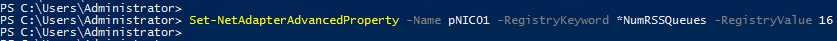

We can remedy this by changing these properties using Set-NetAdapterAdvancedProperty or device manager.

Now check that the virtual NIC has 16 queue pairs:

Note: Ever wonder why there is an asterisk (*) in front of the Get-NetAdapterAdvancedProperty properties?

These are well-known advanced registry keywords, which form a standardized software contract between the operating system and the network adapter. Any keyword listed here without an asterisk in front of it is defined by the vendor and may be different (or not exist) depending on the adapter and driver you use.

By lowering the number of VMMQQueuePairsRequested, you have an easy mechanism to manage the available throughput into a VM. You should assign 1 queue pair for every 4 Gbps you’d like a VM to have.
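The 4 Gbps-per-queue-pair rule of thumb turns into a one-line calculation. Here is a small Python helper (the name `queue_pairs_for` is hypothetical) that rounds up so the VM gets at least its target, and it always returns at least one queue pair.

```python
import math

GBPS_PER_QUEUE_PAIR = 4  # rule of thumb from the text above


def queue_pairs_for(target_gbps: float) -> int:
    """Queue pairs to request for a target throughput, rounded up, minimum one."""
    if target_gbps <= 0:
        raise ValueError("target throughput must be positive")
    return max(1, math.ceil(target_gbps / GBPS_PER_QUEUE_PAIR))
```

For instance, a VM meant to get roughly 10 Gbps works out to 3 queue pairs, which is the value you would then request with Set-VMNetworkAdapter. Keep the caveats below in mind: this is an estimate, not a guarantee.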

If you choose to do this, keep in mind two things. First, VMMQ is not a true QoS mechanism. Your mileage may vary as the actual throughput will depend on the system and available resources.

Second, VMMQ scales considerably better than VMQ alone, in large part because of the improvements to the default queue outlined in the next section, so you may not need to manage the allocation of queues quite as stringently as you have in the past.

Management of default queues

In the last section, we enabled VMMQ for a specific virtual NIC. However, you may want to enable VMMQ for the default queue. As a general best practice, we recommend that you enable VMMQ for the default queue.

As a reminder, the default queue is the queue shared by multiple devices. More specifically, if a virtual NIC doesn’t get its own VMMQ queues, it uses the default queue. In the past, all VMs that were unable to receive their own VMQ shared the default queue. Now, they share multiple queues (i.e., VMMQ).

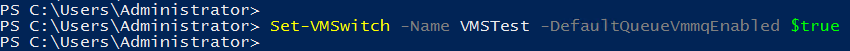

You can see above that the same setup is required for DefaultQueueVMMQPairs. The only difference is that, instead of setting the configuration on the virtual NIC using Set-VMNetworkAdapter, you set it on the virtual switch like this:

Now any virtual machine that is unable to receive its own queues will benefit from having 16 (or whatever you configure) available to share the workload.
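Here is a toy Python model of that sharing, assuming a simple lookup: vNICs with dedicated VMMQ queues use their own processors, and everyone else is hashed across the default-queue pairs. The function name and data layout are hypothetical, and `zlib.crc32` stands in for the RSS hash. The point is that two VMs without dedicated queues no longer funnel through a single processor.

```python
import zlib
from typing import Dict, List, Tuple

Flow = Tuple[str, str, int, int]  # (src_ip, dst_ip, src_port, dst_port)


def place_packet(own_queues: Dict[str, List[int]],
                 default_queue_processors: List[int],
                 vnic: str, flow: Flow) -> int:
    """Return the processor that receives a packet destined to `vnic`.

    vNICs with their own VMMQ queues use them; all others share the
    default-queue VMMQ pairs, spread by a flow hash (stand-in for RSS).
    """
    processors = own_queues.get(vnic, default_queue_processors)
    h = zlib.crc32(f"{vnic}|" .encode() + "|".join(map(str, flow)).encode())
    return processors[h % len(processors)]
```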

Processor Arrays

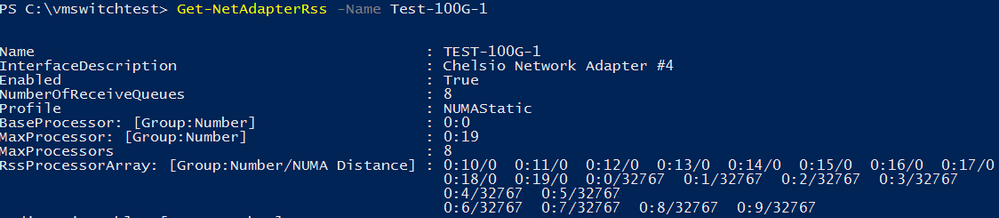

In Windows Server 2016, you are no longer required to set the processor arrays with Set-NetAdapterVMQ or Set-NetAdapterRSS. I’ve been asked whether you can still configure these settings if you want to, and the answer is yes. However, the scenarios where this is useful are few and far between. For general use, this is no longer a requirement.

As you can see in the picture below, the RSSProcessorArray includes processors by default and they are ordered by NUMA Distance.
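"Ordered by NUMA distance" means the processors closest to the NIC's NUMA node come first. Here is a small Python sketch of that ordering. The function name, the tie-break by processor number, and the example distances (10 for local and 20 for remote, in the style of ACPI SLIT tables) are assumptions for illustration. This is not the exact algorithm Windows uses.

```python
from typing import Dict, List, Tuple


def order_by_numa_distance(processor_node: Dict[int, int], nic_node: int,
                           node_distance: Dict[Tuple[int, int], int]) -> List[int]:
    """Sort processors by NUMA distance from the NIC's node, then by number.

    processor_node maps processor number -> NUMA node.
    node_distance maps (from_node, to_node) -> relative distance.
    """
    return sorted(
        processor_node,
        key=lambda cpu: (node_distance[(nic_node, processor_node[cpu])], cpu),
    )
```

With processors 0-1 on node 0, processors 2-3 on node 1, and the NIC attached to node 1, the local processors 2 and 3 come first, followed by 0 and 1.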

#DownWithLBFO

You may have seen our minor Twitter campaign about eliminating LBFO from your environment…

There are numerous reasons for this recommendation; however, it boils down to this:

LBFO is our older teaming technology that will not see future investment, is not compatible with numerous advanced capabilities, and has been exceeded in both performance and stability by our new technologies (SET).

Note: If you're new to Switch Embedded Teaming (SET), you can review this guide for an overview.

We’ll have a future blog post that unpacks that statement quite a bit more, but let’s talk about it in terms of synthetic accelerations. LBFO doesn’t support offloads like VMMQ. VMMQ is an advanced capability that lowers host CPU consumption and enables far better network throughput to virtual machines. In other words, your users (or customers) will be happier with VMMQ because they can generally get the throughput they want, when they want it, so long as you have the processing power and network hardware to meet their demands. If you want to use VMMQ and you’re looking for a teaming technology, you must use Switch Embedded Teaming (SET).

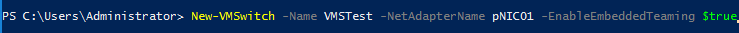

To do this, simply add -EnableEmbeddedTeaming $true to your New-VMSwitch cmdlet.

Summary of Requirements

We continue to chip away at the requirements on the system administrator as compared to previous releases.

- Install latest drivers and firmware

- Processor Array engaged by default, including CPU0. This was originally changed in 2012 R2 to enable vRSS (on the host); however, this now includes host virtual NICs and hardware queues (VMQ/VMMQ) as well.

- Configure the system to avoid CPU0 on non-hyperthreaded systems and CPU0 and CPU1 on hyperthreaded systems (e.g., BaseProcessorNumber should be 1 or 2 depending on hyperthreading).

- Configure the MaxProcessorNumber to establish that an adapter cannot use a processor higher than this. The system will now manage this automatically in Windows Server 2016 and so we recommend you do not modify the defaults.

- Configure MaxProcessors to establish how many processors out of the available list a NIC can spread VMQs across simultaneously. This is unnecessary due to the enhancements in the default queue. You may still choose to do this if you’re limiting the queues as a rudimentary QoS mechanism as noted earlier but it is not required.

- Enable VMMQ on Virtual NICs and the Virtual Switch. This is new because the capability didn't exist prior to this release and, as mentioned, we disabled new offloads by default.

- Test customer workload
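The BaseProcessorNumber and MaxProcessorNumber items in the list above describe a range of processors an adapter may use. Here is a tiny Python sketch of that range, mainly for illustration, since the list notes that the system now manages MaxProcessorNumber automatically. Both function names are hypothetical, and `max_processor_number` is treated as an inclusive upper bound.

```python
from typing import List, Optional


def base_processor_number(hyperthreading: bool) -> int:
    """Skip CPU0, or CPU0 and CPU1 on hyperthreaded systems (rule from the list above)."""
    return 2 if hyperthreading else 1


def candidate_processors(logical_processors: int, hyperthreading: bool,
                         max_processor_number: Optional[int] = None) -> List[int]:
    """Processors from BaseProcessorNumber up to MaxProcessorNumber, inclusive."""
    last = logical_processors - 1
    if max_processor_number is not None:
        last = min(last, max_processor_number)
    return list(range(base_processor_number(hyperthreading), last + 1))
```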

Summary of Advantages

- Spreading across virtual CPUs (vRSS in the Guest) – The virtual processors have been removed as a bottleneck (originally implemented in 2012 R2).

- vRSS Packet Placement Offload – Additional CPUs can be engaged by vRSS (creation of the indirection table implemented in 2012 R2). Now packet placement onto the correct processor can be done in the NIC improving the performance of an individual virtual NIC to +50 Gbps with adequate available resources. This represents another 3x improvement over Windows Server 2012 R2 (and 6x over Windows Server 2012)!

Note: Some have commented that they have no need for an individual virtual machine to receive +50 Gbps on its own. While these scenarios are (currently) less common, that misses the point. The actual benefit is that +50 Gbps can be processed by the system, whether that be 50 Gbps / 100 VMs == 0.5 Gbps per VM or +50 Gbps for 1 VM. You choose how to divvy up the available bandwidth.

- Multiple queues for the Default Queue – Previously the default queue was a bottleneck for all virtual machines that couldn’t receive their own dedicated queue. Now those virtual machines can leverage VMMQ in the default queue enabling greater scaling of the system.

- Management of the Default Queue – You can choose how many queues to assign to the default queue and to each virtual NIC.

- Pre-allocated queues – By pre-allocating the queues to virtual machines, we’re able to meet the demand of bursty network workloads.

Summary of Disadvantages

- VMMQ is disabled by default – You need to enable VMMQ individually or use tooling to enable it.

- No dynamic assignment of Queues – Dynamic VMQ is effectively deprecated with the use of VMMQ which means that once a queue has been mapped to a processor it will not be moved in response to changing system conditions.

- Pre-allocated queues – This is also a disadvantage because we may be wasting system resources without the ability to reassign them.

The redesigned NIC architecture (NIC Switch) enabled VMMQ, which represents another big jump in synthetic system performance and efficiency. If you’re using the synthetic datapath, you’ll receive a huge boost over what vRSS and Dynamic VMQ can bring alone, enabling another 3x improvement in performance. In addition, VMMQ enables improved system density (packing more VMs onto the same host) and a more consistent user experience, as virtual machines without dedicated queues can leverage VMMQ in the default queue. Lastly, Switch Embedded Teaming (SET) has officially become our recommended teaming option in this release, in part due to its support for advanced offloads like VMMQ.

I hope you enjoyed the first three articles in this series. Next week we’ll wrap up with the final installment describing Dynamic VMMQ and our first acceleration that isn't named VMQ or RSS!

Dan "Accelerating" Cuomo
