How to get better quality RGB-D pairs?

Feb 19 2021 09:00 AM

Hello :D

I want to run some algorithms on RGB-D data captured by HoloLens 2, so I need "aligned depth", as in this question: https://github.com/microsoft/HoloLens2ForCV/issues/50 . In short, I need RGB-depth pairs that have the same resolution and the same field of view, or as the question puts it, "one-to-one in pixels".

I have run this script as the answer suggested:

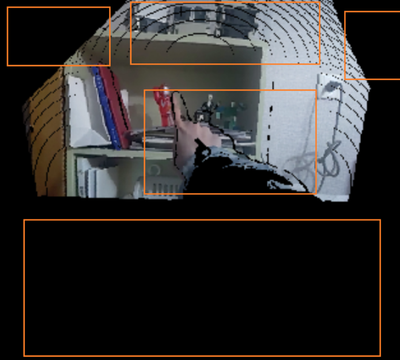

But the aligned RGB-D pair is kind of unsatisfactory. For example, here is an RGB image I obtained.

To match the field of view of the depth image (the depth camera has a narrower field of view than the RGB camera, right?), large parts of the RGB image end up black. The RGB images have already been reduced to the same resolution as the depth maps (760x428 -> 320x288), so it's sad that so much of the frame is still wasted. There are also strange black curves in the image, maybe caused by the projection calculation in the virtual pinhole camera model. I am not able to run my algorithms on the processed RGB images, so upset T T

Is there any way to get better quality RGB-D pairs? Thanks for any Suggestions you may have!
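For readers following along, here is a rough sketch of the kind of reprojection the question describes, mapping each depth pixel into the RGB camera through a pinhole model. It is not the script from the linked issue. The function name `align_depth_to_rgb`, the intrinsics tuples `(fx, fy, cx, cy)`, and the extrinsics `R` and `t` are all hypothetical, and it assumes planar z-depth in both cameras, which may not match what Research Mode actually delivers.

```python
def align_depth_to_rgb(depth, depth_K, rgb_K, R, t, rgb_size):
    """Forward-project a depth map into the RGB camera's pixel grid.

    depth    : list of rows of z-depth values (0 means "no measurement")
    depth_K  : (fx, fy, cx, cy) of the depth camera (hypothetical values)
    rgb_K    : (fx, fy, cx, cy) of the RGB camera (hypothetical values)
    R, t     : 3x3 rotation and 3-vector translation, depth frame -> RGB frame
    rgb_size : (width, height) of the RGB image
    """
    fx_d, fy_d, cx_d, cy_d = depth_K
    fx_c, fy_c, cx_c, cy_c = rgb_K
    width, height = rgb_size
    aligned = [[0.0] * width for _ in range(height)]

    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip invalid depth
            # Unproject the depth pixel to a 3D point in the depth camera frame.
            p = ((u - cx_d) / fx_d * z, (v - cy_d) / fy_d * z, z)
            # Move the point into the RGB camera frame.
            xc, yc, zc = (
                sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)
            )
            if zc <= 0:
                continue  # point is behind the RGB camera
            # Project onto the RGB image plane and round to the nearest pixel.
            uc = int(round(fx_c * xc / zc + cx_c))
            vc = int(round(fy_c * yc / zc + cy_c))
            # Points outside the RGB frame are dropped.
            if 0 <= uc < width and 0 <= vc < height:
                # Keep the nearest surface when several points hit one pixel.
                if aligned[vc][uc] == 0.0 or zc < aligned[vc][uc]:
                    aligned[vc][uc] = zc
    return aligned
```

Any target pixel that no source pixel lands on stays at 0, so a forward mapping like this naturally leaves empty regions wherever the two fields of view do not overlap.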

Feb 19 2021 10:54 AM - edited Feb 19 2021 10:55 AM

@Kernel_Function Hey there! Looks like you're doing some interesting stuff!

Alright, so I haven't worked with Research Mode stuff yet, so I have limited experience here. But I have worked a bit with the Kinect for Azure device and the native SDK there! There are a few things that jump out to me just reading your post.

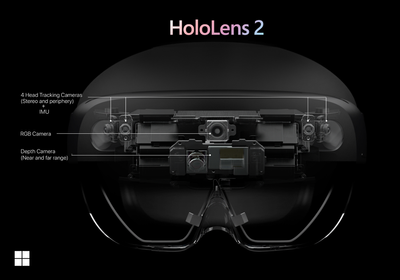

The big one that caught my eye was the color/depth data overlap. On a Kinect for Azure, you'd see a lot more overlap, since the color and depth cameras are close together, facing the same direction, and have similar FOV. However, the HoloLens has a different alignment for color and depth cameras! You can see it a bit in this picture here: the depth camera is actually angled downwards. There are some HL1 images that show the same thing from the side view, so this alignment is similar in both devices.

This makes sense on HoloLens, since the color camera relates to what the user is seeing (straight ahead), but the depth camera needs to work with your hands and the environment (mostly downwards). So that's why you're not seeing a whole lot of overlap there: the sensors just aren't aligned well for this use case!

I also don't remember seeing those rings before on Kinect for Azure! Shadowing yeah, but not rings. The Kinect SDK did a pretty good job overlaying color and depth, so I just don't know if this is because of the way the code you're using works, or if it's because the camera angle is different and therefore tough to compensate for!

Anyhow, that kinda exhausts my knowledge on the subject. Computer Vision gets complicated, and I've only dabbled. If you could go into higher level details about what you're trying to achieve, it's possible I might know some tools to help there!

Feb 20 2021 07:17 AM

Hi @koujaku ! I'm extremely grateful to you for answering my question!

Yeah, I have also noticed that the layout of the RGB and depth cameras on HoloLens causes the problem. Your answer makes me realize why so many algorithms use RGB-D images from Kinect and none of them use data from HoloLens, although the depth sensors in Azure Kinect and HoloLens are the same. The information you have provided is very important, and maybe I need to switch to a different device to obtain the data, as the current problem seems kinda difficult to solve. Thanks a lot!