OMS Agent on Azure Sentinel Log forwarder not receiving and forwarding logs to sentinel workspace

Oct 15 2021 12:56 PM

Hello,

We have observed that we are no longer receiving Syslog and CEF logs from the Azure Sentinel log forwarder deployed on the client's premises. I have performed the following steps:

netstat -an | grep 514

Status: Listening or established (which is fine)

netstat -an | grep 25226

Status: Listening or established (which is fine)

sudo tcpdump -A -ni any port 514 -vv

Status: Logs are received from the data sources (which is fine)

sudo tcpdump -A -ni any port 514 -vv | grep (Zscaler IP)

Status: Logs are received from the Zscaler data source. The CEF messages show the Palo Alto name, which means the Zscaler traffic was routed through the firewall (which is fine, as confirmed by the client)

sudo tcpdump -A -ni any port 25226 -vv

Status: No logs were received (Issue Identified)

sudo tcpdump -A -ni any port 25226 -vv | grep (Zscaler IP)

Status: No logs were received (Issue Identified)

Restarted the Rsyslog Service:

service rsyslog restart (After the service restart, Azure Sentinel started receiving Syslog again. The Syslog data source came up and is working fine.)

Restarted the OMSAgent Service:

/opt/microsoft/omsagent/bin/service_control restart {workspace ID}

Status: There was no status message and the prompt simply returned, so I assume the agent restarted in the background (please confirm whether it is normal for the command to show no message)

After the OMS Agent restart, I ran tcpdump again on the OMS Agent port to see whether it had started receiving logs, but no luck.
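The listener checks on ports 514 and 25226 above can be scripted so they are quick to repeat after each restart. This is a minimal sketch, not part of the original troubleshooting: `check_listening` is a hypothetical helper name, and it assumes Linux-style `netstat -an` output (local address in the fourth column) piped in on stdin.

```shell
#!/usr/bin/env bash
# Sketch only: check_listening is a hypothetical helper, not an OMS Agent tool.
# It reads `netstat -an` style output on stdin and succeeds (exit 0) when the
# given port has a TCP socket in LISTEN state or a bound UDP socket.

check_listening() {
    local port="$1"
    awk -v p=":${port}" '
        # TCP lines must end in LISTEN; UDP sockets have no LISTEN state.
        (($1 ~ /^tcp/ && $NF == "LISTEN") || $1 ~ /^udp/) {
            # Compare the tail of the local address with ":<port>" so that
            # port 514 does not match 5514 or 25514.
            n = length($4) - length(p) + 1
            if (n > 0 && substr($4, n) == p) found = 1
        }
        END { exit(found ? 0 : 1) }
    '
}
```

For example, `netstat -an | check_listening 25226 && echo "25226 is listening"`. Note that a listening socket only shows the agent has the port open; as this thread shows, traffic may still not arrive on it, so the tcpdump checks remain necessary.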

I followed the following link: https://docs.microsoft.com/en-us/azure/sentinel/troubleshooting-cef-syslog?tabs=rsyslog

Can anyone advise what the probable cause of this issue might be? Any help will be much appreciated. Thanks in advance.

Oct 18 2021 04:39 AM

sudo wget -O cef_troubleshoot.py https://raw.githubusercontent.com/Azure/Azure-Sentinel/master/DataConnectors/CEF/cef_troubleshoot.py... python cef_troubleshoot.py [WorkspaceID]

Oct 21 2021 05:43 AM

@Gary Bushey Thank you for the prompt response. We got the issue resolved through Microsoft support. Apparently, for some reason, the OMI Agent was in a zombie/stuck state. Restarting the agent didn't work; we had to manually kill the process and start the agent again.

Killing and starting the agent again resolved the issue. According to the Microsoft team, one possible cause of this behavior is that the disk filled up at some earlier point in time. That was later resolved, but the disk space issue might have caused the agent to go into such a state.

Anyway, the issue has been resolved. We are still monitoring to see whether it remains stable.
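Since a full disk was the suspected trigger, a periodic check of filesystem usage may help catch the condition before the agent is affected. This is a minimal sketch under assumptions: `disk_over_threshold` is a hypothetical helper name, the 90% default is an arbitrary illustrative threshold, and the input is assumed to be POSIX-format `df -P` output on stdin.

```shell
#!/usr/bin/env bash
# Sketch only: disk_over_threshold is a hypothetical helper.
# It reads `df -P` output on stdin and prints "<mount point> <use>%" for every
# filesystem whose usage is at or above the given percentage (default 90).
# Mount points containing spaces are not handled.

disk_over_threshold() {
    local limit="${1:-90}"
    awk -v limit="$limit" '
        NR > 1 {                 # skip the header line
            use = $5             # Capacity column, e.g. "96%"
            sub(/%$/, "", use)   # strip the trailing percent sign
            if (use + 0 >= limit + 0) print $6 " " use "%"
        }
    '
}
```

For example, `df -P | disk_over_threshold 85` lists the mount points at 85% usage or more; an empty result means nothing is over the threshold.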

Sharing the above for the benefit of all.

Thanks once again for your support, much appreciated.

Fahad.

Feb 03 2023 08:36 PM

Apr 14 2023 06:29 AM

Hi @alazarg

Check your /etc/rsyslog.d/50-default.conf file. It has a section like this:

#

# First some standard log files. Log by facility.

#

#auth,authpriv.* /var/log/auth.log

*.*;auth,authpriv.none -/var/log/syslog

#cron.* /var/log/cron.log

#daemon.* -/var/log/daemon.log

kern.* -/var/log/kern.log

#lpr.* -/var/log/lpr.log

mail.* -/var/log/mail.log

#user.* -/var/log/user.log

The problem is line 5, where every facility (*.*) is written to the /var/log/syslog file. The solution is to either comment it out (using #) or, even better, restrict the rules to locally generated messages:

#

# First some standard log files. Log by facility.

#

if ($fromhost-ip == '127.0.0.1') then {

#auth,authpriv.* /var/log/auth.log

*.*;auth,authpriv.none -/var/log/syslog

#cron.* /var/log/cron.log

#daemon.* -/var/log/daemon.log

kern.* -/var/log/kern.log

#lpr.* -/var/log/lpr.log

mail.* -/var/log/mail.log

#user.* -/var/log/user.log

}

Then only locally generated logs will be written to the filesystem and the disk will not fill up.
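To find where such a catch-all rule is active, the rsyslog configuration files can be searched for uncommented selectors that start with `*.*`. This is a minimal sketch: `find_catch_all_rules` is a hypothetical helper name, and it only matches lines textually, so it cannot tell whether a rule is already wrapped in a condition like the one above.

```shell
#!/usr/bin/env bash
# Sketch only: find_catch_all_rules is a hypothetical helper.
# It prints "file:line:text" for each active (uncommented) line whose selector
# begins with *.* in the given files. Commented lines such as "#*.* ..." are
# skipped because the pattern allows only whitespace before the selector.

find_catch_all_rules() {
    grep -HnE '^[[:space:]]*\*\.\*' "$@"
}
```

For example, `find_catch_all_rules /etc/rsyslog.d/50-default.conf`. After editing the file, `rsyslogd -N1` can be used to validate the configuration before restarting the service with `service rsyslog restart`.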

Good luck.

/Kenneth ML

May 02 2023 04:14 PM

Thank you very much for the input @Kenneth Meyer-Lassen

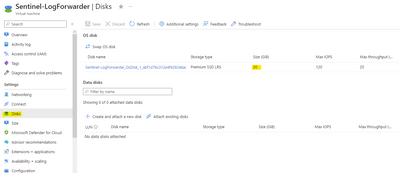

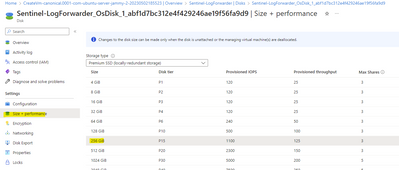

The disk-full issue was resolved by increasing the OS disk size. When a Linux VM is created in Azure, the OS disk is 32 GB; for the log forwarder, it needs to be increased to at least 256 GB, as follows:

Select the disk, choose from the list, and save.

The syslog server then handles log file cleanup through its scheduled task.