What's New in Notebooks - MSTICPy v2.0.0

We've just released v2.0.0 of MSTICPy - the Python InfoSec library for Jupyter notebooks.

This is a major version update and has:

- Several new features

- Some significant updates/improvements to existing features

- New and improved documentation on our ReadTheDocs documentation site

- Some breaking changes and deprecations.

To give you a feel for the feature changes in the release we've put together a notebook containing some of the features of MSTICPy 2.0. This is available at What's new in MSTICPy 2.0.

If you are new to MSTICPy or just want to catch up and get a quick overview check out our new MSTICPy Quickstart Guide.

Contents

- MSTICPy v2.0 - random facts

- Dropping Python 3.6 support

- Package re-organization and module search

- Simplifying imports in MSTICPy

- Folium map update - single function, layers, custom icons

- Threat Intelligence providers - async support

- Time Series simplified - analysis and plotting

- DataFrame to graph/network visualization

- Pivots - easy initialization/dynamic data pivots

- Consolidating Pandas accessors

- MS Sentinel workspace configuration

- MS Defender queries available in MS Sentinel QueryProvider

- Microsoft Sentinel QueryProvider changes

- New queries

- Documentation additions and improvements

- Miscellaneous improvements

- Feedback

- Appendix - Summary of changes since MSTICPy v1.0

MSTICPy v2.0

MSTICPy is approaching its 4th birthday. Its first proper PR in Jan 2019 took it to a total of 7.5k lines of code! Some fun facts:

- v2.0 was a big change - 100k lines added, 70k lines removed (although a lot of these were just code moving location)

- Now at 220k+ lines of code

- Most active month - December 2021 with 35 PRs

- Most active hour of the week - Tues 22:00 UTC/14:00 PST - more than 2x any other hour of the week! Who knows why!

- 34 PR Contributors

- 959 GitHub stars

- 137k downloads

- MSTICPy powers 80% of notebooks in Microsoft Sentinel repo

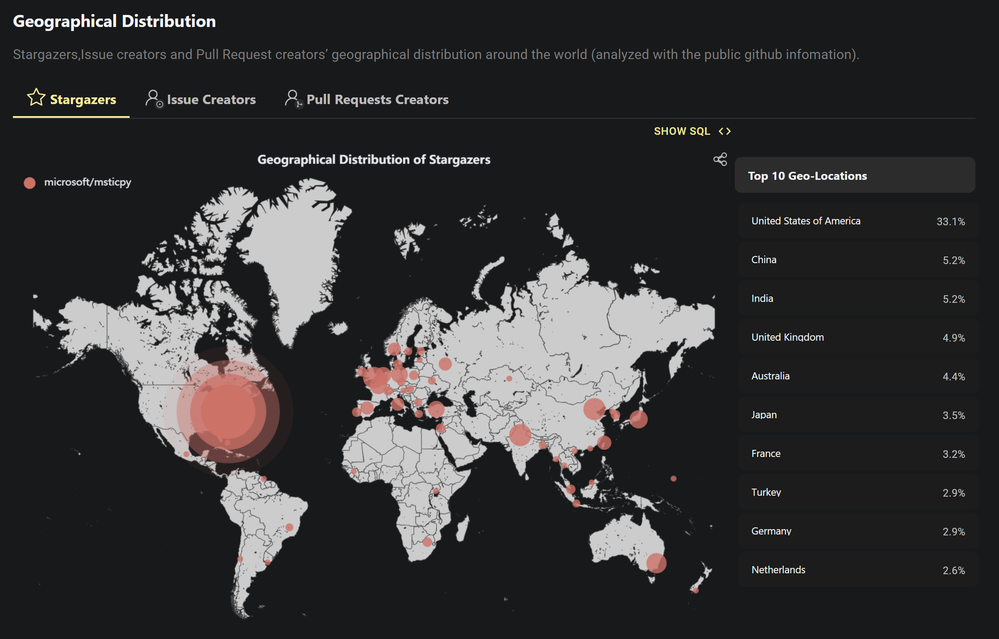

Geographical distribution of our GitHub likers

Graph courtesy of OSS Insight - see the full data at OSSInsight/msticpy

Dropping Python 3.6 support

As of this release, we only officially support Python 3.8 and above. Python 3.6 is nearly 6 years old and is no longer supported by a lot of packages that we depend on. There are also a bunch of features, such as dataclasses and better type hinting, that we'd like to start using.

We will try to support Python 3.6 if the fixes required are small and contained but make no guarantees of it working completely on Python prior to 3.8.

Package re-organization and module search

One of our main goals for v2.0.0 was to re-organize MSTICPy to be more logical and easier to use and maintain. Several years of organic growth had seen modules created in places that seemed like a good idea at the time but did not age well.

The discussion about the V2 structure can be found on our GitHub repo here: #320.

Due to the re-organization, many features are no longer in places where they used to be imported from!

We have tried to maintain compatibility with old locations by adding "glue" modules. These allow import of many modules from their previous locations but will issue a Deprecation warning if loaded from the old location. The warning will contain the new location of the module - so, you should update your code to point to this new location.
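
To illustrate the idea, here is a minimal sketch of what a glue helper could look like. It is not MSTICPy's actual implementation: the helper name `load_from_new_location` and the old module name `old_pkg.jsontools` are hypothetical, and the standard-library `json` module stands in for a module's new location.

```python
import importlib
import warnings


def load_from_new_location(old_name: str, new_name: str):
    """Import `new_name`, warning that `old_name` is deprecated (hypothetical helper)."""
    warnings.warn(
        f"'{old_name}' has moved to '{new_name}'. Please update your imports.",
        DeprecationWarning,
        stacklevel=2,
    )
    return importlib.import_module(new_name)


# Demonstration: 'json' stands in for the new location of a moved module.
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")  # make sure the warning is not filtered out
    module = load_from_new_location("old_pkg.jsontools", "json")

# The first recorded warning names the new location.
print(caught[0].message)
```

The warning text carries the new module path, which is the piece of information you need to update your own import statements.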

This table gives a quick overview of the V2.0 structure

| folder | description |
|---|---|
| msticpy.analysis | data analysis functions - timeseries, anomalies, clustering |
| msticpy.auth | authentication and secrets management |
| msticpy.common | commonly used utilities and definitions (e.g. exceptions) |
| msticpy.config | configuration and settings UI |
| msticpy.context | enrichment modules - geoip, ip_utils, domaintools, tiproviders, vtlookup |
| msticpy.data | data acquisition/queries/storage/uploaders |
| msticpy.datamodel | entities, soc objects |
| msticpy.init | package loading and initialization - nbinit, pivot modules |
| msticpy.nbwidgets | nb widgets modules |
| msticpy.transform | simple data processing - decoding, reformatting, schema change, process tree |
| msticpy.vis | visualization modules including browsers |

Notable things that have moved:

- most things from the sectools folder have migrated to context, transform or analysis

- most things from the nbtools folder have migrated to either:

  - msticpy.init (not to be confused with __init__) - package initialization

  - msticpy.vis - visualization modules

- pivot functionality has moved to msticpy.init

Module Search

If you are having trouble finding a module, we have added a simple search function:

import msticpy as mp

mp.search("riskiq")

Matches of module names will be returned in a table with links to the module documentation:

Modules matching 'riskiq'

| Module | Help |
|---|---|
| msticpy.context.tiproviders.riskiq | msticpy.context.tiproviders.riskiq |
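
If you are curious how a name-based module search like this can work, the sketch below walks a package with the standard-library `pkgutil` module and returns the module names containing a search term. The function `search_modules` is hypothetical and is not the code behind `mp.search`; the standard-library `json` package is used so the example runs without MSTICPy installed.

```python
import importlib
import pkgutil
from typing import List


def search_modules(package_name: str, pattern: str) -> List[str]:
    """Return sorted names of modules under `package_name` that contain `pattern`."""
    package = importlib.import_module(package_name)
    matches = []
    # walk_packages yields every module and sub-package below the package path.
    for mod_info in pkgutil.walk_packages(package.__path__, prefix=f"{package_name}."):
        if pattern.lower() in mod_info.name.lower():
            matches.append(mod_info.name)
    return sorted(matches)


# Search the standard-library 'json' package for modules with 'coder' in the name.
print(search_modules("json", "coder"))
```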

Simplifying imports in MSTICPy

The root module in MSTICPy now has several modules and classes that can be directly accessed from it (rather than having to import them individually).

We've also decided to adopt a new "house style" of importing msticpy as the alias mp. Slavishly copying the idea from some of the great packages that we use (pandas -> pd, numpy -> np, networkx -> nx), we thought it would save a bit of typing. You are free to adopt or ignore this style - it has no impact on the functionality.

import msticpy as mp

mp.init_notebook()

qry_prov = mp.QueryProvider("MDE")

ti = mp.TILookup()

Many commonly used classes and functions are exposed as attributes of msticpy (or mp).

Also, a number of frequently used classes are imported by default in the init_notebook function, notably all of the entity classes. This makes it easier to use pivot functions without any initialization or import steps.

import msticpy as mp

mp.init_notebook()

# IpAddress can be used without having to import it.

IpAddress.whois("123.45.6.78")

init_notebook improvements

- You no longer need to supply the namespace=globals() parameter when calling from a notebook. init_notebook will automatically obtain the notebook global namespace and populate imports into it.

- The default verbosity of init_notebook is now 0, which produces minimal output - use verbosity=1 or verbosity=2 to get more detailed reporting.

- The Pivot subsystem is automatically initialized in init_notebook.

- All MSTICPy entities are imported automatically.

- All MSTICPy magics are initialized here.

- Most MSTICPy pandas accessors are initialized here (some, which require optional packages, such as the timeseries accessors, are not initialized by default).

- init_notebook supports a config parameter - you can use this to provide a custom path to a msticpyconfig.yaml, overriding the usual defaults.

- Searching for a config.json file is only enabled if you are running MSTICPy in Azure Machine Learning.

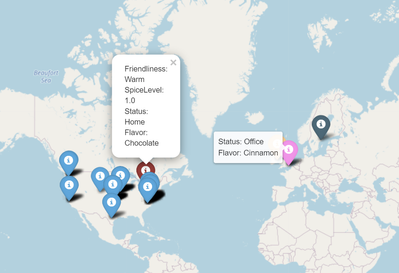

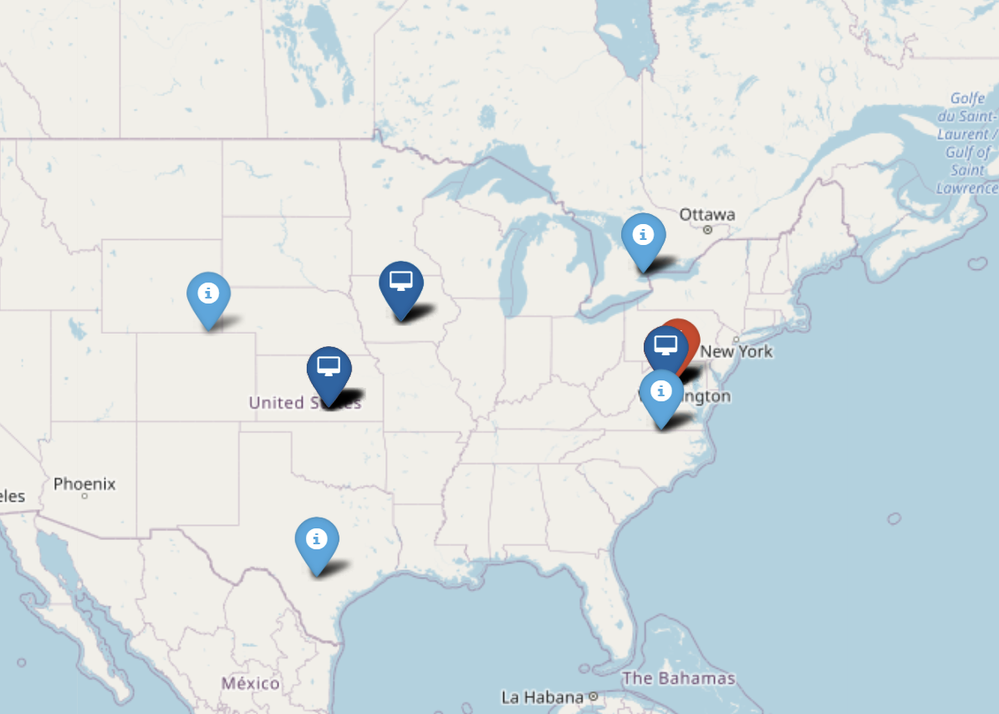

Folium map update - single function, layers, custom icons

The Folium module in MSTICPy has always been a bit complex to use since it normally required that you convert IP addresses to MSTICPy IpAddress entities before adding them to the map.

You can now plot maps with a single function call from a DataFrame containing IP addresses or location coordinates. You can group the data into folium layers, specify columns to populate popups and tooltips and customize the icons and coloring.

plot_map

There is a new plot_map function (in the msticpy.vis.foliummap module) that lets you plot mapping points directly from a DataFrame. You can specify either an ip_column or coordinates columns (lat_column and long_column). In the former case, the geo location of the IP address is looked up using the MaxMind GeoLiteLookup data.

You can also control the icons used for each marker with the icon_column parameter. If you happen to have a column in your data that contains names of FontAwesome or GlyphIcons icons you can use that column directly.

More typically, you would combine the icon_column with the icon_map parameter. You can specify either a dictionary or a function. For a dictionary, the value of the row in icon_column is used as a key - the value is a dictionary of icon parameters passed to the folium.Icon class. For a function, the icon_column value is passed to the function as a single parameter and the return value should be a dictionary of valid parameters for the Icon class.
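
The sketch below shows the two forms an icon_map can take and how a value from icon_column could be turned into icon parameters. The helper `resolve_icon_params` is hypothetical and only illustrates the lookup logic described above; it is not the code used inside plot_map. Falling back to an empty dictionary for unmapped values is an assumption of this sketch. The keys color, icon and prefix are parameters of the folium Icon class.

```python
from typing import Callable, Dict, Union

IconParams = Dict[str, str]
IconMap = Union[Dict[str, IconParams], Callable[[str], IconParams]]


def resolve_icon_params(value: str, icon_map: IconMap) -> IconParams:
    """Return Icon keyword arguments for a single icon_column value."""
    if callable(icon_map):
        # Function form: the column value is passed as the only argument.
        return icon_map(value)
    # Dictionary form: the column value is the key.
    # Returning {} for unmapped values is an assumption of this sketch.
    return icon_map.get(value, {})


# Dictionary form: one entry per expected column value.
icon_dict = {
    "Windows": {"color": "green", "icon": "windows", "prefix": "fa"},
    "Linux": {"color": "blue", "icon": "linux", "prefix": "fa"},
}


def icon_func(value: str) -> IconParams:
    """Function form: known operating systems are green, anything else is red."""
    if value in ("Windows", "Linux"):
        return {"color": "green", "icon": "info-sign"}
    return {"color": "red", "icon": "flag"}


print(resolve_icon_params("Linux", icon_dict))
print(resolve_icon_params("Unknown", icon_func))
```

A dictionary is the simpler choice when the column has a small, known set of values; a function is handy when the icon depends on a rule rather than an exact match.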

You can read the documentation for this function in the docs.

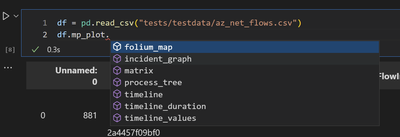

plot_map pandas accessor

Plot maps from the comfort of your own DataFrame!

Using the msticpy mp_plot accessor you can plot maps directly from a pandas DataFrame containing IP or location information.

The folium_map function has the same syntax as plot_map except that you omit the data parameter.

df.mp_plot.folium_map(ip_column="ip", layer_column="CountryName")

Layering, Tooltips and Clustering support

In plot_map and .mp_plot.folium_map you can specify a layer_column parameter. This will group the data by the values in that column and create an individually selectable/displayable layer in Folium. For performance and sanity reasons this should be a column with a relatively small number of discrete values.

Clustering of markers in the same layer is also implemented by default - this will collapse multiple closely located markers into a cluster that you can expand by clicking or zooming.

You can also populate tooltips and popups with values from one or more column names.

"Classic" interface

The original FoliumMap class is still there for more manual control. This has also been enhanced to support direct plotting from IP, coordinates or GeoHash in addition to the existing IpAddress and GeoLocation entities. It also supports layering and clustering.

Threat Intelligence providers - async support

When you have configured more than one Threat Intelligence provider, MSTICPy will execute requests to each of them asynchronously. This will bring big performance benefits when querying IoCs from multiple providers.

Note: requests to individual providers are still executed synchronously since we want to avoid swamping provider services with multiple simultaneous requests.
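
The pattern described here (providers run concurrently, requests within one provider run one after another) can be sketched with the standard-library asyncio module. This is an illustration only and not MSTICPy's implementation: `query_provider` and `lookup_all` are hypothetical names, and the network call is simulated with a short sleep.

```python
import asyncio
from typing import Dict, List


async def query_provider(provider: str, iocs: List[str]) -> Dict[str, str]:
    """Look up each IoC in turn for one provider (sequential within the provider)."""
    results = {}
    for ioc in iocs:
        await asyncio.sleep(0.01)  # stand-in for a single network request
        results[ioc] = f"simulated {provider} result for {ioc}"
    return results


async def lookup_all(providers: List[str], iocs: List[str]) -> Dict[str, Dict[str, str]]:
    """Run all providers concurrently and collect results keyed by provider name."""
    tasks = [query_provider(provider, iocs) for provider in providers]
    responses = await asyncio.gather(*tasks)  # results come back in task order
    return dict(zip(providers, responses))


results = asyncio.run(lookup_all(["OTX", "RiskIQ"], ["12.34.56.78", "162.244.80.235"]))
print(results["OTX"])
```

In a Jupyter notebook an event loop is already running, so you would use `await lookup_all(...)` in a cell rather than `asyncio.run`.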

We've also implemented progress bar tracking for TI lookups, giving a visual indication of progress when querying multiple IoCs.

Combining the progress tracking with asynchronous operation means that not only are lookups for lots of observables faster, but you are also less likely to be left guessing whether or not your kernel has hung.

Note that asynchronous execution only works with lookup_iocs and TI lookups done via the pivot functions. lookup_ioc will run queries to multiple providers in sequence so will usually be a lot slower than lookup_iocs.

# don't do this

ti_lookup.lookup_ioc("12.34.56.78")

# do this (put a single IoC in a list)

ti_lookup.lookup_iocs(["12.34.56.78"])

TI Providers are now also loaded on demand - i.e., only when you have a configuration entry in your msticpyconfig.yaml for that provider. This prevents loading of code (and possibly import errors) due to providers which you are not intending to use.

Finally, we've added functions to enable and disable providers after loading TILookup:

from msticpy.context import TILookup

ti_lookup = TILookup()

iocs = ['162.244.80.235', '185.141.63.120', '82.118.21.1', '85.93.88.165']

ti_lookup.lookup_iocs(iocs, providers=["OTX", "RiskIQ"])

Time Series simplified - analysis and plotting

Although the Time Series functionality was relatively simple to use, it previously required several disconnected steps to compute the time series, plot the data and extract the anomaly periods. Each of these needed a separate function import. Now you can do all of these from a DataFrame via pandas accessors. (Currently, there is a separate accessor - df.mp_timeseries - but we are still working on consolidating our pandas accessors so this may change in the future.)

Because you typically still need these separate outputs, the accessor has multiple methods:

- df.mp_timeseries.analyze - takes a time-summarized DataFrame and returns the results of a time-series decomposition

- df.mp_timeseries.plot - takes a decomposed time-series and plots the anomalies

- df.mp_timeseries.anomaly_periods - extracts anomaly periods as a list of time ranges

- df.mp_timeseries.kql_periods - extracts anomaly periods as a list of KQL query clauses

- df.mp_timeseries.apply_threshold - applies a new anomaly threshold score and returns the results.

See documentation for these functions.

Analyze data to produce time series.

df = qry_prov.get_networkbytes_per_hour(...)

ts_data = df.mp_timeseries.analyze()

Analyze and plot time series anomalies

df = qry_prov.get_networkbytes_per_hour(...)

ts_data = df.mp_timeseries.analyze().mp_timeseries.plot()

Analyze and retrieve anomaly time ranges

df = qry_prov.get_networkbytes_per_hour(...)

df.mp_timeseries.analyze().mp_timeseries.anomaly_periods()

[TimeSpan(start=2019-05-13 16:00:00+00:00, end=2019-05-13 18:00:00+00:00, period=0 days 02:00:00),

TimeSpan(start=2019-05-17 20:00:00+00:00, end=2019-05-17 22:00:00+00:00, period=0 days 02:00:00),

TimeSpan(start=2019-05-26 04:00:00+00:00, end=2019-05-26 06:00:00+00:00, period=0 days 02:00:00)]

df = qry_prov.get_networkbytes_per_hour(...)

df.mp_timeseries.analyze().mp_timeseries.kql_periods()

'| where TimeGenerated between (datetime(2019-05-13 16:00:00+00:00) .. datetime(2019-05-13 18:00:00+00:00)) or TimeGenerated between (datetime(2019-05-17 20:00:00+00:00) .. datetime(2019-05-17 22:00:00+00:00)) or TimeGenerated between (datetime(2019-05-26 04:00:00+00:00) .. datetime(2019-05-26 06:00:00+00:00))'
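
To show how a list of anomaly time ranges maps onto a KQL clause like the one above, here is a small standalone sketch. The function `periods_to_kql` is hypothetical and is not the MSTICPy implementation; it simply formats (start, end) pairs in the same style as the output shown.

```python
from datetime import datetime, timezone
from typing import List, Tuple

TimeRange = Tuple[datetime, datetime]


def periods_to_kql(periods: List[TimeRange], time_column: str = "TimeGenerated") -> str:
    """Build a KQL where clause that matches any of the given time ranges."""
    if not periods:
        return ""  # nothing to filter on
    clauses = [
        f"{time_column} between (datetime({start}) .. datetime({end}))"
        for start, end in periods
    ]
    return "| where " + " or ".join(clauses)


# Two of the anomaly periods from the example output above.
periods = [
    (
        datetime(2019, 5, 13, 16, tzinfo=timezone.utc),
        datetime(2019, 5, 13, 18, tzinfo=timezone.utc),
    ),
    (
        datetime(2019, 5, 17, 20, tzinfo=timezone.utc),
        datetime(2019, 5, 17, 22, tzinfo=timezone.utc),
    ),
]
kql_clause = periods_to_kql(periods)
print(kql_clause)
```

The resulting string can be appended to a query so that it returns only the events that fall inside the anomalous periods.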

DataFrame to graph/network visualization

You can convert a pandas DataFrame into a NetworkX graph or plot directly as a graph using Bokeh interactive plotting.

You pass the functions the column names for the source and target nodes to build a basic graph. You can also name other columns to be node or edge attributes. When displayed these attributes are visible as popup details courtesy of Bokeh’s Hover tool.

proc_df.head(100).mp_plot.network(

source_col="SubjectUserName",

target_col="Process",

source_attrs=["SubjectDomainName", "SubjectLogonId"],

target_attrs=["NewProcessName", "ParentProcessName", "CommandLine"],

edge_attrs=["TimeGenerated"],

)

Pivots - easy initialization/dynamic data pivots

The pivot functionality has been overhauled. It is now initialized automatically in init_notebook - so you don't have to import and create an instance of Pivot.

Better data provider support

Previously, queries from data providers were added at initialization of the Pivot subsystem. This meant that you had to:

- create your query providers before starting Pivot

- every time you created a new QueryProvider instance you had to re-initialize Pivot.

Data providers now dynamically add relevant queries as pivot functions when you authenticate to the provider.

Multi-instance provider support

Some query providers (such as MS Sentinel) support multiple instances. Previously this was not well supported in Pivot functions - the last provider loaded would overwrite the queries from earlier providers. Pivot now supports separate instance naming so that each Workspace has a separate instance of a given pivot query.

Threat Intelligence pivot functions

The naming of the Threat Intelligence pivot functions has been considerably simplified.

VirusTotal and RiskIQ relationship queries should now be available as pivot functions (you need the VT 3 and PassiveTotal packages installed respectively to enable this functionality).

More Defender query pivots

A number of MS Defender queries (using either the MDE or MSSentinel QueryProviders) are exposed as Pivot functions.

Consolidating Pandas accessors

Pandas accessors let you extend a pandas DataFrame or Series with custom functions. We use these in MSTICPy to let you call analysis or visualization functions as methods of a DataFrame.

Most of the functions previously exposed as pandas accessors, plus some new ones, have been consolidated into two main accessors.

- df.mp - contains all of the transformation functions like base64 decoding, ioc searching, etc.

- df.mp_plot - contains all of the visualization accessors (timeline, process tree, etc.)

mp accessor

- b64extract - base64/zip/gzip decoder

- build_process_tree - build process tree from events

- ioc_extract - extract observables by pattern such as IPs, URLs, etc.

- mask - obfuscate data to hide PII

- to_graph - transform to NetworkX graph

mp_plot accessor

- folium_map - plot a Folium map from IP or coordinates

- incident_graph - plot an incident graph

- matrix - plot correlation between two values

- network - plot graph/network from tabular data

- process_tree - plot process tree from process events

- timeline - plot a multi-grouped timeline of events

- timeline_duration - plot grouped start/end of event sequence

- timeline_values - plot timeline with scalar values

Example usage (note: the required parameters, if any, are not shown in these examples)

df.mp.ioc_extract(...)

df.mp.to_graph(...)

df.mp.mask(...)

df.mp_plot.timeline(...)

df.mp_plot.timeline_values(...)

df.mp_plot.process_tree(...)

df.mp_plot.network(...)

df.mp_plot.folium_map(...)

One of the benefits of using accessors is the ability to chain them into a single pandas expression (mixing with other pandas methods).

(

my_df

.mp.ioc_extract(...)

.groupby(["IoCType"])

.count()

.reset_index()

.mp_plot.timeline(...)

)

MS Sentinel workspace configuration

From MPConfigEdit you can more easily import and update your Sentinel workspace configuration.

Resolve Settings

If you have a minimal configuration (e.g., just the Workspace ID and Tenant ID) you can retrieve other values such as Subscription ID, Workspace Name and Resource Group and save them to your configuration using the Resolve Settings button.

Import Settings from URL

You can copy the URL from the Sentinel portal and paste it into the MPConfigEdit interface. It will extract and lookup the full details of the workspace to save to your settings.
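
As a rough illustration of the extraction step, the sketch below pulls the subscription ID, resource group and workspace name out of a portal URL that embeds a URL-encoded Azure resource ID. The function name `workspace_details_from_url`, the sample URL and the placeholder IDs are all assumptions made for this example; the real feature also looks up further workspace details from Azure, which this sketch does not do.

```python
import re
from typing import Dict
from urllib.parse import unquote

# Matches the resource ID of a Log Analytics workspace (case-insensitive).
_RES_ID_PATTERN = re.compile(
    r"/subscriptions/(?P<subscription_id>[^/]+)"
    r"/resourcegroups/(?P<resource_group>[^/]+)"
    r"/providers/microsoft\.operationalinsights"
    r"/workspaces/(?P<workspace_name>[^/?#]+)",
    re.IGNORECASE,
)


def workspace_details_from_url(url: str) -> Dict[str, str]:
    """Extract workspace identifiers from a URL containing an encoded resource ID."""
    match = _RES_ID_PATTERN.search(unquote(url))
    if not match:
        raise ValueError("No workspace resource ID found in URL")
    return match.groupdict()


# Made-up example URL with placeholder subscription, resource group and workspace.
sample_url = (
    "https://portal.azure.com/#blade/Microsoft_Azure_Security_Insights/MainMenuBlade/0/id/"
    "%2Fsubscriptions%2F00000000-0000-0000-0000-000000000000"
    "%2Fresourcegroups%2Fmy-rg"
    "%2Fproviders%2Fmicrosoft.operationalinsights"
    "%2Fworkspaces%2Fmy-workspace"
)
details = workspace_details_from_url(sample_url)
print(details)
```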

Expanded Sentinel API support

The functions used to implement the above functionality are also available standalone in the MicrosoftSentinel class.

from msticpy.context.azure import MicrosoftSentinel

MicrosoftSentinel.get_workspace_details_from_url(url)

MicrosoftSentinel.get_workspace_name(ws_id)

MicrosoftSentinel.get_workspace_settings(resource_id)

MicrosoftSentinel.get_workspace_settings_by_name(ws_name, sub_id, res_group)

MicrosoftSentinel.get_workspace_id(ws_name, sub_id, res_group)

MS Defender queries available in MS Sentinel QueryProvider

Since Sentinel now has the ability to import Microsoft Defender data, we've made the Defender queries usable from the MS Sentinel provider.

qry_prov = mp.QueryProvider("MSSentinel")

qry_prov.MDE.list_host_processes(host_name="my_host")

This is not specific to Defender queries but is a more generic functionality that allows us to share compatible queries between different QueryProviders. Many of the MS Defender queries are also now available as Pivot functions.

Microsoft Sentinel QueryProvider changes

There are several changes specific to the MS Sentinel query provider:

- The MS Sentinel provider now supports a timeout parameter, allowing you to lengthen or shorten the default.

qry_prov.MDE.list_host_processes(host_name="myhost", timeout=600)

- You can set other options supported by Kqlmagic when initializing the provider.

qry_prov = mp.QueryProvider("MSSentinel", cloud="government")

- You can specify a workspace name as a parameter when connecting instead of creating a WorkSpaceConfig instance or supplying a connection string. To use the Default workspace supply "Default" as the workspace name.

qry_prov.connect(workspace="MyWorkspace")

New queries

Several new Sentinel and MS Defender queries have been added.

| Provider | Query | Description |
|---|---|---|
| AzureNetwork | network_connections_to_url | List of network connections to a URL |
| LinuxSyslog | notable_events | Returns all syslog activity for a host |
| LinuxSyslog | summarize_events | Returns a summary of activity for a host |
| LinuxSyslog | sysmon_process_events | Get Process Events from a specified host |
| WindowsSecurity | account_change_events | Gets events related to account changes |
| WindowsSecurity | list_logon_attempts_by_ip | Retrieves the logon events for an IP Address |
| WindowsSecurity | notable_events | Get notable Windows events not returned in other queries |
| WindowsSecurity | schdld_tasks_and_services | Gets events related to scheduled tasks and services |
| WindowsSecurity | summarize_events | Summarizes the events on a host |

Over 30 MS Defender queries can now also be used in MS Sentinel workspaces if MS Defender for Endpoint/MS Defender 365 data is connected to Sentinel.

Additional Azure resource graph queries

| Provider | Query | Description |
|---|---|---|
| Sentinel | get_sentinel_workspace_for_resource_id | Retrieves Sentinel/Azure monitor workspace details by resource ID |
| Sentinel | get_sentinel_workspace_for_workspace_id | Retrieves Sentinel/Azure monitor workspace details by workspace ID |
| Sentinel | list_sentinel_workspaces_for_name | Retrieves Sentinel/Azure monitor workspace(s) details by name and resource group or subscription_id |

See the updated built-in query list in the MSTICPy documentation.

Documentation additions and improvements

The documentation for V2.0 is available at https://msticpy.readthedocs.io (previous versions are still online and can be accessed through the ReadTheDocs interface).

New and updated documents

- New MSTICPy Quickstart Guide

- Updated Installing guide

- Updated MSTICPy Package Configuration

- Updated Threat Intel Lookup documentation

- Updated Time Series analysis documentation

- New Plot Network Graph from DataFrame

- Updated Plotting Folium maps

- Updated Pivot functions

- Updated Jupyter and Sentinel

API documentation

As well as including all of the new APIs, the API documentation has been split into a module-per-page to make it easier to read and navigate.

InterSphinx

The API docs also now support "InterSphinx".

This means that references in the MSTICPy documentation to objects in other packages that support InterSphinx (e.g., Python standard library, pandas, Bokeh) have active links that will take you to the native documentation for that item.

Sample notebooks

The sample notebooks for most of these features have been updated along the same lines as the documentation. See MSTICPy Sample notebooks.

There are three new notebooks:

- ContiLeaksAnalysis

- Network Graph from DataFrame

- What's new in MSTICPy 2.0

ContiLeaks notebook added to MSTICPy Repo

We are privileged to host Thomas's awesome ContiLeaks notebook that covers investigation into attacker forum chats, including some very cool illustrations of using natural language translation in a notebook.

Thanks to Thomas Roccia (aka @fr0gger) for this!

Miscellaneous improvements

- MSTICPy network requests use a custom User Agent header so that you can identify or track requests from MSTICPy/Notebooks.

- GeoLiteLookup and the TOR and OpenPageRank providers no longer try to download data files at initialization - only on first use.

- GeoLiteLookup tries to keep a single instance if the parameters that you initialize it with are the same.

- Warnings cleanup - we've done a lot of work to clean up warnings - especially deprecation warnings.

- Moved some remaining Python unittest tests to pytest.
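
The single-instance behavior mentioned for GeoLiteLookup can be pictured with the sketch below, which reuses an existing object whenever the same initialization parameters are supplied. The class `GeoLookupDemo` and its parameters are hypothetical; this is one common way to get that behavior and is not taken from the MSTICPy source.

```python
from typing import Dict, Optional, Tuple


class GeoLookupDemo:
    """Hypothetical lookup class that reuses instances created with identical parameters."""

    # Cache of instances keyed by the parameters they were created with.
    _instances: Dict[Tuple[Optional[str], Optional[str]], "GeoLookupDemo"] = {}

    def __new__(cls, api_key: Optional[str] = None, db_folder: Optional[str] = None):
        key = (api_key, db_folder)
        if key not in cls._instances:
            cls._instances[key] = super().__new__(cls)
        return cls._instances[key]

    def __init__(self, api_key: Optional[str] = None, db_folder: Optional[str] = None):
        # __init__ runs on every call, but only re-assigns the same values.
        self.api_key = api_key
        self.db_folder = db_folder


first = GeoLookupDemo(db_folder="~/.msticpy")
second = GeoLookupDemo(db_folder="~/.msticpy")
other = GeoLookupDemo(db_folder="/tmp/geolite")

print(first is second)
print(first is other)
```

Sharing one instance avoids loading the same database into memory several times when different parts of a notebook create the lookup object independently.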

Feedback

Please reach out to us on GitHub - file an issue or start a discussion on

https://github.com/microsoft/msticpy - or email us at msticpy@microsoft.com

Appendix - Summary of changes since MSTICPy v1.0

- Sentinel Search API Support v1.8.0

- Azure authentication improvements v1.8.0

- PowerShell deobfuscator and viewer v1.7.5

- Splunk Async queries v1.7.5

- CyberReason QueryProvider @FlorianBracq v1.7.0

- IntSights TI provider @FlorianBracq v1.7.0

- Splunk queries @d3vzer0 v1.7.0

- Moved from requests to httpx @grantv9 v1.7.0

- MS Sentinel API support for watchlists and analytics v1.6.0

- Clustering, grouping and layering support for Folium @tj-senserva v1.6.0

- Process Tree visualization supports multiple data schemas v1.6.0

- VT FileBehavior, File object browser and Pivot functions v1.6.0

- Single sign-on for notebooks in AML v1.5.1

- RiskIQ TI Provider and Pivot functions @aeetos v1.5.1

- Sentinel Incident and Entity graph exploration and visualization v1.5.0

- Support for Azure Data Explorer (Kusto) QueryProvider v1.5.0

- Support for M365D QueryProvider v1.5.0

- Added GitHub actions CI pipeline and updated Azure pipelines CI v1.5.0

- Support for Azure sovereign clouds v1.4.0

- Process Tree visualization for MDE data v1.4.0

- Matrix plot visualization v1.4.0

- Enable MSTICPy use from applications and scripts v1.3.1

- Timeline duration visualization v1.3.0

- Azure Resource Graph provider @rcobb-scwx v1.2.1

- Sumologic QueryProvider @juju4 v1.2.1

- Notebook data viewer v1.2.0

- Pivot functions updates - joins for all pivot types, shortcuts v1.1.0

- GreyNoise TI Provider v1.1.0
