- Home

- Security, Compliance, and Identity

- Security, Compliance, and Identity Blog

- Using the Office 365 Management Activity API and Power BI for security analysis (Part 1)

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Publishers note: I am posting this on behalf my colleague Jon Nordstrom who is part of the Office 365 customer experience team. Myself and few other engineers thought you would all benefit from his knowledge of the Office 365 management activity API

A common question we get from organizations that move to Office 365 is, how do I get visibility into transactions that effect the information stored in Office 365. The security and compliance center and the Office 365 Management Activity API provide organizations with this visibility though detailed information with regards to user, admin, system, and policy actions and events from Office 365 and Azure Active Directory (Azure AD).

Reviewing the wealth of information available via the API and the audit logs, can be challenging though. Triaging detection results manually and categorizing related activities into groups can be difficult. The amounts of data generated from likely malicious activity can overwhelm investigators with irrelevant results. We know from experience the importance of accurate tooling, Microsoft Online Services processes petabytes of event monitoring data.

When Microsoft Online Services engineering started on the journey to view detection results as graphs we found that it enabled us to:

- evaluate intrusion detection results in context with related activity

- incorporate lower-fidelity indicators without being overwhelmed with benign results

- determine with greater fidelity when datacenter activity is likely to represent an intrusion

In this first post of our two-part series we will to help you understand how you can get the data necessary to perform a security analysis of it. The second post will focus on examples scenarios and using Power BI to visualize the data.

We are going to use Azure Cosmos DB, Azure Function Apps and cloud message queues to retrieve and store information. The data stores can be removed or purged, and the solution can be disabled or removed. Azure Cosmos DB also allows for the concept of TTL to expire content if you continue to synchronize the information. The sample graphs generated in this post were created with the free version of the ZoomCharts add-in for Power BI Desktop. Depending on your need there are several other graph add-ins available such as Network Navigator Chart and Force-Directed Graph. If you already have a method for obtaining data from the Activity API such as Azure Log Analytics , then continue to use that and access the information from Power BI which we briefly discuss in this post and go into more detail in next post.

1. Register an App with Azure Active Directory

Use this as a guide to create and register an application for making calls to the Office 365 Activity API. There are more detailed information about app registrations here. For this environment, the “Configure an X.509 certificate to enable service-to-service calls” is not necessary; it is enough to have an application with the appropriate permissions set. Don’t forget to complete the Administrator consent.

2. Setting up the infrastructure to push the Office 365 activities

The first step is to push the information you need to a store. We’ll use this sample from GitHub https://github.com/OfficeDev/O365-ActivityFeed-AzureFunction that allow you to push the past seven days of Office 365 Activity data to an instance of Azure Cosmos DB. You can limit the number of days and the workloads as needed. The code is created to run in two Azure Functions (PowerShell is currently in preview).

- Azure Function 1 places activity messages in a cloud message queue.

- Azure Function 2 picks up messages from that queue and transmits them to Azure Cosmos DB.

1. Create Function 1. (QueueEvents.ps1)

Remember to register a new Application in Azure Active Directory with adequate permissions before you take these steps.

If you don’t already have a Function App start to create one. By default, runtime is limited to 5 minutes for the functions running under the consumption plans, however, you can increase the runtime up to 10 minutes. An alternative is to use the App Consumption plan if you expect longer execution times. The consumption plans typically cover the need for retrieving the event data.

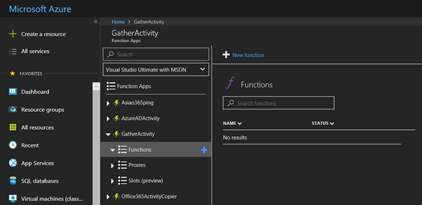

Create a New Function in the Function App

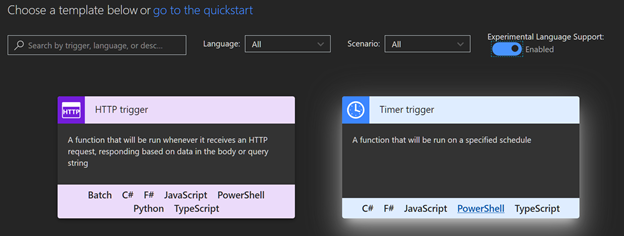

Enable experimental Language support, select Timer trigger and PowerShell.

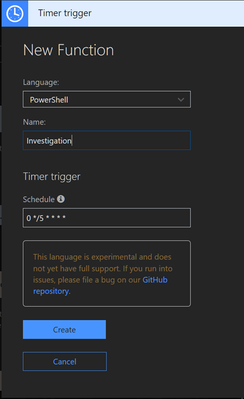

Name the new function, and select the schedule for the trigger, which determines how often the function will pull data. A workload.log file is used to keep track of time to prevent duplicate requests.

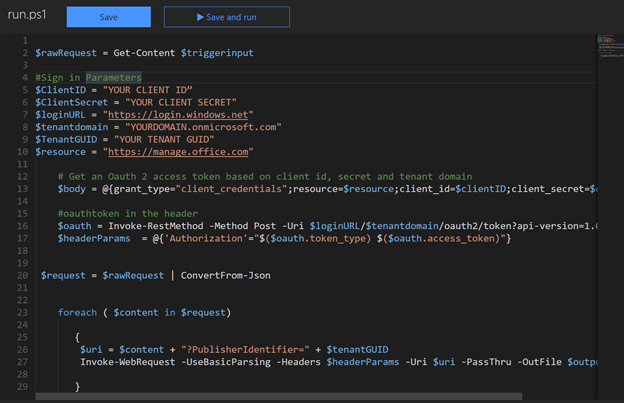

Next, paste the code for function 1., QueueEvents.ps1 to the function.

Adjust the variables for sign in based on the AAD application registered. The Tenant GUID can be found under the Endpoints in the app registration. It is listed as part of the endpoints.

We will come back to adjust the storage variables shortly as part of the integration of the storage queues. Optionally modify the included workloads or message size. Note that reducing message size will increase the number of times Azure Function 2 is run. Making message size too large (for example, > 100) may cause you to exceed the limits of the queue and reduce performance.

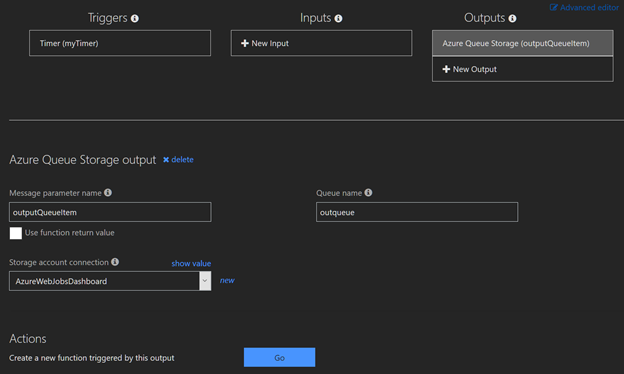

Next, select integrate and add an Azure Queue Storage output. The queue name is specified using the $storageQueue variable use ‘outQueue’with single quotes unless you changed the queue name in the output settings. Click, Show Value on the "Storage account connection" and copy the Account key value to $StorageAccountKey and the AccountName to $StorageAccountName. These steps are required to use cloud queue functionality. A regular storage queue call does not allow for multiple objects.

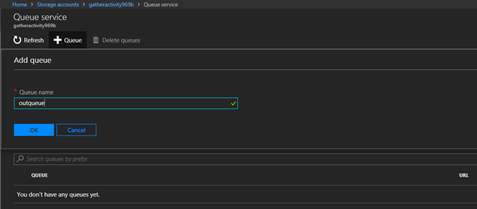

Do verify that the queue was created, there have been some instances in the testing where they weren’t. In that case create a queue manually in the associated storage account.

2. Create Function 2. (StoreEvents.ps1)

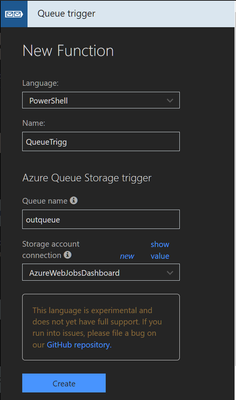

Follow these steps to create the second function, which is a Queue trigger. Create a new Function in the previously created Function App. Provide a Queue name and the storage account defined in Function 1.

Add the code StoreEvents.ps1 and the information for the auth variables. The $triggerInput variable is the message parameter name.

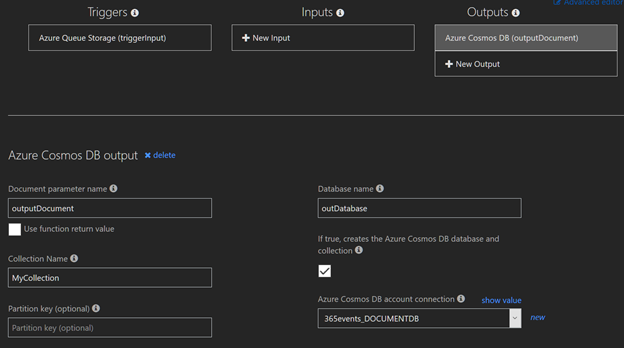

Select Integrate and add a Cosmos DB. The $outputdocument variable is based on the Document parameter name. If you change the name, you must also change the name of the variable. Click Show Value for “Azure Cosmos DB account connection” and store the account key for making requests from Power BI (an alternative is to navigate to the Cosmos DB and use a read-only key).

If you don’t already have an Azure Cosmos DB in place create a new database before creating the output. If you don’t have a Cosmos DB and collection, ensure to set the checkbox to create the Azure Cosmos DB and collection.

3. Start the functions.

If you haven’t enabled the Office 365 Activity API subscriptions that is the first step to take. There is a sample script for how to enable the subscriptions at the GitHub page it can be run from on-premises (Enablesubscription.ps1). Do enable Office 365 audit log search as well.

When configuring a start date for retrieving data, note that the maximum time span is the past 7 days based on Activity API restrictions.

If you change variable $StoredTime = Get-content $Tracker to a date in the format of "yyyy-MM-ddTHH:mm:ss.fffZ" the workloads will use it as the starting point (e.g., $StoredTime = "2018-04-12T01:34:18.702Z"). Either disable the function after it has completed a first run or replace it with Get-content $Tracker as the next scheduled run will process the same data if not adjusted.

Alternatively, you can use Azure Storage Explorer and add these files with your desired start time to the root of the Azure functions blob store:

- Audit.AzureActiveDirectory.log

- Audit.SharePoint.log

- Audit.Exchange.log

- Audit.General.log

- DLP.All.log

The only thing you need in the file is the date example "2018-03-27T11:34:18.702Z" without the quotation marks. The log files allow for continuous synch excluding content previously synchronized.

When the functions are started, invocation logs will indicate if there are any issues. Use Azure Storage Explorer to view the storage queues.

If the function for some reason breaks the workload.log files will not be updated, and the function will start from the date last stamped in the workload.log file. There may be some overlap, but the analysis tools will identify any duplicates.

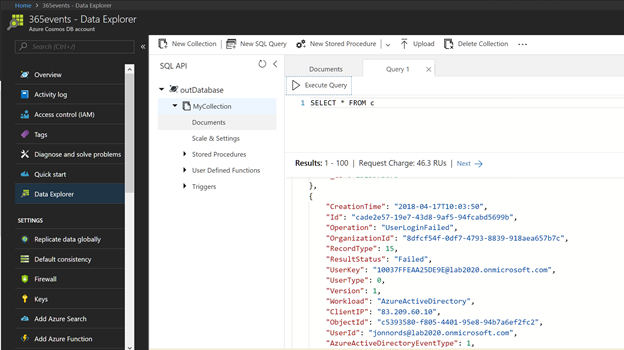

Ensure that the data is reaching your Cosmos DB instance by navigating to the Database, as shown below.

3. Open the data in Power BI

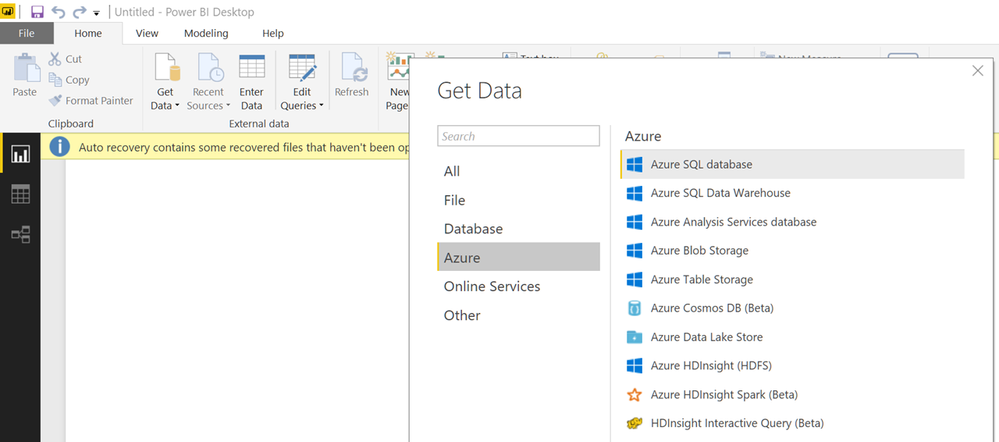

Download the Power BI Desktop client and the ZoomCharts referenced previously. Select to connect to Azure Cosmos DB in the Power BI desktop client.

Specify your connection string, etc., and optionally specify a query to limit the scope to the asset you want to investigate. Do remember that everything is case sensitive. In this case I have scoped it down to a specific site. Please see the GitHub page for more query samples. The subsequent step will require the Cosmos DB storage key that you stored as part of setting up the Cosmos DB.

Expand the columns by selecting the expand arrows. When done close and apply.

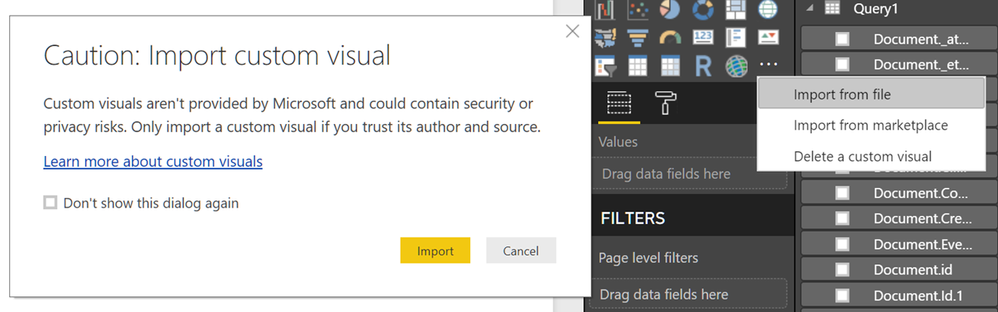

Import the Zoomchart visual if you haven’t completed that step previously.

Load the Nodes you want to review and the Measure.

Change the Measure to count to make the node sizes reflect the actual number of occurrences as an example

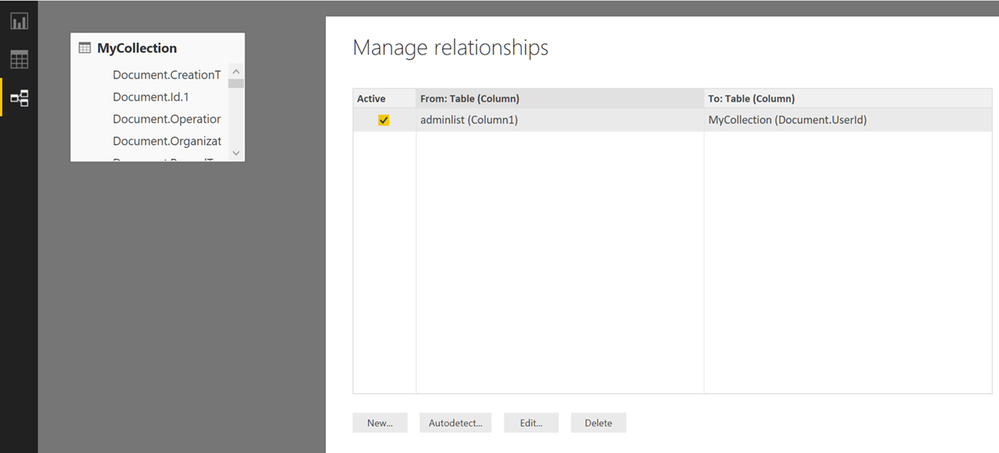

In many cases you can export csv files or directly make relationships to existing data and join it with the activity data. This is an example where we imported a few admin accounts from adminlist.csv. It could just as well be a number of files or ip addresses for which you need more information. We loaded the csv file.

In the next step we went to Relationships and joined the Adminlist with the UserID field to allow us to eliminate all accounts but those we are investigating.

In the visual level filter, we removed any account but those we are investigating

Summary

We have provided you with a starting point for how to analyze Office 365 Data using Power BI. The data can be pivoted and analyzed in many ways. Templates and continuously pulling the Activity Data can be used to quickly load data and draw conclusions from the wealth of information. Other event sources such as on premises Intrusion Detection Systems, Information classification systems or Azure Information Protection may be used to join with the activity data to improve visibility.

Our next post will provide more examples of analyzing information using Graphs.

If you have any feedback please provide it at the GitHub project page or by emailing it to cxprad@microsoft.com.

We look forward to hearing from you!

—Jon Nordstrom, senior program manager, Office 365 customer experience

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.