Public Preview of the Azure Schema Registry in Azure Event Hubs

Today, we are proud to announce the public preview of the Azure Schema Registry in Azure Event Hubs.

In many event streaming and messaging scenarios, the event or message payload contains structured data that is serialized and deserialized using a schema-driven format such as Apache Avro, or that both communicating parties want to validate against a schema document, as with JSON Schema.

For schema-driven formats, the consumer cannot deserialize the data at all unless the schema is available to it.

The Azure Schema Registry that we are offering in public preview as a feature of Event Hubs provides a central repository for schema documents for event-driven and messaging-centric applications, and enables applications to adhere to a contract and flexibly interact with each other. The Schema Registry also provides a simple governance framework for reusable schemas and defines the relationship between schemas through a grouping construct.

With schema-driven serialization frameworks like Apache Avro, externalizing serialization metadata into shared schemas can also dramatically reduce per-message overhead, because type information and field names no longer need to accompany every data set, as is the case with tagged formats such as JSON. Storing schemas alongside the events, inside the eventing infrastructure, ensures that the metadata required for serialization and deserialization is always within reach and that schemas cannot be misplaced.
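To make the overhead argument concrete, here is a minimal sketch that encodes the same record twice: once in a tagged, JSON-like form and once in a compact, schema-driven form where only the values are written. The binary layout is a hand-rolled illustration and not the Avro wire format; the class and method names are hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Illustrative only: a hand-rolled layout, NOT the Avro wire format.
final class PayloadOverheadSketch {

    // Tagged encoding: field names travel with every record, as in JSON.
    // Assumes the name contains no characters that need JSON escaping.
    static byte[] encodeTagged(String name, int age) {
        String json = "{\"name\":\"" + name + "\",\"age\":" + age + "}";
        return json.getBytes(StandardCharsets.UTF_8);
    }

    // Schema-driven encoding: only values are written, in the field order
    // that a shared schema would define (name first, then age).
    static byte[] encodeWithSchema(String name, int age) {
        byte[] nameBytes = name.getBytes(StandardCharsets.UTF_8);
        ByteBuffer buffer = ByteBuffer.allocate(2 + nameBytes.length + 4);
        buffer.putShort((short) nameBytes.length); // 2-byte length prefix
        buffer.put(nameBytes);                     // UTF-8 bytes of the name
        buffer.putInt(age);                        // fixed 4-byte integer
        return buffer.array();
    }

    public static void main(String[] args) {
        byte[] tagged = encodeTagged("John Doe", 42);
        byte[] compact = encodeWithSchema("John Doe", 42);
        System.out.println("tagged bytes:  " + tagged.length);
        System.out.println("compact bytes: " + compact.length);
    }
}
```

The compact form can only be decoded by a reader that knows the field order and types, which is exactly the information a shared schema provides.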

What do I need to know about the Azure Schema Registry support in Event Hubs?

Event Hubs adds schema registry support as a new feature of every Event Hubs Standard namespace and of Event Hubs Dedicated.

Inside the namespace, you can now manage "schema groups" alongside the Event Hub instances ("topics" in Apache Kafka® API parlance). Each of these schema groups is a separately securable repository for a set of schemas. Schema groups can be aligned with a particular application or an organizational unit.

The security boundary imposed by the grouping mechanism helps ensure that trade secrets do not inadvertently leak through metadata when a namespace is shared among multiple partners. It also allows application owners to manage schemas independently of other applications that share the same namespace.

Each schema group can hold multiple schemas, each with a version history. The compatibility enforcement feature, which can help ensure that newer schema versions are backward compatible, can be set at the group level as a policy for all schemas in the group.
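The relationship between groups, schemas, versions, and a group-level compatibility policy can be sketched with a small in-memory model. This is not the service's implementation or API: the class name, the reduction of a schema to "field name plus whether it has a default", and the compatibility rule (every newly added field must carry a default) are simplifying assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of one schema group; not the Azure API.
final class SchemaGroupSketch {

    // Group-level policy that applies to every schema in the group.
    private final boolean enforceBackwardCompatibility;

    // Schema name -> version history. Each schema is reduced to a map of
    // field name -> "does this field declare a default value?".
    private final Map<String, List<Map<String, Boolean>>> versionsByName = new HashMap<>();

    SchemaGroupSketch(boolean enforceBackwardCompatibility) {
        this.enforceBackwardCompatibility = enforceBackwardCompatibility;
    }

    // Simplified rule (an assumption): a reader using `next` can decode data
    // written with `previous` if every field that `next` adds has a default.
    static boolean isBackwardCompatible(Map<String, Boolean> previous, Map<String, Boolean> next) {
        for (Map.Entry<String, Boolean> field : next.entrySet()) {
            boolean isNewField = !previous.containsKey(field.getKey());
            if (isNewField && !field.getValue()) {
                return false;
            }
        }
        return true;
    }

    // Appends a new version and returns its 1-based version number.
    int register(String schemaName, Map<String, Boolean> schema) {
        List<Map<String, Boolean>> versions =
                versionsByName.computeIfAbsent(schemaName, name -> new ArrayList<>());
        if (enforceBackwardCompatibility && !versions.isEmpty()
                && !isBackwardCompatible(versions.get(versions.size() - 1), schema)) {
            throw new IllegalArgumentException(
                    "new version of " + schemaName + " is not backward compatible");
        }
        versions.add(schema);
        return versions.size();
    }

    public static void main(String[] args) {
        SchemaGroupSketch group = new SchemaGroupSketch(true);
        System.out.println(group.register("Employee", Map.of("name", false)));
        // Adding "age" with a default is accepted as a new version.
        System.out.println(group.register("Employee", Map.of("name", false, "age", true)));
    }
}
```

Registering a further version that adds a field without a default would be rejected while the policy is enabled, and accepted by a group created with the policy turned off.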

An Event Hubs Standard namespace can host 1 schema group and 25 schemas for this public preview. Event Hubs Dedicated can already host 1000 schema groups and 10000 schemas.

Although it is hosted inside Azure Event Hubs, the schema registry can be used universally with all Azure messaging services and with any other message or event broker.

For the public preview, we provide SDKs for Java, .NET, Python, and Node/JS that can serialize and deserialize payloads using the Apache Avro format from and to byte streams or arrays, and that can be combined with any messaging and eventing service client. We will add further serialization formats with general availability of the service.

The Java client's Apache Kafka client serializer for the Azure Schema Registry can be used in any Apache Kafka® scenario and with any Apache Kafka® based deployment or cloud service.

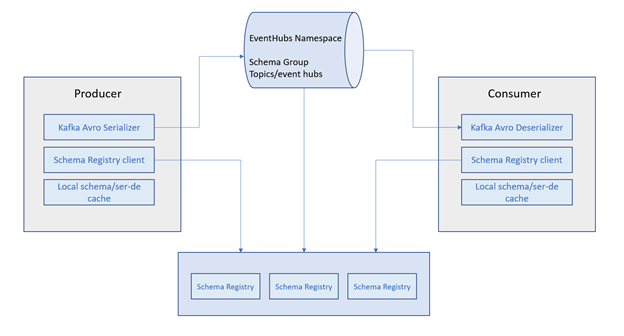

The figure below shows the information flow of the schema registry with Event Hubs using the Apache Kafka® serializer as an example:
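As a rough illustration of such an information flow, the sketch below assumes the pattern typical of registry-backed serializers: the producer registers (or looks up) the schema and attaches only its ID to each message, and the consumer resolves the ID against the registry once and caches the result. All class names, the ID format, and the in-memory registry are hypothetical stand-ins, not the Azure SDK.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-ins for illustration; not the Azure SDK types.
final class RegistryFlowSketch {

    // Stand-in for the registry service: schema text <-> generated ID.
    static final class InMemoryRegistry {
        private final Map<String, String> schemaById = new HashMap<>();
        private final Map<String, String> idBySchema = new HashMap<>();
        int lookups = 0; // counts simulated round trips made by consumers

        // Registering the same schema text twice yields the same ID.
        String register(String schemaText) {
            String existing = idBySchema.get(schemaText);
            if (existing != null) {
                return existing;
            }
            String id = "schema-" + (schemaById.size() + 1);
            schemaById.put(id, schemaText);
            idBySchema.put(schemaText, id);
            return id;
        }

        String fetch(String id) {
            lookups++;
            String schema = schemaById.get(id);
            if (schema == null) {
                throw new IllegalStateException("unknown schema id: " + id);
            }
            return schema;
        }
    }

    // What travels through the broker: the schema ID plus the encoded payload.
    static final class Message {
        final String schemaId;
        final byte[] payload;

        Message(String schemaId, byte[] payload) {
            this.schemaId = schemaId;
            this.payload = payload;
        }
    }

    // Consumer side: resolve the schema by ID, caching it after the first fetch.
    static final class Consumer {
        private final InMemoryRegistry registry;
        private final Map<String, String> cache = new HashMap<>();

        Consumer(InMemoryRegistry registry) {
            this.registry = registry;
        }

        String resolveSchema(Message message) {
            return cache.computeIfAbsent(message.schemaId, registry::fetch);
        }
    }

    public static void main(String[] args) {
        InMemoryRegistry registry = new InMemoryRegistry();
        String id = registry.register("{\"type\":\"record\",\"name\":\"Employee\"}");
        Consumer consumer = new Consumer(registry);
        consumer.resolveSchema(new Message(id, new byte[] {1}));
        consumer.resolveSchema(new Message(id, new byte[] {2}));
        System.out.println("registry lookups: " + registry.lookups);
    }
}
```

Because messages carry only the ID, the registry is contacted once per schema rather than once per message.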

How does the Azure Schema Registry integrate with my application?

First, you need to create an Event Hubs namespace, create a schema group, and seed the group with a schema. This can either be done through the Azure portal or through a command line client, but the producer client can also dynamically submit a schema. These steps are explained in detail in our documentation overview.

If you use Apache Kafka® with the Java client today and you are already using a schema-registry backed serializer, you will find that it's trivial to switch to the Azure Schema Registry's Avro serializer just by modifying the client configuration:

    props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,
        com.microsoft.azure.schemaregistry.kafka.avro.KafkaAvroSerializer.class);
    props.put("schema.registry.url", azureSchemaRegistryEndpoint);
    props.put("schema.registry.credential", azureIdentityTokenCredential);

You can then use the familiar, strongly typed serialization experience for sending records, and schemas will be registered and made available to the consumers as you expect:

    KafkaProducer<String, Employee> producer =
        new KafkaProducer<String, Employee>(props);
    producer.send(new ProducerRecord<>(topic, key, new Employee(name)));

The Avro serializers for all Azure SDK languages implement the same base class or pattern that is used for serialization throughout the SDK and can therefore be used with all Azure SDK clients that already have or will offer object serialization extensibility.

The serializer interface turns structured data into a byte stream (or byte array) and back. It can therefore be used anywhere and with any framework or service. Here is a snippet that uses the serializer with automatic schema registration in C#:

    // `client` is assumed to be a SchemaRegistryClient created earlier.
    var employee = new Employee { Age = 42, Name = "John Doe" };
    string groupName = "<schema_group_name>";

    using var memoryStream = new MemoryStream();
    var serializer = new SchemaRegistryAvroObjectSerializer(
        client,
        groupName,
        new SchemaRegistryAvroObjectSerializerOptions { AutoRegisterSchemas = true });
    serializer.Serialize(memoryStream, employee, typeof(Employee), CancellationToken.None);

Open Standards

Even though schema registries are increasingly popular, there has so far been no open and vendor-neutral standard for a lightweight interface optimized for this use case.

The most popular registry for Apache Kafka® is external to the Kafka project and vendor-proprietary. Even though it still appears to be open source, the vendor has switched from the Apache license to a restrictive license that dramatically limits who can touch the code and who can run the registry in a managed cloud environment. Cloud customers are locked hard into that vendor's cloud offering, in spite of the open-source "community" façade.

Just as with our commitments to open messaging and eventing standards like AMQP, MQTT, JMS 2.0 and CloudEvents, we believe that application code that depends on a schema registry should be agile across products and environments, and that customers should be able to use the same client code and protocol even if the schema registry implementation differs.

Therefore, Microsoft submitted the interface of the Azure Schema Registry to the Cloud Native Computing Foundation's (CNCF) "CloudEvents" project in June 2020.

The purpose of the submission is joint standardization in the CloudEvents Working Group (WG), which exists to jointly develop interoperable federations of eventing infrastructure. Standardization should ensure that the same interface can be implemented by many vendors and that clients can uniformly access their "nearest" schema registry. The schema registry interface is not restricted to, or in any way dependent on, the CloudEvents specification; conversely, the CloudEvents dataschema and datacontenttype attributes already anticipate the use of a schema registry for payload encoding.

The schema registry definition in CNCF CloudEvents is expected to evolve further in the working group, and we are committed to providing an implementation that complies with the 1.0 release shortly after it becomes final.
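To illustrate how the dataschema and datacontenttype attributes can point a consumer at a registry entry, the sketch below assembles a CloudEvents 1.0 envelope in the JSON event format, with the binary payload carried in data_base64. The registry URL, the event type and source, and the "avro/binary" content type value are hypothetical choices for the example, and the JSON writer assumes values that need no escaping.

```java
import java.util.Base64;
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative envelope builder; attribute values below are hypothetical.
final class CloudEventSketch {

    static Map<String, String> buildEvent(String id, String schemaUri, byte[] payload) {
        Map<String, String> event = new LinkedHashMap<>();
        // Required CloudEvents 1.0 context attributes.
        event.put("specversion", "1.0");
        event.put("id", id);
        event.put("source", "/example/hr");                // hypothetical source
        event.put("type", "com.example.employee.created"); // hypothetical type
        // Optional attributes that describe how to decode the payload.
        event.put("datacontenttype", "avro/binary");       // assumed media type
        event.put("dataschema", schemaUri);                // points at the registry entry
        // Binary payloads are base64-encoded in the JSON event format.
        event.put("data_base64", Base64.getEncoder().encodeToString(payload));
        return event;
    }

    // Minimal JSON writer; assumes keys and values need no escaping.
    static String toJson(Map<String, String> attributes) {
        StringBuilder json = new StringBuilder("{");
        for (Map.Entry<String, String> attribute : attributes.entrySet()) {
            if (json.length() > 1) {
                json.append(',');
            }
            json.append('"').append(attribute.getKey()).append("\":\"")
                .append(attribute.getValue()).append('"');
        }
        return json.append('}').toString();
    }

    public static void main(String[] args) {
        // Hypothetical registry URL, used only for illustration.
        String schemaUri =
                "https://registry.example.com/schemagroups/hr/schemas/employee/versions/1";
        System.out.println(toJson(buildEvent("evt-1", schemaUri, new byte[] {1, 2, 3})));
    }
}
```

A consumer that receives such an event can read dataschema, fetch the referenced schema from whichever registry hosts it, and decode the payload accordingly.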

Notes:

- The Schema Registry feature is currently in preview and is available only in the Standard and Dedicated tiers, not in the Basic tier.

Next Steps

Learn more about the Azure Schema Registry in Event Hubs in the documentation.
