Questions about VAIDK, Shutter Options & External Trigger

Jan 02 2022 09:50 AM

Hi Everyone,

I see that Microsoft offers the Vision AI Dev Kit (VAIDK), which lets people run models exported from CustomVision.ai directly on the device.

My questions are:

- Many manufacturing applications require a camera that is fired by an external trigger. This limits the number of unnecessary frames captured and analyzed and improves the accuracy of the results. Is there an option to make the VAIDK take only one frame when an external trigger is provided (say, a sensor indicates that the product is in the right place)?

- The current camera has a rolling shutter, which limits its use in manufacturing, where products can be fast-moving. Typically, a global shutter sensor with image acquisition synced to an external sensor solves this issue. If there were another version of the VAIDK with built-in lighting, a global shutter and an external trigger, the range of use cases would expand significantly.

- Are there any other boards where CustomVision exported models can be run directly with hardware acceleration?

Thanks & Regards,

Jan 05 2022 12:27 PM

AFAIK, the Vision AI Dev Kit doesn't have sensors such as a motion detector for what you are trying to achieve.

I recommend you take a look at Azure Percept, which offers a newer dev kit that you could more easily customize to achieve your goal.

Jan 05 2022 09:06 PM - edited Jan 06 2022 05:00 AM

Is it possible to attach a custom MIPI camera to Azure Percept, such as an Arducam global shutter camera? Is there any document explaining the supported camera types, or does it just support the one camera it comes with for now?

Jan 06 2022 09:42 AM

Jan 10 2022 11:01 AM - edited Jan 14 2022 12:01 AM

It may be worth mentioning that Azure Percept runs a full-fledged Linux distribution to which you, as an admin, can get root access, so in theory you can build all sorts of applications that access and use the sensors. Out of the box, Azure Percept starts several Azure IoT Edge modules, such as azureeyemodule, which runs continuously and provides a local RTSP endpoint that other services can access. If your use case is only to capture a frame after an event happens, it might be sufficient to write a few lines of code that access the camera and receive one frame. Here is an example of how this can be done (and here is one showing how this library can also be used as an Azure IoT Edge module).
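To illustrate the "receive one frame after an event" idea, here is a minimal Python sketch that is not taken from the linked examples. It assumes OpenCV (`cv2`) is installed and that the RTSP endpoint is reachable from wherever the code runs. The function name `grab_single_frame`, the `open_capture` parameter and the URL in the usage comment are hypothetical placeholders; check the actual stream address on your own device.

```python
def grab_single_frame(rtsp_url, open_capture=None):
    """Open a video stream, read exactly one frame, and close the stream.

    open_capture is a hypothetical hook: any callable that returns an object
    with isOpened(), read() and release(). It defaults to cv2.VideoCapture.
    """
    if open_capture is None:
        import cv2  # assumption: OpenCV is available on the device or host
        open_capture = cv2.VideoCapture

    cap = open_capture(rtsp_url)
    try:
        if not cap.isOpened():
            raise RuntimeError(f"could not open stream: {rtsp_url}")
        ok, frame = cap.read()  # returns (success flag, image)
        if not ok:
            raise RuntimeError("stream opened, but no frame was received")
        return frame
    finally:
        # Always release the connection, even if reading failed.
        cap.release()


# Usage (placeholder URL, adjust to your device):
# frame = grab_single_frame("rtsp://<device-ip>:<port>/<stream>")
```

Calling this from whatever code handles the external trigger (for example a GPIO or PLC signal handler) would give one frame per event. Opening the stream on every call adds connection latency, so for fast-moving products it may be better to keep the capture open and only read when triggered.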

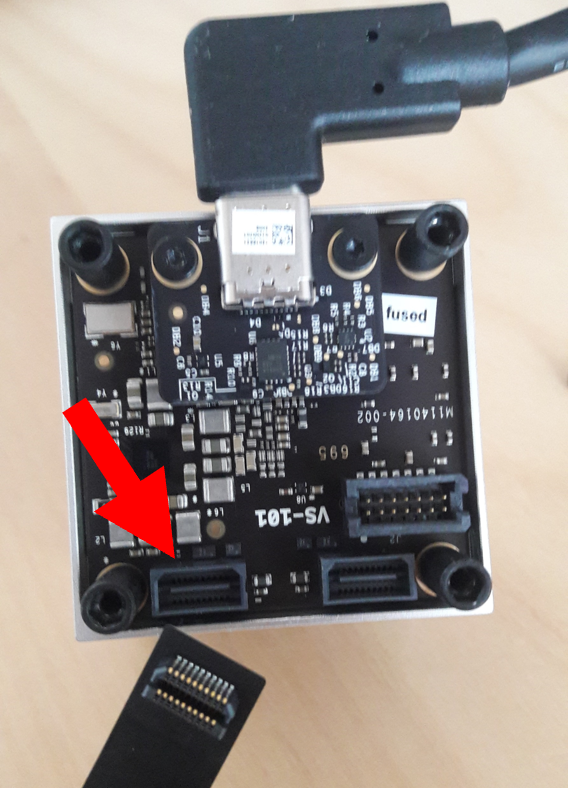

Concerning your question about a custom MIPI camera, this is how the Azure Percept vision chip looks on the back side, with one of the two camera connectors marked with an arrow:

Arducam uses a different MIPI CSI-2 connector, so you can't just plug it into the chip directly. What should work is a USB 3.0 global shutter camera. The disadvantage is the extra time needed to read data from the camera and place it as a tensor on the VPU, whereas the MIPI interface offered by the media subsystem of the Myriad chip makes it possible to process the data directly on the chip.