IoT Solutions and Azure Cosmos DB

Here are the two main reasons why Azure Cosmos DB is a great fit for IoT applications:

- Cosmos DB can ingest semi-structured data at extremely high rates.

- Cosmos DB can serve indexed queries back out with extremely low latency and high availability.

For a fascinating history and in-depth technical outline of Cosmos DB, check out this blog by Dharma Shukla, Founder of Azure Cosmos DB and Technical Fellow at Microsoft.

The two primary IoT use cases for Cosmos DB are storing device telemetry and managing a device catalog. Read on to learn key details and catch this Channel 9 IoT Show episode with Andrew Liu, Program Manager, Cosmos DB team:

Storing device telemetry

The first IoT use case for Cosmos DB is storing telemetry data in a way that allows rapid access to the data for visualization, post-processing, and analytics.

XTO Energy, a subsidiary of ExxonMobil, uses Cosmos DB for this exact scenario. The company has 50,000 oil wells generating telemetry data, such as flow rate and temperature, at high throughput. With that much data arriving every second, XTO Energy uses Cosmos DB for its fast data store, managed partitioning, and automatic indexing. See the architecture diagram below showing how Cosmos DB fits in their IoT solution. Note that data comes into Azure IoT Hub. Typically, you would position a hot store (for fast, interactive queries) and a cold store behind IoT Hub. In this case, Azure Cosmos DB is used as the hot store. Read more about XTO Energy's use of Azure Cosmos DB and Azure IoT.
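
As a rough illustration of the semi-structured telemetry described above, the sketch below builds one JSON item for a single well reading. The field names (`deviceId`, `timestamp`, `flowRate`, `temperature`) and the helper `make_telemetry_item` are assumptions made for this example, not XTO Energy's actual schema. The only property Cosmos DB itself requires on an item is a string `id`.

```python
import json
import uuid
from datetime import datetime, timezone


def make_telemetry_item(device_id, flow_rate, temperature, when=None):
    """Build one telemetry reading as a JSON-serializable dict (hypothetical schema)."""
    when = when or datetime.now(timezone.utc)
    return {
        "id": str(uuid.uuid4()),        # Cosmos DB items need a string "id"
        "deviceId": device_id,          # assumed partition key property
        "timestamp": when.isoformat(),  # ISO 8601 string, easy to filter and sort
        "flowRate": flow_rate,          # units are up to the application
        "temperature": temperature,
    }


if __name__ == "__main__":
    item = make_telemetry_item("well-00042", flow_rate=12.7, temperature=81.3)
    print(json.dumps(item, indent=2))
```

Because the item is plain JSON, new measurements can be added as extra properties later without a schema migration, which is what makes this kind of store convenient for telemetry that evolves over time.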

Device catalog

A second IoT scenario that benefits from using Cosmos DB is managing a device catalog for critical processes. This is where the low latency and "always on" guarantees of Cosmos DB come in: latencies under 10 ms for both indexed reads and writes at the 99th percentile, all around the world, and 99.999% availability for multi-region writes.

Azure Cosmos DB transparently replicates your data across all the Azure regions associated with your Cosmos account. It employs multiple layers of redundancy for your data and manages partitioning for you. Learn more about Cosmos DB high availability.

A real-life example is an automobile manufacturer running an assembly line with high availability requirements. The manufacturer sends commands to the robots that build the cars. To ensure that the assembly line never stops, the manufacturer relies on Cosmos DB for extremely high availability and low latency.
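
A device catalog lookup is typically a point read: the caller already knows the item's `id` and partition key, so no query is needed. The sketch below shows that access pattern. It assumes a container object that offers `upsert_item(body)` and `read_item(item, partition_key)`, which is how the `azure-cosmos` Python SDK's container client is called. The `InMemoryContainer` class is a hypothetical stand-in so the example runs without an Azure account, and the catalog fields (`model`, `line`) are invented for illustration.

```python
class InMemoryContainer:
    """Hypothetical stand-in that mimics the two container calls used below."""

    def __init__(self):
        self._items = {}

    def upsert_item(self, body):
        # Key by (partition key value, id), like a logical partition plus item id.
        self._items[(body["deviceId"], body["id"])] = dict(body)
        return dict(body)

    def read_item(self, item, partition_key):
        # Raises KeyError when missing; a real client raises its own error type.
        return dict(self._items[(partition_key, item)])


def register_device(container, device_id, model, line):
    """Create or update the catalog entry for one device (assumed schema)."""
    return container.upsert_item(
        {"id": device_id, "deviceId": device_id, "model": model, "line": line}
    )


def get_device(container, device_id):
    """Point read: fetch a device by id and partition key, without a query."""
    return container.read_item(item=device_id, partition_key=device_id)


if __name__ == "__main__":
    catalog = InMemoryContainer()
    register_device(catalog, "robot-7", model="welder", line="line-A")
    print(get_device(catalog, "robot-7"))
```

Using the device ID as both `id` and partition key keeps every catalog lookup inside a single logical partition, which is the cheapest and fastest kind of read.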

Getting started with Cosmos DB for IoT

There are four “must-know” aspects that IoT developers need to understand to work with Cosmos DB:

- Partitioning: The first topic an IoT developer needs to understand is partitioning, which is how you optimize for performance. Cosmos DB does the heavy lifting for you, taking care of partition management and routing. What you need to do at design time is choose a partition key that makes sense for your specific scenario, because the partition key determines how Cosmos DB routes data across partitions. The device ID is generally the “natural” partition key for IoT applications. Learn more on partitioning.

- Request Units (RUs): The second topic an IoT developer needs to understand is Request Units. A Request Unit (RU) is the measure of throughput in Azure Cosmos DB, and it is the unit for both performance and cost. With RUs, you can dynamically scale throughput up and down while maintaining availability, balancing cost and performance at the same time. Provisioned RUs can also be changed through programmatic APIs for scenarios like batch jobs. Learn more about Request Units.

- Time to Live: The third topic IoT developers should understand is Time to Live, or TTL. With TTL, Azure Cosmos DB can delete items automatically from a container after a certain period of time. With the right TTL settings in Cosmos DB, and because these deletes are free, you will save 50% compared to systems that charge IOPS for deletes. Learn more about Time to Live.

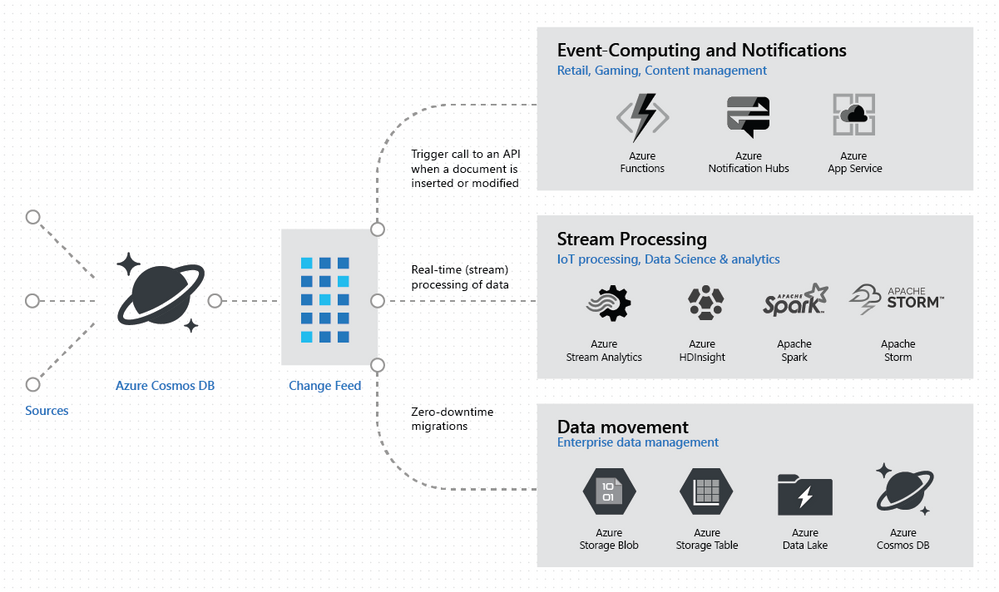

- Change Feed: The final “need to know” topic is change feed. A common design pattern in IoT is to use changes to the data to trigger additional actions. Change feed support in Azure Cosmos DB works by listening to an Azure Cosmos container for any changes and then outputting the sorted list of documents that were changed, in the order in which they were modified. Because the change feed is a single source of truth, using it correctly helps you avoid consistency issues across multiple systems. Learn more about change feed.
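
To make the first three topics more concrete, here is a minimal sketch of how they show up as settings and arithmetic. It assumes the container resource shape used by Cosmos DB (`partitionKey` with a `paths` list, and `defaultTtl` in seconds) and the per-item `ttl` property that overrides the container default. The helper names, the container name `telemetry`, the 30-day retention, and the figure of 6 RUs per write are all hypothetical; the real cost of a write depends on item size and indexing, so measure it for your own data.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60


def container_definition(name, partition_key_path, retention_days):
    """Container settings: partition key path plus a default TTL in seconds."""
    return {
        "id": name,
        "partitionKey": {"paths": [partition_key_path], "kind": "Hash"},
        "defaultTtl": retention_days * SECONDS_PER_DAY,
    }


def with_item_ttl(item, ttl_seconds):
    """Return a copy of the item with a per-item TTL overriding the default."""
    return {**item, "ttl": ttl_seconds}


def estimate_write_rus(devices, writes_per_device_per_minute, ru_per_write):
    """Rough RU/s needed for ingestion; ru_per_write is an assumed input."""
    writes_per_second = devices * writes_per_device_per_minute / 60
    return math.ceil(writes_per_second * ru_per_write)


if __name__ == "__main__":
    # Partition telemetry by device ID and expire readings after 30 days.
    print(container_definition("telemetry", "/deviceId", retention_days=30))
    # Keep one diagnostic reading for only an hour.
    print(with_item_ttl({"id": "r1", "deviceId": "well-00042"}, ttl_seconds=3600))
    # 50,000 devices, 6 readings per minute each, assumed 6 RUs per write.
    print(estimate_write_rus(50_000, 6, ru_per_write=6))
```

The estimate is only a starting point for sizing: it ignores reads, queries, and bursts, and it assumes writes are spread evenly across partition key values.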

Try a hands-on lab

Not convinced yet? Try a hands-on lab. In the lab, you will use Azure Cosmos DB to ingest streaming vehicle telemetry data as the entry point to a near real-time analytics pipeline built on Cosmos DB, Azure Functions, Event Hubs, Azure Databricks, Azure Storage, Azure Stream Analytics, Power BI, Azure Web Apps, and Logic Apps.
