Disaster Recovery in the next version of Azure Stack HCI

Disaster can hit at any time. When thinking about disaster and recovery, I think of three things:

- Be prepared

- Plan on not involving humans

- Automatic, not automated

Having a good strategy is a must. You want to be able to have resources automatically move out of one datacenter to the other and not have to rely on someone to "flip the switch" to get things to move.

As announced at Microsoft Ignite 2019, we are now going to be able to stretch Azure Stack HCI systems between multiple sites in the next version for disaster recovery purposes. This blog will give you some insight into how you can set it up along with videos showing how it works.

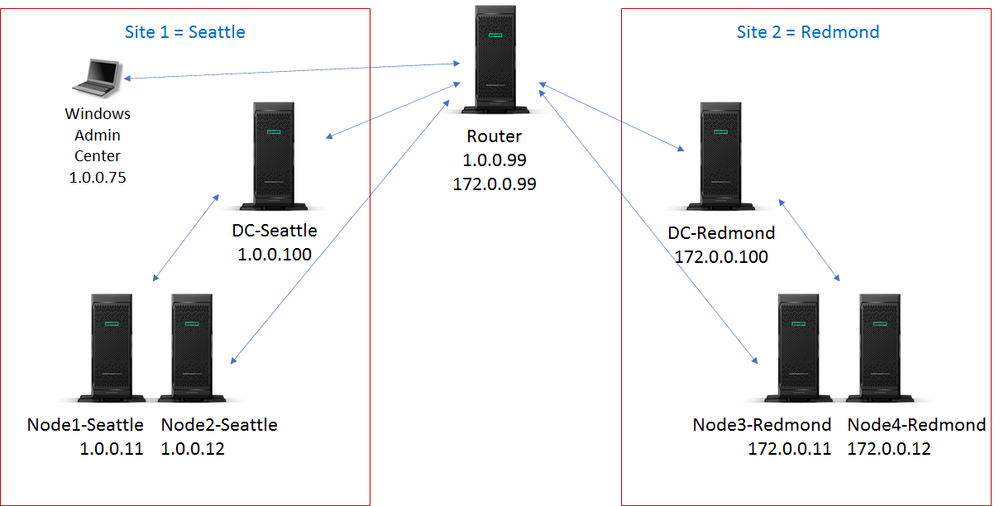

The configuration I am using to set this up is basic and similar to how many networks are configured.

As you can see from the above, I have:

- Two sites (Seattle and Redmond)

- Two nodes in each site

- A domain controller in each site

- Different IP Address schemes at each site

- Each site goes through a router to connect to the other site

When putting this scenario together, we considered multiple things. One of the main considerations was ease of configuration. In the past, setting up a Failover Cluster in a stretched environment could lead to inadvertent misconfigurations. We wanted to ensure, where we could, that those misconfigurations are averted. We will be using Storage Replica as our replication method between the sites. Everything you need from a software perspective for this scenario will be in-box.

One of the first things we looked at was the sites themselves. When the failover cluster is first created, we detect whether the nodes are in different sites. We do this with two different methods.

- Sites are configured in Active Directory

- Nodes being added have different IP Address schemes

If we see sites are configured in Active Directory, we will create a site fault domain with the name of the site and add the nodes to this fault domain. If sites are not configured but the nodes are in different IP address schemes, we will create a site fault domain named after the IP address scheme and add the nodes to it. So, taking the above configuration:

- Sites configured in Active Directory would be named SEATTLE and REDMOND

- No sites configured in Active Directory, but different IP Address schemes would be named 1.0.0.0/8 and 172.0.0.0/16

In this first video, you can see that sites are configured in Active Directory Sites and Services and that the site fault domains are created for you.
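If you want to check what the cluster detected, the fault domain cmdlets that shipped with Windows Server 2016 can list the result. This is a minimal sketch, not the detection logic itself. The node names NODE1 through NODE4 are placeholders, and the manual steps at the end are only an assumption for the case where the sites were not created for you.

```powershell
# List the site fault domains that exist in the cluster.
Get-ClusterFaultDomain -Type Site

# Show every fault domain with its parent to see which node landed in which site.
Get-ClusterFaultDomain | Sort-Object ParentName, Name | Format-Table Name, Type, ParentName

# Assumption: only needed if you have to define the sites by hand.
New-ClusterFaultDomain -Name "SEATTLE" -Type Site
New-ClusterFaultDomain -Name "REDMOND" -Type Site

# Place each node (placeholder names) under its site.
Set-ClusterFaultDomain -Name "NODE1" -Parent "SEATTLE"
Set-ClusterFaultDomain -Name "NODE2" -Parent "SEATTLE"
Set-ClusterFaultDomain -Name "NODE3" -Parent "REDMOND"
Set-ClusterFaultDomain -Name "NODE4" -Parent "REDMOND"
```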

For more information on how to set up sites within Active Directory, please refer to the following blog:

Step-by-Step: Setting Up Active Directory Sites, Subnets, and Site-Links

The next thing we did to ease the configuration burden comes into play when Storage Spaces Direct is enabled. In Windows Server 2016 and 2019, Storage Spaces Direct supported one storage pool. In the stretch scenario with the next version, we now support a pool per site. When Storage Spaces Direct is enabled, we automatically create these pools and name them after the site they are created in. For example:

- Pool for site SEATTLE and Pool for site REDMOND

- Pool for site 1.0.0.0/8 and Pool for site 172.0.0.0/16

The other thing we check for is the presence of the Storage Replica service. We go out to each of the nodes specified to see whether Storage Replica has been installed. If it is missing, we stop and tell you which node is missing it.

As an FYI, keep in mind that this is a pre-release product at the time of this writing. Stopping the Storage Spaces Direct enablement may change to more of a warning at a later date.

Once everything is in place and all services are present, you can see in this video the successful enablement of Storage Spaces Direct and the separate pools.

One thing to point out: to get this to work, we did a lot of work with the Health Service. The Health Service keeps track of the health of the entire cluster (resources, nodes, drives, pools, etc.). With multiple pools and multiple sites, it needs to keep track of all of this. I will go into this a bit more later and show you how it works in a few other scenarios with this configuration.
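You can run the same kind of check yourself before enabling Storage Spaces Direct. The sketch below is one way to do it with the Server Manager cmdlets. The node names are placeholders, and installing the feature from the loop is my assumption about what you would want to do when it is missing.

```powershell
# Assumption: replace these placeholder names with your own nodes.
$nodes = "NODE1", "NODE2", "NODE3", "NODE4"

foreach ($node in $nodes) {
    # Query the Storage Replica feature state on the remote node.
    $feature = Get-WindowsFeature -Name Storage-Replica -ComputerName $node

    if ($feature.Installed) {
        Write-Host "Storage Replica is installed on $node"
    }
    else {
        Write-Host "Storage Replica is missing on $node, installing it now"
        # The node needs a restart afterwards before the feature is usable.
        Install-WindowsFeature -Name Storage-Replica -IncludeManagementTools -ComputerName $node
    }
}
```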
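For reference, enabling Storage Spaces Direct and then listing the resulting pools can be sketched as follows. The cmdlets are the ones available since Windows Server 2016. The per-site pool names in the output are what this post describes for the next version, so treat the exact names as subject to change in the preview.

```powershell
# Enable Storage Spaces Direct on the cluster (run from one of the nodes).
Enable-ClusterStorageSpacesDirect

# List the non-primordial pools; in the stretch scenario there should be one per site.
Get-StoragePool -IsPrimordial $false |
    Format-Table FriendlyName, HealthStatus, Size, AllocatedSize
```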
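If you want to see what the Health Service is reporting, the existing storage cmdlets are a reasonable starting point. This is only a sketch using the Windows Server 2016 and 2019 cmdlets; how the output looks with multiple pools and sites in the pre-release build is an assumption on my part.

```powershell
# Show current faults (if any) that the Health Service has raised for the cluster.
Get-StorageSubSystem Cluster* | Debug-StorageSubSystem

# Show the cluster-wide health report (capacity, IOPS, latency, and so on).
Get-StorageSubSystem Cluster* | Get-StorageHealthReport
```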

Now that we have pools, we need to create virtual disks at the sites. Since this is a stretch cluster and we want replication with Storage Replica, two disks need to be created at each site (one for data and one for logs). I use New-Volume to create them, but there is a caveat. When a virtual disk is created, Failover Clustering automatically adds it to the Available Storage group. Therefore, you need to ensure Available Storage is on the node you are creating the disks on.

NOTE: We are aware of this caveat and will be working on getting a better story for this as we go along.

In the examples below, I will use:

- site names of SEATTLE and REDMOND

- pool names of Pool for site SEATTLE and Pool for site REDMOND

- NODE1 and NODE2 are in site SEATTLE

- NODE3 and NODE4 are in site REDMOND

So, let's create the disks. The first thing is to ensure the Available Storage group is on NODE1 in site SEATTLE so we can create those disks.

From Failover Cluster Manager:

- Go under Storage to Disks

- In the far-right pane, click Move Available Storage and Select Node

- Select NODE1 and OK

From PowerShell:

Move-ClusterGroup -Name "Available Storage" -Node NODE1
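To confirm where the group ended up before creating any disks, a quick check like this should do. It is a small sketch using the standard Failover Clustering cmdlet.

```powershell
# OwnerNode should show the node you are about to create the disks on.
Get-ClusterGroup -Name "Available Storage" | Format-Table Name, OwnerNode, State
```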

Now we can create the two disks for site SEATTLE. I will be creating 2-way mirrors. From PowerShell, you can run the commands:

New-Volume -FriendlyName DATA_SEATTLE -StoragePoolFriendlyName "Pool for Site SEATTLE" -FileSystem ReFS -NumberOfDataCopies 2 -ProvisioningType Fixed -ResiliencySettingName Mirror -Size 125GB

New-Volume -FriendlyName LOG_SEATTLE -StoragePoolFriendlyName "Pool for Site SEATTLE" -FileSystem ReFS -NumberOfDataCopies 2 -ProvisioningType Fixed -ResiliencySettingName Mirror -Size 10GB

Now, we must create the same drives in the other pool. Before doing so, take the current disks offline and move the Available Storage group to NODE3 in site REDMOND.

Move-ClusterGroup -Name "Available Storage" -Node NODE3

Once there, the commands would be:

New-Volume -FriendlyName DATA_REDMOND -StoragePoolFriendlyName "Pool for Site REDMOND" -FileSystem ReFS -NumberOfDataCopies 2 -ProvisioningType Fixed -ResiliencySettingName Mirror -Size 125GB

New-Volume -FriendlyName LOG_REDMOND -StoragePoolFriendlyName "Pool for Site REDMOND" -FileSystem ReFS -NumberOfDataCopies 2 -ProvisioningType Fixed -ResiliencySettingName Mirror -Size 10GB
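The four New-Volume commands differ only in the site, the role (data or log), and the size, so they can be driven from one small helper using splatting. This is a sketch of my own, not something the product provides. Get-SiteVolumeParams is a hypothetical helper name, and it assumes the pool naming shown in the commands above.

```powershell
# Hypothetical helper: builds the New-Volume parameters for one site and role.
function Get-SiteVolumeParams {
    param(
        [Parameter(Mandatory)] [string] $Site,
        [Parameter(Mandatory)] [ValidateSet("DATA", "LOG")] [string] $Role,
        [Parameter(Mandatory)] [UInt64] $Size
    )

    # Same settings as the commands above: fixed, 2-way mirror, ReFS.
    @{
        FriendlyName            = "${Role}_${Site}"
        StoragePoolFriendlyName = "Pool for Site $Site"
        FileSystem              = "ReFS"
        NumberOfDataCopies      = 2
        ProvisioningType        = "Fixed"
        ResiliencySettingName   = "Mirror"
        Size                    = $Size
    }
}

# Usage, on a cluster node after moving Available Storage to that site:
#   $dataParams = Get-SiteVolumeParams -Site "REDMOND" -Role "DATA" -Size 125GB
#   $logParams  = Get-SiteVolumeParams -Site "REDMOND" -Role "LOG"  -Size 10GB
#   New-Volume @dataParams
#   New-Volume @logParams
```

Keeping the parameters in one place makes it harder for the two sites to drift apart in size or resiliency, which matters because Storage Replica expects matching data and log volumes on both sides.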

We now have all the disks we want in the cluster. You can then move Available Storage back to NODE1 and copy your data onto the disks if you have it already. The next thing is to set them up with Storage Replica. I will not go through the steps here, but here is the link to the document on setting it up in the same cluster as well as another video here showing it being set up.
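For orientation only, the core of the Storage Replica setup is a single New-SRPartnership call. The sketch below is not a substitute for the linked document. The replication group names and every volume path are hypothetical placeholders that you would replace with the actual access paths of the DATA and LOG volumes in your cluster.

```powershell
# Assumption: all names and paths below are placeholders for illustration.
$partnership = @{
    SourceComputerName       = "NODE1"
    SourceRGName             = "RG_SEATTLE"                    # hypothetical group name
    SourceVolumeName         = "C:\ClusterStorage\DATA_SEATTLE" # hypothetical path
    SourceLogVolumeName      = "L:"                             # hypothetical path
    DestinationComputerName  = "NODE3"
    DestinationRGName        = "RG_REDMOND"                    # hypothetical group name
    DestinationVolumeName    = "D:"                             # hypothetical path
    DestinationLogVolumeName = "M:"                             # hypothetical path
}

# Create the replication partnership between the two sites.
New-SRPartnership @partnership

# Check the replication groups and the partnership afterwards.
Get-SRGroup
Get-SRPartnership
```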

Everything is all set and resources can be created. If you have not seen what it looks like, here is a video of a site failure. Everything will move over and run. Storage Replica takes care of ensuring the right disks are utilized and halting replication until the site comes back.

TIP: If you watch the tail end of the video, you will notice that the virtual machine automatically live migrates with the drives. We added this functionality back in Windows Server 2016, where the VM will chase the CSV so they are not on different sites. Again, this is not another extra you have to set up, just something we did to ease administration burdens for you.

I talked earlier about all the work we did with the Health Service. We also did a lot of work on the way we automatically pool drives together by site. The next couple of videos show how this works.

This first video is more of a brownfield scenario, meaning I have an existing cluster running and want to add nodes from a separate site. In the video, you will see that we add the nodes from the different site into the cluster and create a pool from the drives in that site.

In this video, I will show how we react to disk failures. I have removed a disk from each pool to simulate a failure. I then replace the disks with new ones. We detect which drive is in which site and pool them into the proper pool.
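To see for yourself which drives ended up in which pool after a replacement, you can list the physical disks per pool. This is a minimal sketch using the standard Storage cmdlets. The column selection is my own choice.

```powershell
# For each site pool, list its member drives along with the pool name.
Get-StoragePool -IsPrimordial $false | ForEach-Object {
    $pool = $_
    $pool | Get-PhysicalDisk |
        Select-Object @{ Name = "Pool"; Expression = { $pool.FriendlyName } },
                      FriendlyName, SerialNumber, HealthStatus, Usage
}

# Drives that are eligible for pooling but not yet claimed by a pool.
Get-PhysicalDisk -CanPool $true

# Repair or rebalance jobs that are still running after the replacement.
Get-StorageJob
```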

I hope this gives you a glimpse into what we are doing with the next version of Azure Stack HCI. This scenario should be in the public preview build when it becomes available. Get a preview of what is coming and help us test and stabilize things. You also can suggest how it could be better.

Thanks

John Marlin

Senior Program Manager

High Availability and Storage Team

Twitter: @johnmarlin_msft
