Demystifying Exchange 2010 SP1 Virtualization

It’s been a few months since we announced some major changes to our virtualization support statements for Exchange 2010 (see Announcing Enhanced Hardware Virtualization Support for Exchange 2010). Over that time, I’ve received quite a few excellent questions about particular deployment scenarios and how the changes to our support statements might affect those deployments. Given the volume of questions, it seemed like an excellent time to post some additional information and clarification.

First of all, a bit of background. When we made the changes to our support statements, our primary goal was to ensure that our customers wouldn’t get into a state where Exchange service availability might be reduced as a result of using a virtualized deployment. To put it another way, we wanted to make sure that the high level of availability that can be achieved with a physical deployment of Exchange 2010 would not in any way be reduced by deploying on a virtualization platform. Of course, we also wanted to ensure that the product remained functional, and to verify that the additional functionality provided by the virtualization stack would not create an opportunity for loss of Exchange data during normal operation.

Given these points, here’s a quick overview of what we changed and what it really means.

With Exchange 2010 SP1 (or later) deployed:

- All Exchange 2010 server roles, including Unified Messaging, are supported in a virtual machine.

- Unified Messaging virtual machines have the following special requirements:

  - Four virtual processors are required for the virtual machine. Memory should be sized using standard best practices guidance.

  - Four physical processor cores must be available for use at all times by each Unified Messaging role virtual machine. This requirement means that no processor oversubscription can be in use. This requirement affects the ability of the Unified Messaging role virtual machine to utilize physical processor resources.

- Exchange server virtual machines (including Exchange Mailbox virtual machines that are part of a DAG) may be combined with host-based failover clustering and migration technology, as long as the virtual machines are configured such that they will not save and restore state on disk when moved or taken offline. All failover activity must result in a cold boot when the virtual machine is activated on the target node. All planned migration must either result in shutdown and cold boot, or be an online migration that makes use of a technology like Hyper-V Live Migration. Hypervisor migration of virtual machines is supported by the hypervisor vendor; therefore, you must ensure that your hypervisor vendor has tested and supports migration of Exchange virtual machines. Microsoft supports Hyper-V Live Migration of these virtual machines.

Let’s go over some definitions to make sure we are all thinking about the terms in those support statements in the same way.

- Cold boot: This refers to the action of bringing up a system from a power-off state into a clean start of the operating system. No operating system state has been persisted in this case.

- Saved state: When a virtual machine is powered off, hypervisors typically have the ability to save the state of the virtual machine at that point in time so that when the machine is powered back on it will return to that state rather than going through a “cold boot” startup. “Saved state” would be the result of a “Save” operation in Hyper-V.

- Planned migration: When a system administrator initiates the move of a virtual machine from one hypervisor host to another we call this a planned migration. This could be a single migration, or a system admin could configure some automation that is responsible for moving the virtual machine on a timed basis or as a result of some other event that occurs in the system other than hardware or software failure. The key point here is that the Exchange virtual machine is operating normally and needs to be relocated for some reason. This can be done via a technology like Live Migration or vMotion. If the Exchange virtual machine or the hypervisor host where the VM is located experiences some sort of failure condition, then the result of that would not be “planned”.

Virtualizing Unified Messaging Servers

One of the changes made was the addition of support for the Unified Messaging role on Hyper-V and other supported hypervisors. As I mentioned at the beginning of this article, we did want to ensure that any changes we made to our support statement resulted in the product remaining fully functional and providing the best possible service to our users. As such, we require Exchange Server 2010 SP1 to be deployed for UM support. The reason for this is quite straightforward. The UM role is dependent on a media component provided by the Microsoft Lync team. Our partners in Lync did some work prior to the release of Exchange 2010 SP1 to enable high quality real-time audio processing in a virtual deployment, and in the SP1 release of Exchange 2010 we integrated those changes into the UM role. Once that was accomplished, we did some additional testing to ensure that user experience would be as optimal as possible and modified our support statement.

As you’ll notice, we do have specific requirements around CPU configuration for virtual machines (and hypervisor host machines) where UM is being run. This is additional insurance against poor user experience (which would show up as poor voice quality).
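As a rough illustration, the CPU requirements can be written down as a simple check. This is a minimal sketch in Python, not a Microsoft tool: the `VM` and `Host` classes and the `um_cpu_issues` function are hypothetical names introduced here. It assumes a literal reading of the support statement (exactly four virtual processors per UM virtual machine) and a conservative interpretation of "no processor oversubscription" (the virtual processors assigned on a host that runs UM must not exceed its physical cores).

```python
from dataclasses import dataclass, field
from typing import List

# From the support statement: four virtual processors per UM virtual machine.
UM_REQUIRED_VCPUS = 4


@dataclass
class VM:
    """Hypothetical description of a virtual machine on a host."""
    name: str
    vcpus: int
    is_um: bool = False  # True if the VM runs the Unified Messaging role


@dataclass
class Host:
    """Hypothetical description of a hypervisor host."""
    physical_cores: int
    vms: List[VM] = field(default_factory=list)


def um_cpu_issues(host: Host) -> List[str]:
    """Return a list of problems; an empty list means the checks passed."""
    issues: List[str] = []
    um_vms = [vm for vm in host.vms if vm.is_um]
    if not um_vms:
        # The special CPU requirements only apply where UM is being run.
        return issues

    for vm in um_vms:
        if vm.vcpus != UM_REQUIRED_VCPUS:
            issues.append(
                f"{vm.name}: {vm.vcpus} virtual processors, "
                f"expected {UM_REQUIRED_VCPUS}"
            )

    # Assumption: oversubscription means more virtual processors assigned
    # on the host than there are physical cores.
    total_vcpus = sum(vm.vcpus for vm in host.vms)
    if total_vcpus > host.physical_cores:
        issues.append(
            f"host: {total_vcpus} virtual processors assigned to "
            f"{host.physical_cores} physical cores (oversubscribed)"
        )
    return issues
```

For example, a host with eight physical cores running one four-processor UM virtual machine and one four-processor Mailbox virtual machine passes both checks, while adding a third virtual machine to that host would be reported as oversubscription.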

Host-based Failover Clustering & Migration

Much of the confusion around the changed support statement stems from the details of combining host-based failover clustering & migration technology with Exchange 2010 DAGs. The guidance here is really quite simple.

First, let’s talk about whether we support third-party migration technology (like VMware’s vMotion). Microsoft can’t make “support” statements for the integration of 3rd-party hypervisor products using these technologies with Exchange 2010, as these technologies are not part of the Server Virtualization Validation Program (SVVP) which covers the other aspects of our support for 3rd-party hypervisors. We make a generic statement here about support, but in addition you need to ensure that your hypervisor vendor supports the combination of their migration/clustering technology with Exchange 2010. To put it as simply as possible: if your hypervisor vendor supports their migration technology with Exchange 2010, then we support Exchange 2010 with their migration technology.

Second, let’s talk about how we define host-based failover clustering. This refers to any sort of technology that provides automatic ability to react to host-level failures and start affected VMs on alternate servers. Use of this technology is absolutely supported within the provided support statement given that in a failure scenario, the VM will be coming up from a cold boot on the alternate host. We want to ensure that the VM will never come up from saved state that is persisted on disk, as it will be “stale” relative to the rest of the DAG members.

Third, when it comes to migration technology in the support statement, we are talking about any sort of technology that allows a planned move of a VM from one host machine to another. Additionally, this could be an automated move that occurs as part of resource load balancing (but is not related to a failure in the system). Migrations are absolutely supported as long as the VMs never come up from saved state that is persisted on disk. This means that technology that moves a VM by transporting the state and VM memory over the network with no perceived downtime is supported for use with Exchange 2010. Note that a 3rd-party hypervisor vendor must provide support for the migration technology, while Microsoft will provide support for Exchange when used in this configuration. In the case of Microsoft Hyper-V, this would mean that Live Migration is supported, but Quick Migration is not.
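The rule running through these three points can be reduced to a single question: how does the VM come up on the target host? The following Python sketch is only an illustration of that reasoning. The `Activation` enum, the `is_supported_for_exchange` function, and the example table are hypothetical names introduced here, and the sketch deliberately ignores the separate requirement that a 3rd-party hypervisor vendor supports its own migration technology.

```python
from enum import Enum


class Activation(Enum):
    """How a VM is brought up on the target host (hypothetical model)."""
    COLD_BOOT = "cold boot"
    LIVE_MEMORY_TRANSFER = "state and memory moved over the network"
    SAVED_STATE_ON_DISK = "restored from saved state persisted on disk"


def is_supported_for_exchange(activation: Activation) -> bool:
    """Supported unless the VM comes up from saved state on disk.

    A saved state is "stale" relative to the rest of the DAG members,
    so it is the one case the support statement rules out.
    """
    return activation is not Activation.SAVED_STATE_ON_DISK


# The Hyper-V cases discussed in this article, mapped onto the model.
HYPER_V_EXAMPLES = {
    "Live Migration": Activation.LIVE_MEMORY_TRANSFER,
    "Quick Migration": Activation.SAVED_STATE_ON_DISK,
    "Host failover followed by cold boot": Activation.COLD_BOOT,
}

for name, activation in HYPER_V_EXAMPLES.items():
    verdict = "supported" if is_supported_for_exchange(activation) else "not supported"
    print(f"{name}: {verdict}")
```

Modeling the activation method rather than the product name keeps the check independent of any particular hypervisor: a vendor feature fits whichever category describes how the VM actually resumes.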

With Hyper-V, it’s important to be aware that the default behavior when selecting the “Move” operation on a VM is actually to perform a Quick Migration. To stay in a supported state with Exchange 2010 SP1 DAG members, it’s critical that you adjust this behavior as shown in the VM settings below (the settings displayed here represent how you should deploy with Hyper-V):

Figure 1: The correct Hyper-V virtual machine behavior for Database Availability Group members

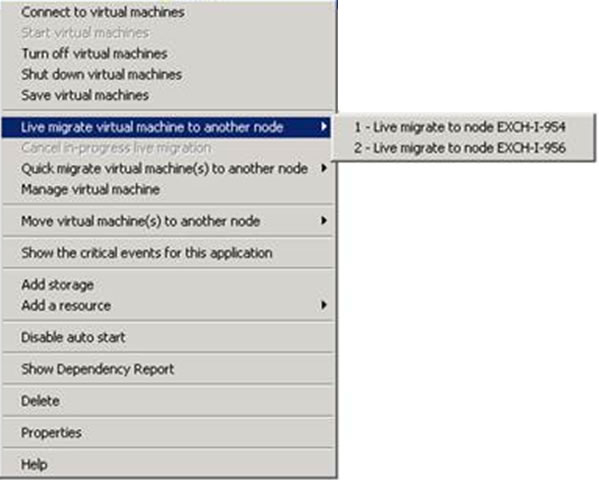

Let’s review. In Hyper-V, Live Migration is supported for DAG members, but Quick Migration is not. Visually, this means that this is supported:

Figure 2: Live Migration of Database Availability Group member in Hyper-V is supported

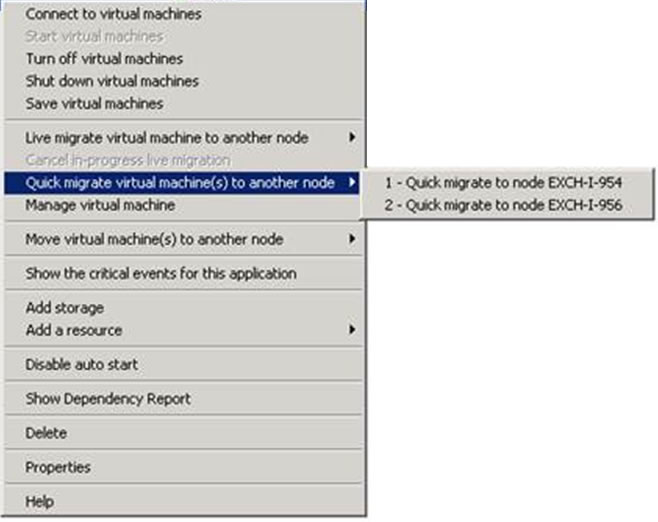

And this is not supported:

Figure 3: Quick Migration of Database Availability Group members is not supported

Hopefully this helps to clarify our support statement and guidance for the SP1 changes. We look forward to any feedback you might have!

Jeff Mealiffe
