- Home

- Microsoft 365

- Excel

- Excel is adding extra digits behind the scenes for a report

Excel is adding extra digits behind the scenes for a report

- Subscribe to RSS Feed

- Mark Discussion as New

- Mark Discussion as Read

- Pin this Discussion for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 14 2022 05:04 AM

I have an excel report that I am using as a data source for Power BI. In the excel report it is showing the project numbers as 31900004.001 but when pulling into power bi they're showing as 3190004.00999998 which is creating issues since I am trying to relate this table to other tables based on the project number. I have already tried the power BI forum and someone took a look at the data and stated the issue is in excel. Can you please help?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 14 2022 05:46 AM

@dailyjm The project numbers you see in Excel seem to be real numbers with many decimals, but formatted to show only three decimals. If you need to pull these into Power BI as numbers with exactly three decimals, you could round them in Excel first. But you can also do the rounding in Power BI by transforming the numbers column to numbers rounded to three decimals.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 14 2022 05:49 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 14 2022 05:59 AM - edited Apr 14 2022 06:00 AM

@dailyjm Don't really follow. Where do the project numbers come from to begin with?

Can you upload a screenshot showing the number with e.g. three decimals and what's included in the formula bar. For example like this:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 14 2022 06:13 AM

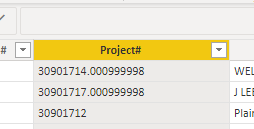

The data is coming from an .xls extract from an Oracle based reporting tool. I've tried to change to text & csv to pull in but all end results are always the same. Out of roughly 50,000 projects there are around 5,000 or so that are doing this. Sure, see below:

As you can see in the following screenshot there are many different types of project numbers that have varying decimal places which won't permit me to just round up in Power BI. The below are valid project numbers

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 14 2022 06:24 AM

@dailyjm Okay! Thought I could easily fix it, but I can't. Sorry.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 14 2022 06:28 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 14 2022 07:16 AM - edited Apr 14 2022 08:58 AM

@dailyjm wrote: ``The data is coming from an .xls extract from an Oracle based reporting tool. I've tried to change to text & csv to pull in but all end results are always the same. ``

As we see in your examples, not all project IDs are numeric; for example, one is 188724-47.LS.

Consequently, the project IDs should be entered as text.

Do not open the csv or txt file directly in Excel.

Instead, save the file to a folder, click on the Excel icon to open Excel, not the file, then import the csv/txt file into Excel using the Text Import Wizard. In Excel 2010, I click Data > From Text and select the file.

In the Text Import Wizard, work your way through the 3 dialog boxes. First, choose the method of column separation (probably delimited). Click Next. Then, select the column separator (probably comma). Click Next. Here is where the real magic is done. Select the column with the project IDs, and select Text under "Column data format". Finally, click Finish.

PS.... I am not familiar with the transfer of data from Oracle to Excel and from Excel to Power BI. If the Oracle project IDs are coded as text, perhaps Oracle can create the Excel file directly without using a csv/txt file. And that case, perhaps Oracle would format the project IDs as text. I don't know.

-----

Possible TMI.... The reason that the number 31900004.001 appears as 31900004.000999998 is because the latter is the correct decimal representation (rounded to 17 significant digits) of the internal binary approximation of the original value.

Usually, we see it as 31900004.001 because Excel displays only up to 15 significant digits, rounded.

I am (pleasantly) surprised that Power BI displays 17 significant digits.

FYI, the exact decimal representation of the binary approximation of 31900004.001 is 31900004.0009999,983012676239013671875 .

(I use period for the decimal point and comma to demarcate the first 15 significant digits.)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 14 2022 10:31 AM

@Joe User Thank you for your feedback. This method did help to remove extra digits but it also removed some of the digits that I needed. So now a lot of the ones that are .001 are no longer including the decimals. It's weird because it did not wipe out all decimals, only some.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 14 2022 10:49 AM

@dailyjm wrote: ``it did not wipe out all decimals, only some.``

I cannot imagine any reasonable way that could happen. (An example of an "unreasonable" way is an event macro that changes the data. Not likely!)

If you can attach a csv/txt file and resulting Excel file that can be used to demonstrate the problem, I could take a look at it.

(We only need the product IDs in the csv/txt and Excel files.)

Otherwise, the only thing that I can suggest is for you to use formulas of the form =ISTEXT(A1) to confirm that all of the product IDs are, in fact, text after you import the csv/txt file in the manner that I described.

But even if some of the product IDs are not text, I cannot imagine how only some of their "decimal fraction" could be dropped in the process.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 14 2022 11:04 AM

Final XLSX outcome after following your instructions above: https://1drv.ms/x/s!AnS3coMvk-0jgbFQ-00Y5zb-g43C_g?e=E18QYr

Original File received from business :

https://1drv.ms/x/s!AnS3coMvk-0jgbFPhtSGvWrowBVBEg?e=qKgG9b

XLSX that I opened and resaved as a CSV file

https://1drv.ms/u/s!AnS3coMvk-0jgbFRi3S14IUQ_6EOww?e=8BAaMD

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 14 2022 01:02 PM - edited Apr 14 2022 03:44 PM

I'm afraid those attachments are not very useful to me.

First, you provide files with more than 50,000 rows of data. I have no idea which rows create problems for you. At a minimum, you could tell me which rows to look at.

Second, all of the files that you provide are xlsx files. I thought the problem arises when you take a csv or txt file created by Oracle, and you open or import the csv file into Excel.

If I understand your process correctly, I would need the initial csv file and the resulting Excel file.

But perhaps I misunderstand the process. Perhaps you start with the "orig" xlsx file from Oracle, save it as csv, then (following my instructions) import the csv file into an xlsx file.

All of your problems might be remedied if you can coerce Oracle to save to a csv file directly, not to an xlsx file that you save as csv.

-----

That said, here is one observation. I cannot say for certain whether or not it is relevant to your problem, for the reasons noted above. But it might give you some insight.

Note: I will suggest a work-around after I explain the problem that I observe.

Looking at the "orig" file, consider two examples: A7 and A65.

Both cells are formatted as Text, but the date type of the cell values is numeric. Note the difference between cell format and cell value data type.

This can happen when we enter a numeric value into a cell that is initially formatted as General, then the cell format is changed to Text. Formatting alone does not change the cell value data type. So, it remains type numeric.

(Aside.... It seems incredulous that Oracle per se would do that. But let's move on....)

More importantly, A7 displays only 30900921, but its actual value is 30900921.001 in the Formula Bar. Note the difference between displayed value and actual value.

In contrast, for A65, both the displayed and actual values are 31401967.01.

If we save the "orig" file as csv, the displayed values, not the actual values, are usually written into the csv file.

Consequently, for A7, the csv file contains the value 30900921; precision is lost.

But for A65, the csv file contains the value 31401967.01; precision is not lost.

The difference in displayed values is coincidental, due to the rules for the General format. I am not aware of any explanation of those rules (sigh). But obviously, they depend on the precision of the decimal fraction. They also depend on the number of significant digits.

-----

Suggested work-arounds....

The best work-around is to coerce Oracle to save as csv directly. Presumably, the results will be consistent, with no loss in precision.

Alternatively, do the following.

1. Open the "orig" xlsx file in Excel.

2. Create a new worksheet.

3. In the new worksheet, enter A4:A50116 in the Name Box (upper-left) to select the range.

4. Type (do not press Enter) the formula ='Source Data'!a4 & "" , then press ctrl+Enter (not ctrl+shift+Enter) to copy the formula down the column.

5. Save the new worksheet as csv.

Now you can follow my previous instructions to import, not open, the saved csv file into an xlsx file.

Recall that in the third dialog box of the Import Text Wizard, we must select the first column of the imported csv file, and select Text for the column format.

That step is still necessary to prevent Excel from interpreting the text as numeric whenever possible.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 15 2022 06:19 AM

Apologies for sending the complete set of records and not just the ones that had issues. That was an oversight on my part, I was trying to fix other issues at work between sending the files over so was in a bit of a hurry. I thoroughly appreciate all of your help and time that you have devoted to this.

For the .xls files, I am only provided those from my colleague as xls files. I had then opened the file and saved as a csv and then pulled into excel using the text import option for those. Unfortunately our company uses an archaic version of Oracle right now. The reports are coming from another application that is fed the data from Oracle and just to get those reports she had been working with our IT department for months and months (sadly). If I did request csvs it would probably take another year to get those. I believe the data is an issue from those reports because another colleague mentioned he had the same issue with separate reports that he had pulled from Oracle itself.

I tried your method which did work for all of those with the extra digits but now it has cut off trailing zeros that were needed. For instance 3140339.020 has become 3140339.2 and 31300071.000 has become 31300071

I'm attempting to create a new column in BI based off of a little logic to add the extra zeros back in and combine the columns to create a new project number but it is still not matching up exactly for some reason.

But at least I'm down from 7000 entries being wrong to 4000 so that is progress! I might just tell the team that what they're doing can't be done because of the data issue.. but I do not like to give up easily and admit I can't find a solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apr 18 2022 08:52 PM - edited Apr 18 2022 08:55 PM

@dailyjm wrote: ``I tried your method which did work for all of those with the extra digits but now it has cut off trailing zeros that were needed. For instance 3140339.020 has become 3140339.2 [sic; errata: 3140339.02] and 31300071.000 has become 31300071``

You are right: my work-around is flawed in that way.

But how do you know the project ID should be 3140339.020?

My point is: if you have access to the correct data, you should use that as your "raw data".

Once you permit text like 3140339.020 to be stored as a number in Excel, it is impossible to know whether 3140339.02 should be 3140339.020 -- or 3140339.02000, for that matter (unless you know that the data is limited 3 decimal places at most).

The point is: the information is already lost.

An equivalent analogy is: suppose you use a word processor that autocorrects what it perceives to be a typo. You purposely type the sentence "is was weird that the user typed wierd", but the autocorrected result is "is was weird that the user typed weird".

It is now impossible to know how the sentence was typed initially.

Bottom line: The only way to prevent the project IDs from being changed is to enter them as text into Excel. Since Excel provides a way to do that, the problem is with your process, not with Excel.