- Subscribe to RSS Feed

- Mark Discussion as New

- Mark Discussion as Read

- Pin this Discussion for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dec 29 2021 08:32 AM

My spreadshett is 100 columns and 35000 rows. The vast majority of cells have calculated vales.

PC is

3.80 gigahertz AMD Ryzen 9 3900X 12-Core

No memory cache

64-bit ready

Multi-core (12 total)

Hyper-threaded (24 total)

Before crashes CPU never exceeds 7%

This file started life as a CSV but now runs as xlsm

Solution so far is to only recalculate 5 columns at a time - which is ridiculous!

Anyone any ideas?

- Labels:

-

Formulas and Functions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dec 29 2021 12:15 PM

100 columns X 35000 rows = 3,500,000 cells

Vast majority have calculated values, so say, what, 3,000,000?

Do the calculations begin in cell A1 and proceed through all the remaining? I.e., is there a cascade of calculations required every time any one cell changes? What ARE the dependencies? Is it necessary for all three and a half million cells to be in the same spreadsheet? What functions are part of these three million (or so) calculations?

If you could give a more complete description here--without violating any proprietary aspect of the application--maybe some realistic and practical suggestions could be forthcoming.

- Some functions are less efficient, more volatile, than others; so what functions predominate in all those myriad calculations?

- What's the general structure of the spreadsheet? what kind of dependencies exist between columns and rows? Is it all one huge database, each row representing a different entity?

- You mention that doing it five columns at a time works--albeit "ridiculous" as a solution--which suggests a general left-to-right progression in all these calculations. Is there some other logical way to break up the flow?

- Etc

I"m having a hard time even conceiving of an application or situation that would require such a great amount of computing power other than maybe astrophysics.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dec 30 2021 07:34 AM

Hello mathetes

Thanks for responding so positively.

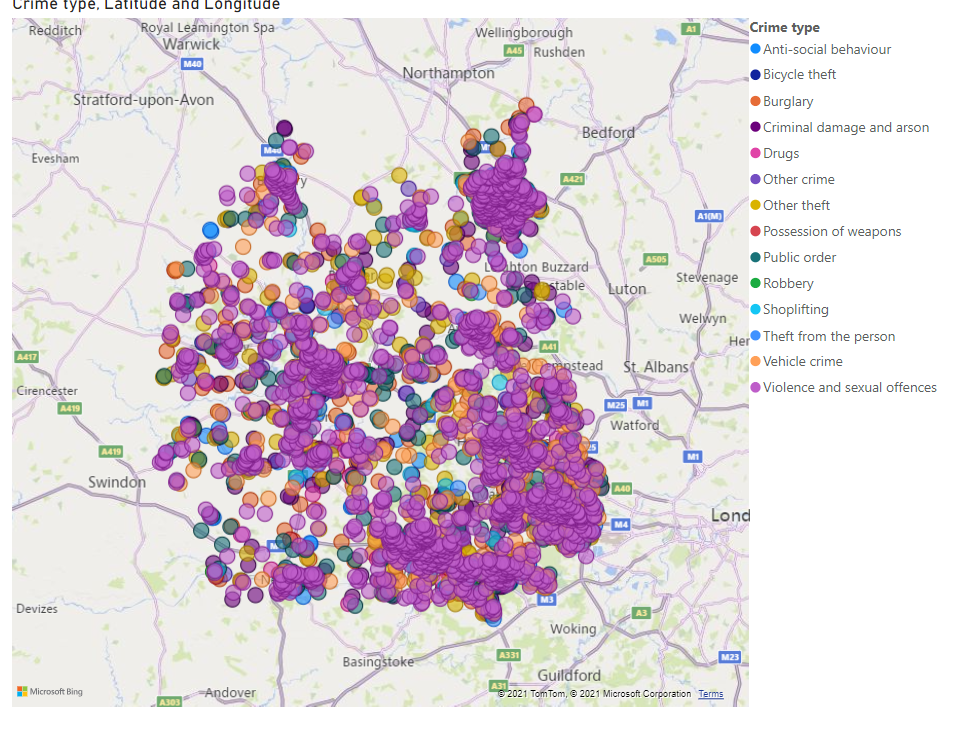

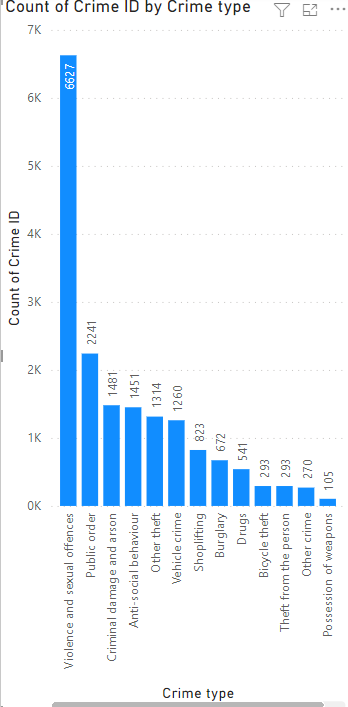

Each month the 43 Police Forces in the UK release crime data and this is classified by 10 “crime types” such as “Violence against the person” and “Robbery”.

Every single crime report has the Latitude & Longitude of its location.

Obviously my first filter is to look at each force separately.

Taking “Thames Valley Police” as an example. The data is made available, freely, is a CSV file.

Step 1: Translate the Lat/Lon into location files. In the UK we define geographic areas with LSOA Codes. Each LSOA Code covers about 1500 of the population.

I use a third party site to translate the codes and insert these into the csv file, which is then saved as a macro enabled Excel file and now begin the work.

Step 2: The number of crimes in each LSOA area varies from 0 to an unknown (so far) maximum. I need to produce an analysis of each crime type in each and every LSOA area. This second step entails translating each LSOA code into a unique format so that I can use VLOOKUP. I use a HELPER column to do this with the LSOA Codes in Column “E”.

=E2&COUNTIF($E$2:E2,E2)

Step 3: Having established that each crime is now associated with a unique modified LSOA code this step looks up the crime and reports it into the first available column. There is an issue here that I do not know how many columns will be required. Consequently I extend the range to 100 columns and then check that there is a final column with all null values. If necessary the number of columns is extended until this condition is satisfied.

=IFERROR(VLOOKUP($E2&COLUMNS($L$1:L1),$G$2:$K$18306,5,0),0)

This is extended across the 100 columns and down the thousands of rows.

It is this step that causes the crash! Just too many calculations.

I use the null result as a “0” so as to see if I need additional columns.

Step 4: Each of the 10 crime types is then allocated a column and we “COUNTIF” for each crime type.

Step 5: Once we have the results it’s relatively easy to then produce reports.

Thanks for reading.

JohnB

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dec 30 2021 09:00 AM

Thanks for that detailed description of the task. I'm hoping one or two other regular responders here--frequent and trusted contributors--will be able to jump in to help, as I suspect the tools needed might be VBA or Power Query, neither one of which is part of my own tool box.

Is it possible for you to post a small sample of the data, perhaps just for two or three of the LSOA codes?

@Peter Bartholomew @Sergei Baklan @Riny_van_Eekelen @NikolinoDE @mtarler

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dec 30 2021 09:08 AM

@mathetes @John Barlow A link to the site where the CSV file can be obtained would be helpful.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dec 30 2021 01:57 PM

Hi and thanks for taking an interest.

https://data.police.uk/data/ is the first place to start. Select one of the 43 Police Forces, I suggest Thames Valley, and take it from there.

SadlyI am illiterate when it comes to using JSON but this is an alternative way to access data.

Thanks again.

JohnB

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dec 30 2021 02:02 PM

Hello again

The attached file might give you an idea of the task.

Thanks for looking.

JohnB

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dec 30 2021 09:56 PM - edited Dec 30 2021 09:59 PM

Solution@John Barlow Thanks for the link.

I looked at the file you uploaded with your reply to @mathetes to understand what you are wanting to achieve. It seems to me that you can do the summary with a simple pivot table. No need to create all the columns that count each type of crime for every area. Attached your file with the pivot table added in Sheet2. Is that what you have in mind?

Then, I looked at the November report for Thames Valley and it's a straight-forward CSV file that could be imported into Excel without a problem. All 17459 rows were summarised into a similar pivot table as mentioned above, of just under 1400 rows (one for each LSOA and 14 columns (one for every crime type) and with grand totals for each row and column. It took less than a minute to create this, from scratch. File also attached. And the best thing is that there is not one single formula in this file!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dec 31 2021 06:23 AM

I downloaded the Thames Valley dataset, converted it to a Table, and then used the formula

= COUNTIFS(

Nov_thames_valley[LSOA code], LSOA#,

Nov_thames_valley[Crime type], type#)to provide counts of crime type against LSOA code. The calculation took 2.7s on a Core i7 laptop and the results matched @Riny_van_Eekelen's Pivot Table analysis.

Looking at your formula, the calculation I would expect to cause difficulty is

= E16000&COUNTIF($E$2:E16000,E16000)which requires 128 million calculations (this grows as the square of the number of rows).

It strikes me that you are using high-performance hardware in combination with an obsolete version of Excel. I would recommend using Microsoft 365 or Excel 2021 and taking advantage of the most recent dynamic array functions. The capabilities of Excel have changed out of all recognition since 2018.

Despite the success of a formula-based approach (it is only one formula written to a single cell) I would support Riny in identifying the Pivot Table as purpose-written for the type of analysis you wish to perform. It may be possible to use PowerQuery to download the JSON version of the data directly from the source. If it were loaded to the data model and analysed using PowerPivot, you could work with datasets that are bigger than those that will fit on a worksheet.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dec 30 2021 09:56 PM - edited Dec 30 2021 09:59 PM

Solution@John Barlow Thanks for the link.

I looked at the file you uploaded with your reply to @mathetes to understand what you are wanting to achieve. It seems to me that you can do the summary with a simple pivot table. No need to create all the columns that count each type of crime for every area. Attached your file with the pivot table added in Sheet2. Is that what you have in mind?

Then, I looked at the November report for Thames Valley and it's a straight-forward CSV file that could be imported into Excel without a problem. All 17459 rows were summarised into a similar pivot table as mentioned above, of just under 1400 rows (one for each LSOA and 14 columns (one for every crime type) and with grand totals for each row and column. It took less than a minute to create this, from scratch. File also attached. And the best thing is that there is not one single formula in this file!