Educator Developer Blog

UCL IXN Emergency Room Busyness predictions - a generalised Azure ML model for the NHS

Introduction:

Meet the UCL team behind this project, which aimed to create a generalised system for modelling any emergency department within an evaluation framework.

Ethan Wood, team leader, who worked on project coordination, the database engineering of the backend, as well as research and literature.

(https://www.linkedin.com/in/ethan-wood-london/)

Noan Le Renard, who worked on the front-end web app, as well as research and literature.

(https://www.linkedin.com/in/noanlerenard/)

Wuhao Chen, who worked on the machine learning model, data visualization, and research.

(https://www.linkedin.com/in/wchen1999/)

Through University College London's Industry Exchange Network (IXN) program, we are working as technical consultants for Microsoft, contracted to a major NHS hospital.

Our challenge:

Our project aimed to create a generalised system for modelling any emergency department within an evaluation framework. A model can be written in Python and, once uploaded, it is run against historical patient data to obtain a score for its particular metric.

The system automatically manages and scales models whilst providing a simple Python object interface to patient data. One of the provided models is a machine learning model of emergency department admissions, which is more accurate than the current admission prediction system. The framework will include a graphical user interface for interacting with the models and a RESTful API to retrieve and request data.
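The post does not show what an uploaded model looks like. As a minimal sketch, assuming a hypothetical base class (`BusynessModel`) with a single `predict` method and a hypothetical read-only `PatientData` wrapper over attendance records, a model could be as small as the one below. None of these names come from the actual system; they only illustrate the idea of a "simple Python object interface".

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class Attendance:
    """One hypothetical emergency department attendance record."""
    arrival: datetime
    departure: Optional[datetime] = None  # None while the patient is still in the department


class PatientData:
    """Hypothetical read-only object interface over historical attendance records."""

    def __init__(self, records: List[Attendance]):
        self._records = sorted(records, key=lambda r: r.arrival)

    def arrivals_between(self, start: datetime, end: datetime) -> List[Attendance]:
        """Records whose arrival falls in the half-open interval [start, end)."""
        return [r for r in self._records if start <= r.arrival < end]


class BusynessModel(ABC):
    """Hypothetical base class that an uploaded model would subclass."""

    metric = "arrivals_per_hour"

    @abstractmethod
    def predict(self, data: PatientData, at: datetime) -> float:
        """Predict the metric for the hour starting at `at`, using only data before `at`."""


class LastHourModel(BusynessModel):
    """Toy model: the next hour will see as many arrivals as the previous hour."""

    def predict(self, data: PatientData, at: datetime) -> float:
        return float(len(data.arrivals_between(at - timedelta(hours=1), at)))


t0 = datetime(2021, 1, 1, 8, 0)
records = [Attendance(t0 + timedelta(minutes=m)) for m in (5, 20, 50, 70)]
model = LastHourModel()
print(model.predict(PatientData(records), t0 + timedelta(hours=1)))  # 3.0 (arrivals at 08:05, 08:20, 08:50)
```

Keeping the model contract this small is what would let a framework run any uploaded model the same way: it only needs to call `predict` at each historical timestamp and compare the result with what actually happened.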

Our approach:

Initially, alongside forecasting emergency department busyness, the NHS were interested in the impact of third-party data on these predictions. As a result, we spent the first half of our project acquiring a substantial amount of third-party data and researching any correlations it might have with our dataset. The extrinsic factors we initially investigated were weather-related (e.g. temperature, humidity and precipitation), as our client thought they were the most likely to have a direct impact on emergency department busyness. In addition, after identifying a news outlet local to our hospital's area, we wanted to research links between its news headlines and our dataset.

However, despite arduous work and research, most of our studies were inconclusive, as their results were not statistically significant. To gain insight into our research, we met a research group working on a project similar to ours. Yet despite having more time and experience in the field, as well as a narrower scope, they too had struggled to make meaningful progress in finding correlations between health records and third-party data.
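"Statistically significant" can be made concrete with a test. The sketch below is an illustration, not the team's actual analysis: it computes a Pearson correlation between a third-party series (hypothetical daily temperatures) and daily attendances, then estimates a p-value by permutation, shuffling one series many times and counting how often chance alone produces a correlation at least as strong. The function names and the synthetic data are assumptions.

```python
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length, non-constant sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def permutation_p_value(xs, ys, n_permutations=2000, seed=0):
    """Two-sided p-value: share of shuffled pairings correlating at least as strongly."""
    rng = random.Random(seed)
    observed = abs(pearson(xs, ys))
    shuffled = list(ys)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)
        if abs(pearson(xs, shuffled)) >= observed:
            hits += 1
    # The +1 terms count the observed pairing itself, so the p-value is never exactly 0
    return (hits + 1) / (n_permutations + 1)


rng = random.Random(1)
temperature = [rng.gauss(12, 5) for _ in range(90)]   # hypothetical daily values
attendance = [rng.gauss(250, 25) for _ in range(90)]  # hypothetical, unrelated by construction
r = pearson(temperature, attendance)
p = permutation_p_value(temperature, attendance)
print(f"r = {r:.3f}, p = {p:.3f}")
```

Two caveats apply when screening many candidate factors this way. Testing several weather variables and many news keywords means some will pass a 0.05 threshold by chance alone, so a correction such as Bonferroni (dividing the threshold by the number of tests) is usually needed. A plain permutation test also assumes exchangeable observations, and daily series are autocorrelated, which can make p-values look more significant than they are.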

As a result, to accommodate the timespan that we were given, we decided to change the direction of our client deliverables, and after approval from Microsoft and the NHS, we built a feasible final product with a much larger reach and scale: a generalized system for modelling an emergency department.

This solution consists of a front end that can both upload and interact with the models, and a back end made up of a swarm of models interacting with a central database. As part of our compromise to still fulfil our original client deliverables, we also developed and trained a machine learning model for our hospital that accurately predicts busyness and integrates into our platform.

Our technical solution:

Despite redefining our deliverables, we still built a technical solution to our initial problem. We think its inclusion here is warranted because building it gave us a better grasp of the complexity of our project, which ultimately led to its change in direction.

Following our exhaustive research, we created a simple busyness model based on predicting the number of patients currently in the emergency department. Using historical data, we were able to simulate the department's state at each point in time and create historical predictions. For our front end, we built a dashboard using React to visualize the data, which we later reused as part of our final front end for a more user-friendly interaction with the model output.
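Replaying historical data to recover the department's state at a given moment comes down to counting the stays that overlap that moment. A minimal sketch, assuming each historical attendance has been reduced to a hypothetical `(arrival, departure)` pair:

```python
from datetime import datetime, timedelta


def occupancy_at(stays, t):
    """Patients in the department at time t: arrived at or before t and not yet departed."""
    return sum(1 for arrival, departure in stays if arrival <= t < departure)


def hourly_occupancy(stays, start, hours):
    """Replay the historical record into an hourly 'patients currently in the ED' series."""
    return [occupancy_at(stays, start + timedelta(hours=h)) for h in range(hours)]


t0 = datetime(2021, 3, 1, 0, 0)
stays = [  # hypothetical (arrival, departure) pairs
    (t0 + timedelta(minutes=10), t0 + timedelta(hours=3)),
    (t0 + timedelta(hours=1), t0 + timedelta(hours=2, minutes=30)),
    (t0 + timedelta(hours=1, minutes=45), t0 + timedelta(hours=5)),
]
print(hourly_occupancy(stays, t0, 6))  # [0, 2, 3, 1, 1, 0]
```

This rescans every stay for each query, which is fine for illustration. For years of records, sorting arrival and departure events and sweeping through them once would produce the whole series far more cheaply.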

To increase our chances of finding correlations with third-party data, we also scraped the website of a local news agency. Because the news website was poorly designed, we had to build a custom web scraper in R to retrieve the news headlines along with their dates and times. To convert these headlines into a form we could compare against our dataset, we used the TextRank algorithm (a graph-based ranking method derived from Google's PageRank) and the RAKE algorithm to extract the important keywords.
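The team's keyword extraction was done in R. Purely as an illustration, and written in Python for consistency with the other examples here, a simplified version of RAKE (Rapid Automatic Keyword Extraction) splits text into candidate phrases at punctuation and stopwords, scores each word as its degree divided by its frequency, and scores each phrase as the sum of its word scores. The tiny stopword list is an assumption; real implementations use much longer lists.

```python
import re
from collections import defaultdict

# A tiny illustrative stopword list; real RAKE implementations use a much longer one.
STOPWORDS = {"a", "an", "and", "as", "at", "after", "by", "for", "in", "is", "are",
             "of", "on", "the", "to", "with"}


def candidate_phrases(text):
    """Split text into candidate phrases at punctuation and at stopwords."""
    phrases = []
    for fragment in re.split(r'[.,;:!?()"]', text.lower()):
        current = []
        for word in fragment.split():
            if word in STOPWORDS:
                if current:
                    phrases.append(current)
                current = []
            else:
                current.append(word)
        if current:
            phrases.append(current)
    return phrases


def rake(text, top_n=3):
    """Return the top_n (phrase, score) pairs, highest score first."""
    phrases = candidate_phrases(text)
    freq = defaultdict(int)
    degree = defaultdict(int)
    for phrase in phrases:
        for word in phrase:
            freq[word] += 1
            degree[word] += len(phrase)  # words co-occurring in this phrase, including itself
    word_score = {w: degree[w] / freq[w] for w in freq}
    scored = {" ".join(p): sum(word_score[w] for w in p) for p in phrases}
    return sorted(scored.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


print(rake("Hospital car park closed, staff told to use park and ride"))
# [('hospital car park closed', 15.0), ('use park', 5.0), ('staff told', 4.0)]
```

The degree/frequency score favours long phrases, so a headline's longest stopword-free run tends to win. TextRank takes a different route, ranking words by running a PageRank-style iteration over a word co-occurrence graph.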

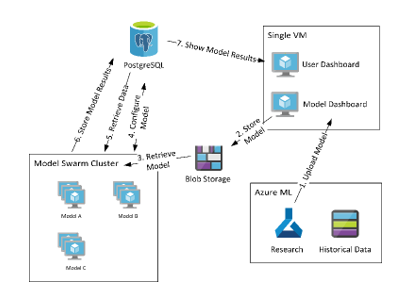

After the shift in deliverables, our generalised system for modelling any emergency department consists of a front end and a back end. The front end is a web server built with Node.js that is used to upload new models and interact with existing ones. Through a RESTful API, the user can retrieve historical and prediction data and execute operations on models, such as seeding (calculating all historical values) or evaluating (calculating all historical prediction values and comparing them with the known historical values).
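The difference between the two operations can be sketched in a few lines. The function names `seed` and `evaluate`, integer hour indices as timestamps, and mean absolute error (MAE) as the comparison metric are all assumptions for illustration, not the project's actual API.

```python
from statistics import fmean
from typing import Callable, Dict, Iterable


def seed(compute_value: Callable[[int], float], timestamps: Iterable[int]) -> Dict[int, float]:
    """Seeding: compute the model's value at every historical timestamp."""
    return {t: compute_value(t) for t in timestamps}


def evaluate(predict: Callable[[int], float], actual: Dict[int, float]) -> float:
    """Evaluating: predict every historical point and compare with the known value (MAE)."""
    return fmean(abs(predict(t) - value) for t, value in actual.items())


arrival_hours = [0, 0, 0, 1, 1, 2, 2, 2, 2, 3]  # hypothetical: hour index of each arrival


def arrivals_in_hour(t):
    return float(arrival_hours.count(t))


history = seed(arrivals_in_hour, range(4))  # {0: 3.0, 1: 2.0, 2: 4.0, 3: 1.0}


def persistence(t):
    """Toy forecaster: the next hour looks like the previous one (2.0 assumed for the first hour)."""
    return history.get(t - 1, 2.0)


print(evaluate(persistence, history))  # 1.75
```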

When a model is uploaded from our front end, it passes through blob storage to our Model Swarm Cluster, where the models are stored and the predictions are run. The final part of our back end is a PostgreSQL database in which the models' results are stored; commands are also passed to the cluster to fetch data and further configure the models. The retrieved data is then passed to the front end, where it is displayed to the user.

Fig 1. System architecture diagram
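One way to picture the PostgreSQL results store described above is a single table keyed by model, timestamp and kind of value. The schema and function names below are hypothetical, and Python's built-in `sqlite3` stands in for PostgreSQL so the sketch runs without a server. In PostgreSQL, the upsert would be written `INSERT ... ON CONFLICT (model_id, ts, kind) DO UPDATE SET value = EXCLUDED.value` instead of SQLite's `INSERT OR REPLACE`.

```python
import sqlite3

# sqlite3 stands in for PostgreSQL so this runs anywhere; the table layout is hypothetical.
SCHEMA = """
CREATE TABLE model_results (
    model_id TEXT NOT NULL,
    ts       TEXT NOT NULL,  -- ISO-8601 timestamp the value refers to
    kind     TEXT NOT NULL CHECK (kind IN ('historical', 'prediction')),
    value    REAL NOT NULL,
    PRIMARY KEY (model_id, ts, kind)
)
"""


def store_results(conn, model_id, kind, rows):
    """Upsert (timestamp, value) pairs produced by one model run."""
    conn.executemany(
        "INSERT OR REPLACE INTO model_results (model_id, ts, kind, value) VALUES (?, ?, ?, ?)",
        [(model_id, ts, kind, value) for ts, value in rows],
    )
    conn.commit()


def fetch_results(conn, model_id, kind):
    """What the front end might request: one model's series, in time order."""
    cur = conn.execute(
        "SELECT ts, value FROM model_results WHERE model_id = ? AND kind = ? ORDER BY ts",
        (model_id, kind),
    )
    return cur.fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
store_results(conn, "occupancy-v1", "prediction",
              [("2021-03-01T02:00", 14.0), ("2021-03-01T01:00", 12.0)])
print(fetch_results(conn, "occupancy-v1", "prediction"))
# [('2021-03-01T01:00', 12.0), ('2021-03-01T02:00', 14.0)]
```

Storing fixed-width ISO-8601 strings keeps lexical and chronological order identical, so `ORDER BY ts` returns the series in time order.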

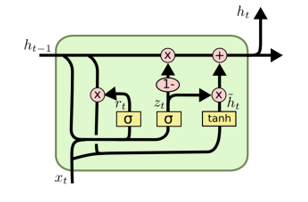

Due to the sequential nature of our dataset, we chose a common industry approach, a recurrent neural network (RNN), to predict future attendance levels. The model's input is a sequence of 252 historical time steps covering three weeks of history (12 steps per day), with 9 features at each step. The first layer consists of 16 GRU (gated recurrent unit) neurons and connects to a second GRU layer of 16 neurons. The third layer is also a GRU layer, making this a 3-stacked GRU network; its output is then fed into a dense layer of 12 neurons, which leads to an output vector of 6 values as our final prediction (cf. Fig. 2).

Fig 2. GRU network structure.
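To make the shapes concrete, here is a dependency-free forward pass through the stack described above: a 252 × 9 input window, three stacked GRU layers, a 12-unit dense layer and a 6-value output. It is a sketch with random, untrained weights. The GRU equations follow one common formulation, and several details are assumptions: the third GRU layer is given 16 units, the 12-unit layer uses ReLU, and a separate linear 6-unit layer produces the output, since the text does not say exactly how the 12-neuron layer yields 6 values. In practice a framework such as Keras would supply these layers and handle training.

```python
import math
import random


def matvec(matrix, vector):
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class GRULayer:
    """One GRU layer: update gate (z), reset gate (r) and candidate state (c)."""

    def __init__(self, input_dim, units, rng):
        def init(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.units = units
        self.W = {k: init(units, input_dim) for k in "zrc"}  # input weights
        self.U = {k: init(units, units) for k in "zrc"}      # recurrent weights
        self.b = {k: [0.0] * units for k in "zrc"}           # biases

    def _pre(self, key, x, h):
        return [a + b + c for a, b, c in zip(matvec(self.W[key], x), matvec(self.U[key], h), self.b[key])]

    def step(self, x, h):
        z = [sigmoid(v) for v in self._pre("z", x, h)]
        r = [sigmoid(v) for v in self._pre("r", x, h)]
        reset_h = [ri * hi for ri, hi in zip(r, h)]
        cand = [math.tanh(v) for v in self._pre("c", x, reset_h)]
        # Blend old state and candidate: h_t = (1 - z) * h_{t-1} + z * candidate
        return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]

    def run(self, sequence):
        """Return the hidden state after every time step."""
        h = [0.0] * self.units
        states = []
        for x in sequence:
            h = self.step(x, h)
            states.append(h)
        return states


class Dense:
    def __init__(self, input_dim, units, rng, activation=None):
        self.W = [[rng.uniform(-0.1, 0.1) for _ in range(input_dim)] for _ in range(units)]
        self.b = [0.0] * units
        self.activation = activation

    def __call__(self, x):
        out = [v + b for v, b in zip(matvec(self.W, x), self.b)]
        return [self.activation(v) for v in out] if self.activation else out


rng = random.Random(42)
STEPS, FEATURES = 252, 9  # three weeks of history, 9 features per step
gru1, gru2, gru3 = GRULayer(FEATURES, 16, rng), GRULayer(16, 16, rng), GRULayer(16, 16, rng)
hidden = Dense(16, 12, rng, activation=lambda v: max(0.0, v))  # ReLU is an assumption
output = Dense(12, 6, rng)  # assumed linear head producing the 6 predicted values


def forward(window):
    seq = gru2.run(gru1.run(window))  # stacked layers pass on the full sequence
    last = gru3.run(seq)[-1]          # the third layer's final state summarises the window
    return output(hidden(last))


window = [[rng.random() for _ in range(FEATURES)] for _ in range(STEPS)]
prediction = forward(window)
print(len(prediction))  # 6
```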

Our use of Microsoft and Azure technologies:

With our access to a vast array of medical records, one of the key requirements of our project was the security of our dataset. Thankfully, we were able to conduct our work in a contained and secure environment through Azure’s services.

This dataset was stored in a secure limited access blob storage account, which allowed both us and our client to quickly interact with the data, manipulate it, and transfer it to other Azure services. For example, for our backend, we eventually migrated our dataset into an Azure Database for PostgreSQL for the ease of interaction with both our models and frontend.

Our research, ML development and training were done in Azure Machine Learning Studio. Thanks to this secure and seamless workflow, which we could scale for any operation, we were able to research correlations between third-party data and our dataset using Jupyter Notebook and RStudio, alongside testing multiple machine learning models with different parameters.

Moreover, we employed this service's AutoML feature to automatically create, train, and validate a wide range of candidate algorithms, such as an SVM, decision tree, and random forest in order to establish a baseline for our future neural network approach.
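Whatever candidates AutoML produces, a baseline is only useful if it is scored the way a deployed forecaster would be used: fitted on the past and tested on the future. The post notes later that the hospital's current system uses a moving average, so a minimal sketch of such a baseline, evaluated walk-forward with mean absolute error, is shown here. The window length, forecast horizon, metric and data are assumptions.

```python
from statistics import fmean
from typing import List, Sequence


def moving_average_forecast(history: Sequence[float], window: int, horizon: int) -> List[float]:
    """Flat forecast: the mean of the last `window` observations, repeated `horizon` times."""
    level = fmean(history[-window:])
    return [level] * horizon


def walk_forward_mae(series: Sequence[float], window: int, horizon: int, start: int) -> float:
    """Forecast from data up to each time t only, and score against what happened next."""
    errors = []
    for t in range(start, len(series) - horizon + 1):
        forecast = moving_average_forecast(series[:t], window, horizon)
        actual = series[t:t + horizon]
        errors.extend(abs(f - a) for f, a in zip(forecast, actual))
    return fmean(errors)


series = [12, 15, 14, 18, 20, 17, 16, 21, 23, 19]  # hypothetical hourly attendance counts
print(walk_forward_mae(series, window=3, horizon=2, start=3))
```

Scoring walk-forward rather than on a random train/test split matters for time series: a random split lets the model see neighbouring future values during training, which inflates its apparent accuracy.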

It is also worth noting that Power BI was recommended to us as a tool, especially for its data visualizations and its ability to automatically generate prediction models. However, despite its integration with Azure, our dataset far exceeded the software's capacity limits, so we relied on powerful virtual machines for our research.

What we learnt:

Through our extensive use of machine learning models during the project, we hit a few roadblocks that let us learn from our mistakes and improve our models. Initially, our technical mentor at Microsoft suggested that, after cleaning our dataset, we should use Azure's AutoML feature to automate the time-consuming task of machine learning model development. However, despite running standard data sanitization procedures, our AutoML models only ever reached 56% accuracy.

This led us to ask the NHS about the quality and reliability of our dataset. Through that discussion, we learned about certain limitations of medical datasets, namely that data quality conditions such as completeness, accuracy and timeliness are prone to human error and inconsistencies. So as not to skew our prediction models, we started building machine learning models only from parameters we knew satisfied these conditions. Eventually, after trying both classical and neural network approaches to time-series forecasting, we created an RNN model whose predictions align well with real-world observations.
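Screening parameters by data quality can be partly automated. The sketch below measures two of the conditions mentioned, completeness (the share of records with a value) and timeliness (the share of records entered within a set delay of the event), and keeps only fields above a threshold. The field names, the 95% threshold and the 24-hour delay are hypothetical choices for illustration; accuracy is harder to check automatically and is not covered.

```python
from datetime import datetime, timedelta
from typing import Dict, List

Record = Dict[str, object]


def completeness(rows: List[Record], field: str) -> float:
    """Share of records in which `field` holds a value (not missing, None or empty)."""
    present = sum(1 for row in rows if row.get(field) not in (None, ""))
    return present / len(rows)


def timeliness(rows: List[Record], event_field: str, recorded_field: str,
               max_delay: timedelta = timedelta(hours=24)) -> float:
    """Share of records entered within `max_delay` of the event they describe."""
    timely = sum(1 for row in rows if row[recorded_field] - row[event_field] <= max_delay)
    return timely / len(rows)


def reliable_fields(rows: List[Record], fields: List[str], min_completeness: float = 0.95) -> List[str]:
    """Keep only the fields whose completeness meets the threshold."""
    return [f for f in fields if completeness(rows, f) >= min_completeness]


day = datetime(2021, 3, 1)
rows = [  # hypothetical records
    {"arrival": day + timedelta(hours=8), "recorded": day + timedelta(hours=9),
     "triage_category": 3, "discharge_destination": "home"},
    {"arrival": day + timedelta(hours=9), "recorded": day + timedelta(hours=57),
     "triage_category": 2, "discharge_destination": None},
    {"arrival": day + timedelta(hours=10), "recorded": day + timedelta(hours=12),
     "triage_category": 4, "discharge_destination": ""},
    {"arrival": day + timedelta(hours=11), "recorded": day + timedelta(hours=11, minutes=30),
     "triage_category": 1},
]
print(reliable_fields(rows, ["triage_category", "discharge_destination"]))  # ['triage_category']
print(timeliness(rows, "arrival", "recorded"))  # 0.75
```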

We determined that the models produced by AutoML were not well suited to our situation, as they treated our problem more as a classification task, disregarding the inherent relationship between time steps. After careful deliberation and research, we decided to focus on recurrent neural networks as our main model for time-series prediction, as they capture the underlying temporal relationships and context. At first, we used a specific type of recurrent neural network called an LSTM (long short-term memory) network (cf. Fig. 3). After testing, we switched to a GRU network (cf. Fig. 4), as it is more efficient to train: it contains only 2 gates per cell, as opposed to 3 for an LSTM. Its results were on par with those of the LSTM network while reducing the overall training time. However, recurrent neural networks overfit very easily, so we reduced the model's complexity and applied recurrent dropout to its stacked layers. Eventually, our model was able to predict the trend in attendance more accurately than the moving-average approach our hospital currently uses.

Fig 3. LSTM cell

Fig 4. GRU cell
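The efficiency argument for choosing a GRU over an LSTM can be checked by counting weights. In the classic formulations, each gate or candidate computation has an input matrix, a recurrent matrix and a bias; a GRU cell has three such blocks (update gate, reset gate, candidate) and an LSTM cell has four (input, forget and output gates, candidate). For three stacked 16-unit layers fed by 9 features (assuming, as the text does not state it, that the third layer also has 16 units):

```python
def recurrent_params(input_dim: int, units: int, blocks: int) -> int:
    """Weights for `blocks` gate/candidate computations: input matrix, recurrent matrix and bias each."""
    return blocks * (units * input_dim + units * units + units)


def gru_params(input_dim: int, units: int) -> int:
    return recurrent_params(input_dim, units, blocks=3)  # update gate, reset gate, candidate state


def lstm_params(input_dim: int, units: int) -> int:
    return recurrent_params(input_dim, units, blocks=4)  # input, forget and output gates, candidate


# Three stacked 16-unit layers fed by 9 input features: 9 -> 16 -> 16 -> 16
layer_inputs = [9, 16, 16]
gru_total = sum(gru_params(d, 16) for d in layer_inputs)
lstm_total = sum(lstm_params(d, 16) for d in layer_inputs)
print(gru_total, lstm_total, gru_total / lstm_total)  # 4416 5888 0.75
```

The GRU stack therefore has 25% fewer recurrent weights and correspondingly fewer multiply-adds per time step. Exact counts in a given library can differ slightly; for example, Keras's default GRU (`reset_after=True`) keeps an extra bias vector per block.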

At the start of the project, we faced the conundrum of choosing between a cloud service and physical hardware. One of our key requirements was that our data be kept secure and therefore stay on the Azure cloud, where it would be protected and backed up, but we thought that not being able to work on our local devices would be an inconvenience to our workflow.

Much to our surprise, our expectations were pleasantly overturned as we learned about the benefits of using a cloud service such as Azure. Being able to spin up virtual machines with interactive programming environments such as Jupyter Notebook or RStudio allowed for a seamless workflow, indistinguishable from working with our own servers, but hosted on the Azure platform and therefore accessible from any device with an internet connection.

Furthermore, being able to create a virtual environment with specific technical specifications gave us an astounding level of flexibility for any situation. As we were working within a budget, the billing information available for our account, together with cost-effective pay-per-use pricing, made it easy to forecast our quotas.

Whilst creating our generalized system, we envisioned a future in which our product would be used simultaneously by multiple hospitals. We immediately grew concerned about the efficiency of our systems and the scaling of our tools. However, using a cloud service provider lets us quickly upgrade the specifications of our Azure services to match any client's demand (especially when using Docker Swarm).

Our Future plan:

From a purely technical standpoint, we hope to enhance the generalized system by enriching its features: for example, making more data readily accessible to researchers, creating an easier-to-use API, and generally improving the efficiency and load times of our components. We also hope that more iterative rounds of user testing will help us create an easier and more efficient user experience for interacting with the models through our front end.

Regarding our generalized system for modelling an emergency department, we hope that after our hospital adopts it, it can be rolled out to other trusts around the UK as they recognize its benefits. This would allow any NHS facility with a medical dataset to model its own emergency department and accurately predict its busyness. It would also allow NHS trusts to share models between departments, since doing so is as simple as emailing a file. Fortunately for us, because we are using Azure, this increase in scale can be achieved simply and efficiently by allocating more resources to our services.

Finally, we plan to pursue the academic side of our project by trying to improve and expand our busyness algorithm. For example, we can research and iteratively test to improve its logic, efficiency and syntax. We think that using academia to our advantage can teach us about the inner intricacies of creating models, which we can use to refine the way they are interacted with and processed in our generalized system.
