Building your own educational visualisation learning platform with .Net Core & Azure

Guest blog by David Buchanan, Imperial College Microsoft Student Partner and UK finalist at the Imagine Cup 2018 with the Higher Education App

About me

I’m a second-year Mechanical Engineering student at Imperial College London. I entered the Imagine Cup this year as a solo team with my Higher Education App project. My primary interests within computing are cloud computing, web development and data engineering. I have experience in .Net Core (C#), JavaScript, HTML5, CSS3, SQL, (Apache) Gremlin and the Hadoop ecosystem. You can follow my progress on the App via my LinkedIn profile at www.linkedin.com/in/david-buchanan1.

Introduction

My submission to the Imagine Cup this year was an educational App and website which offered an avenue for anyone looking to make their own interactive educational content.

The App is based around the concept of clearly mapping out topics and subtopics into a hierarchical structure, so that the overall layout of a subject becomes much clearer than in traditional formats like textbooks. It was designed from the start to be as accessible as possible to maximise the inclusiveness of the platform.

As such, thanks to its browser-based design it is compatible with practically all devices, including mobile platforms, games consoles and single-board computers like the Raspberry Pi.

An example of a map for studying Maths

The Aim of the App

One of the key focuses in the App’s design was to make sure the learning environment was as efficient as possible. One of the biggest shortcomings of current VLE software is the inefficiency of the navigational experience, and the fragmented nature with which data is presented. Often when a user wishes to access several topics within a subject, current platforms require constant renavigation of the same index pages, or require the student to open various tabs to more easily access the various topics they wish to look at. These are highly inefficient and distracting processes which not only waste the time of the user, but also make learning an unnecessarily laborious process.

My App aims not only to improve the efficiency of the navigational experience, but also to make it natural and intuitive by integrating touch gestures alongside keyboard- and controller-based interfaces.

An example of the menu that comes up when you click on a node or its image/text

Further to the earlier point about maximising accessibility, the platform is designed so that users with motor or visual impairments have as comfortable and efficient a learning experience as possible. All the accessibility features are integrated automatically, without the need for special consideration from content creators. This is done by utilising the HTML5 speech synthesis API to read out the text highlighted on screen while the user navigates using either their keyboard or the onscreen controls. Furthermore, as the App uses vector graphics, users with partial blindness can zoom in as far as they like without either the text or graphics blurring. All controls involved in map creation and navigation are bound to appropriate keys on the user’s keyboard, which allows individuals with special input requirements to easily map their custom hardware or software solutions to all the controls.

Adoption of EdTech

The platform is incredibly relevant right now, as educational institutions increasingly look towards EdTech to make learning more accessible and relevant to students. Young people are spending increasing amounts of time on online-connected devices such as mobiles or laptops, yet truly cross-platform learning solutions are lagging in terms of quality and innovation. Of course, there are several excellent learning platforms already available, such as Khan Academy, Quizlet, and OneNote. Whilst it may initially appear that my platform is a competitor to these, I’d argue that it is a complement to these existing solutions, as it allows users to easily link to external resources, and also allows the community to rate these resources in terms of effectiveness and clarity. This is a crucial differentiator in my opinion, as existing platforms offer a wealth of invaluable knowledge, and it is currently often difficult to identify which platforms shine in particular areas.

An example of a reading list for a node, which facilitates rating of online resources

Technology

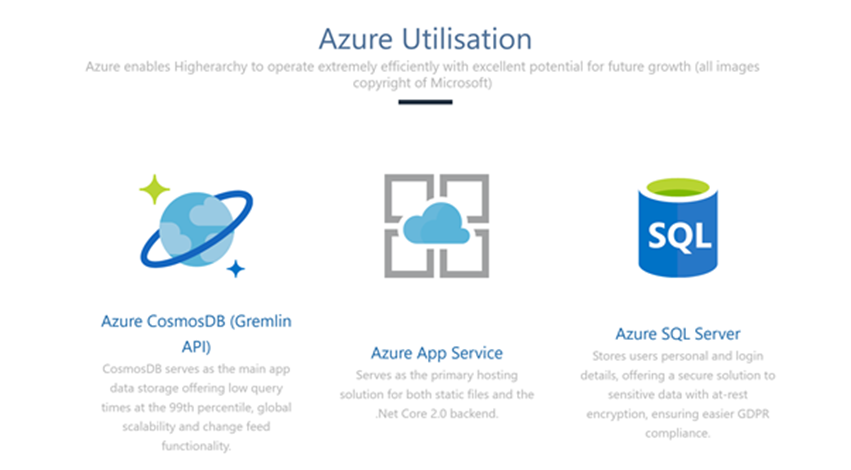

The App utilises several key Azure technologies, like the App Service and Cosmos DB, to facilitate excellent performance and massive scalability at low cost. Using the Graph API for Cosmos DB has ensured the platform can easily act as an educational social network, facilitating rich communication and connection between users. The graph data structure also makes grouping users very easy, which allows universities or schools to form private groups in which they can securely share course resources. Naturally following on from this is the topic of data security and privacy, a topic of both great relevance and concern. To maximise protection of personal data, clearly personally identifiable information like name, age, and email is stored in a separate Azure SQL database, which has the benefit of row-level security to minimise the possible impact of data breaches and, more importantly, to help prevent breaches from happening in the first place (please note GDPR defines personal data as including username and user ID, and thus the graph database does still technically include personal data).

The key Azure services used. More recently, an Azure VM has been used to host a virtualised Hadoop instance for development, which will eventually migrate to a distributed HDInsight service.

The front end of the App is written primarily in JavaScript, which is used to manipulate an SVG canvas. By utilising CSS3 transform animations, the browser can more easily utilise available GPU resources, thanks to the matrix-multiplication-based nature of transformations in a homogeneous coordinate system, resulting in smooth performance across both mobile and desktop platforms [1]. The front end also features in-browser LaTeX rendering, which greatly reduces the storage required on the backend for formulae and offers a really easy way to upload and display libraries of formulae, which is especially useful for STEM subjects.

An example of the in-app LaTeX support
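As a concrete illustration of the homogeneous-coordinate point made above about CSS transforms (an added sketch, not taken from the App's code): a 2D transform written in CSS as `matrix(a, b, c, d, tx, ty)` acts on a point (x, y) as a single 3×3 matrix product, so a chain of translations, scalings and rotations collapses into one matrix.

```latex
\begin{pmatrix} x' \\ y' \\ 1 \end{pmatrix}
=
\begin{pmatrix} a & c & t_x \\ b & d & t_y \\ 0 & 0 & 1 \end{pmatrix}
\begin{pmatrix} x \\ y \\ 1 \end{pmatrix},
\qquad
x' = a x + c y + t_x, \quad y' = b x + d y + t_y
```

For instance, `translate(10px, 20px) scale(2)` corresponds to a = d = 2, b = c = 0, t_x = 10 and t_y = 20, which maps (x, y) to (2x + 10, 2y + 20).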

The backend is written entirely in .Net Core 2.0 (C#), which has allowed seamless integration and delivery with other Azure services. Something I plan on utilising in the future, when my analytics and messaging platform is more mature, is the Cosmos DB change feed, which again integrates effortlessly into a .Net Core codebase. This has the potential to work really nicely with SignalR for real-time notifications. Another great benefit of .Net Core is the simplicity of its string interpolation and dynamic datatypes, which are crucial in dynamically generating gremlin queries for the graph database and something I’ll go into in detail later in this post.

Since the Imagine Cup final, I have added a highly scalable and extensible analytics platform based on Apache Hadoop and Apache Spark. Currently the platform collects data on time spent by users in each topic as well as the links they click on within each map and stores it in HDFS, offering a rich data set for users to analyse their learning patterns to make future learning as efficient as possible. This will eventually be integrated with the question/exam module to give feedback on which resources have been most useful in maximising exam results.

Why C# (and .Net Core) was a Great Fit

One of the biggest technical hurdles I encountered in the project was dynamically converting the deeply nested JSON used by JavaScript to generate and load the map on the front end into a series of gremlin queries that Cosmos DB could not only understand, but also use to form a fully equivalent graph. The difficulty came primarily from the fact that the JSON has no fixed structure and is very weakly typed, whereas C# is typically a strongly and statically typed language. This project therefore made great use of the dynamic datatype features of C#, which have allowed the language to evolve since C# 4.0 from a statically typed language into a gradually typed language.
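To illustrate the dynamic typing described above, here is a minimal sketch that reads a value out of JSON without declaring a matching C# class. It assumes the Newtonsoft.Json package; the class name, method name and the `nodes`/`name` layout are made up for illustration and are not the App's actual schema.

```csharp
using Newtonsoft.Json.Linq;

public static class DynamicJsonDemo
{
    // Reads the name of the first node without any predefined model class.
    public static string FirstNodeName(string json)
    {
        // JObject supports dynamic member access, so the lookups below
        // are resolved at runtime rather than checked by the compiler.
        dynamic output = JObject.Parse(json);
        return (string)output.nodes[0].name;
    }
}
```

Calling `DynamicJsonDemo.FirstNodeName("{\"nodes\":[{\"name\":\"Maths\"}]}")` returns the string `Maths`. A misspelt member name would compile fine and only surface at runtime, which is the trade-off of this approach.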

To give the exact context of the problem, when you make a hierarchical tree structure in JSON, the parent-child relationships are generally implied by the nesting of the JSON. However, as the eventual goal is to turn the JSON into a series of easily executable gremlin queries to form the graph in Cosmos DB, the JSON is inevitably going to have to be flattened, and will lose the structure that gave it those relationships. I avoided this problem by associating a unique integer ID with each node within a map, as well as a property called ParentLocalID which is, as the name implies, the ID of its parent. All nodes on the map have to have a parent, bar the root node, which does not have the property.

To map out the overall process of saving the JSON to Cosmos DB in stages:

1. Parse the JSON into a format C# (and thus .Net Core) can understand

2. Flatten the JSON into an array or list (as the data is fixed in length it may be advantageous to use an array as its iteration performance is generally better) of individual nodes, each with the associated properties that contain all the educational information (each node has a minimum of 4 properties to define its position and globally unique ID, and no maximum, though typically a well filled out node will have around 10)

3. Generate the gremlin queries by using string interpolation of the properties we have just collected from the JSON, and store them in a dictionary to be executed

4. Iterate across the entire dictionary that has just been generated, and asynchronously execute the queries against Cosmos DB

The first and second steps become much simpler than one might expect thanks to the ubiquitous Newtonsoft.Json package. It facilitates extremely simple parsing of JSON into a Newtonsoft.Json.Linq.JObject object, which makes manipulation of the children of the root member trivial. Because I have no idea in advance what structure the incoming JSON will have, I can’t easily use the library’s typed deserialization functionality, which requires some knowledge of the structure of the JSON in order to process it. The parsing function also has the bonus that LINQ functionality is enabled on the resulting data, making flattening far simpler. LINQ’s Select and ToList then enable you to easily tailor the flattening process to your dataset. Alternatively, you could utilise recursive methods to possibly make this process more computationally efficient, which is something I will be exploring in the near future [2].
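The flattening in steps 1 and 2 can be sketched with the recursive alternative mentioned above. This is an illustrative sketch rather than the App's actual code: it assumes the Newtonsoft.Json package and assumes child nodes are nested under a `children` array, and the `FlatNode` and `MapFlattener` names are hypothetical.

```csharp
using System.Collections.Generic;
using System.Linq;
using Newtonsoft.Json.Linq;

// Hypothetical flat node: a local integer ID, the parent's ID, and all other properties.
public class FlatNode
{
    public int Id { get; set; }
    public int? ParentLocalId { get; set; }   // null for the root node
    public Dictionary<string, JToken> Properties { get; } = new Dictionary<string, JToken>();
}

public static class MapFlattener
{
    // Parses the JSON and returns the nodes in depth-first order, root first.
    public static List<FlatNode> Flatten(string json)
    {
        var nodes = new List<FlatNode>();
        Visit(JObject.Parse(json), null, nodes);
        return nodes;
    }

    private static void Visit(JObject source, int? parentId, List<FlatNode> nodes)
    {
        // IDs start at 0 for the root and increase by 1 per node.
        var node = new FlatNode { Id = nodes.Count, ParentLocalId = parentId };
        nodes.Add(node);

        // Copy every property except the nested children (assumed key: "children").
        foreach (JProperty property in source.Properties())
        {
            if (property.Name != "children")
                node.Properties[property.Name] = property.Value;
        }

        // Recurse into the children, recording this node as their parent.
        if (source["children"] is JArray children)
        {
            foreach (JObject child in children.OfType<JObject>())
                Visit(child, node.Id, nodes);
        }
    }
}
```

Because each flat node carries its parent's ID, the tree can be rebuilt later even though the nesting itself has been discarded.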

The third step is made relatively straightforward, again thanks to C#’s string interpolation functionality. By placing a $ sign before a string, you can insert any variable simply by placing it in curly brackets. This is important because the query strings need to be dynamically generated with all the data we’ve collected in the last two steps. One of the key considerations is then minimising the number of gremlin queries required to add each node, link it to the map for easy graph navigation, and link it to its parent node to maintain the data structure. Also worth noting is that users are going to save the same map multiple times as they update it, so we don’t want to generate duplicate nodes within the database: having two nodes with the same unique ID would defeat the purpose of the ID being unique.
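As a small illustration of string interpolation for query building, the sketch below fills a Gremlin query from variables. The `GremlinText` class and both method names are hypothetical, and the escaping step is an added precaution that assumes backslash escapes are accepted inside single-quoted Gremlin string literals; it is not something the original code does.

```csharp
public static class GremlinText
{
    // Escapes backslashes and single quotes so a value cannot close the
    // surrounding quoted literal early (assumed escaping convention).
    public static string Escape(string value) =>
        value.Replace("\\", "\\\\").Replace("'", "\\'");

    // Builds a query that sets the Title property on the node with the given NodeID.
    public static string SetTitle(string uniqueId, string title) =>
        $"g.V().has('Node','NodeID','{Escape(uniqueId)}').property('Title','{Escape(title)}')";
}
```

For example, `GremlinText.SetTitle("abc", "Newton's laws")` produces a query in which the apostrophe is preceded by a backslash, so a title containing a quote does not break the query string.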

To prevent duplicate node generation, we can use a clever gremlin trick involving folding and coalescing. Typically adding a vertex (which represents a node in my app) in gremlin involves something along the lines of the following query (note that output.nodes[] is an array of objects of all the nodes generated from steps 1 and 2, which we are iterating by f++ from 0):
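A minimal sketch of such an unconditional query, written as a C# interpolated string. The class, method and parameter names are hypothetical; the labels and property names are borrowed from the save code later in this post.

```csharp
public static class NaiveQueries
{
    // Always adds a new vertex with four properties, then links it to the root.
    public static string AddNode(string uniqueId, string title, string description,
                                 string content, string rootId) =>
        $"g.addV('Node').property('NodeID','{uniqueId}')" +
        $".property('Title','{title}')" +
        $".property('Description','{description}')" +
        $".property('Content','{content}')" +
        $".addE('PartofMap').to(g.V('{rootId}'))";
}
```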

This generates all the nodes quite nicely each with four key properties, and links them all to the root, and thus the map, but if you perform the query twice it will simply duplicate both the vertex and edge added. Therefore we use our more refined query:
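A sketch of that refined query as a C# helper, mirroring the SaveNode query in the full listing further down. The class, method and parameter names are illustrative. Here `fold()` collects any matching vertices into a list, and `coalesce()` takes the first branch that yields a result: `unfold()` returns the existing vertex if there is one, otherwise the `addV` branch runs.

```csharp
public static class IdempotentQueries
{
    // Adds the vertex and its PartofMap edge only when no vertex with this NodeID exists.
    public static string AddNodeIfMissing(string uniqueId, string title, string description,
                                          string content, string rootId) =>
        $"g.V().has('Node','NodeID','{uniqueId}').fold().coalesce(unfold()," +
        $"addV('Node').property('NodeID','{uniqueId}')" +
        $".property('Title','{title}')" +
        $".property('Description','{description}')" +
        $".property('Content','{content}')" +
        $".addE('PartofMap').to(g.V('{rootId}')))";
}
```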

This first checks whether the database already has a Node with the same uniqueid. If it does, the rest of the query isn’t performed, so no duplicate vertex or edge is generated. The major benefit is that you don’t have to wait for a query response from the database for every single node to verify whether it already exists. The downside is that if a node already exists and has been changed in a new save, you have to follow up with a second query that updates its properties.

This is then followed by two simple queries to add the parent edge. I have split it into two queries because the first deletes all pre-existing parent links: my App is going to have functionality to change a node’s parent, and we only ever want one parent at any one time, so this approach guarantees that is maintained. You may also notice that each node has both an id and a uniqueid property. This is because the node ids are integer values that start at 0 for the root and increase by 1 each time, making them unique only within the context of each map, while the uniqueids are UUIDs generated by a UUID v4 generation script, which virtually guarantees each node can be identified globally within the database (a collision is extremely improbable but not impossible).
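For comparison, a random UUID can also be generated on the server side in C#. This is only a sketch: the post's own UUID v4 script is not shown, so `Guid.NewGuid()` is used here as a stand-in, and the `NodeIds` class name is hypothetical.

```csharp
using System;

public static class NodeIds
{
    // Returns a new GUID in the usual 36-character hyphenated form.
    public static string NewUniqueId() => Guid.NewGuid().ToString("D");
}
```

In the hyphenated form, the first character of the third group carries the UUID version, so it can be inspected if the version matters to you.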

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Azure.Documents.Client;
using Microsoft.Azure.Documents.Linq;
using Microsoft.Azure.Graphs;
using Newtonsoft.Json;

// Fragment from inside an async method. output, root and numberofnodes (assumed to be
// the total node count) come from steps 1 and 2; client and graph are the Cosmos DB
// client and graph collection, initialised elsewhere.
Dictionary<string, string> gremlinQueries = new Dictionary<string, string>();

// Iterate over all nodes and generate the queries to add to the dictionary
for (int f = 0; f < numberofnodes; f++)
{
    // Adds the node and its edge to the map, but only if the node doesn't already exist
    gremlinQueries.Add("SaveNode" + f.ToString(),
        $"g.V().has('Node','NodeID','{output.nodes[f].uniqueid}').fold().coalesce(unfold()," +
        $"addV('Node').property('NodeID','{output.nodes[f].uniqueid}')" +
        $".property('Title','{output.nodes[f].name}')" +
        $".property('Description','{output.nodes[f].description}')" +
        $".property('Content','{output.nodes[f].content}')" +
        $".addE('PartofMap').to(g.V('{root.uniqueid}')))");

    // Updates the properties, which covers the case where the node already existed
    gremlinQueries.Add("UpdateNodeProperties" + f.ToString(),
        $"g.V().has('Node','NodeID','{output.nodes[f].uniqueid}')" +
        $".property('NodeID','{output.nodes[f].uniqueid}')" +
        $".property('Title','{output.nodes[f].name}')" +
        $".property('Description','{output.nodes[f].description}')" +
        $".property('Content','{output.nodes[f].content}')");

    // The root node has no parent, so skip the parent lookup for it
    if (output.nodes[f].id != root.id)
    {
        // Search all nodes for the one whose local id matches this node's ParentLocalID
        for (int z = 0; z < numberofnodes; z++)
        {
            if (output.nodes[f].parentlocalid == output.nodes[z].id)
            {
                // Removes any previous parent links, as each node should have only one parent
                gremlinQueries.Add("RemoveDuplicateParentLinks" + f.ToString(),
                    $"g.V().has('Node','NodeID','{output.nodes[f].uniqueid}').outE('ParentNode').drop()");

                // Adds an edge from the node being iterated across to its parent node
                gremlinQueries.Add("AddParentLink" + f.ToString(),
                    $"g.V().has('Node','NodeID','{output.nodes[f].uniqueid}')" +
                    $".addE('ParentNode').to(g.V().has('Node','NodeID','{output.nodes[z].uniqueid}'))");

                // Each node has exactly one parent, so stop searching
                break;
            }
        }
    }
}

// Iterates across all members of the dictionary to execute the queries against the database
foreach (KeyValuePair<string, string> gremlinQuery in gremlinQueries)
{
    Console.WriteLine($"Running {gremlinQuery.Key}: {gremlinQuery.Value}");

    // The CreateGremlinQuery extension method lets you execute Gremlin queries
    // and iterate the results asynchronously
    IDocumentQuery<dynamic> query = client.CreateGremlinQuery<dynamic>(graph, gremlinQuery.Value);

    while (query.HasMoreResults)
    {
        foreach (dynamic result in await query.ExecuteNextAsync())
        {
            // Writes the result of the query to the console to show success
            Console.WriteLine($"\t {JsonConvert.SerializeObject(result)}");
        }
    }
}
```

Step four can be seen within the foreach loop, which iterates across the dictionary of queries and uses code from the Microsoft docs [3], linked in the references section. You can then package all of this into an async Task, and have the JSON sent via the body of an HttpPost request.
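The packaging described above might look something like the following ASP.NET Core controller. This is a sketch under several assumptions: it targets ASP.NET Core 2.x, where Newtonsoft.Json is the default JSON formatter, and the `IMapSaver` interface, the `MapsController` name and the route are all hypothetical stand-ins for the flatten, generate and execute steps.

```csharp
using System.Threading.Tasks;
using Microsoft.AspNetCore.Mvc;
using Newtonsoft.Json.Linq;

// Hypothetical abstraction over steps 2 to 4 (flatten, build queries, execute them).
public interface IMapSaver
{
    Task SaveAsync(JObject map);
}

[Route("api/maps")]
public class MapsController : Controller
{
    private readonly IMapSaver _saver;

    public MapsController(IMapSaver saver) => _saver = saver;

    // Receives the map JSON in the body of a POST request and awaits the save.
    [HttpPost]
    public async Task<IActionResult> Save([FromBody] JObject map)
    {
        // Model binding yields null when the body is missing or is not valid JSON.
        if (map == null)
            return BadRequest("Expected a JSON object in the request body.");

        await _saver.SaveAsync(map);
        return Ok();
    }
}
```

Keeping the save logic behind an interface leaves the controller small and lets the Gremlin code be swapped or tested separately.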

Some key notes to make on this process and things I have learned from development:

· Use dynamic datatypes with caution. They are extremely useful with JSON when the datatype is not explicitly stated and the structure is unknown, but as they are evaluated at runtime it is important to put appropriate security checks in place to ensure the executed code doesn’t violate the security of the application in question.

· My methods aren’t necessarily the most algorithmically efficient way to perform this process, and that is something I’m going to work on and refine; the purpose of this article is to give a good general idea of the process involved. Whilst ExpandoObjects are incredibly useful when you don’t know the model of the data you are processing in advance, they are highly memory intensive and not necessarily the best for scalability.

· It can be possible in certain circumstances to perform the first two steps regarding flattening the deeply nested JSON on the frontend rather than the backend and that may be worth looking into depending on context.

· From my testing on a free tier App Service and a 400 RU/s Cosmos DB instance, the code in question can process a map of around 50 nodes with around 10 properties each in under a second. This is a perfectly acceptable save time for a single-user use case, but it may not necessarily scale, and load tests are something I plan to follow up on before general release. Thankfully both the Azure App Service and Azure Cosmos DB offer turnkey scalability, so that is always an easy contingency option.

· Whilst I have placed some emphasis on computational efficiency within this article, once the initial JSON has been sent to the server (typically an extremely quick process, with the JSON file size typically being only around 500 kB even with rich node data on over 100 nodes), no further user input is needed to ensure the save is completed, as the rest of the processing is performed asynchronously on the server side. This means that even if a save should take 10 minutes to complete, the user can leave the webpage almost immediately after clicking save and there should be no issues.

Plans for the Future

Whilst the platform is still in a closed Alpha stage, I’m aiming for it to see a full release by the end of 2018. The App is always going to be free by default, with premium membership options coming later down the line when the platform matures and the user-facing multi-tenant analytics module is completed. The main obstacle to a public release at the current time is the lack of a fully featured question/exam module. It’s also worth noting the platform has been designed with the intention of eventually implementing a recommendation system through machine learning, which could suggest subjects or topics you may be interested in based on what you have looked at previously, but I would like to finish the core feature set and release at least an open beta version of the site before I start working on this.

Closing Remarks

I’d like to thank everyone at Microsoft involved with the Imagine Cup for both their time and their invaluable advice. The finals were a brilliant experience and I’d definitely recommend anyone with an interest in technology to apply for next year. I’d also like to congratulate the other finalists on all of their extremely polished and professional projects. Special congratulations go to the top two winners of the UK finals from Manchester and Abertay, both of which have a brilliant combination of presentation and utilisation of modern technology. I wish them all the best in the world finals; they’ll no doubt do the UK proud.

References

1. http://wordsandbuttons.online/interactive_guide_to_homogeneous_coordinates.html

2. https://www.codeproject.com/Tips/986176/Deeply-Nested-JSON-Deserialization-Using-Recursion

3. https://azure.microsoft.com/en-gb/resources/samples/azure-cosmos-db-graph-dotnet-getting-starte...
