What is an Azure Load Balancer?

A lot of folks who are new to Azure assume that load balancers in Azure are logically equivalent to the load balancers in their on-premises data centers. An on-premises load balancer is typically a device (sometimes a VM) that functions as a special-purpose router, using some method to determine whether the back-end machines are healthy, plus some load distribution algorithm. The traffic actually traverses the device, so hitting the performance limits of the load balancer can lead to failed requests.

In contrast, Azure Load Balancers (ALBs) are not discrete devices through which the traffic is routed; they are better thought of as a collection of rules governing how connections are arranged in the Azure software-defined network (SDN). ALBs operate at Layer 4 of the OSI model.

Some logical conclusions follow from this:

- Removing a backend from a backend pool doesn’t terminate connections

- A load balancer cannot be overloaded by traffic in the same way a device-based load balancer would (maxing out CPU, memory, etc.)

- Not all of the functionality of a device-based load balancer may be available

- There are some limitations which would not exist with a device-based load balancer

A common concern raised by customers regarding ALBs is that the load is not completely evenly distributed. There’s a simple enough explanation for this.

There are three distribution modes with standard ALBs:

- Default: 5-tuple hash-based (source IP, source port, protocol, destination IP, destination port)

- Client IP & Destination IP (2-tuple session affinity)

- Client IP & Protocol & Destination IP (3-tuple session affinity)

The thing to understand is that the most common distribution mode (5-tuple) is not round robin. If the combination of the five factors (source IP, source port, protocol, destination IP, and destination port) is the same, the requests will be routed to the same backend. If any of these five factors is unevenly distributed (for example, more requests from one client, client port re-use, or heavy traffic on a single frontend IP of the load balancer), then the distribution to the backends will be uneven too.
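To make the idea concrete, here is a minimal Python sketch of hash-based distribution. It is only an illustration: the hash Azure actually uses is not described in this post, so SHA-256 stands in for it, and the backend names, IP addresses, and the `pick_backend` helper are all hypothetical.

```python
import hashlib
from collections import Counter

# Hypothetical backend names; six to mirror a six-instance pool.
BACKENDS = [f"vm-{i}" for i in range(6)]

def pick_backend(src_ip, src_port, protocol, dst_ip, dst_port, backends=BACKENDS):
    """Map a 5-tuple to a backend using a stable hash (illustrative only)."""
    key = f"{src_ip}|{src_port}|{protocol}|{dst_ip}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the digest as an integer and reduce it
    # to an index into the backend list.
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]

# A single flow: one client IP and port talking to one frontend IP and port.
flow = ("203.0.113.10", 50123, "tcp", "198.51.100.1", 80)

# 1000 requests sent over the same flow all hash to the same backend.
requests_per_backend = Counter()
for _ in range(1000):
    requests_per_backend[pick_backend(*flow)] += 1

print(requests_per_backend)
```

Because the 5-tuple never changes, every request lands on a single backend, no matter how many requests are sent. A round-robin scheme would instead spread those 1000 requests across all six.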

We can demonstrate this easily enough.

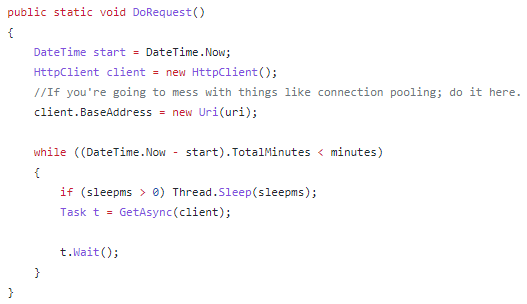

I put together a minimal test tool, which you can find on GitHub, that lets you run a number of threads making HTTP requests with a delay between requests. Next, I put together a Virtual Machine Scale Set with 6 instances behind a standard load balancer in the default distribution mode (5-tuple). I installed IIS and configured a Data Collection Rule to ingest the logs into Log Analytics for ease of analysis.

My test tool uses the .NET HttpClient class, which uses connection pooling by default for performance reasons. Establishing a socket is ‘expensive’, so re-using the socket leads to better performance, but it also re-uses the client port. From the linked doc, we have this nugget of information:

> Every HttpClient instance uses its own connection pool, isolating its requests from requests executed by other HttpClient instances.

Indeed, each thread gets its own HttpClient, therefore we would expect each thread to have its own client port:

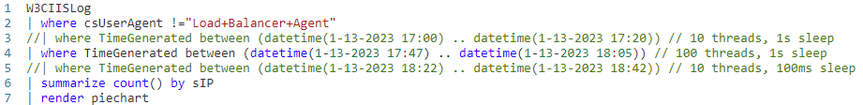

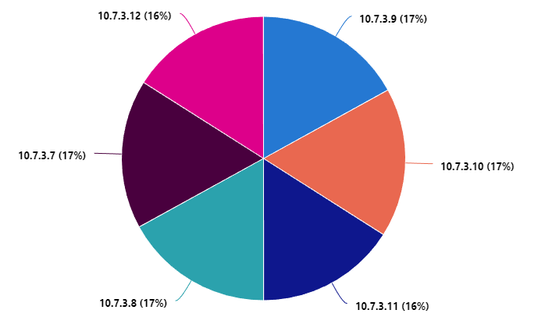
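The same effect can be reproduced locally without Azure. The sketch below is not the test tool from this post; it is a Python stand-in that uses the standard library's `http.client` and a throwaway local server, and the helper names are hypothetical. It records the client source port the server sees for requests sent over one persistent connection versus a new connection per request.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

seen_ports = []  # client source ports observed by the server

class Handler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # allow persistent (keep-alive) connections

    def do_GET(self):
        seen_ports.append(self.client_address[1])
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet

# Bind to port 0 so the OS picks a free port.
server = ThreadingHTTPServer(("127.0.0.1", 0), Handler)
threading.Thread(target=server.serve_forever, daemon=True).start()
host, port = server.server_address

def pooled_requests(count):
    """Send all requests over one persistent connection."""
    conn = http.client.HTTPConnection(host, port)
    for _ in range(count):
        conn.request("GET", "/")
        conn.getresponse().read()
    conn.close()

def fresh_requests(count):
    """Open a new connection for every request."""
    for _ in range(count):
        conn = http.client.HTTPConnection(host, port)
        conn.request("GET", "/")
        conn.getresponse().read()
        conn.close()

pooled_requests(5)
pooled_ports = set(seen_ports)
seen_ports.clear()

fresh_requests(5)
fresh_ports = set(seen_ports)

server.shutdown()
server.server_close()

print("ports seen with one persistent connection:", len(pooled_ports))
print("ports seen with a connection per request:", len(fresh_ports))
```

The persistent connection shows up as a single client port. New connections typically get a new ephemeral port each time, although the exact port allocation is up to the operating system.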

So, how does our distribution look for different parameters in our load test tool? I’ll first share the KQL query I used to create these graphs, and then you can see how the tests hold up:

10 threads, 1 second sleep

100 threads, 1 second sleep

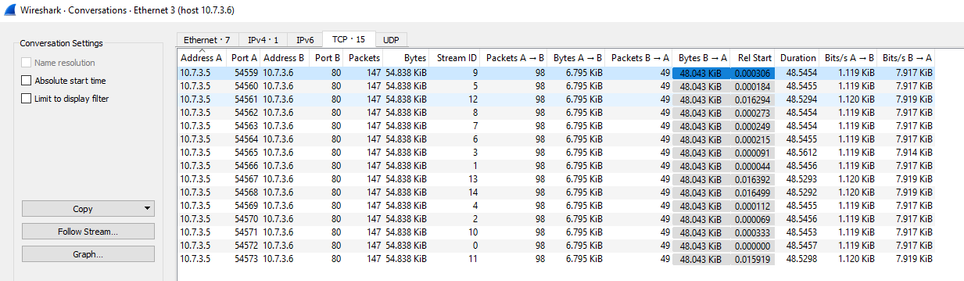

As you can see, given our test’s use of threads and connection pooling, having fewer client ports because of fewer threads yields a more uneven distribution. More threads lead to a more even (but still not perfect) distribution. We don’t have to take the docs’ word for this: in Wireshark, Statistics > Conversations > TCP lets us validate that this is what is going on.
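A quick simulation shows why more flows smooth things out. This sketch assumes the hash behaves like a uniform random choice of backend for each distinct flow, which is a modelling assumption rather than a statement about Azure's implementation. The `average_imbalance` helper is hypothetical; it reports the coefficient of variation (standard deviation divided by mean) of flows per backend, averaged over many trials.

```python
import random
import statistics

BACKEND_COUNT = 6  # matches the six scale set instances in the test

def average_imbalance(flow_count, trials=2000, seed=42):
    """Average coefficient of variation of flows per backend.

    Each flow is assigned to a uniformly random backend, standing in
    for a well-mixed hash of the 5-tuple (an assumption).
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        counts = [0] * BACKEND_COUNT
        for _ in range(flow_count):
            counts[rng.randrange(BACKEND_COUNT)] += 1
        total += statistics.pstdev(counts) / statistics.mean(counts)
    return total / trials

imbalance_10 = average_imbalance(10)
imbalance_100 = average_imbalance(100)

print(f"10 flows:  {imbalance_10:.2f}")
print(f"100 flows: {imbalance_100:.2f}")
```

A lower value means a more even spread. Under this model, 100 flows come out noticeably more even than 10, but neither reaches zero, which is consistent with the graphs above.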

A view of what this looks like running with 15 threads, with a sleep of 1 second.

As you can see, we have 15 client ports in use to match our 15 threads.

What we’ve learned is that if you are seeing uneven distribution in the default load balancer mode, the first step is to understand why this is happening. You have a few options to look at why:

- Look at backend logs to see the distribution of client IPs, to see if traffic from clients is uneven.

- Look at a packet trace from the clients, to determine if you’ve got a small concentration of client ports.

- If there are multiple frontend IPs, look at the load balancer metrics with splitting applied for Frontend IP address.
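For the first option, if you have raw IIS log files rather than Log Analytics, a small script can tally requests per client IP. This is a minimal sketch, assuming W3C extended format logs with a `#Fields:` header and the `c-ip` field enabled. The `count_field` helper and the sample lines are made up for illustration and are not data from the test in this post.

```python
from collections import Counter

def count_field(log_lines, field_name="c-ip"):
    """Count the values of one field in W3C extended log lines."""
    fields = []
    counts = Counter()
    for line in log_lines:
        line = line.strip()
        if line.startswith("#Fields:"):
            # The header names the space-separated columns that follow.
            fields = line[len("#Fields:"):].split()
        elif line and not line.startswith("#") and field_name in fields:
            values = line.split()
            # Skip malformed rows whose column count does not match.
            if len(values) == len(fields):
                counts[values[fields.index(field_name)]] += 1
    return counts

# Made-up sample data for illustration only.
sample = [
    "#Fields: date time c-ip cs-method cs-uri-stem sc-status",
    "2024-01-01 00:00:01 203.0.113.10 GET / 200",
    "2024-01-01 00:00:02 203.0.113.10 GET / 200",
    "2024-01-01 00:00:02 198.51.100.7 GET / 200",
]

client_counts = count_field(sample)
for ip, hits in client_counts.most_common():
    print(ip, hits)
```

A handful of client IPs accounting for most of the requests points to uneven client traffic rather than a problem with the load balancer.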

To wrap up – I wanted to share one more detail about how Azure Load Balancers work. As Azure networking is software-defined and as such rule-based, one quirk is that adding an Azure load balancer in front of some resources can actually itself change how those resources communicate back out. This is related to our back-end architecture using the Virtual Filtering Platform, which you can read about here.

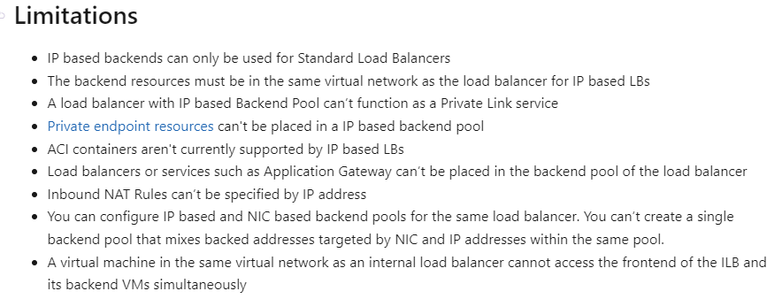

It is possible to skip past a load balancer and hit the backend directly, but there are some corner cases where the SDN gets mixed up, so it’s not recommended to mix traffic to the load balancer frontend with direct traffic to the backend from the same source. We mention a few of the resulting limitations here:

Got more questions on Azure Load Balancer? Post them to the Azure Community!

Disclaimer

The sample scripts are not supported under any Microsoft standard support program or service. The sample scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages.
