Azure Log Analytics - Data Retention By Type in Real Life

[20210426 - Update: Added the authentication requirement before executing the ARMCLIENT commands.]

Hello everybody, Bruno here again, together with my Azure Sentinel colleague @sifriger (credit to you, mate, for bringing what we're going to present to my attention).

Today, I'll walk you through setting different retention periods for an Azure Log Analytics workspace based on data types.

First of all, let me briefly refresh what a data type is in the Azure Log Analytics context: a data type is either a scalar data type (one of the built-in predefined types) or a user-defined record (an ordered sequence of name/scalar-data-type pairs, such as the data type of a row of a table).

Let's also refresh the definition of data retention: retention is how long (in days) data is kept inside an Azure Log Analytics workspace (see the Change the data retention period paragraph in the Manage usage and costs with Azure Monitor Logs article).

Now that we have both concepts refreshed and clear in mind, we can get to the point. As you may have heard, on October 8, 2019, a feature was announced through this official post that allows you to set the retention for a single data type (also referred to as a table), overriding the general workspace setting.

Why might you want to change the retention on a single table? There could be several reasons, all of them valid. For instance:

- Usage and cost control: as per the Manage usage and costs with Azure Monitor Logs article, adjusting data retention is one of the ways to keep storage growth in check and to define limits that control the associated costs.

- Limit performance data retention on an Azure Sentinel connected workspace: this one is pretty common. Performance data is normally considered stale after a couple of days, or even earlier, unless you want to store it for data warehouse purposes (e.g. baselining or troubleshooting). Moreover, Azure Sentinel does not natively have any query that runs against or correlates with the Perf table, so there may be no need to keep this data beyond the default retention (which is 30 days for a normal Log Analytics workspace or 90 days for an Azure Sentinel connected one).

Bear in mind that there are also some important elements to consider when dealing with data retention, before deciding to change it. For example:

- Correlation among tables could be impacted by different retention periods.

- Changing the retention at the workspace level will no longer change the retention of tables that were previously modified individually.

- Should you need to align a table back to the workspace retention, you need to reset what has been configured using the same method used to set it, so you will go through the same steps and level of complexity.

- For data collected earlier than October 2019 (when this feature was released), setting a lower retention for an individual type won’t affect your retention costs.

With all that said, should you need to set different retention per table, continue reading this post.

Setting data retention by type is not a feature offered at the portal interface level. In the Azure portal you can only set the retention for the entire workspace.

This means that you'll need to use a few simple ARM commands.

So, moving on, is there anything else you need to know or have before moving ahead?

Easy answer: you need to know the resource id and have an ARM client to execute the command.

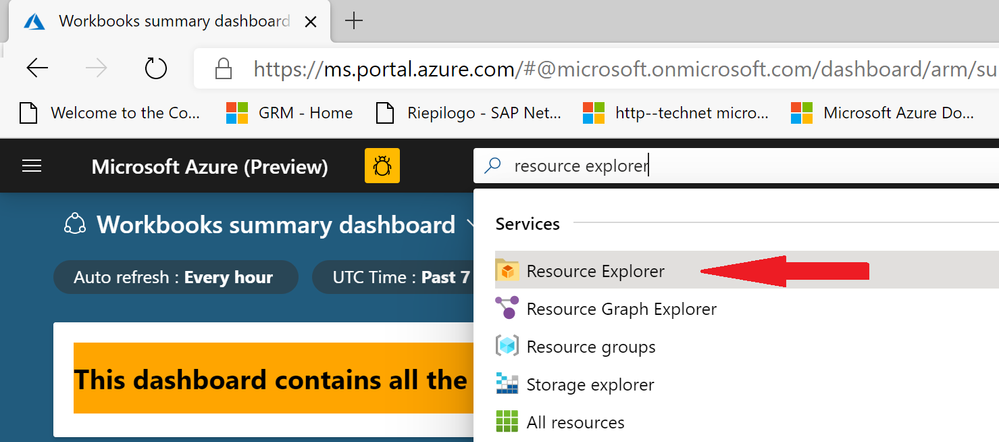

As far as the resource id goes, you first need to retrieve the Log Analytics workspace resource id. You can do that, thanks to Azure Resource Manager, directly from the Azure portal by using Resource Explorer:

Once you get to the Resource Explorer page, expand Subscriptions and navigate through the resources until you reach the one you want to operate on (in this case the Log Analytics workspace):

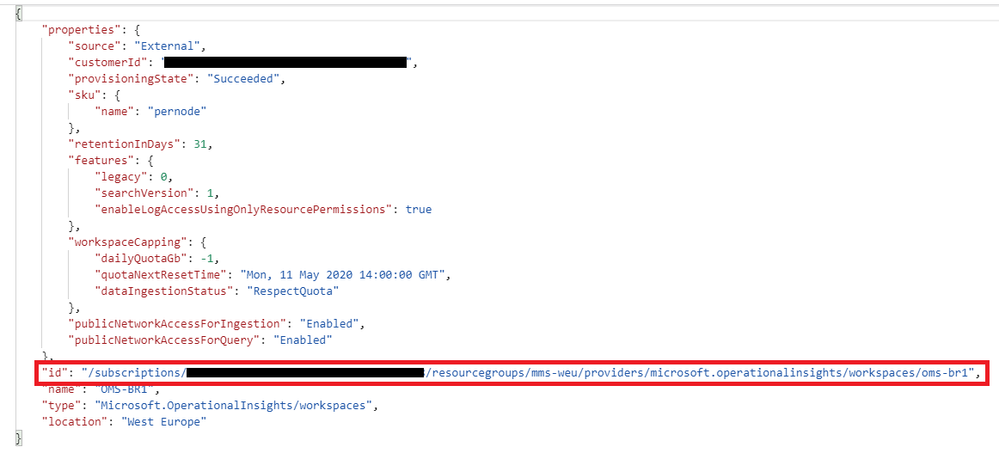

Once you get to it, the right pane shows the JSON template of the given resource. Inside the template you have several key/value pairs and objects, among which is the id. That value is exactly the resource id of the workspace for which you want to change the retention on a given table:

Now that you have the workspace resource id, you need one additional easy step, since tables do not appear as resource entries: you need to add the table name and API information to execute the necessary command.

You can achieve that by appending the following string right after the resource id value you just retrieved, paying attention to the table name (I intentionally put a placeholder here):

/Tables/MYTABLENAME?api-version=2017-04-26-preview

NOTE: the API version could be different if newer releases are available.
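To make the composition of that string concrete, here is a minimal Python sketch that only assembles the path; it does not call Azure. The function name `table_resource_path` and the all-zero subscription id are hypothetical and used purely for illustration, while the `/Tables/...?api-version=...` suffix is the one shown above.

```python
API_VERSION = "2017-04-26-preview"  # version used in this post; newer ones may exist


def table_resource_path(workspace_id: str, table_name: str,
                        api_version: str = API_VERSION) -> str:
    """Append the table segment and the API version to a workspace resource id."""
    if not table_name:
        raise ValueError("table_name must not be empty")
    # rstrip avoids a double slash if the id was copied with a trailing '/'
    return f"{workspace_id.rstrip('/')}/Tables/{table_name}?api-version={api_version}"


# Hypothetical workspace id, same shape as the one shown in Resource Explorer.
workspace_id = (
    "/subscriptions/00000000-0000-0000-0000-000000000000"
    "/resourceGroups/rg-dataretentionbytype"
    "/providers/Microsoft.OperationalInsights/workspaces/la-DataRetentionByType"
)

print(table_resource_path(workspace_id, "MYTABLENAME"))
```

Keeping the API version as a parameter makes it easy to switch if a newer release applies to your environment.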

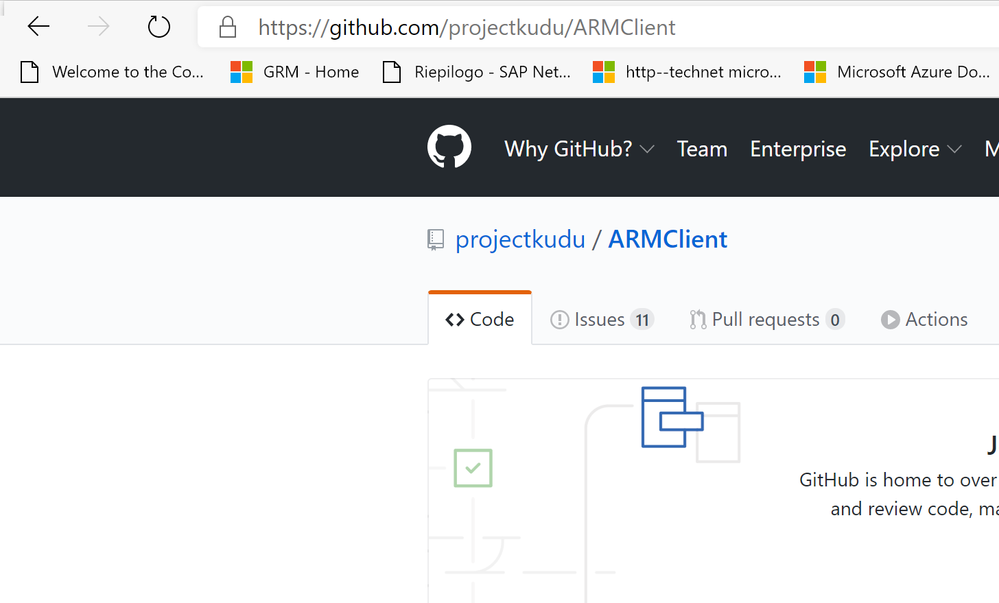

Regarding the ARM client, you can get ARMClient from GitHub.

With all the necessary pieces in place, you can assemble the related command and carry out the operation you need.

But what types of operation can you perform as far as data retention goes?

They can basically be summarized into three:

- Query data retention for either a specific table or the entire workspace. This can be helpful when you do not know whether the customer previously set something up

- Set/update data retention

- Reset data retention back to the workspace general setting

Before making any change, you need to know which retention your resource is currently configured with. That's simple: just query the data retention by running the GET command through ARMClient, passing the full resource id.

NOTE: Running ARMClient commands against Azure resources requires authentication. Depending on where you're running the command from, you could use either ARMClient login (e.g. from PowerShell) or ARMClient azlogin (e.g. from Azure CLI).

For the entire post I will use my custom log table named myApplicationLog_CL (it doesn't make any difference whether it is a custom log or not), hence the command will be as below (my subscription id was intentionally masked):

ARMClient.exe get "/subscriptions/********-****-****-****-************/resourceGroups/rg-dataretentionbytype/providers/Microsoft.OperationalInsights/workspaces/la-DataRetentionByType/Tables/myApplicationLog_CL?api-version=2017-04-26-preview"

Tip: Should you want to retrieve the retention for each and every table in the workspace, just remove the table name from the above command, making it like the one below:

ARMClient.exe get "/subscriptions/********-****-****-****-************/resourceGroups/rg-dataretentionbytype/providers/Microsoft.OperationalInsights/workspaces/la-DataRetentionByType/Tables?api-version=2017-04-26-preview"
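The two GET variants differ only in whether the table name is present. As a minimal sketch (Python, string assembly only, nothing is executed), the hypothetical helper `get_retention_command` below reproduces both command lines; the all-zero subscription id is a placeholder.

```python
from typing import Optional

API_VERSION = "2017-04-26-preview"  # version used in this post


def get_retention_command(workspace_id: str,
                          table_name: Optional[str] = None,
                          api_version: str = API_VERSION) -> str:
    """Build the ARMClient GET command line for one table or for all tables."""
    path = f"{workspace_id.rstrip('/')}/Tables"
    if table_name:
        # A table name narrows the query to that single table.
        path += f"/{table_name}"
    return f'ARMClient.exe get "{path}?api-version={api_version}"'


# Hypothetical workspace id with a placeholder subscription.
workspace_id = (
    "/subscriptions/00000000-0000-0000-0000-000000000000"
    "/resourceGroups/rg-dataretentionbytype"
    "/providers/Microsoft.OperationalInsights/workspaces/la-DataRetentionByType"
)

print(get_retention_command(workspace_id, "myApplicationLog_CL"))  # single table
print(get_retention_command(workspace_id))                         # every table
```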

As you can see from the image there’s nothing about a specific retention. This means that the myApplicationLog_CL table is inheriting the workspace setting whatever it is.

Now let’s say that I want to set the retention for my custom log to 55 days.

To set or update the given property, you need to specify its name and value in JSON format after the resource id, as part of the command. In our case the property is called retentionInDays, the value will be 55, and the format is the one used in the command below:

ARMClient.exe put "/subscriptions/********-****-****-****-************/resourceGroups/rg-dataretentionbytype/providers/Microsoft.OperationalInsights/workspaces/la-DataRetentionByType/Tables/myApplicationLog_CL?api-version=2017-04-26-preview" "{'properties':{'retentionInDays':55}}"
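If you script this, building the body from a dictionary avoids typos in the braces. The sketch below (Python, string assembly only) mirrors the single-quoted body format used in the command above; the helper names `retention_body` and `set_retention_command` are hypothetical, and the check for a positive integer is only a local sanity check, since the service enforces its own limits.

```python
import json

API_VERSION = "2017-04-26-preview"  # version used in this post


def retention_body(days: int) -> str:
    """Return the request body in the single-quoted form used in this post."""
    if isinstance(days, bool) or not isinstance(days, int) or days <= 0:
        raise ValueError("days must be a positive integer")
    body = json.dumps({"properties": {"retentionInDays": days}},
                      separators=(",", ":"))
    # The post passes the body with single quotes, which avoids escaping
    # double quotes on the command line.
    return body.replace('"', "'")


def set_retention_command(workspace_id: str, table_name: str, days: int) -> str:
    """Build the ARMClient PUT command line that sets a table's retention."""
    path = (f"{workspace_id.rstrip('/')}/Tables/{table_name}"
            f"?api-version={API_VERSION}")
    return f'ARMClient.exe put "{path}" "{retention_body(days)}"'


print(retention_body(55))  # {'properties':{'retentionInDays':55}}
```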

As I already anticipated, the retention set at the workspace level will not impact this table anymore.

But what if I want to set it back? What if I want to reset or revert a configuration that I made by mistake?

Luckily, there’s the possibility to set the property to a null value.

This resets the configuration back to its default, which is the workspace setting (whatever retention it has been configured with). Similarly to the previous command, you need to set the property value, this time to null:

ARMClient.exe put "/subscriptions/********-****-****-****-************/resourceGroups/rg-dataretentionbytype/providers/Microsoft.OperationalInsights/workspaces/la-DataRetentionByType/Tables/myApplicationLog_CL?api-version=2017-04-26-preview" "{'properties':{'retentionInDays':null}}"
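In a script, the reset body can be generated the same way as any other, because Python's `None` serializes to JSON `null`. This is a minimal sketch (string assembly only); `reset_retention_command` is a hypothetical helper name, and the single-quoted body mirrors the format used in the command above.

```python
import json

API_VERSION = "2017-04-26-preview"  # version used in this post


def reset_retention_command(workspace_id: str, table_name: str) -> str:
    """Build the ARMClient PUT command line that clears a table-level retention."""
    # json.dumps turns None into null, which is what the reset requires.
    body = json.dumps({"properties": {"retentionInDays": None}},
                      separators=(",", ":")).replace('"', "'")
    path = (f"{workspace_id.rstrip('/')}/Tables/{table_name}"
            f"?api-version={API_VERSION}")
    return f'ARMClient.exe put "{path}" "{body}"'


# Hypothetical workspace id with a placeholder subscription.
workspace_id = (
    "/subscriptions/00000000-0000-0000-0000-000000000000"
    "/resourceGroups/rg-dataretentionbytype"
    "/providers/Microsoft.OperationalInsights/workspaces/la-DataRetentionByType"
)

print(reset_retention_command(workspace_id, "myApplicationLog_CL"))
```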

From now on data in my custom log table will be kept according to the workspace setting.
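The precedence between the two settings can be summarized in a few lines. This is only a conceptual sketch of the behavior described in this post, not how the service is implemented; `effective_retention` is a hypothetical name.

```python
from typing import Optional


def effective_retention(workspace_days: int, table_override: Optional[int]) -> int:
    """Retention applied to a table: its own value if set, else the workspace's."""
    return workspace_days if table_override is None else table_override


print(effective_retention(90, None))   # 90: no override, the table inherits
print(effective_retention(90, 55))     # 55: the table-level value wins
print(effective_retention(180, 55))    # 55: a workspace change does not touch it
print(effective_retention(180, None))  # 180: after a reset, the table follows again
```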

I also want to mention something about the “delete operation task”.

At the time of writing, deleting a table is not supported, so you can only delete its content.

For more information about data deletion, see the documentation at https://docs.microsoft.com/en-us/azure/azure-monitor/platform/personal-data-mgmt#how-to-export-and-d...

IMPORTANT: Deletes in Log Analytics are destructive and non-reversible! Please use extreme caution when executing them, and carefully read the above-mentioned documentation.

I hope I gave you all the elements you need to decide whether or not to change the data retention, as well as the important points to consider before making that decision.

I also hope my technical guide on how to make the change is clear enough and helps you achieve the goal easily and quickly.

Thanks for reading through this long post, and good luck with data retention by type!

Bruno & Simone.

Disclaimer

The sample scripts are not supported under any Microsoft standard support program or service. The sample scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages.
