- Home

- Azure Data

- Azure Synapse Analytics Blog

- Polybase User Guide - Part 1 : Secured Storage Account

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Customers are ever increasingly making use of Polybase to load data into the Azure Data Warehouse, Polybase is the go-to solution when attempting load large files and thousands to millions of records. The intention of this Guide is not explain what Polybase is and how it works if you require a deeper understanding of how it works or looking for more granular information on Polybase start here

The intention of this guide is to help you decide which Polybase configuration will fulfill your requirements. It will be a multi part guide and in each part of the guide I will review the credentials, the requirements and provide the examples and relevant articles which will make your implementation of Polybase hassle free.

In Part 2 we will review Unrestricted storage and the original Polybase configuration

In Part 3 we will review Integration tools which make use of Polybase to load data (ADF/Databricks)

In Part 4 we will review how to modify your existing Polybase configuration without recreation

Part 1 – Secured Storage Account

In Part 1 we will focus on the most sought-after feature within Polybase, Connecting to a Secured storage account. The ability to connect to a secured storage account was generally available December 2018 and has since caused much confusion due to the specific requirements to make this possible, none of which are difficult to implement and will require a once off configuration.

If you have a Secured storage account or want to secure your storage account and would like to make use of Polybase to load or read your external data the following steps are required, there is no other alternative or workaround. We went through extensive development and released multiple components to make this possible.

Requirements

There are 2 requirements which make this configuration possible

- Generation 2 Storage account, if you do not have a Generation 2 storage account this will not be possible, upgrade to Gen2 before going further following this guide

- VNET Service Endpoints with Azure storage which is what I will be covering in this guide and is available for further reference here

Implementation

The Polybase engineering team released a new credential called Managed Service Identity as well as a new secure schema ABFSS which connects to an updated endpoint dfs.core.windows.net.

Using these 3 components it is now possible for you to enable the storage firewall and limit access to Azure Services within your storage account. Polybase source connections from the Azure Data Warehouse do not fall with the standard Azure Service IP boundaries which is why this was previously not possible.

(The same configuration is applicable to Blob Store and Data Lake there is no difference in the configuration)

Database Security Consideration

Before we proceed further, we need to address the permissions a user requires to create all the required objects. To make this easier and more manageable I would suggest creating a role within your Data Warehouse and making users role members which would avoid having to grant and revoke permissions individually as users are added or removed. The permissions which are required are elevated and therefore I would advise that only Database Administrators, Senior Project Members or knowledgeable users are granted these permissions.

Below is all the permissions you will require within the database, the script will create the roll, assign the permissions to the role and add the role membership to the designed user account. Specify required Data Warehouse DB name and User account.

Create Role Polybase_Users

Grant CREATE TABLE TO Polybase_Users

Grant ALTER ANY SCHEMA TO Polybase_Users

Grant ALTER ANY EXTERNAL DATA SOURCE TO Polybase_Users

Grant ALTER ANY EXTERNAL FILE FORMAT TO Polybase_Users

Grant CONTROL ON DATABASE::[] TO Polybase_Users

sp_addrolemember Polybase_Users , <yourUsername>

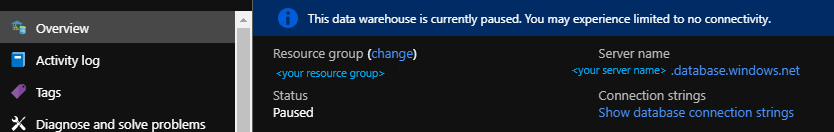

Step1 – Create the MSI

Register the Logical Database Server which hosts your Azure Data Warehouse within Azure Active directory, the identity will be used to authenticate with the storage account and grant you access to your external data

In order to so run the following Powershell commands, you can run this from the Azure Module if you have it installed or run the command from the Azure Portal using Cloudshell.

Connect-AzAccount

Select-AzSubscription -SubscriptionId <your-subscriptionId>

Set-AzSqlServer -ResourceGroupName <your-database-server-resourceGroup> -ServerName <your-database-servername> -AssignIdentity

(Note: You do not have to specify database.windows.net only the server name is required. Details provided on the Server Overview Pane)

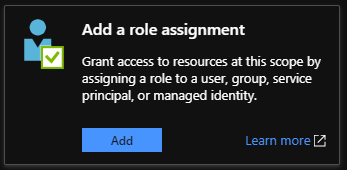

Step2 – Grant the permissions

With the identity created you will now be able to grant the Identity access to the target storage account which I want to secure with a firewall.

To grant these permissions, the grantor must be a member of a group or role which has Owner Privileges. If you do not have such permissions, you would not be able to grant the Server MSI the required role.

- Within the storage account navigate to Access Control(IAM)

- Select Add a Role Assignment

- Select Storage Blob Data Contributor from the Role Dropdown list

- Search for your <SERVER NAME> in the Select Search box

- Once you have found the server name save the configuration, so permissions are applied.

The permissions have now been granted at a storage level to the Managed Service Identity, this will allow you to connect to the storage account.

Step 3 – Database Credential Configuration

The next step is to configure the database credentials which will be used by your External Data Source

If you have never created any external objects and this is the first time you are configuring Polybase you have to start out by creating a Master Key within your database. (Azure Data Warehouse does not require a password to be specified for the Master Key. If you make use of a password, take record of the password)

CREATE MASTER KEY [ENCRYPTION BY PASSWORD = 'somepassword'];

Once the key is created proceed with creating the credentials which will refer to the Managed Service Identity. It does not require you to specify a SECRET when you use the Managed Service Identity.

The IDENTITY Name must be ‘Managed Service Identity’ for Polybase to connect to the Secured Storage Account.

CREATE DATABASE SCOPED CREDENTIAL msi_cred WITH IDENTITY = 'Managed Service Identity';

In the example we use msi_cred, feel free to name it according to your requirements, note that it must be used when creating your External Data Source.

Step 4 – Create the External Data Source

The only change which has been introduced in the External Data source creation is the change to the new schema and the updated endpoint.

When creating your External data source ensure that you use ABFSS and NOT WASBS and that your location points to dfs.core.windows.net.

CREATE EXTERNAL DATA SOURCE ext_datasource_with_abfss

WITH (TYPE = hadoop,

LOCATION = 'abfss://container@mystorageaccount.dfs.core.windows.net',

CREDENTIAL = msi_cred); --the credential name we created in the previous step

Additional Info on External Data Sources can be found here

Step 5 – Create the External file format

When connecting to files stored on an external location we need to specify what the file looks like in the terms of row / column delimiters, specific date time formats I would require to persist and what type of file the source file is. Additional Info on this can be found here

In the example I will create a file format that references a Delimited Text file which is comma delimited and has no table header in the file.

CREATE EXTERNAL FILE FORMAT NoHeader_CSV

WITH (FORMAT_TYPE = DELIMITEDTEXT,

FORMAT_OPTIONS (

FIELD_TERMINATOR = ',',

STRING_DELIMITER = '"',

USE_TYPE_DEFAULT = True)

)

Step 6 – Create External Table

The final step is to create the external table which will be consuming the credentials we’ve created and connecting to the External Data Source that has been provided.

When creating the above-mentioned components in the Data Warehouse we are only parsing the Syntax and if the syntax is valid the object will create. Therefore, you will only know that there is a problem once you create your External Table as only then will we authenticate using the information that has been provided.

CREATE EXTERNAL TABLE <YourTableName>

(

Column1 varchar(50) Null,

Column2 IDENTITY,

Column3 datetime2,

...

)

WITH

(

LOCATION = '/path/filename',--Path will be the root of the specified container

DATA_SOURCE = ext_datasource_with_abfss ,

FILE_FORMAT = NoHeader_CSV

) ;

If you followed the instructions and guidelines provided and specified a valid path and file name, you will be able to Create the External Table successfully and Select from the object without failure.

Solution Overview

The new ABFSS Schema is a secured schema which encrypts all communication between the storage account and Azure Data Warehouse.

The Managed Service Identity allows you to create a more secure credential which is bound to the Logical Server and therefore no longer requires user details or storage keys to be shared for credentials to be created.

The Storage account security is streamlined and we now grant RBAC permissions to the Managed Service Identity for the Logical Server with no requirement to grant User Specific access to the Storage account.

The solution allows for the Storage account firewall to be enabled which limits IP addresses which are allowed to connect to the storage endpoint and adhere to security best practices

If you are unable to complete the steps in the article, please do reach out to us by logging a Support Request.

Part 2 - Unsecured storage the original Polybase configuration to follow soon.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.