- Home

- Azure Data

- Azure Synapse Analytics Blog

- How Azure Synapse Analytics helps you analyze COVID data with Synapse SQL serverless endpoint

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Azure Synapse Analytics is a limitless data analytics service that enables you to analyze data on Azure DataLake storage. It provides managed Apache Spark and T-SQL engines (provisioned and serverless) for analyzing data.

In this article, you will see how you can analyze the distribution of COVID cases reported in Serbia (Europe) using Synapse SQL endpoint in Synapse Analytics. Synapse SQL engine is the perfect choice for ad-hoc data analytics for the data analysts with T-SQL skills. The data set is placed on Azure storage and formatted as parquet.

Prerequisite

To try this sample, you need to have Azure Synapse Analytics workspace. If you don’t have one you can easily deploy it using Azure portal or this Deployment template. Workspace automatically deploys one serverless Synapse SQL endpoint that is everything we need for this kind of analysis. With the workspace you are also getting easy-to-use Web UI called Synapse Studio that enables you to start analyzing the files directly from your browser.

NOTE: You need Synapse SQL serverless (on-demand) query endpoint to execute the code in this article. The functionalities used in this article are still not available in provisioned endpoint.

COVID data set

In this sample is used the latest available public data on geographic distribution of COVID-19 cases worldwide from the European Center for Disease Prevention and Control (ECDC). Each row/entry contains the number of new cases reported per day and per country. For more information about this dataset, see here. Data set is updated on daily basis and placed as a part of Azure Open Dataset.

OPENROWSET function

Synapse SQL endpoint in Synapse Analytics enables you to use OPENROWSET T-SQL function to analyze data in Azure Data Lake storage. This is easy to use function where you just need to provide URL of some file on Azure storage and you will get the content of the file. Example of OPENROWSET function that reads the content of a parquet file placed on Azure storage is shown in the following example:

select *

from openrowset(bulk 'https://****.blob.core.windows.net/.../cases.parquet',

format='parquet') as cases

If you provide a file URL after the bulk keyword and specify that you are using parquet format, you will get the content of that parquet file.

Analyzing COVID dataset

Probably the easiest tool that you can use to analyze the file is Synapse Studio – Web UI where you can write a T-SQL query and see the results in browser.

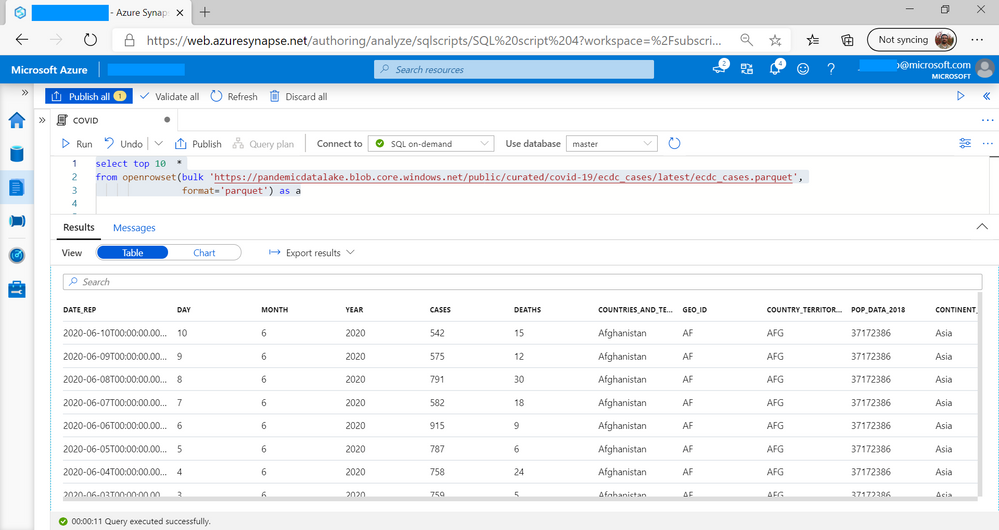

We can begin analysis by exploring the columns in data set on first 10 records:

Here I can see that some of the columns interesting for analysis are DATE_REP and CASES. I would like to analyze number of cases reported in Serbia, so I would need to filter the results using GEO_ID column.

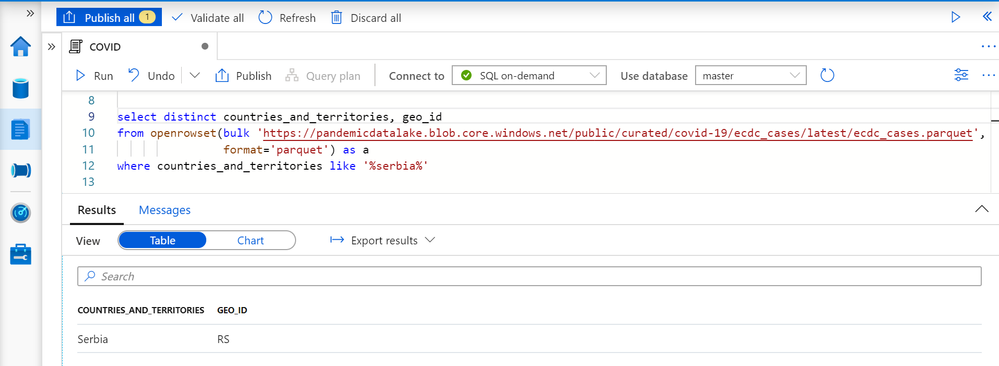

I’m not sure what is geo_id value for Serbia, so I will find all distinct countries and geo_id values where country is something like Serbia:

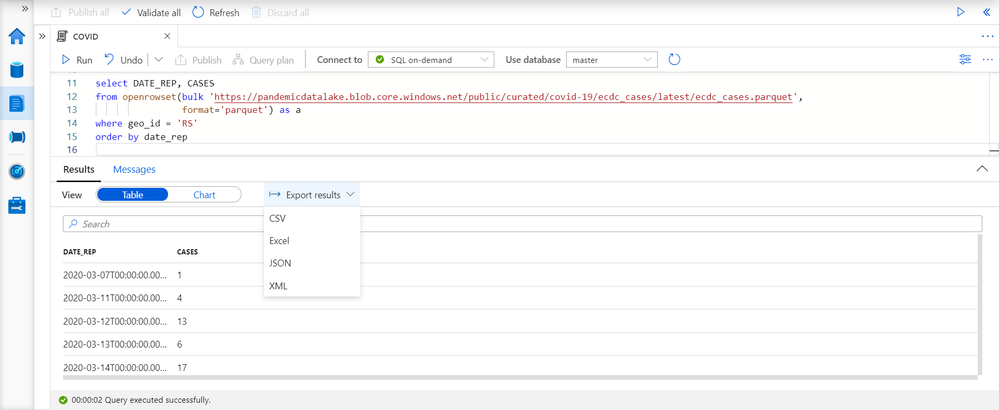

Here I can find that the value that I should use for filtering is ‘RS’. Now I can use this value to filter the records that represent reported cases in ‘Serbia’ and get date when the case is reported and number of cases. In addition, I will sort these values by date reported:

We can easily export data set with the number of cases reported in Serbia as CSV, Excel, or some other file type, so we can apply some offline client-side machine learning using Python or R or some other offline tool. In this case data set is not big so it is suitable for advanced offline analysis.

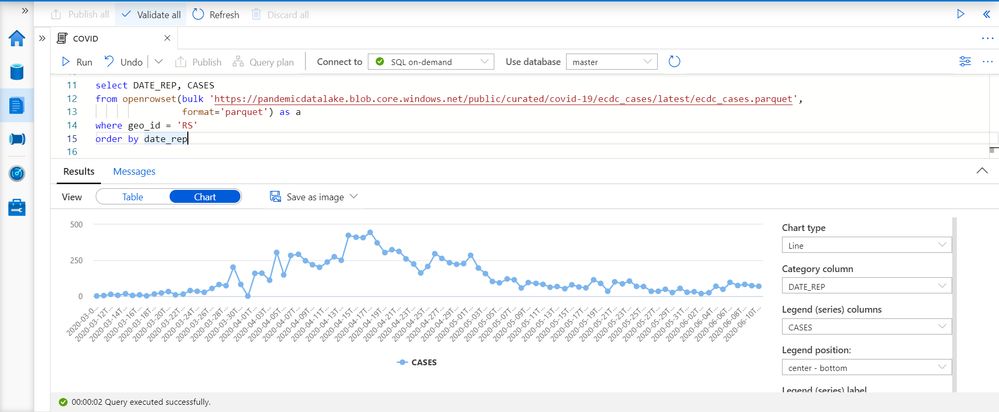

Let’s first explore this data set. If we switch to Chart pane, we will see quick visualization of data set:

I have set DATE_REP as category column and I see trend analysis of reported COVID cases in Serbia. By looking at this chart, we can see that the peek is somewhere between 15th and 20th April, and that distribution might be something like Chi-squared, Poisson, or Gamma distribution. This is important information because I can leverage this information in my offline ML model to test what distribution is the best fit for this data set.

Conclusion

Synapse SQL endpoint and Synapse Studio in Azure Synapse Analytics are tools for easy data exploration that enable you to easily read the content of a file and do basic data exploration. In this example, we have seen how to leverage T-SQL language and charting features in Synapse Analytics to analyze distribution of reported COVID cases in Serbia. The similar analysis can be repeated on any other data set. You can also do more advanced analytic by leveraging the T-SQL features - see the article Advanced analytics of COVID data using Synapse SQL endpoint for more details.

You can find more information about querying files in Azure Data Lakes Storage in this article.

If you want to try these queries, the scripts are below:

select top 10 *

from openrowset(bulk 'https://pandemicdatalake.blob.core.windows.net/public/curated/covid-19/ecdc_cases/latest/ecdc_cases.parquet',

format='parquet') as a

select countries_and_territories, geo_id

from openrowset(bulk 'https://pandemicdatalake.blob.core.windows.net/public/curated/covid-19/ecdc_cases/latest/ecdc_cases.parquet',

format='parquet') as a

where countries_and_territories like '%serbia%'

select DATE_REP, CASES

from openrowset(bulk 'https://pandemicdatalake.blob.core.windows.net/public/curated/covid-19/ecdc_cases/latest/ecdc_cases.parquet',

format='parquet') as a

where geo_id = 'RS'

order by date_rep

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.