- Home

- Azure Data

- Azure Synapse Analytics Blog

- Convert plain parquet files to Delta Lake format using Apache Spark in Azure Synapse Analytics

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Classic big data processing typically use read & append pattern. You can read immutable files and append new data as new file that you can add to the existing data sets. It is hard to modify existing data

Apache Delta format enables you to have big data sets that you can modify. Delta format is based on standard set of parquet files, but it keeps track about added and deleted file. If you need to modify data in one parquet file, Delta format will just record that file as invalidated and create new file with modified content that is included in data set.

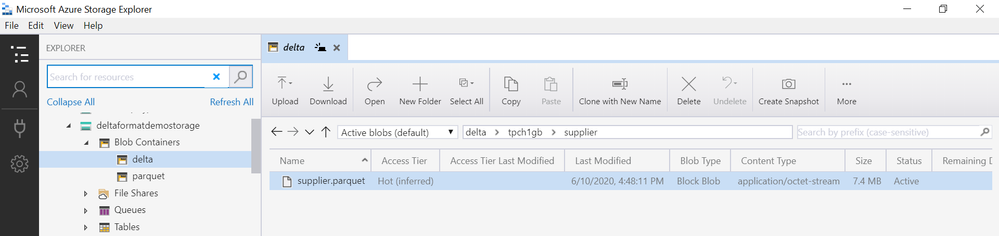

If you have Apache Spark, you can easily convert your existing parquet files or set of files into delta format. Let’s imagine that we have a folder on Azure storage with one or more .parquet files, representing a file data set, as shown on the following picture:

Apache Spark enables you to modify this location and add metadata files that will convert this single parquet file to a set of files. Since Apache Spark is built-in into Azure Synapse Analytics, you can use Synapse Analytics Studio to make this conversion.

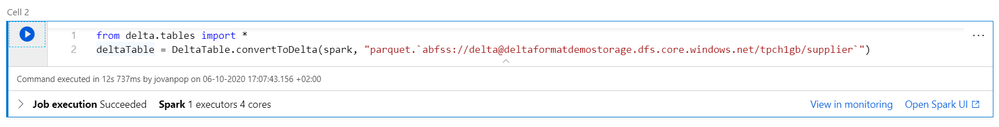

You can open Synapse Studio for Azure Synapse Analytics and create new Apache Spark notebook where you can convert this folder with parquet file to a folder with Delta format using the following PySpark code:

from delta.tables import *

deltaTable = DeltaTable.convertToDelta(spark, "parquet.`abfss://delta@deltaformatdemostorage.dfs.core.windows.net/tpch1gb/supplier`")

Conversion of plain parquet folder to Delta format is very quick because this command just creates some metadata files that describe locations of the files:

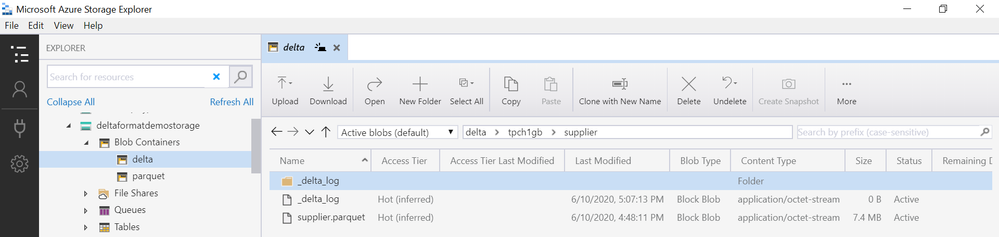

As a result, you will get the additional _delta_log file/folder in your Azure storage folder:

Once you complete the conversion you can create Delta table in Apache Spark for Azure Synapse using the command similar to the following Spark SQL example:

%%sql

CREATE TABLE supplier USING DELTA LOCATION 'abfss://delta@deltaformatdemostorage.dfs.core.windows.net/tpch1gb/supplier'

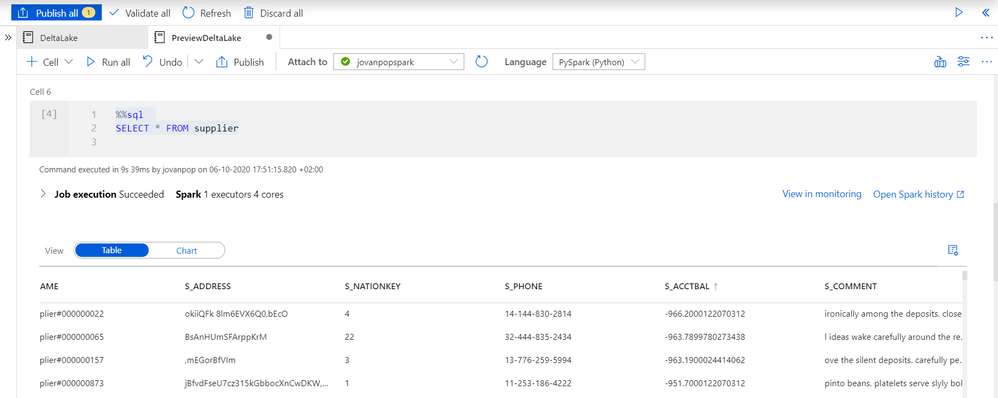

From this point, you can use Apache Spark to read, insert, update, and delete data from your supplier table. Example of Spark SQL query that reads data is

You can also update data in Delta format files by executing something like the following PySpark code:

from delta.tables import *

deltaTable = DeltaTable.forPath(spark, "delta@deltaformatdemostorage.dfs.core.windows.net/tpch1gb/supplier")

deltaTable.update("s_acctbal > 100", { "s_acctbal": "2*s_acctbal" } )

With a simple conversion you can convert your read/append only data set to file structure where you can easily update data.

Conclusion

Azure Synapse Analytics is limitless data analytics solution that enables you to use various engines such as Apache Spark or Synapse SQL to analyze and process files on Azure storage. In this article you have learned hot to leverage Apache Spark engine in Azure Synapse to make your read-only file sets fully updateable.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.