- Home

- Azure Data

- Azure Synapse Analytics Blog

- How to operationalize your data analytics pipelines

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Azure Synapse Analytics is an analytics platform that provides productive developer experiences such as the Synapse Studio bulk load wizard helping data engineers quickly get data ingested and datasets onboarded through a code-less experience. The platform also comes with other low-code authoring experiences for data integration where it is now even easier to take the next step and further orchestrate and operationalize loads in just a few clicks. You can use built-in data pipelines that are extremely flexible where you can customize them according to your requirements all within the Synapse studio for maximum productivity.

This how to guide walks you through how to quickly set up a continuous data pipeline that automatically loads data as the files arrive in your storage account of your SQL pool.

1. Generate your COPY statement within a stored procedure by using the Synapse Studio bulk load wizard.

2. Use familiar dynamic SQL syntax to parameterize the COPY statement’s storage account location. You can also generate the time of ingestion using default values within the COPY statement. Sample code:

CREATE PROC [dbo].[loadSales] @storagelocation nvarchar(100) AS

DECLARE @loadtime nvarchar(30);

DECLARE @COPY_statement nvarchar(4000);

SET @loadtime = GetDate();

SET @COPY_statement =

N'COPY INTO [dbo].[Trip]

(

[DateID] 1,

[MedallionID] 2,

[HackneyLicenseID] 3,

[PickupTimeID] 4,

[DropoffTimeID] 5,

[PickupGeographyID] 6,

[DropoffGeographyID] 7,

[PickupLatitude] 8,

[PickupLongitude] 9,

[PickupLatLong] 10,

[DropoffLatitude] 11,

[DropoffLongitude] 12,

[DropoffLatLong] 13,

[PassengerCount] 14,

[TripDurationSeconds] 15,

[TripDistanceMiles] 16,

[PaymentType] 17,

[FareAmount] 18,

[SurchargeAmount] 19,

[TaxAmount] 20,

[TipAmount] 21,

[TollsAmount] 22,

[TotalAmount] 23,

[loadTime] default ''' + @loadtime + ''' 24

)

FROM ''' + @storagelocation + '''

WITH (

FIELDTERMINATOR=''|'',

ROWTERMINATOR=''0x0A''

) OPTION (LABEL = ''loadTime: ' + @loadtime + ''');';

EXEC sp_executesql @COPY_statement;

3. Create a Synapse pipeline in the Synapse Studio with an event-based trigger for when a blob is created in your storage container and parameterize the blob path (folder path and file name) as part of the pipeline. Additional documentation on pipeline triggers is here.

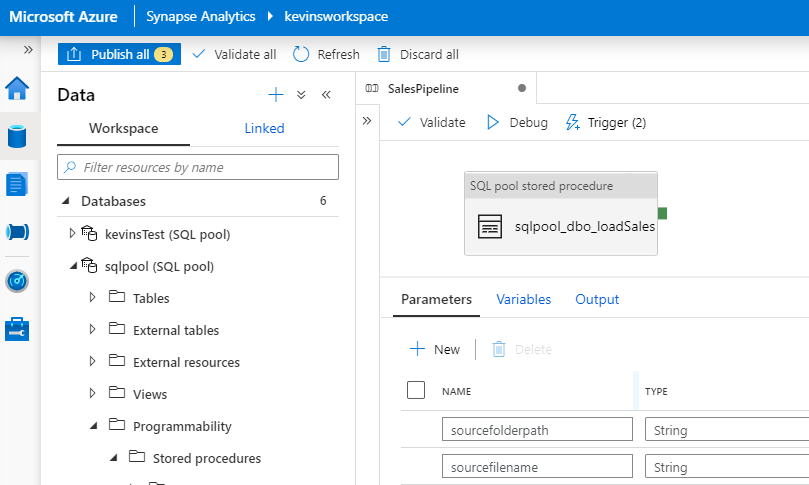

Parameterized pipeline:

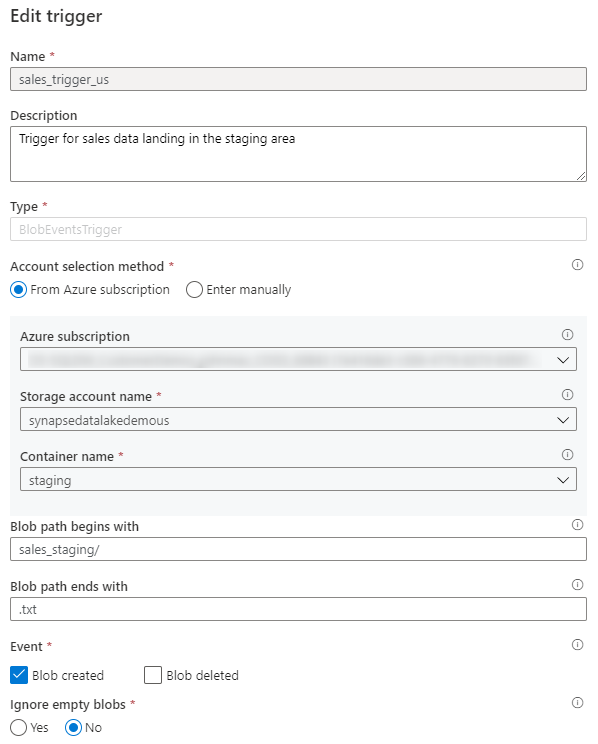

Event-based trigger:

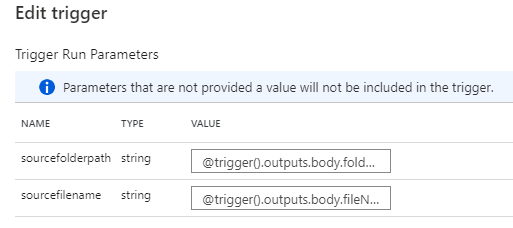

Trigger parameters:

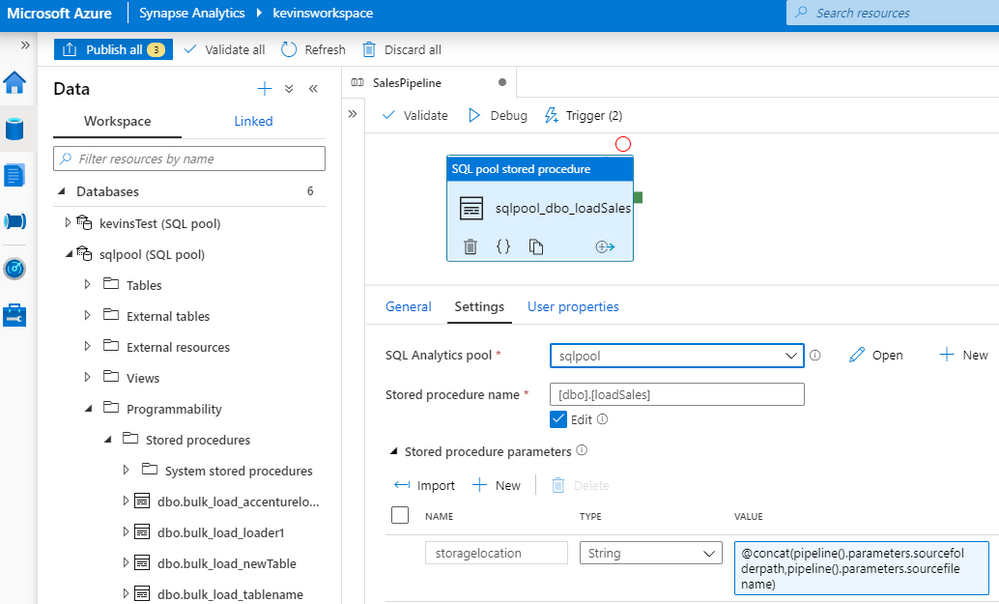

4. Add the stored procedure to a stored procedure activity in your pipeline where the stored procedure parameter is the blob path pipeline parameter and publish your pipeline to your workspace. Additional documentation on the stored procedure activity is here.

In just 4 steps, you have now created a data pipeline which automatically and continuously loads files as they land in your staging storage account location using the COPY statement in a stored procedure. You did not need to provision or integrate any event notification services such as Azure Event Grid or Azure Queue Storage to support this auto-ingestion workflow and there were minimal code changes.

Synapse pipelines are flexible where there is a range of configurations and customization you can set to address your scenarios. Here are some other considerations when operationalizing your data pipelines:

- Add additional transformation logic for further processing within your stored procedure or create additional stored procedures (activities) in your pipeline

- Instead of creating a stored procedure, you can leverage the pipeline COPY activity with the COPY statement. This will make your data pipeline authoring experience code-less. You can configure the COPY activity to ingest data based on blobs’ last modified date.

- Use data flows where you can quickly use pre-defined templates for handling common ETL patterns such as SCD1 and SCD2 in your pipeline - code free dimensional and fact processing.

- You can batch up files instead and leverage a schedule-based trigger. You can use a static storage location as your staging area to upload files. Note you may need to move and clean up files at the end of your pipeline to prevent duplicate loads if you follow this pattern - Synapse pipelines also has this capability built-in through the delete activity or move template.

For more information on Synapse data pipelines and getting started with data integration, visit the following documentation:

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.