- Home

- Azure Data

- Azure SQL Blog

- Increase data file size to improve HammerDB workload performance on General Purpose Managed Instance

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

The General Purpose tier of Managed Instance uses Azure Premium Storage to store database files. In Azure Premium Storage IO performance depends on the file size. Increasing file size might be an easy way to improve your performance. In this post you will see how to increase performance of HammerDB workload from 20K transactions/minute to 100K-300K transactions/minute by increasing the database file size.

In General Purpose architecture of Managed Instance, the database files in storage layer have dedicated IO characteristics. IO performance of every database file depends on the file size, as shown in the following table:

This means that you can easily provide better performance of IO subsystem by increasing the file size.

However, before you increase the file sizes, you should run your workload on the default file layout (with minimal sizes) and identify the bottlenecks. Otherwise you might change the wrong files and get unexpected effects, or you might use more storage than needed without getting any benefits.

In this article you will see how to analyze where the bottlenecks are and identify how to improve the performance.

In this experiment, we will see what effect increasing the file size has on workload performance of HammerDB. I am using the following parameters:

- Managed Instance Service tier: General Purpose

- vCores: 32

- Instance size: 256GB

- HammerDB Workload: TPCC

- HammerDB Databases size: 1000 warehouses (~100GB)

- HammerDB Virtual users: 50

HammerDB is well-known tool for simulating TPC workload and benchmarking performance of databases. Therefore, I will assume that you are familiar with HammerDB. I will show the following results of a HammerDB experiment:

- Results of HammerDB workload performance with the minimal files.

- Results of HammerDB workload performance with pre-allocated data files.

Default configuration

Running HammerDB on the default TPCC1000 database gives the following results:

With 50 HammerDB virtual users you can get ~20K transactions per minute.

Wait statistics might show what is slowing down the workload. One way to analyze wait statistic on Managed Instance is QPI library. By analyzing wait statistics, we find that IO related wait statistics are dominant:

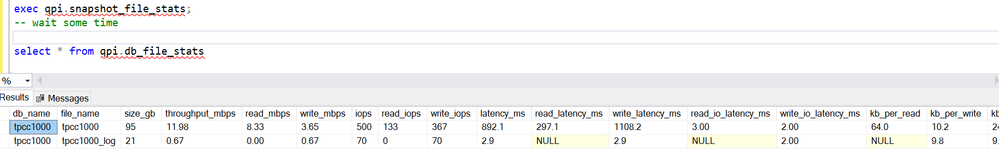

Buffer IO/PAGEIOLATCH wait statistics indicate that there is a potential issue with storage system. This can be confirmed by looking at the file IO statistics. Again, I’m using QPI library to analyze file IO statistics. The results of the file IO statistics analysis are shown below:

Expected average IO latency for General Purpose IO storage should be 5-10ms. However, we notice that the latency is between 0.2s and 1s. Note that IO latency is in the expected range (2-3ms); however, the requests on data file are queued and most of the IO requests are waiting although the ones that are actually executed are fast. Also, note that there is 500 IOPS on data file that represents max for the smallest file (P10).

The IOPS value close to the limit and the high difference between write_latency and write_io_latency or between read_latency and read_io_latency might indicate that the file doesn’t have enough IO capacity.

Since there is noticeable difference, I will repeat the experiment using a larger file size – in this case data file.

Increased file size

The General Purpose tier uses the remote Azure Premium Storage where IOPS and throughput depend on the file size. If we increase and pre-allocate the files, we will get better performance. Since there is an issue with data file latency, I’m increasing the size of tpcc1000 data file:

alter database tpcc1000

modify file (name='tpcc1000', size = 140 GB)

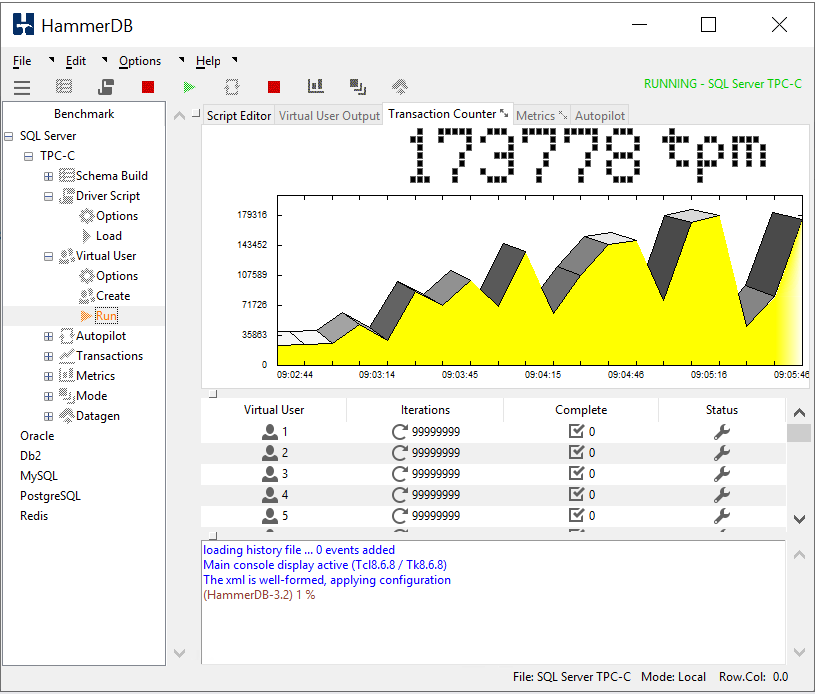

I can increase the file size to any value higher than 128GB to get the higher performance class. After executing this statement, performance of the HammerDB workload are slowly increasing as you can see in the picture below:

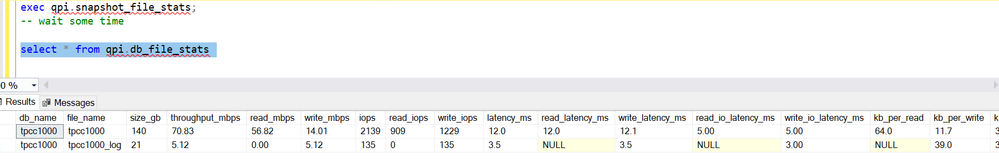

File IO statistic analysis shows that latency on data file is much better:

Latency is decreased to 12ms both for read and write. Wait statistics also show that PAGEIOLATCH is not so dominant like in the previous case.

After some time, HammerDB uses between 100K and 300K transactions/minute:

This way, we are getting 5-15x performance improvement with a simple change.

Conclusion

General Purpose Instance enables you to improve performance of your workload by increasing the file size. Every file is placed on a separate disk/container that has dedicated IO performance and will not share IO resources with other files.

If you properly identify IO bottlenecks you can improve performance of your workload by providing faster storage containers by increasing and pre-allocating the file size.

Note that the environment we used is not representative of your environment. When replicating this experiment, expect to see similar but not exactly the same numbers. Also, the HammerDB workload is not a standardized experiment and there is no precise definition of client VM characteristics, network throughput, point in time when the Managed Instance is performing backups, etc. However, you should increase the performance if you identify the bottlenecks properly.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.