Microsoft Sentinel Blog

Using external data sources to enrich network logs using Azure storage and KQL

Network data sources are among the highest-volume data sources, so threat hunting on them is often challenging unless the datasets are enriched. A common first step is matching against threat intelligence (TI) feeds. Beyond that, public IP addresses are difficult to investigate at scale unless we add context to them or correlate them with other data sources.

A common use-case request we have received from customers is tagging Azure datacenter IP addresses in network telemetry, so that they can filter them out and focus on the remaining telemetry to find suspicious traffic.

In this blog, we will show a custom solution that creates reference lookup tables from such data sources in blob storage and calls them within KQL queries using native operators. Ofer has written a blog post on approximate, partial and combined lookups, which you can reference if you are new to lookup tables. Thanks also to Dennis Pike from the CyberSecurity Solutions Group, who contributed by compiling the single data source KQL query.

Note:

Microsoft does not recommend filtering on these ranges or using them as an allow list for detections. Any such filtering needs to be reviewed and implemented with care so that it does not introduce blind spots in your security monitoring. However, these datasets can be used to enrich existing data sources, provide more context, and segregate or categorize a large portion of network traffic for analysts and threat hunters while hunting or investigating large datasets.

Identifying reliable data sources:

Microsoft and other vendors publish various network datasets that are updated regularly (sometimes weekly). These datasets can be used to enrich existing network data sources. They can be downloaded via public links and, if the download links are static, called directly with the externaldata operator. The same approach can be extended to threat intelligence feeds such as the COVID-19 indicators.

- Microsoft public IP ranges: https://www.microsoft.com/download/details.aspx?id=53602

- Azure IP Ranges and Service Tags – Public Cloud: https://www.microsoft.com/en-us/download/details.aspx?id=56519

- Office endpoints worldwide: https://endpoints.office.com/endpoints/worldwide?clientrequestid=b10c5ed1-bad1-445f-b386-b919946339a...

- Azure IP Ranges and Service Tags – US Government Cloud: https://www.microsoft.com/en-us/download/details.aspx?id=57063

- Azure China: https://www.microsoft.com/download/details.aspx?id=57062

- Azure Germany: https://www.microsoft.com/download/details.aspx?id=57064

- AWS IP ranges: https://ip-ranges.amazonaws.com/ip-ranges.json

- COVID-19 indicators: https://raw.githubusercontent.com/Azure/Azure-Sentinel/master/Sample%20Data/Feeds/Microsoft.Covid19....

Download and ingest datasets:

Depending on how they are published, the datasets are not always available via static links. For certain feeds, the download URL has a date appended, which makes it dynamic; such a URL cannot be called from scheduled queries unless the file is normalized on external storage.

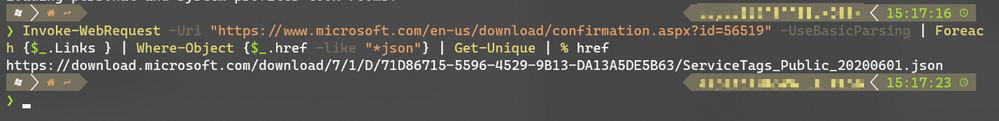

For example, consider Azure IP Ranges and Service Tags – Public Cloud.

The download page, https://www.microsoft.com/en-us/download/details.aspx?id=56519, has a Download button that links to https://www.microsoft.com/en-us/download/confirmation.aspx?id=56519. When this link is visited from a browser, it automatically redirects to the URL of the JSON file behind the scenes and downloads it.

However, this direct download link is not static: its location or date may change depending on when the file is updated and made available for download.

To find the actual download link programmatically, you can request the HTML response of the download link and look for href links ending in .json. Below is an example from a PowerShell console in Windows Terminal that parses the HTML response of the original download link and retrieves the direct download link to the JSON file.
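As a rough illustration of the same href-scanning idea in Python, the minimal sketch below collects anchor tags whose href points to a .json file. It is not the PowerShell or Azure Function code from this solution: the sample HTML and the download.example.com URLs are hypothetical, and it assumes the real page exposes the link as a plain `<a href>` in static HTML (if the link is injected by script, the parsing would need to change).

```python
from html.parser import HTMLParser
from typing import List


class JsonLinkFinder(HTMLParser):
    """Collects href values of <a> tags that point to a .json file."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name != "href" or not value:
                continue
            # Ignore any query string or fragment when checking the extension.
            path = value.split("#", 1)[0].split("?", 1)[0]
            if path.lower().endswith(".json") and value not in self.links:
                self.links.append(value)


def find_json_links(html: str) -> List[str]:
    """Return distinct .json hrefs in document order."""
    finder = JsonLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.links


# Hypothetical HTML standing in for the confirmation page.
sample_html = """
<html><body>
  <a href="https://download.example.com/ServiceTags_Public_20200608.json">click here to download manually</a>
  <a href="https://download.example.com/ServiceTags_Public_20200608.json">download</a>
  <a href="/en-us/help">Help</a>
</body></html>
"""

print(find_json_links(sample_html))
# ['https://download.example.com/ServiceTags_Public_20200608.json']
```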

For static URLs, you can also use Azure playbooks, which provide a friendly UI and a step-by-step process to ingest data into Azure Sentinel. See the recent blog post Using Azure Playbooks to import text-based threat indicators to Azure Sentinel for more details. For more complex use cases where the download link is not static, we can automate the process with a serverless Azure Function and PowerShell that store the data directly in Azure Blob Storage. Once the files are stored in blob storage, you can generate a read-only shared access link to use within KQL queries. You can also ingest these files directly into Sentinel as a custom log table; however, the datasets are very small, so this article covers the blob storage method. For example ingestion instructions, see the JSON ingestion tools dotnet_loganalytics_json_import and Azure Log Analytics API Clients.

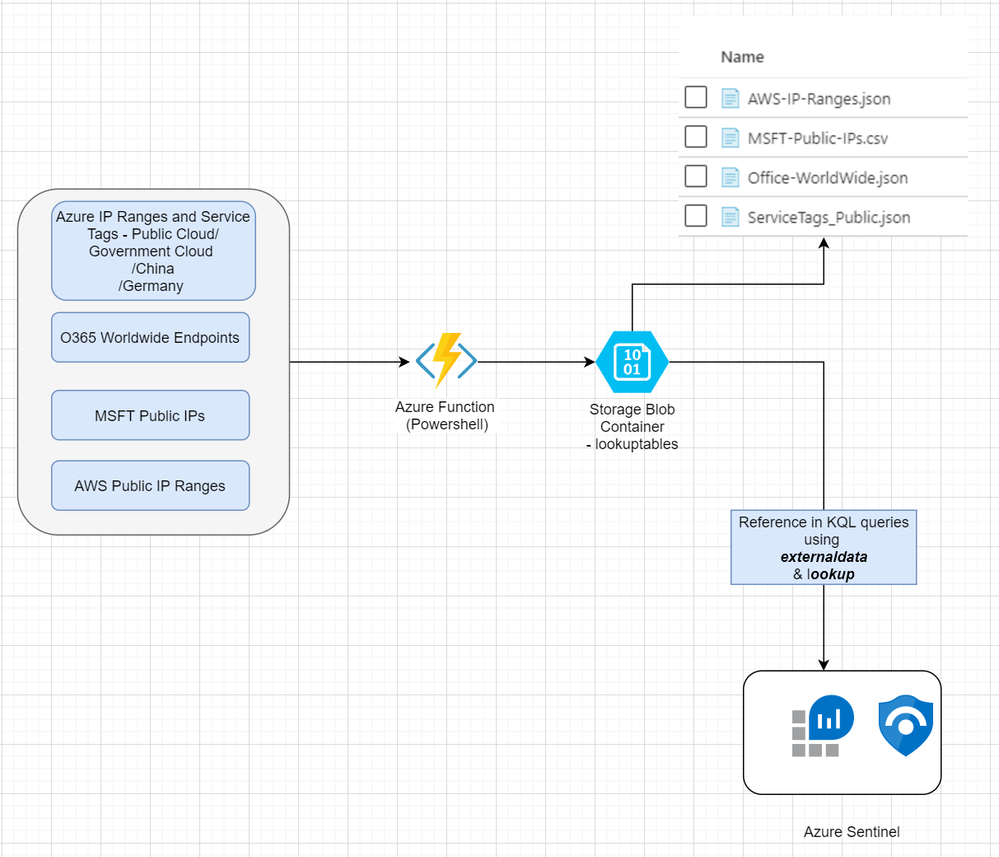

Architecture Diagram:

The diagram below explains the data workflow for our use case. We feed in multiple URLs containing the datasets you want to use as reference tables; in the example below, we use four such datasets. These are processed by our Azure Function, which parses the URLs to find direct download links if required and uploads the files to blob storage. Once the files are uploaded, you can generate read-only shared access links to access them via native KQL operators such as externaldata. We will see how to use these operators in queries later in this article.
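One way to picture the normalization step is deriving a stable blob name from a dated download URL, so scheduled queries can keep referencing the same file name. The sketch below assumes dated file names follow a `<base>_<YYYYMMDD>.<ext>` pattern (for example ServiceTags_Public_20200608.json); that pattern, the helper name `normalized_blob_name`, and the example.com URLs are assumptions for illustration, not the deployed function's logic.

```python
import posixpath
import re
from urllib.parse import urlparse

# Assumed pattern for dated file names: <base>_<YYYYMMDD>.<ext>
DATED_NAME = re.compile(r"^(?P<base>.+?)_\d{8}(?P<ext>\.[A-Za-z0-9]+)$")


def normalized_blob_name(download_url: str) -> str:
    """Map a (possibly dated) download URL to a stable blob name (hypothetical helper)."""
    # Take the last path segment, ignoring any query string.
    filename = posixpath.basename(urlparse(download_url).path)
    match = DATED_NAME.match(filename)
    if match:
        # Drop the date stamp so the blob name stays the same across releases.
        return match.group("base") + match.group("ext")
    # Static names (for example ip-ranges.json) are kept unchanged.
    return filename


for url in [
    "https://download.example.com/files/ServiceTags_Public_20200608.json",
    "https://download.example.com/ip-ranges.json",
]:
    print(normalized_blob_name(url))
# ServiceTags_Public.json
# ip-ranges.json
```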

When to use this architecture:

Consider deploying this architecture in your environment in the following scenarios:

- Centralized lookup tables with normalized file names that can be called from scheduled KQL queries in Sentinel.

- When a direct URL to download the file is not available and the file name needs normalization before it can be used within queries.

- When you cannot use the externaldata operator in KQL queries to access public sites directly, but can reach the Azure Storage domain (blob.core.windows.net).

- When you need to store and reliably access current or archived versions in case the original source is not available.

Deploy the function:

You can deploy the function in two ways: through VS Code or directly via an ARM template.

Detailed instructions for both methods are available on GitHub:

https://github.com/Azure/Azure-Sentinel/blob/master/Tools/UploadToBlobLookupTables/readme.md

Generating Shared Access Links from Azure Blob Storage:

To access the files in blob storage, you can generate pre-approved shared access links with read-only permissions.

You can follow the tutorial Get SAS for a blob container to generate a link for each blob file.

Step 1: Get a shared access signature (SAS) for the respective file in blob storage.

Step 2: Set the desired expiry period and set Permissions to Read.

Step 3: Copy the link from the last screen.
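To check what a copied link actually grants, its query string can be inspected: `sp` holds the permissions, `st` and `se` the start and expiry times, and `sr` the resource type (`b` for a blob). The hypothetical helpers below parse these fields from a link shaped like the one in the queries later in this article (signature replaced by a placeholder) and check that it is read-only and unexpired. They assume the expiry uses the full `YYYY-MM-DDTHH:MM:SSZ` form shown here. Once the link expires, externaldata can no longer read the file, so the expiry chosen in Step 2 matters for scheduled queries.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs, urlparse


def describe_sas(url: str) -> dict:
    """Extract permission, validity window and resource type from a SAS URL."""
    # parse_qs percent-decodes values, so '%3A' in the times becomes ':'.
    query = parse_qs(urlparse(url).query)

    def first(key: str) -> str:
        return query.get(key, [""])[0]

    return {
        "permissions": first("sp"),
        "start": first("st"),
        "expiry": first("se"),
        "resource": first("sr"),
    }


def is_read_only_and_valid(url: str, now: datetime) -> bool:
    """True if the link grants only read ('r') and has not expired at `now`."""
    info = describe_sas(url)
    if not info["expiry"]:
        return False
    # Assumes the seconds-precision UTC format used in this article's links.
    expiry = datetime.strptime(info["expiry"], "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return info["permissions"] == "r" and now < expiry


# Link shaped like the one used in this article; the signature is a placeholder.
sample_url = (
    "https://uploadtobloblookuptables.blob.core.windows.net/lookuptables/ServiceTags_Public.json"
    "?sv=2019-02-02&st=2020-06-08T17%3A21%3A23Z&se=2020-06-09T17%3A21%3A23Z"
    "&sr=b&sp=r&sig=PLACEHOLDER"
)

print(describe_sas(sample_url)["expiry"])  # 2020-06-09T17:21:23Z
print(is_read_only_and_valid(sample_url, datetime.now(timezone.utc)))  # False: expired in 2020
```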

KQL query with externaldata for Azure IP Ranges:

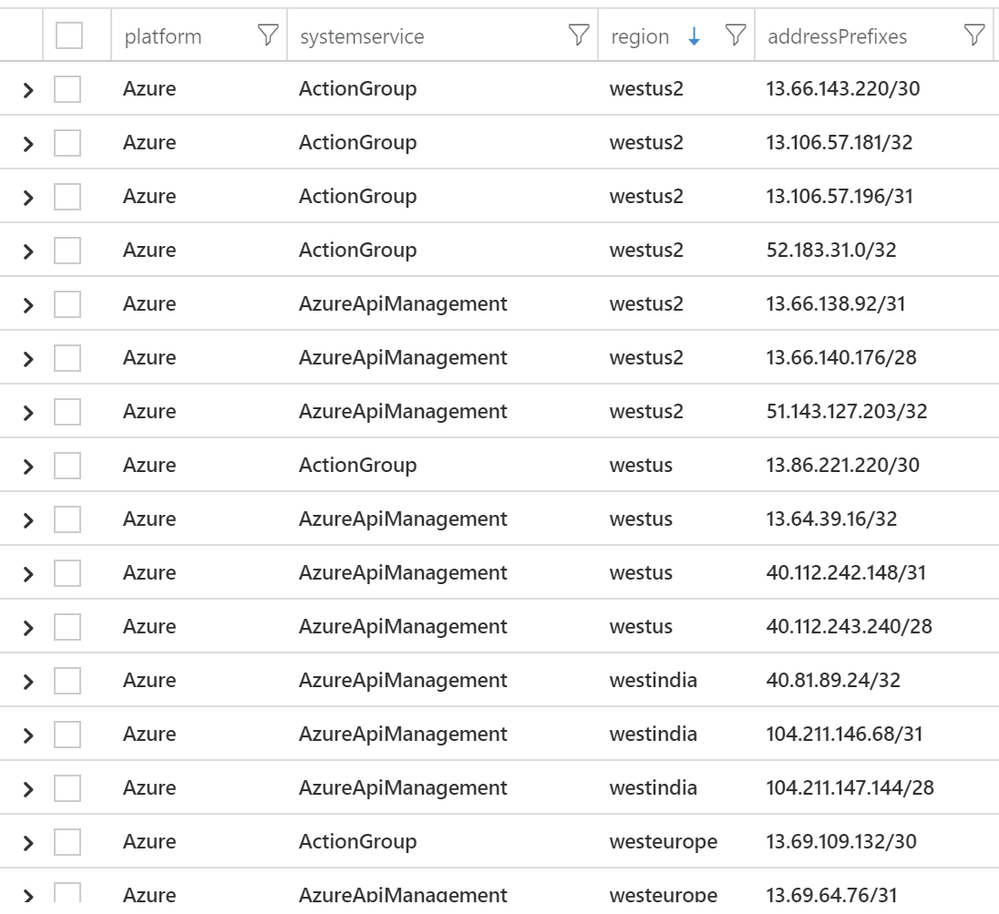

This query gives us around 5k subnet ranges.

KQL:

let AzureIPRangesPublicCloud = (externaldata(changeNumber:string, cloud:string, values: dynamic)
[@"https://uploadtobloblookuptables.blob.core.windows.net/lookuptables/ServiceTags_Public.json?sv=2019-02-02&st=2020-06-08T17%3A21%3A23Z&se=2020-06-09T17%3A21%3A23Z&sr=b&sp=r&sig=zTATanBOqaDbi2NAQirIMWRJmees2z0CQexk4XQiTb0%3D"]
with (format="multijson"));
// One row per service tag, then one row per address prefix
let AzureSubnetRangelist = AzureIPRangesPublicCloud
| mv-expand values
| extend platform = values.properties.platform, systemservice = values.properties.systemService, region = values.properties.region, addressPrefixes = values.properties.addressPrefixes
| mv-expand addressPrefixes
| project platform, systemservice, region, addressPrefixes;
AzureSubnetRangelist

Sample Output:

The above query returns around 5K subnets, each with context such as platform, region and systemservice. You can selectively pick from this lookup table depending on your Azure infrastructure. For this blog, we use the table as it is.
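The two mv-expand steps are easier to follow with the JSON shape in view. The Python sketch below performs the same flattening on a tiny hypothetical excerpt shaped like ServiceTags_Public.json (a top-level `values` array whose entries carry `properties.platform`, `systemService`, `region` and `addressPrefixes`). The sample entries and documentation-range prefixes are made up for illustration; the real file holds far more entries.

```python
import json
from typing import Dict, List

# Hypothetical excerpt with the same structure as ServiceTags_Public.json.
SAMPLE = json.loads("""
{
  "changeNumber": 1,
  "cloud": "Public",
  "values": [
    {"name": "AzureCloud.westeurope",
     "properties": {"platform": "Azure", "systemService": "", "region": "westeurope",
                    "addressPrefixes": ["192.0.2.0/24", "198.51.100.0/25"]}},
    {"name": "Storage",
     "properties": {"platform": "Azure", "systemService": "AzureStorage", "region": "",
                    "addressPrefixes": ["203.0.113.0/24"]}}
  ]
}
""")


def flatten_service_tags(doc: dict) -> List[Dict[str, str]]:
    """Same shape as: mv-expand values | extend ... | mv-expand addressPrefixes | project ..."""
    rows = []
    for value in doc.get("values", []):  # first mv-expand: one row per service tag
        props = value.get("properties", {})
        for prefix in props.get("addressPrefixes", []):  # second mv-expand: one row per prefix
            rows.append({
                "platform": props.get("platform"),
                "systemservice": props.get("systemService"),
                "region": props.get("region"),
                "addressPrefixes": prefix,
            })
    return rows


rows = flatten_service_tags(SAMPLE)
print(len(rows))  # 3
# Selective use, e.g. keep only the regions you deploy to:
print([r["addressPrefixes"] for r in rows if r["region"] == "westeurope"])
# ['192.0.2.0/24', '198.51.100.0/25']
```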

KQL query for filtering with a lookup on a single data source:

This KQL query uses a single data source to perform subnet matching and filter on destination IP ranges; the same approach works for source IPs:

Datasource:

- AzureNetworkAnalytics_CL

let AzureIPRangesPublicCloud = (externaldata(changeNumber:string, cloud:string, values: dynamic)
[@"https://uploadtobloblookuptables.blob.core.windows.net/lookuptables/ServiceTags_Public.json?sv=2019-02-02&st=2020-06-08T17%3A21%3A23Z&se=2020-06-09T17%3A21%3A23Z&sr=b&sp=r&sig=zTATanBOqaDbi2NAQirIMWRJmees2z0CQexk4XQiTb0%3D"] with (format="multijson"));
let AzureSubnetRangelist = AzureIPRangesPublicCloud
| mv-expand values
| extend platform = values.properties.platform, systemservice = values.properties.systemService, region = values.properties.region, addressPrefixes = values.properties.addressPrefixes
| mv-expand addressPrefixes
| project platform, systemservice, region, addressPrefixes;
// All Azure prefixes as a single dynamic array
let lookup = toscalar(AzureSubnetRangelist | summarize l = make_list(addressPrefixes));
let starttime = 1d;
let endtime = 1h;
let PrivateIPregex = @'^127\.|^10\.|^172\.1[6-9]\.|^172\.2[0-9]\.|^172\.3[0-1]\.|^192\.168\.';
// Distinct destination IPs seen in NSG flow logs
let AllNSGTrafficLogs = AzureNetworkAnalytics_CL
| where TimeGenerated between (startofday(ago(starttime)) .. startofday(ago(endtime)))
| where SubType_s == "FlowLog"
| distinct DestIP_s;
// Destination IPs that fall inside any Azure prefix
let AzureSubnetMatchedIPs = materialize(AllNSGTrafficLogs
| mv-apply l = lookup to typeof(string) on (where ipv4_is_match(DestIP_s, l))
| project-away l);
// Public destinations that are not in the Azure ranges
AzureNetworkAnalytics_CL
| where TimeGenerated between (startofday(ago(starttime)) .. startofday(ago(endtime)))
| where SubType_s == "FlowLog"
| where isnotempty(DestIP_s)
| extend DestinationIpType = iff(DestIP_s matches regex PrivateIPregex, "private", "public")
| where DestinationIpType == "public"
| where DestIP_s !in ((AzureSubnetMatchedIPs))
| project-reorder TimeGenerated, Type, SrcIP_s, DestIP_s, *
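Outside Kusto, the filtering logic of this query can be sketched with Python's ipaddress module: take the distinct destination IPs, drop private ones with the same regex, and keep only those outside every Azure prefix. This is an approximation for illustration: it uses ipaddress containment in place of ipv4_is_match, the helper name is hypothetical, and the sample IPs and prefixes are documentation ranges. Like the query, it never matches IPv6 prefixes, since ipv4_is_match only handles IPv4 strings.

```python
import ipaddress
import re
from typing import Iterable, List

# Same pattern as PrivateIPregex in the query.
PRIVATE_IP_REGEX = re.compile(r"^127\.|^10\.|^172\.1[6-9]\.|^172\.2[0-9]\.|^172\.3[0-1]\.|^192\.168\.")


def non_azure_public_ips(dest_ips: Iterable[str], azure_prefixes: Iterable[str]) -> List[str]:
    """Distinct public destination IPs that are not inside any IPv4 Azure prefix."""
    networks = []
    for prefix in azure_prefixes:
        net = ipaddress.ip_network(prefix, strict=False)
        if net.version == 4:  # IPv6 prefixes are skipped, mirroring ipv4_is_match
            networks.append(net)
    result = []
    for ip in dict.fromkeys(dest_ips):  # distinct, order-preserving (like `distinct DestIP_s`)
        if not ip or PRIVATE_IP_REGEX.search(ip):
            continue  # empty or private destination
        addr = ipaddress.ip_address(ip)
        if not any(addr in net for net in networks):
            result.append(ip)
    return result


prefixes = ["192.0.2.0/24", "2001:db8::/32"]
dest = ["10.0.0.4", "192.0.2.10", "198.51.100.7", "198.51.100.7", ""]
print(non_azure_public_ips(dest, prefixes))  # ['198.51.100.7']
```

Deduplicating first mirrors the `distinct` plus materialize() pattern in the query: the expensive prefix comparison runs once per unique IP rather than once per log row.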

Sample results:

The results below come from NSG flow logs (AzureNetworkAnalytics_CL), filtered on the destination IP field using the Azure IP ranges lookup table.

KQL query for filtering with a lookup on multiple data sources:

This KQL query unions multiple network data sources to perform subnet matching and allow-list destination IP ranges; the same can be done for source IPs.

Data sources:

- CommonSecurityLog

- VMConnection

- WireData

KQL Query:

let AzureIPRangesPublicCloud = (externaldata(changeNumber:string, cloud:string, values: dynamic) [@"https://uploadtobloblookuptables.blob.core.windows.net/lookuptables/ServiceTags_Public.json?sv=2019-02-02&st=2020-06-08T17%3A21%3A23Z&se=2020-06-09T17%3A21%3A23Z&sr=b&sp=r&sig=zTATanBOqaDbi2NAQirIMWRJmees2z0CQexk4XQiTb0%3D"] with (format="multijson"));
// One row per address prefix, so the set below holds individual prefixes
let AzureSubnetRangelist = AzureIPRangesPublicCloud
| mv-expand values
| extend addressPrefixes = values.properties.addressPrefixes
| mv-expand addressPrefixes
| project addressPrefixes;
let lookup = toscalar(AzureSubnetRangelist | summarize l = make_set(addressPrefixes));
// Hourly window: from 2 hours ago to 1 hour ago
let starttime = 2h;
let endtime = 1h;
let PrivateIPregex = @'^127\.|^10\.|^172\.1[6-9]\.|^172\.2[0-9]\.|^172\.3[0-1]\.|^192\.168\.';
// Distinct public destination IPs across all three tables
let AllDestIPs = materialize(union isfuzzy=true
  (VMConnection
  | where TimeGenerated between (ago(starttime) .. ago(endtime))
  | where isnotempty(DestinationIp) and isnotempty(SourceIp)
  | extend DestinationIpType = iff(DestinationIp matches regex PrivateIPregex, "private", "public")
  | where DestinationIpType == "public"
  | extend DeviceVendor = "VMConnection"
  | extend DestinationIP = DestinationIp, SourceIP = SourceIp
  | distinct DestinationIP),
  (CommonSecurityLog
  | where TimeGenerated between (ago(starttime) .. ago(endtime))
  | where isnotempty(DestinationIP) and isnotempty(SourceIP)
  | extend DestinationIpType = iff(DestinationIP matches regex PrivateIPregex, "private", "public")
  | where DestinationIpType == "public"
  | distinct DestinationIP),
  (WireData
  | where TimeGenerated between (ago(starttime) .. ago(endtime))
  | where isnotempty(RemoteIP) and isnotempty(LocalIP)
  | extend DestinationIpType = iff(RemoteIP matches regex PrivateIPregex, "private", "public")
  | where DestinationIpType == "public"
  | extend DestinationIP = RemoteIP, SourceIP = LocalIP
  | extend DeviceVendor = "WireData"
  | distinct DestinationIP));
// Destination IPs that fall inside any Azure prefix
let AzureSubnetMatchedIPs = materialize(AllDestIPs
| mv-apply l = lookup to typeof(string) on (where ipv4_is_match(DestinationIP, l))
| project-away l);
// Traffic whose destination is not in the Azure ranges
let TrafficLogsNonAzure = (union isfuzzy=true
  (VMConnection
  | where TimeGenerated between (ago(starttime) .. ago(endtime))
  | where isnotempty(DestinationIp) and isnotempty(SourceIp)
  | extend DestinationIP = DestinationIp, SourceIP = SourceIp
  | extend DeviceVendor = "VMConnection"
  | where DestinationIP !in ((AzureSubnetMatchedIPs))),
  (CommonSecurityLog
  | where TimeGenerated between (ago(starttime) .. ago(endtime))
  | where isnotempty(DestinationIP) and isnotempty(SourceIP)
  | where DestinationIP !in ((AzureSubnetMatchedIPs))),
  (WireData
  | where TimeGenerated between (ago(starttime) .. ago(endtime))
  | where isnotempty(RemoteIP) and isnotempty(LocalIP)
  | extend DestinationIP = RemoteIP, SourceIP = LocalIP
  | extend DeviceVendor = "WireData"
  | where DestinationIP !in ((AzureSubnetMatchedIPs))));
TrafficLogsNonAzure
| project-away Type, RemoteIP, LocalIP, DestinationIp, SourceIp
| project-reorder TimeGenerated, DeviceVendor, SourceIP, DestinationIP, *
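Each table names its IP columns differently, which is why the query renames them before the union. The Python sketch below shows the same normalization and the `!in` filter on hypothetical sample records; `COLUMN_MAP` and the helper names are illustrative, while the column names are taken from the query.

```python
from typing import Dict, Iterable, List, Set

# Per-table source/destination column names, as used in the query's union.
COLUMN_MAP = {
    "VMConnection": {"src": "SourceIp", "dest": "DestinationIp"},
    "CommonSecurityLog": {"src": "SourceIP", "dest": "DestinationIP"},
    "WireData": {"src": "LocalIP", "dest": "RemoteIP"},
}


def normalize(table: str, record: dict) -> dict:
    """Rename table-specific columns to SourceIP/DestinationIP."""
    cols = COLUMN_MAP[table]
    return {
        # CommonSecurityLog carries its own DeviceVendor; the others get the table name.
        "DeviceVendor": record.get("DeviceVendor", table),
        "SourceIP": record.get(cols["src"]),
        "DestinationIP": record.get(cols["dest"]),
    }


def traffic_non_azure(records_by_table: Dict[str, Iterable[dict]], azure_matched_ips: Set[str]) -> List[dict]:
    """Normalized rows whose destination is not among the Azure-matched IPs."""
    rows = []
    for table, records in records_by_table.items():
        for record in records:
            row = normalize(table, record)
            if not row["SourceIP"] or not row["DestinationIP"]:
                continue  # like the isnotempty() checks
            if row["DestinationIP"] in azure_matched_ips:
                continue  # like: where DestinationIP !in (AzureSubnetMatchedIPs)
            rows.append(row)
    return rows


sample_records = {
    "VMConnection": [{"SourceIp": "10.0.0.4", "DestinationIp": "198.51.100.7"}],
    "CommonSecurityLog": [{"DeviceVendor": "ExampleVendor", "SourceIP": "10.0.0.5", "DestinationIP": "192.0.2.10"}],
    "WireData": [{"LocalIP": "", "RemoteIP": "203.0.113.9"}],
}
print(traffic_non_azure(sample_records, {"192.0.2.10"}))
# [{'DeviceVendor': 'VMConnection', 'SourceIP': '10.0.0.4', 'DestinationIP': '198.51.100.7'}]
```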

Note: The above query checks multiple high-volume tables, and the lookup step is CPU- and memory-intensive. We recommend running it over smaller intervals (hourly) or splitting it per data source to review the results.

Sample results:

Happy hunting!

References:

- Approximate, partial and combined lookups in Azure Sentinel

- Centralized Lookup Tables for enrichment in KQL with Azure Function to upload to blob storage: https://github.com/Azure/Azure-Sentinel/pull/720

- lookup operator: https://docs.microsoft.com/en-us/azure/data-explorer/kusto/query/lookupoperator?pivot=azuremonitor

- externaldata operator

- materialize() function: https://docs.microsoft.com/en-us/azure/data-explorer/kusto/query/materializefunction
