Using Azure Data Explorer for long term retention of Microsoft Sentinel logs

**In February 2022, Azure Monitor Logs announced the Archive tier, which is now the preferred way to store logs for long-term retention in Microsoft Sentinel. Visit this link for more details about the Archive tier.**

In this blog post, we will explain how you can use Azure Data Explorer (ADX) as a secondary log store and when this might be appropriate for your organization.

A common question we get from customers and partners is how to save money on their Microsoft Sentinel bill, and retention costs are one of the areas that can be optimized. As you may know, data retention in Sentinel is free for the first 90 days; after that, it is charged.
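To make the retention trade-off concrete, the following Python sketch estimates the steady-state monthly cost of keeping data beyond the free 90-day window. It assumes a constant daily ingestion volume and a flat per-GB-per-month retention price; the price in the example is a placeholder, not a published rate, so check current Microsoft Sentinel pricing for your region.

```python
def monthly_retention_cost(daily_gb: float, retention_days: int,
                           price_per_gb_month: float, free_days: int = 90) -> float:
    """Estimate the steady-state monthly retention charge.

    Assumes a constant daily ingestion volume, so once the workspace has been
    running for `retention_days` it holds daily_gb * retention_days of data.
    Only data older than `free_days` is billable.
    """
    if daily_gb < 0 or retention_days < 0 or price_per_gb_month < 0:
        raise ValueError("inputs must be non-negative")
    billable_days = max(0, retention_days - free_days)
    billable_gb = daily_gb * billable_days
    return billable_gb * price_per_gb_month


# Example: 100 GB/day kept for one year, with a placeholder price of 0.10 per GB-month.
print(monthly_retention_cost(100, 365, 0.10))
```

Comparing this figure with the cost of an ADX cluster sized for the same data is what tells you whether moving aged data pays off.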

Customers normally need to keep data accessible for longer than three months. In some cases, this is just due to regulatory requirements, but in other cases they need to be able to run investigations on older data. ADX can be a great service to leverage in these cases, where older data must remain accessible but customers also want to reduce data retention costs.

What is Azure Data Explorer (ADX)?

ADX is a big data analytics platform that is highly optimized for all types of logs and telemetry data analytics. It provides low-latency, high-throughput ingestion and very fast queries over extremely large volumes of data. It is feature-rich in time series analytics, log analytics, full text search, advanced analytics (e.g., pattern recognition, forecasting, anomaly detection), visualization, scheduling, orchestration, automation, and many more native capabilities.

Under the covers, ADX is a cluster composed of engine nodes that serve queries and data management service nodes that perform and orchestrate a variety of data-related activities, including ingestion and shard management. Some important features include:

- It offers configurable hot and cold caches backed by memory and local disk, with data persistency on Azure Storage.

- Data persisted in ADX is durably backed by Azure Storage, which offers replication out of the box: locally within an Azure datacenter and zonally within an Azure region.

- ADX uses Kusto Query Language (KQL) as its query language, which is also the language used in Microsoft Sentinel. This is a great benefit because we can use the same queries in both services. Also, as we will explain later in this article, we can perform cross-platform queries that aggregate and correlate data across ADX and Sentinel/Log Analytics.

From a cost perspective, ADX offers reserved instance pricing for the compute nodes and autoscaling capabilities, which adjust the cluster size based on the current workload. Azure Advisor also integrates with ADX to offer cost optimization recommendations.

You can learn much more about ADX in the official documentation pages and the ADX blog.

When to use ADX vs Microsoft Sentinel for long term data

Microsoft Sentinel is a SaaS service with full SIEM+SOAR capabilities that offers very fast deployment and configuration times, plus many advanced out-of-the-box security features needed in a SOC, such as incident management, visual investigation, threat hunting, UEBA, and an ML-powered detection rules engine.

That being said, security data stored in Sentinel might lose some of its value after a few months, and SOC users might not need to access it as frequently as newer data. They might still need it for sporadic investigations or audit purposes, while remaining mindful of the retention cost in Sentinel. How do we achieve this balance? ADX is the answer.

With ADX, we can store the data at a lower price while still being able to explore it using the same KQL queries that we execute in Sentinel. We can also use the ADX proxy feature, which enables cross-platform queries that aggregate and correlate data spread across ADX, Application Insights, and Sentinel/Log Analytics. We can even build Workbooks that visualize data spread across these data stores. ADX also offers new ways to store data that give us better control and granularity (see the Management considerations in ADX section below).
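As an illustration of such a cross-platform query, the Python sketch below builds a KQL query to run from Sentinel/Log Analytics: recent events come from the workspace table, and older events come from ADX through the `adx()` cross-service function. The cluster URL, database name, table name, and day counts are hypothetical placeholders, and the sketch assumes the ADX table has the same schema as the Log Analytics table. Splitting on a single cutoff avoids counting records twice when the same data exists in both stores.

```python
def cross_service_query(cluster_url: str, database: str, table: str,
                        recent_days: int, total_days: int) -> str:
    """Build a KQL query that reads recent data from Log Analytics and older data from ADX.

    Records newer than `recent_days` come from the workspace table; records between
    `recent_days` and `total_days` old come from the ADX table via adx().
    """
    if not 0 < recent_days < total_days:
        raise ValueError("expected 0 < recent_days < total_days")
    adx_table = f"adx('{cluster_url.rstrip('/')}/{database}').{table}"
    return "\n".join([
        "union",
        f"    ({table} | where TimeGenerated >= ago({recent_days}d)),",
        f"    ({adx_table} | where TimeGenerated < ago({recent_days}d)"
        f" and TimeGenerated >= ago({total_days}d))",
        "| summarize Events = count() by bin(TimeGenerated, 1d)",
    ])


# Hypothetical cluster and database names.
print(cross_service_query("https://mycluster.westeurope.kusto.windows.net/",
                          "SentinelArchive", "SecurityEvent", 90, 365))
```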

In summary, in the context of a SOC, ADX provides the right balance of cost and usability for aged data that might no longer benefit from all the security intelligence built on top of Microsoft Sentinel.

Log Analytics Data Export feature

Let’s take a look at the new Data Export feature in Log Analytics that we will use in some of the architectures below (full documentation on this feature here).

This feature lets you export data from selected tables (see supported tables here) in your Log Analytics workspace as it reaches ingestion, and continuously send it to an Azure Storage account and/or an Event Hub.

Once data export is configured in your workspace, any new data for the selected tables that arrives at the Log Analytics ingestion endpoint and is targeted to your workspace is exported to your Storage account hourly, or to Event Hub in near-real-time. NOTE: There isn't a way to filter data and limit the export to certain events. For example, when configuring a data export rule for the SecurityEvent table, all data sent to the SecurityEvent table from configuration time onward is exported.

Take the following into account:

- Both the workspace and the destination (Storage Account or Event Hub) must be located in the same region.

- Not all log types are supported as of now. See supported tables here.

- Custom log types are not supported as of now. They will be supported in the future.
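These constraints lend themselves to a quick pre-flight check before you create an export rule. The following Python sketch is hypothetical: `ExportRule` is not an Azure SDK type, and the supported-tables set is a placeholder that you would fill from the supported tables list in the documentation. It treats any table ending in `_CL` (the suffix Log Analytics uses for custom log tables) as unsupported, and normalizes region names so that "West Europe" and "westeurope" compare equal.

```python
from dataclasses import dataclass, field


@dataclass
class ExportRule:
    """Hypothetical description of a data export rule (not an Azure SDK type)."""
    workspace_region: str
    destination_region: str
    tables: list = field(default_factory=list)


def _normalize_region(region: str) -> str:
    # "West Europe" and "westeurope" refer to the same region.
    return region.replace(" ", "").lower()


def export_rule_problems(rule: ExportRule, supported_tables: set) -> list:
    """Return human-readable problems; an empty list means the rule looks valid."""
    problems = []
    if _normalize_region(rule.workspace_region) != _normalize_region(rule.destination_region):
        problems.append(
            f"workspace ({rule.workspace_region}) and destination "
            f"({rule.destination_region}) must be in the same region")
    for table in rule.tables:
        if table.endswith("_CL"):
            problems.append(f"{table}: custom log tables are not supported")
        elif table not in supported_tables:
            problems.append(f"{table}: not in the supported tables list")
    return problems


# Placeholder list; check the documentation for the current supported tables.
SUPPORTED = {"SecurityEvent", "Syslog", "CommonSecurityLog"}
rule = ExportRule("West Europe", "northeurope", ["SecurityEvent", "MyApp_CL"])
for problem in export_rule_problems(rule, SUPPORTED):
    print(problem)
```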

Currently there’s no cost for this feature during preview, but in the future it will be charged based on the number of GB transferred. Pricing details can be seen here.

Architecture options

There are a couple of options to integrate Microsoft Sentinel and ADX.

- Send the data to Sentinel and ADX in parallel

- Sentinel data sent to ADX via Event Hub

Send the data to Sentinel and ADX in parallel

This architecture is also explained here. In this case, only data that has security value is sent to Microsoft Sentinel, where it is used in detections, incident investigations, threat hunting, UEBA, etc. Retention in Microsoft Sentinel is limited to what SOC users need, typically 3-12 months. All data (regardless of its security value) is sent to ADX and retained there for the longer term, as this is cheaper storage than Sentinel/Log Analytics.
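The split can be pictured as a simple routing rule applied wherever your collection pipeline forks the stream. In the Python sketch below, every event goes to ADX, and only events matching a security-value predicate also go to Sentinel. The `Category` field and the predicate are hypothetical; in practice the decision depends on your data sources and detection needs.

```python
from typing import Callable, Iterable, List, Tuple

Event = dict


def route_events(events: Iterable[Event],
                 has_security_value: Callable[[Event], bool]) -> Tuple[List[Event], List[Event]]:
    """Split a stream for the parallel architecture.

    Returns (to_sentinel, to_adx): ADX receives every event for long-term
    retention, while Sentinel receives only the security-relevant subset.
    """
    to_sentinel: List[Event] = []
    to_adx: List[Event] = []
    for event in events:
        to_adx.append(event)
        if has_security_value(event):
            to_sentinel.append(event)
    return to_sentinel, to_adx


# Hypothetical predicate: treat security and audit events as having security value.
def is_security_relevant(event: Event) -> bool:
    return event.get("Category") in {"Security", "Audit"}


events = [{"Category": "Security"}, {"Category": "Performance"}, {"Category": "Audit"}]
to_sentinel, to_adx = route_events(events, is_security_relevant)
print(len(to_sentinel), len(to_adx))
```

Every event in `to_sentinel` also appears in `to_adx`; that overlap is the data duplication this architecture accepts.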

An additional benefit of this architecture is that you can correlate data spread across both data stores. This can be especially helpful when you want to enrich security data (hosted in Sentinel) with operational data stored in ADX (see details here).

Adopting this architecture means that there will be some data that is stored in both Log Analytics and ADX, because what we send to Log Analytics is a subset of what is sent to ADX. Even with this data duplication, the cost savings are significant as we reduce the retention costs in Sentinel.

Microsoft Sentinel data sent to ADX via Event Hub

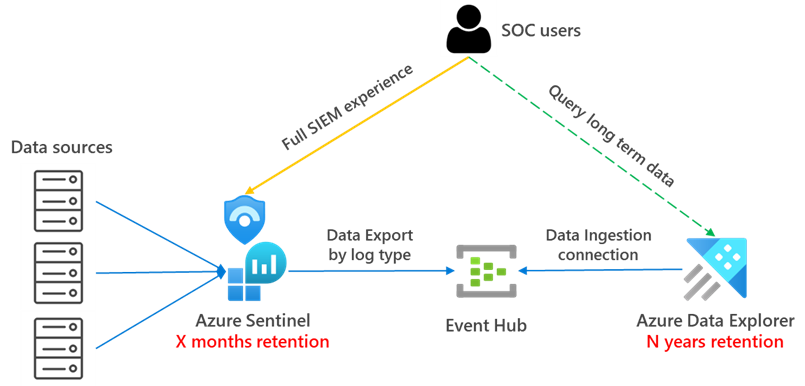

Combining the Data Export feature and ADX, we can choose to stream our logs to Event Hub and then ingest them into ADX. The high-level architecture would look like this:

With this architecture, we have the full Sentinel SIEM experience (incident management, visual investigation, threat hunting, advanced visualizations, UEBA, etc.) for data that needs to be accessed frequently (for example, the last 6 months), plus the ability to query long term data directly in ADX. Queries against long term data can be ported from Sentinel to ADX without changes.

Similar to the previous case, with this architecture there will be some data duplication as the data is streamed to ADX as it arrives into Log Analytics.
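Because every record is streamed to ADX while only the most recent window stays in Sentinel, a query over a long time range can be split at the Sentinel retention cutoff. The Python sketch below plans that split for a half-open range; the 180-day retention in the example mirrors the "6 months" mentioned above and is only an assumption.

```python
from datetime import datetime, timedelta, timezone


def plan_query(start: datetime, end: datetime, now: datetime,
               sentinel_retention_days: int) -> list:
    """Split [start, end) into (store, start, end) segments.

    Data newer than the Sentinel retention cutoff is queried in Sentinel, where the
    full SIEM experience is available; anything older is queried directly in ADX.
    """
    if start >= end:
        raise ValueError("start must be before end")
    cutoff = now - timedelta(days=sentinel_retention_days)
    plan = []
    if start < cutoff:
        plan.append(("ADX", start, min(end, cutoff)))
    if end > cutoff:
        plan.append(("Sentinel", max(start, cutoff), end))
    return plan


now = datetime(2022, 7, 1, tzinfo=timezone.utc)
for store, seg_start, seg_end in plan_query(now - timedelta(days=365), now, now, 180):
    print(store, seg_start.date(), seg_end.date())
```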

How do you set this up, you might ask? Here is a high-level list of steps:

1. Configure Log Analytics Data Export to Event Hub. See detailed instructions here.

Steps 2 through 6 are documented in detail in this article: Ingest and query monitoring data in Azure Data Explorer.

2. Create the ADX cluster and database. An ADX database is roughly the equivalent of a workspace in Log Analytics terminology. Detailed steps can be found here. For guidance on ADX sizing, you can visit this link.

3. Create the target tables. The raw data is first ingested into an intermediate (raw) table, from which it is transformed and expanded. Using an update policy (think of it as a function that is applied to all new data), the expanded data is then ingested into the final table, which has the same schema as the original table in Log Analytics/Sentinel. We set the retention on the raw table to 0 days, because we want the data to be stored only in the properly formatted table and deleted from the raw table as soon as it is transformed. Detailed instructions can be found here.

4. Create the table mapping. Because the data format is JSON, a data mapping is required. It defines how records coming from Event Hub land in the raw events table. Details for this step can be found here.

5. Create the update policy. In this step, we create a function that transforms the raw records and attach it as an update policy to the destination table, with the raw table as its source, so that data is transformed at ingestion time. See details here. This step is only needed if you want the tables to have the same schema and format as in Log Analytics.

6. Create a data connection between Event Hub and the raw data table in ADX. In this step, we tell ADX where and how to get the data. In our case, the source is Event Hub, and we specify the target raw table, the data format (JSON), and the mapping to apply (created in step 4). Details on how to do this can be found here.

7. Modify the retention for the target table. The default retention policy is 100 years, which is more than needed in most cases. The following command changes the retention policy to 1 year: `.alter-merge table <tableName> policy retention softdelete = 365d recoverability = disabled`
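To see what steps 3 to 5 do to a single message, the Python sketch below mirrors the transformation outside ADX. It assumes the Data Export payload on Event Hub is a JSON object whose `records` array holds one object per Log Analytics row, which is what a JSON mapping on the path `$.records` into a dynamic column captures. In ADX itself, the update policy function would do this in KQL with `mv-expand` followed by `project`; the Python only illustrates that logic, and the column list is an example.

```python
import json


def expand_export_message(message: str, columns: list) -> list:
    """Mimic the raw table + update policy flow for one Event Hub message.

    1. The JSON mapping stores payload["records"] in a single dynamic column.
    2. The update policy expands that array into one row per record (mv-expand)
       and keeps only the target table's columns (project).
    Missing fields become None, much like nulls in the target table.
    """
    payload = json.loads(message)
    records = payload.get("records", [])
    return [{column: record.get(column) for column in columns} for record in records]


message = json.dumps({"records": [
    {"TimeGenerated": "2022-01-01T00:00:00Z", "EventID": 4624, "Computer": "srv1"},
    {"TimeGenerated": "2022-01-01T00:01:00Z", "EventID": 4625},
]})
for row in expand_export_message(message, ["TimeGenerated", "EventID", "Computer"]):
    print(row)
```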

The good news is that all these steps can be easily automated with this script by @sreedharande. Visit the script page for more details on how to use it.
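Independently of that script, the Python sketch below shows the kind of management commands that steps 3, 4, 5, and 7 boil down to for one table. The naming convention (`<Table>Raw`, `<Table>RawMapping`, `<Table>Expand`), the column list, and the type conversions are assumptions for illustration, and the generated commands should be reviewed against the ADX documentation before you run them. The Event Hub data connection (step 6) is configured outside KQL, for example in the Azure portal, so it is not included.

```python
import json

# KQL conversion function for each supported column type.
CONVERTERS = {
    "datetime": "todatetime", "string": "tostring", "int": "toint",
    "long": "tolong", "real": "toreal", "bool": "tobool",
    "guid": "toguid", "dynamic": "todynamic",
}


def adx_setup_commands(table: str, columns: list, retention_days: int = 365) -> list:
    """Return ADX management commands for one exported Log Analytics table.

    `columns` is a list of (name, kql_type) pairs describing the target schema.
    """
    raw, mapping, function = f"{table}Raw", f"{table}RawMapping", f"{table}Expand"
    for _, kql_type in columns:
        if kql_type not in CONVERTERS:
            raise ValueError(f"unsupported column type: {kql_type}")
    schema = ", ".join(f"{name}:{kql_type}" for name, kql_type in columns)
    projection = ", ".join(
        f"{name} = {CONVERTERS[kql_type]}(events.{name})" for name, kql_type in columns)
    mapping_json = json.dumps([{"column": "Records", "Properties": {"path": "$.records"}}])
    update_policy = json.dumps([{"IsEnabled": True, "Source": raw,
                                 "Query": f"{function}()", "IsTransactional": True}])
    return [
        # Step 3: raw landing table (one dynamic column) and the final table.
        f".create table {raw} (Records:dynamic)",
        f".create table {table} ({schema})",
        # Step 4: JSON mapping from the Event Hub payload into the raw table.
        f".create table {raw} ingestion json mapping '{mapping}' '{mapping_json}'",
        # Step 5: expansion function, then the update policy on the final table.
        f".create function {function}() {{ {raw} | mv-expand events = Records"
        f" | project {projection} }}",
        f".alter table {table} policy update @'{update_policy}'",
        # Step 3: raw data is not kept once it has been transformed.
        f".alter-merge table {raw} policy retention softdelete = 0d",
        # Step 7: retention on the final table.
        f".alter-merge table {table} policy retention softdelete = {retention_days}d"
        " recoverability = disabled",
    ]


example_columns = [("TimeGenerated", "datetime"), ("Computer", "string"), ("EventID", "int")]
for command in adx_setup_commands("SecurityEvent", example_columns):
    print(command)
```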

Additional cost components of this architecture are:

- Log Analytics Data Export – charged per exported GBs

- Event Hub – charged by Throughput Unit (1 TU ~ 1 MB/s)
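Both components scale with the exported volume. The Python sketch below turns a daily volume into a rough Event Hub throughput-unit count, using the 1 TU ~ 1 MB/s figure above, and into a monthly export charge. The peak factor, the 30-day month, and any per-GB price you pass in are assumptions; real traffic is bursty, so size for your observed peaks rather than the daily average.

```python
import math

SECONDS_PER_DAY = 86_400


def event_hub_throughput_units(daily_gb: float, peak_factor: float = 1.0,
                               mb_per_gb: int = 1024) -> int:
    """Rough TU estimate, assuming 1 TU ~ 1 MB/s of ingress.

    The average rate is scaled by `peak_factor` to leave headroom for bursts;
    at least one TU is always returned.
    """
    if daily_gb < 0 or peak_factor < 1:
        raise ValueError("daily_gb must be >= 0 and peak_factor >= 1")
    average_mb_per_s = daily_gb * mb_per_gb / SECONDS_PER_DAY
    return max(1, math.ceil(average_mb_per_s * peak_factor))


def monthly_export_cost(daily_gb: float, price_per_gb: float, days: int = 30) -> float:
    """Data Export charge for a month, assuming a flat price per exported GB."""
    return daily_gb * days * price_per_gb


print(event_hub_throughput_units(100))                  # average load only
print(event_hub_throughput_units(100, peak_factor=3))   # with burst headroom
```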

Management considerations in ADX

These are some of the areas where ADX offers additional controls:

- Cluster size and SKU. You must carefully plan the number of nodes and the VM SKU in your cluster. These factors determine the amount of processing power and the size of your hot cache (SSD and memory). The bigger the cache, the more data you can query with high performance. We encourage you to visit the ADX sizing calculator, where you can try different configurations and see the resulting cost. ADX also has an autoscale capability that adds or removes nodes as needed based on cluster load (see more details here).

- Hot/cold cache. In ADX, you have greater control over which data will be in the hot cache and will therefore return results faster. If you have large amounts of data in your ADX cluster, it might be advisable to break tables down by month, so you have finer granularity over which data is present in the hot cache. See here for more details.

- Retention. ADX has a setting that defines when data is removed from a database or from an individual table. This is an important setting for limiting storage costs. See here for more details.

- Security. There are several security settings in ADX that help you protect your data, ranging from identity management to encryption (see here for details). Specifically around RBAC, ADX lets you restrict access to databases, tables, or even rows within a table. Here you can see details about row level security.

- Data sharing. ADX allows you to make pieces of data available to other parties (partner, vendor), or even buy data from other parties (more details here).
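To illustrate the month-by-month approach to the hot cache mentioned above, the Python sketch below lists which monthly tables overlap a hot-cache window and builds a caching policy command for each. The `<Table>_YYYY_MM` naming convention and the 45-day window are hypothetical; `.alter table ... policy caching hot = ...` is the ADX command that sets a table's caching policy.

```python
from datetime import date, timedelta


def monthly_tables_in_window(base: str, today: date, hot_days: int) -> list:
    """Names of monthly tables (hypothetical <base>_YYYY_MM convention) whose
    month overlaps the window [today - hot_days, today]."""
    if hot_days < 0:
        raise ValueError("hot_days must be non-negative")
    start = today - timedelta(days=hot_days)
    year, month = start.year, start.month
    names = []
    while (year, month) <= (today.year, today.month):
        names.append(f"{base}_{year:04d}_{month:02d}")
        year, month = (year + 1, 1) if month == 12 else (year, month + 1)
    return names


def caching_policy_command(table: str, hot_days: int) -> str:
    """ADX command that keeps data younger than `hot_days` in the table's hot cache."""
    return f".alter table {table} policy caching hot = {hot_days}d"


for name in monthly_tables_in_window("SecurityEvent", date(2022, 3, 10), 45):
    print(caching_policy_command(name, 45))
```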

Summary

As you have seen throughout this article, you can stream your telemetry data to ADX and use it as a long-term storage option at a lower cost than Sentinel/Log Analytics, while still having the data available for exploration with the same KQL queries that you use in Sentinel.

Please post below if you have any questions or comments.

Thanks to all the reviewers @Sarah_Young , @Jeremy Tan , @Tiander Turpijn , @Inwafula , @Matt_Lowe and special thanks to @Uri Barash and @minniw from the ADX team for the collaboration!
