Protecting your Teams with Azure Sentinel

Azure Sentinel now has an integrated Office 365 connector (https://docs.microsoft.com/en-us/azure/sentinel/connect-office-365). This is the recommended route for collecting these logs and supersedes the collection methods described below.

Updated versions of this blog's queries that work with data collected by the official connector have been shared on the Azure Sentinel GitHub.

Recent events have forced many organizations (including Microsoft) to move to a work-from-home model for their users. To keep those users connected and productive, they are turning to productivity tools such as Microsoft Teams. We have seen an unprecedented spike in Teams usage: Teams now has more than 44 million daily users, a figure that has grown by 12 million in just the last seven days, and those users have generated over 900 million meeting and calling minutes on Teams each day this week. My own team has significantly increased our usage of Teams over the last few weeks, with more virtual meetings, corridor conversations becoming text chats, and virtual social time organized during lunch breaks.

Moving to, or increasing usage of, Teams means that the service should be more of a focus for defenders than ever, given its critical role in communications and data sharing. There are multiple features to help you secure your Teams usage, but in this blog we focus on how to collect Teams activity logs with Azure Sentinel, and how a SOC team can start hunting in that Teams data to protect their organization and users.

Collecting Teams Data

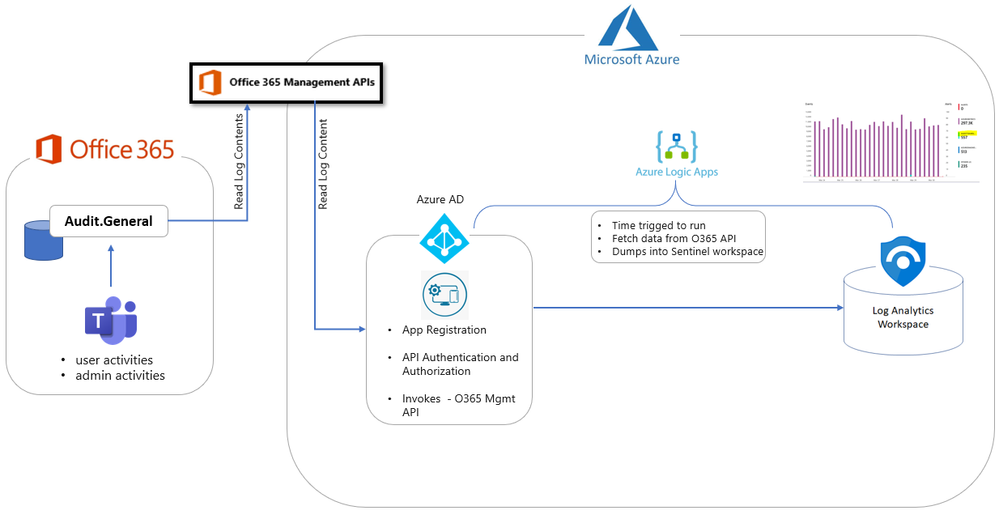

This section explains how to ingest Teams logs into Azure Sentinel via the Office 365 Management Activity API. Because Azure is flexible, there are multiple paths to a solution; this blog outlines two.

The first option uses an Azure Logic App. It is suitable when the requirement is to ingest logs into Sentinel quickly with a couple of clicks, and it is best suited to smaller or test environments. The second option uses an Azure Function App, which is more cost-efficient at large volumes and includes a number of additional features such as extended log storage. This should be considered the primary option for enterprise-scale deployments.

Enabling Audit Logs

Teams activity data is exposed in the Office 365 audit log under the Audit.General content type, and this source is used by both collection methods. By default, audit logs are not collected for Office 365 tenants. However, they contain valuable data on all sorts of Office 365 activity, and I would strongly advise enabling audit logging whether you are using Teams or not. Details on how to enable the Office 365 audit log can be found here.

Once audit logging is enabled you can proceed to deploy your chosen connection method.

Option 1: Azure Logic App

This option uses the components described below and provides a quick and easy way to deploy the connector.

Deployment steps:

Register an App

To handle authentication and authorization when collecting data from the API, we are going to register an Azure AD app and authorize it to access the API. To do this, navigate to the Azure Active Directory blade of your Azure portal and follow the steps below:

- Click ‘App Registrations’.

- Select ‘New Registration’.

- Give it a name and click ‘Register’.

- Click the ‘API Permissions’ blade.

- Click ‘Add a Permission’.

- Click ‘Office 365 Management APIs’.

- Click ‘Application Permissions’.

- Check ‘ActivityFeed.Read’ and click ‘Add permissions’.

- Click ‘Grant admin consent’.

- Click ‘Certificates and Secrets’.

- Click ‘New Client Secret’.

- Enter a description, select ‘Never’, and click ‘Add’.

- IMPORTANT: Click copy next to the new secret and store it somewhere temporarily. You cannot retrieve the secret again once you leave the blade.

- Copy the client ID from the application properties and store it.

- Copy the tenant ID from the main Azure Active Directory blade and store it.

If you get stuck on any of the above steps, more details on how to register your app are available here.

Registering the API subscription

To collect this audit data via the Office 365 Management Activity API, we need to start a subscription to the Audit.General content type. This can be done with PowerShell. The first step is to complete the commands below with details from your tenant and the Azure AD app we registered in the previous step.

# Populate with App ID and Secret from your Azure AD app registration
$ClientID = "<app_id>"
$ClientSecret = "<client_secret>"
$loginURL = "https://login.microsoftonline.com/"
$tenantdomain = "<domain>.onmicrosoft.com"
# Get the tenant GUID from Properties | Directory ID under the Azure Active Directory section
$TenantGUID = "<Tenant GUID>"
$resource = "https://manage.office.com"
# Request an OAuth token using the client credentials grant
$body = @{grant_type="client_credentials";resource=$resource;client_id=$ClientID;client_secret=$ClientSecret}
# $loginURL already ends with "/", so no extra separator is needed before the domain
$oauth = Invoke-RestMethod -Method Post -Uri "$($loginURL)$($tenantdomain)/oauth2/token?api-version=1.0" -Body $body
$headerParams = @{'Authorization'="$($oauth.token_type) $($oauth.access_token)"}
$publisher = New-Guid
# Start a subscription to the Audit.General content type, which includes Teams events
Invoke-WebRequest -Method Post -Headers $headerParams -Uri "https://manage.office.com/api/v1.0/$TenantGUID/activity/feed/subscriptions/start?contentType=Audit.General&PublisherIdentifier=$publisher"

If you have copy-and-paste issues with these commands, you can also find them on GitHub.

Once the values are filled in, you can run the commands in PowerShell. If you get an error message stating that the tenant doesn’t exist, provisioning of audit logging has not yet completed. This can take several hours, so take a break, do something relaxing, and check back later. If you continue to have issues, additional troubleshooting guidance can be found here.
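To make the request shapes explicit outside PowerShell, here is a minimal Python sketch that only builds the two requests the commands above send: the token request and the subscription start. It makes no network calls. It assumes the same Azure AD v1 token endpoint and `resource` value used in the PowerShell, and the helper names are hypothetical, not part of any SDK.

```python
from urllib.parse import urlencode

# Endpoints taken from the PowerShell commands above (Azure AD v1 token endpoint)
LOGIN_URL = "https://login.microsoftonline.com"
RESOURCE = "https://manage.office.com"


def build_token_request(tenant_domain: str, client_id: str, client_secret: str):
    """Hypothetical helper: token URL plus form-encoded client credentials body."""
    url = f"{LOGIN_URL}/{tenant_domain}/oauth2/token?api-version=1.0"
    body = urlencode({
        "grant_type": "client_credentials",
        "resource": RESOURCE,
        "client_id": client_id,
        "client_secret": client_secret,
    })
    return url, body


def authorization_header(token_type: str, access_token: str) -> dict:
    """Same shape as $headerParams: '<token_type> <access_token>'."""
    return {"Authorization": f"{token_type} {access_token}"}


def build_subscription_start_url(tenant_guid: str, publisher_id: str,
                                 content_type: str = "Audit.General") -> str:
    """Hypothetical helper: URL that starts a subscription for one content type."""
    query = urlencode({"contentType": content_type,
                       "PublisherIdentifier": publisher_id})
    return (f"{RESOURCE}/api/v1.0/{tenant_guid}"
            f"/activity/feed/subscriptions/start?{query}")
```

The token request is POSTed as a form body, and the subscription start is POSTed with the `Authorization` header built from the token response. Sending them is left to whichever HTTP client you prefer.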

Deploy a Logic App

The final piece, which collects the data and ingests it into our Azure Sentinel workspace, is a Logic App (referred to as a Playbook in Azure Sentinel). For more background on using Logic Apps to collect from a data source, check out this comprehensive blog from @Ofer_Shezaf.

Our Logic App will run on a set interval, query the Office 365 API for audit data, and then write that data into our Azure Sentinel workspace. Below you can see the components that will go into the Logic App and instructions on how to deploy the Logic App via an ARM template.

To make this simple, we have created a template for you to use. Thanks to @Nicholas DiCola (SECURITY JEDI) for turning it into an ARM template, which makes deployment quick and easy via the Deploy to Azure button on GitHub. When deploying, make sure that you populate the settings with details from your Azure Sentinel workspace and the Azure AD app we configured. Additional details on how to deploy and configure these templates can be found here.

Note that if you run this Logic App when there is no data available for the last 5 minutes, it will fail. If you test it and get a failure at the first HTTP step, check your audit log for events that occurred within the last 5 minutes. The app collects all Audit.General events, so these do not need to be Teams-specific events.
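To see why an empty window matters, it helps to look at how a polling window maps onto the Management Activity API's "list available content" call. The following is a minimal Python sketch, assuming (from the note above) that each run asks for content in the 5 minutes ending at run time. The function name is hypothetical, and this is not the Logic App's actual definition.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlencode

API_ROOT = "https://manage.office.com/api/v1.0"
TIME_FORMAT = "%Y-%m-%dT%H:%M:%S"  # UTC timestamps, no offset suffix


def content_listing_url(tenant_guid: str, now: datetime,
                        window: timedelta = timedelta(minutes=5),
                        content_type: str = "Audit.General") -> str:
    """Hypothetical helper: list-content URL for the window ending at `now`.

    `now` should be timezone-aware so the conversion to UTC is unambiguous.
    """
    if window > timedelta(hours=24):
        # The API rejects startTime/endTime pairs more than 24 hours apart
        raise ValueError("startTime and endTime must be no more than 24 hours apart")
    end = now.astimezone(timezone.utc)
    start = end - window
    query = urlencode({
        "contentType": content_type,
        "startTime": start.strftime(TIME_FORMAT),
        "endTime": end.strftime(TIME_FORMAT),
    })
    return f"{API_ROOT}/{tenant_guid}/activity/feed/subscriptions/content?{query}"
```

In a quiet test tenant, a wider window makes an empty result less likely. The trade-off is that successive runs overlap and need de-duplication.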

This Logic App provides a quick and simple way to start ingesting logs via the Office 365 Management Activity API. However, it may be more efficient and cost-effective to use an Azure Function to achieve the same thing. @Nicholas DiCola (SECURITY JEDI) has already produced an Azure Function to do this; details on the Function and how to use it can be found on GitHub.

Option 2: Azure Function App

This option was created by @Andrea_Piazza, Punit Acharya, and Maitreya Bodola from Microsoft Services and uses a wide range of Azure features to provide a robust and efficient solution.

Details on how to deploy this option can be found on our GitHub site.

Once your chosen connector is running, you should see a custom table called O365API_CL appear in your Azure Sentinel workspace, and logs will start to appear in it. Congratulations, you are now collecting Teams events!

Monitoring Teams

As with most SaaS solutions, identity is a key attack vector for Teams, and it should be protected and monitored. Because Teams uses Azure Active Directory (Azure AD) for authentication, you can collect Azure AD data into Azure Sentinel using the built-in connector and use our detections and hunting queries to monitor for suspicious identity events. But what about Teams-specific threats? There are a number of scenarios that an attacker could attempt to exploit in Teams to gain access to your organization's sensitive data, and these wouldn’t appear in Azure AD logs. Below we look at some of these, along with ideas for how to hunt and monitor for them.

Parsing the Data

Before building detections or hunting queries on the Teams data we have collected, we can use a KQL Function to parse and normalize the data to make it easier to use. For more background on Functions, please read this blog.

The Office 365 Management Activity API data contains a large number of fields that are used by other Office 365 services but not by Teams, so the parser helps us select the subset of fields relevant to Teams. You can find our suggested parser on GitHub, and you can modify it to fit your needs and preferences.

O365API_CL
| where Workload_s =~ "MicrosoftTeams"
| project TimeGenerated,
Workload=Workload_s,
Operation=Operation_s,
TeamName=columnifexists('TeamName_s', ""),
UserId=columnifexists('UserId_s', ""),
// Fall back to the add-on GUID, guarding against that column being absent too
AddOnName=columnifexists('AddOnName_s', tostring(columnifexists('AddOnGuid_g', ""))),
Members=columnifexists('Members_s', ""),
Settings=iif(Operation_s contains "Setting", pack("Name", columnifexists('Name_s', ""), "Old Value", columnifexists('OldValue_s', ""), "New Value", columnifexists('NewValue_s', "")),""),
Details=pack("Id", columnifexists('Id_g', ""), "OrganizationId", columnifexists('OrganizationId_g', ""), "UserType", columnifexists('UserType_d', ""), "UserKey", columnifexists('UserKey_g', ""), "TeamGuid", columnifexists('TeamGuid_s', ""))

For the queries we will look at in the following sections, we are going to save this parser with an alias of TeamsData. Details on configuring and using a Function as a parser can be found in this blog.
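The following minimal Python sketch restates the parser's projection, for readers who want to reason about it outside KQL. There is one caveat. A per-record `dict.get(column, default)` only approximates `columnifexists()`, which checks whether a column exists in the table schema, not in each row. The function name is hypothetical, and `Details` is trimmed to the `TeamGuid` field for brevity.

```python
from typing import Any, Dict, List


def parse_teams_records(rows: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Hypothetical helper: keep Teams rows and project a smaller schema."""
    parsed = []
    for row in rows:
        # Workload_s =~ "MicrosoftTeams" (=~ is case-insensitive in KQL)
        if str(row.get("Workload_s", "")).lower() != "microsoftteams":
            continue
        operation = str(row.get("Operation_s", ""))
        # KQL's `contains` is case-insensitive, so compare in lower case
        is_setting = "setting" in operation.lower()
        parsed.append({
            "TimeGenerated": row.get("TimeGenerated"),
            "Workload": row.get("Workload_s"),
            "Operation": operation,
            "TeamName": row.get("TeamName_s", ""),
            "UserId": row.get("UserId_s", ""),
            "AddOnName": row.get("AddOnName_s", row.get("AddOnGuid_g", "")),
            "Members": row.get("Members_s", ""),
            "Settings": ({"Name": row.get("Name_s", ""),
                          "Old Value": row.get("OldValue_s", ""),
                          "New Value": row.get("NewValue_s", "")}
                         if is_setting else ""),
            # Trimmed version of the Details bag
            "Details": {"TeamGuid": row.get("TeamGuid_s", "")},
        })
    return parsed
```

Projecting once into a fixed schema is what lets every query below refer to `Operation`, `Members`, or `Details.TeamGuid` without repeating the `_s`/`_g` suffix handling.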

Hunting Queries

The following queries are designed to help you find suspicious activity in your Teams environment. Many are likely to return legitimate activity as well as potentially malicious activity, but they can be useful in guiding your hunting. If, after running these queries, you are confident in the results, you could consider turning some or all of them into Azure Sentinel Analytics rules to alert on. We have included entity mapping lines at the end of each query that you can use if you choose to turn them into Analytics rules.

External users from anomalous organizations

Mitre ATT&CK technique T1136

One potential threat vector for Teams is the ability to add external contributors to your Teams environment. Whilst this feature provides vital collaboration capabilities with external organizations it also presents a means by which a malicious actor could gain access. Organizations will often collaborate closely with a small number of key partners and it is likely that many of the external users in Teams will be from these organizations. Therefore, we can look for potentially suspicious external users by looking at external users added to Teams who come from organizations we have not observed before.

// If you have more than 14 days' worth of Teams data, change this value
let data_date = 14d;
// If you want to look at users further back than the last day, change this value
let lookback_data = 1d;
let known_orgs = (
TeamsData
// Build the baseline from the period before the lookback window, so newly seen organizations are not already "known"
| where TimeGenerated between (ago(data_date) .. ago(lookback_data))
| where Operation =~ "MemberAdded" or Operation =~ "TeamsSessionStarted"
// Extract the correct UPN and parse our external organization domain
| extend UPN = iif(Operation =~ "MemberAdded", tostring(parse_json(Members)[0].UPN), UserId)
| extend Organization = tostring(split(split(UPN, "_")[1], "#")[0])
| where isnotempty(Organization)
| summarize by Organization);
TeamsData
| where TimeGenerated > ago(lookback_data)
| where Operation =~ "MemberAdded"
| extend UPN = tostring(parse_json(Members)[0].UPN)
| extend Organization = tostring(split(split(UPN, "_")[1], "#")[0])
| where isnotempty(Organization)
| where Organization !in (known_orgs)
// Uncomment the following line to map query entities if you plan to use this as a detection query
//| extend timestamp = TimeGenerated, AccountCustomEntity = UPN
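The domain extraction in the query above relies on the guest UPN format, which typically looks like `alice_contoso.com#EXT#@yourtenant.onmicrosoft.com`. The following minimal Python sketch mirrors `split(split(UPN, "_")[1], "#")[0]` and the "never seen before" comparison. The function names are hypothetical. The baseline UPNs should come from a period that ends where the recent window begins. Otherwise a new organization already counts as known.

```python
from typing import Iterable, Set


def organization_from_upn(upn: str) -> str:
    """Mirror split(split(UPN, "_")[1], "#")[0] from the KQL query.

    Returns "" when the UPN has no '_' (for example most internal users),
    matching the KQL, where the out-of-range index yields an empty string.
    """
    parts = upn.split("_")
    if len(parts) < 2:
        return ""
    return parts[1].split("#")[0]


def new_external_orgs(baseline_upns: Iterable[str],
                      recent_upns: Iterable[str]) -> Set[str]:
    """Organizations seen in the recent window but not in the baseline."""
    known = {organization_from_upn(u) for u in baseline_upns} - {""}
    recent = {organization_from_upn(u) for u in recent_upns} - {""}
    return recent - known
```

Like the KQL, this takes the segment after the first underscore. A guest whose own user name contains `_` will therefore yield the wrong segment, so treat odd-looking organizations with care.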

External users added then removed

Mitre ATT&CK technique T1136

Attackers with some level of existing access might also look to add an external account to Teams in order to access or exfiltrate data before removing that user to hide the access. We can look for external accounts that are added to Teams then quickly removed to see if we can identify such behavior.

// If you want to look at users added more than 7 days ago, adjust this value
let time_ago = 7d;
// If you want to change how quickly accounts need to be added and then removed, change this value
let time_delta = 1h;
TeamsData
| where TimeGenerated > ago(time_ago)
| where Operation =~ "MemberAdded"
| extend UPN = tostring(parse_json(Members)[0].UPN)
// Only external (guest) accounts
| where UPN contains "#EXT#"
| project TimeAdded=TimeGenerated, Operation, UPN, UserWhoAdded = UserId, TeamName, TeamGuid = tostring(Details.TeamGuid)
| join kind=inner (
TeamsData
| where TimeGenerated > ago(time_ago)
| where Operation =~ "MemberRemoved"
| extend UPN = tostring(parse_json(Members)[0].UPN)
| project TimeDeleted=TimeGenerated, Operation, UPN, UserWhoDeleted = UserId, TeamName, TeamGuid = tostring(Details.TeamGuid)) on UPN, TeamGuid
// The removal must follow the addition, within time_delta
| where TimeDeleted > TimeAdded and TimeDeleted < (TimeAdded + time_delta)
| project TimeAdded, TimeDeleted, UPN, UserWhoAdded, UserWhoDeleted, TeamName, TeamGuid
// Uncomment the following line to map query entities if you plan to use this as a detection query
//| extend timestamp = TimeAdded, AccountCustomEntity = UPN
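The core of the query above is a join on (UPN, Team) followed by a time condition. The following minimal Python sketch shows the same pairing logic. The `MembershipEvent` type and function name are hypothetical stand-ins for the projected query columns. The sketch also requires the removal to come strictly after the addition, so that an earlier removal followed by a re-add is not reported.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class MembershipEvent:
    time: datetime
    upn: str
    team_guid: str
    actor: str  # UserId of whoever added or removed the member


def added_then_removed(added: List[MembershipEvent],
                       removed: List[MembershipEvent],
                       time_delta: timedelta = timedelta(hours=1)
                       ) -> List[Tuple[MembershipEvent, MembershipEvent]]:
    # Index removals by (UPN, Team), mirroring the join on UPN and TeamGuid
    removals: Dict[Tuple[str, str], List[MembershipEvent]] = defaultdict(list)
    for r in removed:
        removals[(r.upn, r.team_guid)].append(r)
    hits = []
    for a in added:
        for r in removals[(a.upn, a.team_guid)]:
            # Removal must come after the addition and within time_delta
            if a.time < r.time < a.time + time_delta:
                hits.append((a, r))
    return hits
```

Indexing the removals first keeps the cost close to linear in the number of events, rather than comparing every addition with every removal.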

New bot or application added

Mitre ATT&CK techniques T1176, T1119

Teams has the ability to include apps or bots within a Team to extend the native feature set. Whilst many of these are included by default there is also the option to include custom bots and apps in a Team. An attacker could use such an app to establish persistence in Teams without a user account, or to access files or other data shared on Teams. We can hunt for new app or bot additions that have not been added to any Team within our organization before.

// If you have more than 14 days' worth of Teams data, change this value
let data_date = 14d;
let historical_bots = (
TeamsData
// Baseline: add-ons seen before the last day
| where TimeGenerated between (ago(data_date) .. ago(1d))
| where isnotempty(AddOnName)
| summarize by AddOnName);
TeamsData
| where TimeGenerated > ago(1d)
| where isnotempty(AddOnName)
// Look for add-ons we have never seen before
| where AddOnName !in (historical_bots)
// Uncomment the following line to map query entities if you plan to use this as a detection query
//| extend timestamp = TimeGenerated, AccountCustomEntity = UserId
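At its heart, the query above is a set difference between a historical baseline of add-on names and the last day's add-on names. The following minimal Python sketch makes that explicit. The function name is hypothetical. Empty names are skipped on both sides, because most Teams records carry no add-on at all.

```python
from typing import Iterable, List


def new_add_ons(baseline_names: Iterable[str],
                recent_names: Iterable[str]) -> List[str]:
    """Add-on names in the recent window that never appear in the baseline."""
    seen = {n for n in baseline_names if n}
    result: List[str] = []
    for name in recent_names:
        # Skip empty names, known add-ons, and duplicates (keep first-seen order)
        if name and name not in seen and name not in result:
            result.append(name)
    return result
```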

User made Owner of multiple Teams

Mitre ATT&CK technique T1078

Commonly within an organization, users will set up Teams as needed for specific projects or topics and will own the Teams they create. Most organizations will have different Owners for each Team, and one user will rarely be an Owner of more than a small number of Teams. An attacker seeking to elevate privileges may try to make themselves Owner of a large number of Teams, so we can monitor for a single user being made an Owner of many Teams.

// Adjust this value to change how many Teams a user is made Owner of before detecting
let max_owner_count = 3;
// Change this value to adjust how large a timeframe the query runs over
let time_window = 1d;
let high_owner_count = (TeamsData
| where TimeGenerated > ago(time_window)
| where Operation =~ "MemberRoleChanged"
| extend Member = tostring(parse_json(Members)[0].UPN)
| extend NewRole = toint(parse_json(Members)[0].Role)
// NewRole 2 corresponds to Owner
| where NewRole == 2
| summarize dcount(TeamName) by Member
| where dcount_TeamName > max_owner_count
| project Member);
TeamsData
| where TimeGenerated > ago(time_window)
| where Operation =~ "MemberRoleChanged"
| extend Member = tostring(parse_json(Members)[0].UPN)
| extend NewRole = toint(parse_json(Members)[0].Role)
| where NewRole == 2
| where Member in (high_owner_count)
| extend TeamGuid = tostring(Details.TeamGuid)
// Uncomment the following line to map query entities if you plan to use this as a detection query
//| extend timestamp = TimeGenerated, AccountCustomEntity = Member
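Note that the query above counts distinct Teams (`dcount`), not role-change events, so promoting the same user twice in one Team counts once. The following minimal Python sketch shows that counting, assuming each event is a (TeamName, Members JSON) pair shaped like the `Members` field the query parses. The function name is hypothetical, and `OWNER_ROLE = 2` is simply the value the queries filter on.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

OWNER_ROLE = 2  # the Role value the queries above treat as Owner


def users_made_owner_of_many_teams(events: Iterable[Tuple[str, str]],
                                   max_owner_count: int = 3) -> Set[str]:
    """events: (team_name, members_json) pairs from MemberRoleChanged records."""
    teams_by_member: Dict[str, Set[str]] = defaultdict(set)
    for team_name, members_json in events:
        # Same as parse_json(Members)[0] in the query
        first = json.loads(members_json)[0]
        if int(first.get("Role", -1)) == OWNER_ROLE:
            teams_by_member[first.get("UPN", "")].add(team_name)
    # Strictly greater than the threshold, like `dcount_TeamName > max_owner_count`
    return {m for m, teams in teams_by_member.items() if len(teams) > max_owner_count}
```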

Multiple Teams deleted by a single user

Mitre ATT&CK techniques T1485, T1489

As with ownership, deleting a Team is usually carried out by individual Owners rather than by a single central user. Given that Teams are often used for critical services such as incident management, an attacker looking to cause disruption could seek to delete multiple Teams. We can monitor for a single user deleting multiple Teams to detect such activity and identify the user responsible.

// Adjust this value to change how many Teams must be deleted before a user is included
let max_delete = 3;
// Adjust this value to change the time window the query runs over
let time_window = 1d;
let deleting_users = (
TeamsData
| where TimeGenerated > ago(time_window)
| where Operation =~ "TeamDeleted"
| summarize count() by UserId
| where count_ > max_delete
| project UserId);
TeamsData
| where TimeGenerated > ago(time_window)
| where Operation =~ "TeamDeleted"
| where UserId in (deleting_users)
| extend TeamGuid = tostring(Details.TeamGuid)
| project-away AddOnName, Members, Settings
// Uncomment the following line to map query entities if you plan to use this as a detection query
//| extend timestamp = TimeGenerated, AccountCustomEntity = UserId
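Unlike the Owner query, this one uses `count()`, so it counts TeamDeleted events per user rather than distinct Teams. The following minimal Python sketch shows the same thresholding. The function name is hypothetical, and events are assumed to be simple (Operation, UserId) pairs.

```python
from collections import Counter
from typing import Iterable, Set, Tuple


def bulk_team_deleters(events: Iterable[Tuple[str, str]],
                       max_delete: int = 3) -> Set[str]:
    """events: (operation, user_id) pairs; flags users with > max_delete deletions."""
    # Operation =~ "TeamDeleted" is case-insensitive in KQL
    counts = Counter(user for op, user in events if op.lower() == "teamdeleted")
    return {user for user, n in counts.items() if n > max_delete}
```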

Other Hunting Opportunities

Once you have run these queries, you can expand your hunting by combining them with other data sources such as Azure Active Directory or activity on other Office 365 workloads. For example, you can combine our detection for suspicious patterns of Azure Portal sign-ins in Azure AD SigninLogs with Teams data, and look for users flagged by that detection who are then made an owner of a Team:

let timeRange = 1d;
let lookBack = 7d;
let threshold_Failed = 5;
let threshold_FailedwithSingleIP = 20;
let threshold_IPAddressCount = 2;
let isGUID = "[0-9a-z]{8}-[0-9a-z]{4}-[0-9a-z]{4}-[0-9a-z]{4}-[0-9a-z]{12}";
let azPortalSignins = SigninLogs
| where TimeGenerated >= ago(timeRange)
// Azure Portal only and exclude non-failure Result Types
| where AppDisplayName has "Azure Portal" and ResultType !in ("0", "50125", "50140")
// Tagging identities not resolved to friendly names
| extend Unresolved = iff(Identity matches regex isGUID, true, false);
// Look up resolved identities from the last 7 days
let identityLookup = SigninLogs
| where TimeGenerated >= ago(lookBack)
| where not(Identity matches regex isGUID)
| summarize by UserId, lu_UserDisplayName = UserDisplayName, lu_UserPrincipalName = UserPrincipalName;
// Join resolved names to unresolved list from portal signins
let unresolvedNames = azPortalSignins | where Unresolved == true | join kind= inner (
identityLookup ) on UserId
| extend UserDisplayName = lu_UserDisplayName, UserPrincipalName = lu_UserPrincipalName
| project-away lu_UserDisplayName, lu_UserPrincipalName;
// Join Signins that had resolved names with list of unresolved that now have a resolved name
let u_azPortalSignins = azPortalSignins | where Unresolved == false | union unresolvedNames;
let failed_signins = (u_azPortalSignins
| extend Status = strcat(ResultType, ": ", ResultDescription), OS = tostring(DeviceDetail.operatingSystem), Browser = tostring(DeviceDetail.browser)
| extend FullLocation = strcat(Location,'|', LocationDetails.state, '|', LocationDetails.city)
| summarize TimeGenerated = make_list(TimeGenerated), Status = make_list(Status), IPAddresses = make_list(IPAddress), IPAddressCount = dcount(IPAddress), FailedLogonCount = count()
by UserPrincipalName, UserId, UserDisplayName, AppDisplayName, Browser, OS, FullLocation
| mv-expand TimeGenerated, IPAddresses, Status
| extend TimeGenerated = todatetime(tostring(TimeGenerated)), IPAddress = tostring(IPAddresses), Status = tostring(Status)
| project-away IPAddresses
| summarize StartTime = min(TimeGenerated), EndTime = max(TimeGenerated) by UserPrincipalName, UserId, UserDisplayName, Status, FailedLogonCount, IPAddress, IPAddressCount, AppDisplayName, Browser, OS, FullLocation
| where (IPAddressCount >= threshold_IPAddressCount and FailedLogonCount >= threshold_Failed) or FailedLogonCount >= threshold_FailedwithSingleIP
| project UserPrincipalName);
TeamsData
// Use the same window as the sign-in analysis (time_window is not defined in this query)
| where TimeGenerated > ago(timeRange)
| where Operation =~ "MemberRoleChanged"
| extend Member = tostring(parse_json(Members)[0].UPN)
| extend NewRole = toint(parse_json(Members)[0].Role)
| where NewRole == 2
| where Member in (failed_signins)
| extend TeamGuid = tostring(Details.TeamGuid)
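The decision at the end of `failed_signins` is a two-part threshold: many failures from several IPs, or a very large number of failures overall. The final step then intersects the flagged users with users newly made Owner. The following minimal Python sketch shows just that logic. It simplifies the KQL's grouping (which also splits by app, browser, OS, and location) to one (failure count, distinct IP count) pair per UPN. Both function names are hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple


def flag_failed_signin_users(stats: Dict[str, Tuple[int, int]],
                             threshold_failed: int = 5,
                             threshold_failed_single_ip: int = 20,
                             threshold_ip_count: int = 2) -> Set[str]:
    """stats maps UPN -> (failed_logon_count, distinct_ip_count)."""
    return {
        upn for upn, (failed, ip_count) in stats.items()
        if (ip_count >= threshold_ip_count and failed >= threshold_failed)
        or failed >= threshold_failed_single_ip
    }


def suspicious_new_owners(stats: Dict[str, Tuple[int, int]],
                          new_owner_upns: Iterable[str]) -> Set[str]:
    """Users flagged for failed sign-ins who were also made a Team Owner."""
    return flag_failed_signin_users(stats) & set(new_owner_upns)
```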

In addition, you can make the SigninLogs detections specific to Teams by adding a filter for Teams-based sign-ins only:

| where AppDisplayName startswith "Microsoft Teams"

For example, this is our ‘Successful logon from IP and failure from a different IP’ query scoped to Teams sign-ins only:

let timeFrame = 1d;
let logonDiff = 10m;
SigninLogs
| where TimeGenerated >= ago(timeFrame)
| where ResultType == "0"
| where AppDisplayName startswith "Microsoft Teams"
// The trailing "." stops a block such as "10.1." from also matching 10.12.x.x
| project SuccessLogonTime = TimeGenerated, UserPrincipalName, SuccessIPAddress = IPAddress, AppDisplayName, SuccessIPBlock = strcat(split(IPAddress, ".")[0], ".", split(IPAddress, ".")[1], ".")
| join kind= inner (
SigninLogs
| where TimeGenerated >= ago(timeFrame)
| where ResultType !in ("0", "50140")
| where ResultDescription !~ "Other"
| where AppDisplayName startswith "Microsoft Teams"
| project FailedLogonTime = TimeGenerated, UserPrincipalName, FailedIPAddress = IPAddress, AppDisplayName, ResultType, ResultDescription
) on UserPrincipalName, AppDisplayName
| where SuccessLogonTime < FailedLogonTime and FailedLogonTime - SuccessLogonTime <= logonDiff and FailedIPAddress !startswith SuccessIPBlock
| summarize FailedLogonTime = max(FailedLogonTime), SuccessLogonTime = max(SuccessLogonTime) by UserPrincipalName, SuccessIPAddress, AppDisplayName, FailedIPAddress, ResultType, ResultDescription
| extend timestamp = SuccessLogonTime, AccountCustomEntity = UserPrincipalName, IPCustomEntity = SuccessIPAddress
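The query above looks for a successful logon followed, within 10 minutes, by a failure from an address outside the first two octets of the successful IP. The following minimal Python sketch shows the same pairing rule. It simplifies the join to (time, UPN, IP) tuples, omitting AppDisplayName, and the function name is hypothetical. Comparing octet lists, rather than using a raw string prefix, avoids treating `10.1.x.x` and `10.12.x.x` as the same block. A non-IPv4 address simply compares as a whole.

```python
from datetime import datetime, timedelta
from typing import List, Set, Tuple

Logon = Tuple[datetime, str, str]  # (time, user principal name, IP address)


def success_then_failure_elsewhere(successes: List[Logon], failures: List[Logon],
                                   logon_diff: timedelta = timedelta(minutes=10)
                                   ) -> Set[Tuple[str, str, str]]:
    """Return (upn, success_ip, failed_ip) for failures shortly after a success."""
    hits = set()
    for s_time, s_upn, s_ip in successes:
        for f_time, f_upn, f_ip in failures:
            same_user = s_upn == f_upn
            # Failure strictly after the success and no more than logon_diff later
            in_window = s_time < f_time <= s_time + logon_diff
            # First two IPv4 octets differ (the query's "IP block")
            other_block = f_ip.split(".")[:2] != s_ip.split(".")[:2]
            if same_user and in_window and other_block:
                hits.add((s_upn, s_ip, f_ip))
    return hits
```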

The Teams hunting queries detailed in this blog have been shared on the Azure Sentinel GitHub, along with the parser and Logic App. We will continue to develop detections and hunting queries for Teams data over time, so keep an eye on GitHub. As always, if you have your own ideas for queries or detections, please feel free to contribute to the Azure Sentinel community.
